Natural Phonology and Sound Change


Recommended publications

4. R-Influence on Vowels

Before you study this chapter, check whether you are familiar with the following terms: allophone, centring diphthong, complementary distribution, diphthong, distribution, foreignism, fricative, full vowel, GA, hiatus, homophone, Intrusive-R, labial, lax, letter-to-sound rule, Linking-R, low-starting diphthong, minimal pair, monophthong, morpheme, nasal, non-productive suffix, non-rhotic accent, phoneme, productive suffix, rhotic accent, R-dropping, RP, tense, triphthong.

This chapter mainly focuses on the behaviour of full vowels before an /r/, the phonological and letter-to-sound rules related to this behaviour, and some further phenomena concerning vowels. As demonstrated in Chapter 2, the two main accent types of English, rhotic and non-rhotic, are most easily distinguished by whether an /r/ is pronounced in all positions or not. In General American, a rhotic accent, all /r/'s are pronounced, while in Received Pronunciation, a non-rhotic variant, only prevocalic ones are. Besides this, these and other dialects may also be distinguished by the behaviour of stressed vowels before an /r/, briefly mentioned in the previous chapter. To remind the reader of the most important vowel classes that will be referred to, we repeat one of the tables from Chapter 3 for convenience.

[Table: tense and lax vowels, with one row for monophthongs and one for diphthongs and triphthongs; the vowel symbols did not survive extraction.]

Recall that we have come up with a few generalizations in Chapter 3, namely that all short vowels are lax, all diphthongs and triphthongs are tense, and non-high long monophthongs are lax, except for //, which behaves in an ambiguous way: sometimes it is tense, in other cases it is lax.

Phonetic and Phonological Systems Analysis (PPSA) User Notes For

Phonetic and Phonological Systems Analysis (PPSA): User Notes for English Systems. Sally Bates (University of St Mark and St John) and Jocelynne Watson (Queen Margaret University, Edinburgh).

“A fully comprehensive analysis is not required for every child. A systematic, principled analysis is, however, necessary in all cases since it forms an integral part of the clinical decision-making process.” Bates & Watson (2012, p. 105)

© Sally Bates & Jocelynne Watson (Authors), QMU & UCP Marjon. Phonetic and Phonological Systems Analysis (PPSA) is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 Unported License.

Table of Contents: Phonetic and Phonological Systems Analysis (PPSA) Introduction; PPSA Child 1 Completed PPSA; Using the PPSA (Page 1) Singleton Consonants and Word Structure (PI (Phonetic Inventory), Target, Correct Realisation, Errored Realisation and Deletion, Other Errors); Using the PPSA (Page 2) Consonant Clusters (WI Clusters, WM Clusters, WF Clusters); Using the PPSA (Page 3) Vowels; Using the PPSA (Page 2) Error Pattern Summary; Child 1 Interpretation; Child 5 Data Sample; Child 5 Completed PPSA; Child 5 Interpretation; Advantages of the PPSA – why we like this approach; What the PPSA doesn’t do; References; Key points of the Creative Commons License operating with this PPSA Resource.

N.B. We recommend that the reader has a blank copy of the 3 page PPSA to follow as they go through this guide. This is also available as a free download (PPSA Charting and Summary Form) under the same Creative Commons license conditions.

Lenition and Optimality Theory

(Proceedings of LSRL XXIV, Feb. 1994) Haike Jacobs, French Department, Nijmegen University/Free University Amsterdam

1. Introduction

Since Kiparsky (1968), generative historical phonology has relied primarily on the following means in accounting for sound change: rule addition, rule simplification, rule loss and rule reordering. Given that the phonological rule as such no longer exists in the recently proposed framework of Optimality Theory (cf. Prince and Smolensky 1993), the question arises how sound change can be accounted for in this theory. Prince and Smolensky (1993) contains mainly applications of the theory to non-segmental phonology (that is, stress and syllable structure), whereas segmental phonology is only briefly touched upon (the segmental inventory of Yidiny). In this paper, we will present and discuss an account of consonantal weakening processes within the framework of Optimality Theory. We will concentrate on lenition in the historical phonology of French, but take into account synchronic allophonic lenition processes as well. This paper purports to demonstrate not only that segmental phonology can straightforwardly be dealt with in Optimality Theory, but, moreover, that an optimality-based account of lenition is not thwarted by the drawbacks of previous proposals.

This paper is organized as follows. In section 2, we will present the main facts of lenition in the historical phonology of French. After that, section 3 briefly discusses previous analyses of this phenomenon, mainly concentrating on their problematic aspects. Section 4 considers the possibilities of accounting for lenition in Optimality Theory. Finally, in section 5 the main results of the present paper are summarized.

University of Victoria

WPLC Vol. 1 No. 1 (1981), 1-17. An Assimilation Process in Altamurano and Other Apulian Dialects: an Argument for Labio-velars. Terry B. Cox, University of Victoria

1.0 INTRODUCTION AND PLAN

In a cursory examination of data gathered during a recent field trip to the central and southern regions of Apulia in southeast Italy, I was struck by a seeming complementarity of contexts for two superficially distinct phonological processes: a ue diphthong reduces to e after certain consonants; an insertion of u takes place after certain other consonants. On closer scrutiny, it was ascertained that the complementarity of contexts for the processes in the dialects taken as a whole was more illusory than real: some dialects had ue reduction and a restricted type of u insertion; others had ue reduction and no u insertion; still others had sporadic instances of u insertion and no ue reduction. In Altamurano, however, the contexts for the two processes were fully complementary.

In this paper I shall attempt to demonstrate that these two processes can best be understood when seen as two parts of a single diachronic process of consonant labialization, subject to a single surface phonetic condition that permitted u insertion after certain consonants and not only blocked it after certain other consonants, but also eliminated the glide of ue after these same consonants. I shall also show that Altamurano alone holds the key to this solution as it is the only dialect where both processes reached

Footnote 1: For expositional purposes I use ue as a general transcription for a diphthong which in some dialects is realised phonetically as [WE] and in others has the allophones [we] and [we], as seen below.

The Phonetics-Phonology Interface in Romance Languages José Ignacio Hualde, Ioana Chitoran

Surface sound and underlying structure: The phonetics-phonology interface in Romance languages. José Ignacio Hualde, Ioana Chitoran

To cite this version: José Ignacio Hualde, Ioana Chitoran. Surface sound and underlying structure: The phonetics-phonology interface in Romance languages. S. Fischer and C. Gabriel. Manual of grammatical interfaces in Romance, 10, Mouton de Gruyter, pp.23-40, 2016, Manuals of Romance Linguistics, 978-3-11-031186-0. hal-01226122

HAL Id: hal-01226122
https://hal-univ-paris.archives-ouvertes.fr/hal-01226122
Submitted on 24 Dec 2016

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Manual of Grammatical Interfaces in Romance. MRL 10. Brought to you by | Université de Paris Mathematiques-Recherche Authenticated | [email protected] Download Date | 11/1/16 3:56 PM

Manuals of Romance Linguistics / Manuels de linguistique romane / Manuali di linguistica romanza / Manuales de lingüística románica. Edited by Günter Holtus and Fernando Sánchez Miret. Volume 10.

Manual of Grammatical Interfaces in Romance. Edited by Susann Fischer and Christoph Gabriel.

ISBN 978-3-11-031178-5; e-ISBN (PDF) 978-3-11-031186-0; e-ISBN (EPUB) 978-3-11-039483-2. Library of Congress Cataloging-in-Publication Data: A CIP catalog record for this book has been applied for at the Library of Congress.

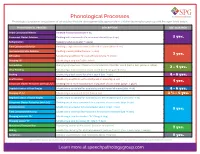

Phonological Processes

Phonological processes are patterns of articulation that are developmentally appropriate in children learning to speak up until the ages listed below.

| Phonological process | Description | Age acquired |
| --- | --- | --- |
| Initial Consonant Deletion | Omitting first consonant (hat → at) | |
| Consonant Cluster Deletion | Omitting both consonants of a consonant cluster (stop → op) | 2 yrs. |
| Reduplication | Repeating syllables (water → wawa) | |
| Final Consonant Deletion | Omitting a singleton consonant at the end of a word (nose → no) | |
| Unstressed Syllable Deletion | Omitting a weak syllable (banana → nana) | 3 yrs. |
| Affrication | Substituting an affricate for a nonaffricate (sheep → cheep) | |
| Stopping /f/ | Substituting a stop for /f/ (fish → tish) | |
| Assimilation | Changing a phoneme so it takes on a characteristic of another sound (bed → beb, yellow → lellow) | 3 - 4 yrs. |
| Velar Fronting | Substituting a front sound for a back sound (cat → tat, gum → dum) | |
| Backing | Substituting a back sound for a front sound (tap → cap) | 4 - 5 yrs. |
| Deaffrication | Substituting an affricate with a continuant or stop (chip → sip) | 4 yrs. |
| Consonant Cluster Reduction (without /s/) | Omitting one or more consonants in a sequence of consonants (grape → gape) | |
| Depalatalization of Final Singles | Substituting a nonpalatal for a palatal sound at the end of a word (dish → dit) | 4 - 6 yrs. |
| Stopping of /s/ | Substituting a stop sound for /s/ (sap → tap) | 3 ½ - 5 yrs. |
| Depalatalization of Initial Singles | Substituting a nonpalatal for a palatal sound at the beginning of a word (shy → ty) | |
| Consonant Cluster Reduction (with /s/) | Omitting one or more consonants in a sequence of consonants (step → tep) | |
| Alveolarization | Substituting an alveolar for a nonalveolar sound (chew → too) | 5 yrs. |

The Phonology, Phonetics, and Diachrony of Sturtevant's

Indo-European Linguistics 7 (2019) 241–307, brill.com/ieul. The phonology, phonetics, and diachrony of Sturtevant’s Law. Anthony D. Yates, University of California, Los Angeles

Abstract: This paper presents a systematic reassessment of Sturtevant’s Law (Sturtevant 1932), which governs the differing outcomes of Proto-Indo-European voiced and voiceless obstruents in Hittite (Anatolian). I argue that Sturtevant’s Law was a conditioned pre-Hittite sound change whereby (i) contrastively voiceless word-medial obstruents regularly underwent gemination (cf. Melchert 1994), but gemination was blocked for stops in pre-stop position; and (ii) the inherited [±voice] contrast was then lost, replaced by the [±long] opposition observed in Hittite (cf. Blevins 2004). I provide empirical and typological support for this novel restriction, which is shown not only to account straightforwardly for data that is problematic under previous analyses, but also to be phonetically motivated, a natural consequence of the poorly cued durational contrast between voiceless and voiced stops in pre-stop environments. I develop an optimality-theoretic analysis of this gemination pattern in pre-Hittite, and discuss how this grammar gave rise to synchronic Hittite via “transphonologization” (Hyman 1976, 2013). Finally, it is argued that this analysis supports deriving the Hittite stop system from the Proto-Indo-European system as traditionally reconstructed with an opposition between voiceless, voiced, and breathy voiced stops (contra Kloekhorst 2016, Jäntti 2017).

Keywords: Hittite – Indo-European – diachronic phonology – language change – phonological typology

© Anthony D. Yates, 2019 | doi:10.1163/22125892-00701006. This is an open access article distributed under the terms of the CC-BY-NC 4.0 License.

Labialized Consonants in Iraqw Alain Ghio, Maarten Mous and Didier Demolin

Labialized consonants in Iraqw. Alain Ghio (1), Maarten Mous (2) and Didier Demolin (3). (1) Aix-Marseille Univ. & CNRS UMR7309 – LPL; (2) Universiteit Leiden; (3) Laboratoire de phonétique et phonologie, CNRS-UMR 7018, Sorbonne nouvelle

Iraqw, a Cushitic language spoken in Tanzania, has a set of labialized consonants /ŋʷ, kʷ, gʷ, qʷʼ, xʷ/ in its phonological inventory (Mous, 1993). This study compares the labial movements involved in the production of these consonants with the gestures of the bilabial nasal [m] and the labiovelar approximant [w]. Data were recorded combining acoustic, EGG and video data. The video data were taken using simultaneous front and profile images, first at normal speed (25 fps) and then at high speed (300 fps). Data were recorded with 4 women and 5 men.

Results show that the labialized consonants [ŋʷ, kʷ, gʷ, qʷʼ, xʷ] are produced with a gesture different from that of the bilabial nasal and the labiovelar approximant, both of which involve some lip rounding and protrusion. The labialized consonants show a slow vertical opening movement of the lips with a very slight inter-lip rounding. Figure 1 compares lip positions immediately after the release of the different closures for [kʷ] and [qʷʼ] and in the middle of the gesture of [m] and [w].

Figure 1. Front and profile lip positions shortly after the closure release of [kʷ] and [qʷʼ], showing a small inter-lip rounding for [qʷʼ]. [w] and [m] both show lip rounding and protrusion.

High-speed video confirms 2 different types of labial gestures. These findings support a claim made by Ladefoged & Maddieson (1996), who suggested 2 possible types of labial gestures involved in the production of labial consonants.

Lecture 5 Sound Change

An articulatory theory of sound change

Hypothesis: The most common initial motivation for sound change is the automation of production. Tokens reduced online are perceived as reduced and represented in the exemplar cluster as reduced. Therefore we expect sound changes to reflect a decrease in gestural magnitude and an increase in gestural overlap.

What are some ways to test the articulatory model? The theory makes predictions about what is a possible sound change. These predictions could be tested on a cross-linguistic database. Sound changes that take place in the languages of the world are very similar (Blevins 2004, Bateman 2000, Hajek 1997, Greenberg et al. 1978). We should consider both common and rare changes and try to explain both. Common and rare changes might have different characteristics. Among the properties we could look for are types of phonetic motivation, types of lexical diffusion, gradualness, conditioning environment and resulting segments.

Common vs. rare sound change? We need a database that allows us to test hypotheses concerning what types of changes are common and what types are not.

A database of sound changes? Most sound changes have occurred in undocumented periods so that we have no record of them. Even in cases with written records, the phonetic interpretation may be unclear. Only a small number of languages have historic records. So any sample of known sound changes would be biased towards those languages. Sound changes are known only for some languages of the world: languages with written histories. Sound changes can be reconstructed by comparing related languages.

Use of Theses

Theses, SIS/Library, R.G. Menzies Library Building No. 2, The Australian National University, Canberra ACT 0200, Australia. Telephone: +61 2 6125 4631. Facsimile: +61 2 6125 4063. Email: [email protected]

USE OF THESES: This copy is supplied for purposes of private study and research only. Passages from the thesis may not be copied or closely paraphrased without the written consent of the author.

Language in a Fijian Village: An Ethnolinguistic Study. Annette Schmidt. A thesis submitted for the degree of Doctor of Philosophy of the Australian National University. September 1988

ABSTRACT

This thesis investigates sociolinguistic variation in the Fijian village of Waitabu. The aim is to investigate how particular uses, functions and varieties of language relate to social patterns and modes of interaction. The investigation focuses on the various ways of speaking which characterise the Waitabu repertoire, and attempts to explicate basic sociolinguistic principles and norms for contextually appropriate behaviour. The general purpose is to explicate what the outsider needs to know to communicate appropriately in the Waitabu community.

Chapter 1 discusses relevant literature and the theoretical perspective of the thesis. I also detail the fieldwork setting, problems and restrictions, and thesis plan. Chapter 2 provides the necessary background information to this study, describing the geographical, demographical and sociohistorical setting. A description is given of the contemporary language situation, the structure of Fijian (Bouma dialect), and Waitabu social structure and organisation. In Chapter 3, the kinship system which lies at the heart of Waitabu social organisation, and kin-based sociolinguistic roles, are analysed. This chapter gives a detailed description of the kin categories and the established modes of sociolinguistic behaviour which are associated with various kin-based social identities.

Is Phonological Consonant Epenthesis Possible? a Series of Artificial Grammar Learning Experiments

Rebecca L. Morley

Abstract: Consonant epenthesis is typically assumed to be part of the basic repertoire of phonological grammars. This implies that there exists some set of linguistic data that entails epenthesis as the best analysis. However, a series of artificial grammar learning experiments found no evidence that learners ever selected an epenthesis analysis. Instead, phonetic and morphological biases were revealed, along with individual variation in how learners generalized and regularized their input. These results, in combination with previous work, suggest that synchronic consonant epenthesis may only emerge very rarely, from a gradual accumulation of changes over time. It is argued that the theoretical status of epenthesis must be reconsidered in light of these results, and that investigation of the sufficient learning conditions, and the diachronic developments necessary to produce those conditions, are of central importance to synchronic theory generally.

1 Introduction

Epenthesis is defined as insertion of a segment that has no correspondent in the relevant lexical, or underlying, form. There are various types of epenthesis that can be defined in terms of either the insertion environment, the features of the epenthesized segment, or both. The focus of this paper is on consonant epenthesis and, more specifically, default consonant epenthesis that results in markedness reduction (e.g. Prince and Smolensky (1993/2004)). Consonant

L Vocalisation As a Natural Phenomenon

Wyn Johnson and David Britain, Essex University

1. Introduction

The sound /l/ is generally characterised in the literature as a coronal lateral approximant. This standard description holds that the sound involves contact between the tip of the tongue and the alveolar ridge, but instead of the air being blocked at the sides of the tongue, it is allowed to pass down the sides. In many (but not all) dialects of English /l/ has two allophones: clear /l/ ([l]), roughly as described, and dark, or velarised, /l/ ([ɫ]), involving a secondary articulation, the retraction of the back of the tongue towards the velum. In dialects which exhibit this allophony, the clear /l/ occurs in syllable onsets and the dark /l/ in syllable rhymes (leaf [liːf] vs. feel [fiːɫ] and table [teːbɫ]). The focus of this paper is the phenomenon of l-vocalisation, that is to say the vocalisation of dark /l/ in syllable rhymes:

(1) feel [fiːw], table [teːbu], but leaf [liːf]

This process is widespread in the varieties of English spoken in the South-Eastern part of Britain (Bower 1973; Hardcastle & Barry 1989; Hudson and Holloway 1977; Meuter 2002; Przedlacka 2001; Spero 1996; Tollfree 1999; Trudgill 1986; Wells 1982), where it appears to be categorical in some varieties, and extends to many other dialects, including American English (Ash 1982; Hubbell 1950; Pederson 2001), Australian English (Borowsky 2001; Borowsky and Horvath 1997; Horvath and Horvath 1997, 2001, 2002), New Zealand English (Bauer 1986, 1994; Horvath and Horvath 2001, 2002) and Falkland Island English (Sudbury 2001).