With Natural Language-Processing (In the Brain)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Malware Classification with BERT

San Jose State University, SJSU ScholarWorks. Master's Projects, Master's Theses and Graduate Research, Spring 5-25-2021. Malware Classification with BERT. Joel Lawrence Alvares. Follow this and additional works at: https://scholarworks.sjsu.edu/etd_projects. Part of the Artificial Intelligence and Robotics Commons, and the Information Security Commons. Malware Classification with Word Embeddings Generated by BERT and Word2Vec. Malware Classification with BERT, presented to the Department of Computer Science, San José State University, in partial fulfillment of the requirements for the degree, by Joel Alvares, May 2021. The Designated Project Committee approves the project titled Malware Classification with BERT by Joel Lawrence Alvares. Approved for the Department of Computer Science, San Jose State University, May 2021: Prof. Fabio Di Troia, Department of Computer Science; Prof. William Andreopoulos, Department of Computer Science; Prof. Katerina Potika, Department of Computer Science. ABSTRACT Malware classification is used to distinguish unique types of malware from each other. This project aims to carry out malware classification using word embeddings, which are used in Natural Language Processing (NLP) to identify and evaluate the relationship between words of a sentence. Word embeddings generated by BERT and Word2Vec for malware samples are used to carry out multi-class classification. BERT is a transformer-based pre-trained NLP model which can be used for a wide range of tasks such as question answering, paraphrase generation and next sentence prediction. However, the attention mechanism of a pre-trained BERT model can also be used in malware classification by capturing information about the relation between each opcode and every other opcode belonging to a malware family.

BROS: A Pre-Trained Language Model for Understanding Texts in Document

Under review as a conference paper at ICLR 2021. BROS: A PRE-TRAINED LANGUAGE MODEL FOR UNDERSTANDING TEXTS IN DOCUMENT. Anonymous authors. Paper under double-blind review. ABSTRACT Understanding document from their visual snapshots is an emerging and challenging problem that requires both advanced computer vision and NLP methods. Although the recent advance in OCR enables the accurate extraction of text segments, it is still challenging to extract key information from documents due to the diversity of layouts. To compensate for the difficulties, this paper introduces a pre-trained language model, BERT Relying On Spatiality (BROS), that represents and understands the semantics of spatially distributed texts. Different from previous pre-training methods on 1D text, BROS is pre-trained on large-scale semi-structured documents with a novel area-masking strategy while efficiently including the spatial layout information of input documents. Also, to generate structured outputs in various document understanding tasks, BROS utilizes a powerful graph-based decoder that can capture the relation between text segments. BROS achieves state-of-the-art results on four benchmark tasks: FUNSD, SROIE*, CORD, and SciTSR. Our experimental settings and implementation codes will be publicly available. 1 INTRODUCTION Document intelligence (DI), which understands industrial documents from their visual appearance, is a critical application of AI in business. One of the important challenges of DI is a key information extraction task (KIE) (Huang et al., 2019; Jaume et al., 2019; Park et al., 2019) that extracts structured information from documents such as financial reports, invoices, business emails, insurance quotes, and many others.

Information Extraction Based on Named Entity for Tourism Corpus

Information Extraction based on Named Entity for Tourism Corpus. Chantana Chantrapornchai and Aphisit Tunsakul, Dept. of Computer Engineering, Faculty of Engineering, Kasetsart University, Bangkok, Thailand. Abstract: Tourism information is scattered around nowadays. To search for the information, it is usually time consuming to browse through the results from search engine, select and view the details of each accommodation. In this paper, we present a methodology to extract particular information from full text returned from the search engine to facilitate the users. Then, the users can specifically look to the desired relevant information. The approach can be used for the same task in other domains. The main steps are 1) building training data and 2) building recognition model. First, the tourism data is gathered and the vocabularies are built. The raw corpus is used to train for creating vocabulary embedding. Also, it is used for creating annotated data. ... The ontology is extracted based on HTML web structure, and the corpus is based on WordNet. For these approaches, the time consuming process is the annotation which is to annotate the type of name entity. In this paper, we target at the tourism domain, and aim to extract particular information helping for ontology data acquisition. We present the framework for the given named entity extraction. Starting from the web information scraping process, the data are selected based on the HTML tag for corpus building. The data is used for model creation for automatic ...

NLP with BERT: Sentiment Analysis Using SAS® Deep Learning and DLPy, Doug Cairns and Xiangxiang Meng, SAS Institute Inc.

Paper SAS4429-2020. NLP with BERT: Sentiment Analysis Using SAS® Deep Learning and DLPy. Doug Cairns and Xiangxiang Meng, SAS Institute Inc. ABSTRACT A revolution is taking place in natural language processing (NLP) as a result of two ideas. The first idea is that pretraining a deep neural network as a language model is a good starting point for a range of NLP tasks. These networks can be augmented (layers can be added or dropped) and then fine-tuned with transfer learning for specific NLP tasks. The second idea involves a paradigm shift away from traditional recurrent neural networks (RNNs) and toward deep neural networks based on Transformer building blocks. One architecture that embodies these ideas is Bidirectional Encoder Representations from Transformers (BERT). BERT and its variants have been at or near the top of the leaderboard for many traditional NLP tasks, such as the general language understanding evaluation (GLUE) benchmarks. This paper provides an overview of BERT and shows how you can create your own BERT model by using SAS® Deep Learning and the SAS DLPy Python package. It illustrates the effectiveness of BERT by performing sentiment analysis on unstructured product reviews submitted to Amazon. INTRODUCTION Providing a computer-based analog for the conceptual and syntactic processing that occurs in the human brain for spoken or written communication has proven extremely challenging. As a simple example, consider the abstract for this (or any) technical paper. If well written, it should be a concise summary of what you will learn from reading the paper. As a reader, you expect to see some or all of the following: technical context and/or problem; key contribution(s); salient result(s). If you were tasked to create a computer-based tool for summarizing papers, how would you translate your expectations as a reader into an implementable algorithm? This is the type of problem that the field of natural language processing (NLP) addresses.

What the Neurocognitive Study of Inner Language Reveals About Our Inner Space Hélène Loevenbruck

What the neurocognitive study of inner language reveals about our inner space. Hélène Loevenbruck. To cite this version: Hélène Loevenbruck. What the neurocognitive study of inner language reveals about our inner space. Epistémocritique, épistémocritique : littérature et savoirs, 2018, Langage intérieur - Espaces intérieurs / Inner Speech - Inner Space, 18. hal-02039667. HAL Id: hal-02039667, https://hal.archives-ouvertes.fr/hal-02039667. Submitted on 20 Sep 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Preliminary version produced by the author. In Lœvenbruck H. (2008). Épistémocritique, n° 18 : Langage intérieur - Espaces intérieurs / Inner Speech - Inner Space, Stéphanie Smadja, Pierre-Louis Patoine (eds.) [http://epistemocritique.org/what-the-neurocognitive-study-of-inner-language-reveals-about-our-inner-space/] - hal-02039667. Hélène Lœvenbruck, Université Grenoble Alpes, CNRS, Laboratoire de Psychologie et NeuroCognition (LPNC), UMR 5105, 38000, Grenoble, France. Abstract: Our inner space is furnished, and sometimes even stuffed, with verbal material. The nature of inner language has long been under the careful scrutiny of scholars, philosophers and writers, through the practice of introspection. The use of recent experimental methods in the field of cognitive neuroscience provides a new window of insight into the format, properties, qualities and mechanisms of inner language.

Unified Language Model Pre-Training for Natural

Unified Language Model Pre-training for Natural Language Understanding and Generation. Li Dong∗, Nan Yang∗, Wenhui Wang∗, Furu Wei∗†, Xiaodong Liu, Yu Wang, Jianfeng Gao, Ming Zhou, Hsiao-Wuen Hon. Microsoft Research. {lidong1,nanya,wenwan,fuwei}@microsoft.com {xiaodl,yuwan,jfgao,mingzhou,hon}@microsoft.com. Abstract: This paper presents a new UNIfied pre-trained Language Model (UNILM) that can be fine-tuned for both natural language understanding and generation tasks. The model is pre-trained using three types of language modeling tasks: unidirectional, bidirectional, and sequence-to-sequence prediction. The unified modeling is achieved by employing a shared Transformer network and utilizing specific self-attention masks to control what context the prediction conditions on. UNILM compares favorably with BERT on the GLUE benchmark, and the SQuAD 2.0 and CoQA question answering tasks. Moreover, UNILM achieves new state-of-the-art results on five natural language generation datasets, including improving the CNN/DailyMail abstractive summarization ROUGE-L to 40.51 (2.04 absolute improvement), the Gigaword abstractive summarization ROUGE-L to 35.75 (0.86 absolute improvement), the CoQA generative question answering F1 score to 82.5 (37.1 absolute improvement), the SQuAD question generation BLEU-4 to 22.12 (3.75 absolute improvement), and the DSTC7 document-grounded dialog response generation NIST-4 to 2.67 (human performance is 2.65). The code and pre-trained models are available at https://github.com/microsoft/unilm. 1 Introduction Language model (LM) pre-training has substantially advanced the state of the art across a variety of natural language processing tasks [8, 29, 19, 31, 9, 1].
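
The three objectives named in the abstract above differ only in which positions each token is allowed to attend to. The following is a minimal sketch of that idea using plain Python lists. The function name `build_attention_mask` and the 0/1 matrix convention are assumptions made for this illustration and are not taken from the released UNILM code; only the left-to-right case of the unidirectional objective is shown.

```python
def build_attention_mask(kind, src_len, tgt_len=0):
    """Return an n x n matrix; mask[i][j] == 1 means token i may attend to token j.

    Hypothetical helper for illustration only (not the UNILM implementation).
    """
    n = src_len + tgt_len
    if kind == "bidirectional":
        # Every token sees every other token.
        return [[1] * n for _ in range(n)]
    if kind == "unidirectional":
        # Left-to-right: token i sees itself and everything before it.
        return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]
    if kind == "seq2seq":
        mask = []
        for i in range(n):
            if i < src_len:
                # Source tokens see the whole source segment, nothing in the target.
                row = [1 if j < src_len else 0 for j in range(n)]
            else:
                # Target tokens see the whole source plus the target so far.
                row = [1 if j <= i else 0 for j in range(n)]
            mask.append(row)
        return mask
    raise ValueError("unknown mask kind: " + kind)


# Two source tokens followed by two target tokens.
for row in build_attention_mask("seq2seq", src_len=2, tgt_len=2):
    print(row)
```

In a real Transformer the zeros would typically be turned into large negative values added to the attention scores before the softmax, so that masked positions receive no weight.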

Question Answering by BERT

Running head: QUESTION AND ANSWERING USING BERT. Question and Answering Using BERT. Suman Karanjit, Computer Science Major, Minnesota State University Moorhead. Link to GitHub: https://github.com/skaranjit/BertQA. Table of Contents: ABSTRACT; INTRODUCTION; SQUAD; BERT EXPLAINED; WHAT IS BERT?; ARCHITECTURE; INPUT PROCESSING; GETTING ANSWER; SETTING UP THE ENVIRONMENT ...

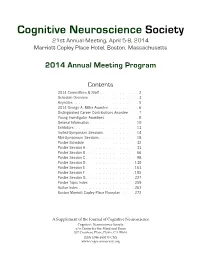

CNS 2014 Program

Cognitive Neuroscience Society 21st Annual Meeting, April 5-8, 2014, Marriott Copley Place Hotel, Boston, Massachusetts. 2014 Annual Meeting Program. Contents: 2014 Committees & Staff; Schedule Overview; Keynotes; 2014 George A. Miller Awardee; Distinguished Career Contributions Awardee; Young Investigator Awardees; General Information; Exhibitors; Invited-Symposium Sessions; Mini-Symposium Sessions; Poster Schedule; Poster Session A; Poster Session B; Poster Session C; Poster Session D; Poster Session E; Poster Session F; Poster Session G; Poster Topic Index; Author Index; Boston Marriott Copley Place Floorplan. A Supplement of the Journal of Cognitive Neuroscience. Cognitive Neuroscience Society, c/o Center for the Mind and Brain, 267 Cousteau Place, Davis, CA 95616. ISSN 1096-8857. © CNS. www.cogneurosociety.org. 2014 Committees & Staff. Governing Board: Roberto Cabeza, Ph.D., Duke University; Marta Kutas, Ph.D., University of California, San Diego; Helen Neville, Ph.D., University of Oregon; Daniel Schacter, Ph.D., Harvard University; Michael S. Gazzaniga, Ph.D., University of California, Santa Barbara (ex officio); George R. Mangun, Ph.D., University of California, ... Mini-Symposium Committee: David Badre, Ph.D., Brown University (Chair); Adam Aron, Ph.D., University of California, San Diego; Lila Davachi, Ph.D., New York University; Elizabeth Kensinger, Ph.D., Boston College; Gina Kuperberg, Ph.D., Harvard University; Thad Polk, Ph.D., University of Michigan.

Talking to Computers in Natural Language

Talking to Computers in Natural Language. Natural language understanding is as old as computing itself, but recent advances in machine learning and the rising demand of natural-language interfaces make it a promising time to once again tackle the long-standing challenge. By Percy Liang. DOI: 10.1145/2659831. As you read this sentence, the words on the page are somehow absorbed into your brain and transformed into concepts, which then enter into a rich network of previously-acquired concepts. This process of language understanding has so far been the sole privilege of humans. But the universality of computation, as formalized by Alan Turing in the early 1930s, which states that any computation could be done on a Turing machine, offers a tantalizing possibility that a computer could understand language as well. Later, Turing went on in his seminal 1950 article, “Computing Machinery and Intelligence,” to propose the now-famous Turing test, a bold and speculative method to evaluate whether a computer actually understands language (or more broadly, is “intelligent”). While this test has led to the development of amusing chatbots that attempt to fool human judges by engaging in light-hearted banter, the grand challenge of developing serious programs that can truly understand language in useful ways remains wide ... artificial intelligence at the time. Daniel Bobrow built a system for his Ph.D. thesis at MIT to solve algebra word problems found in high-school algebra books, for example: “If the number of customers Tom gets is twice the square of 20% of the number of advertisements he runs, and the number of advertisements is 45, then what is the number of customers Tom gets?” [1]. ... Figure 1). SHRDLU could both answer questions and execute actions, for example: “Find a block that is taller than the one you are holding and put it into the box.” In this case, SHRDLU would first have to understand that the blue block is the referent and then perform the action by moving the small green block out of the way and then lifting the ...

Revealing the Language of Thought, Brent Silby

Revealing the Language of Thought. An e-book by Brent Silby. This paper was produced at the Department of Philosophy, University of Canterbury, New Zealand. Copyright © Brent Silby 2000. Contents: Abstract. Chapter 1: Introduction. Chapter 2: Thinking Sentences (1. Preliminary Thoughts; 2. The Language of Thought Hypothesis; 3. The Map Alternative; 4. Problems with Mentalese). Chapter 3: Installing New Technology: Natural Language and the Mind (1. Introduction; 2. Language... what's it for?; 3. Natural Language as the Language of Thought; 4. What can we make of the evidence?). Chapter 4: The Last Stand... Don't Replace The Old Code Yet (1. The Fight for Mentalese; 2. Pinker's Resistance; 3. Pinker's Continued Resistance; 4. A Concluding Thought about Thought). Chapter 5: A Direction for Future Thought (1. The Review; 2. The Conclusion; 3. Expanding the mind beyond the confines of the biological brain). References / Acknowledgments. Abstract: Language of thought theories fall primarily into two views. The first view sees the language of thought as an innate language known as mentalese, which is hypothesized to operate at a level below conscious awareness while at the same time operating at a higher level than the neural events in the brain. The second view supposes that the language of thought is not innate. Rather, the language of thought is natural language. So, as an English speaker, my language of thought would be English. My goal is to defend the second view.

Distilling BERT for Natural Language Understanding

TinyBERT: Distilling BERT for Natural Language Understanding. Xiaoqi Jiao1∗†, Yichun Yin2∗‡, Lifeng Shang2‡, Xin Jiang2, Xiao Chen2, Linlin Li3, Fang Wang1‡ and Qun Liu2. 1 Key Laboratory of Information Storage System, Huazhong University of Science and Technology, Wuhan National Laboratory for Optoelectronics. 2 Huawei Noah’s Ark Lab. 3 Huawei Technologies Co., Ltd. Abstract: Language model pre-training, such as BERT, has significantly improved the performances of many natural language processing tasks. However, pre-trained language models are usually computationally expensive, so it is difficult to efficiently execute them on resource-restricted devices. To accelerate inference and reduce model size while maintaining accuracy, we first propose a novel Transformer distillation method that is specially designed for knowledge distillation (KD) of the Transformer-based models. By leveraging this new KD method, the plenty of knowledge encoded in a large “teacher” BERT can be effectively transferred to a small “student” Tiny- ... natural language processing (NLP). Pre-trained language models (PLMs), such as BERT (Devlin et al., 2019), XLNet (Yang et al., 2019), RoBERTa (Liu et al., 2019), ALBERT (Lan et al., 2020), T5 (Raffel et al., 2019) and ELECTRA (Clark et al., 2020), have achieved great success in many NLP tasks (e.g., the GLUE benchmark (Wang et al., 2018) and the challenging multi-hop reasoning task (Ding et al., 2019)). However, PLMs usually have a large number of parameters and take long inference time, which are difficult to be deployed on edge devices such as mobile phones. Recent studies (Kovaleva et al., 2019; Michel et al., 2019; Voita et al., 2019) demonstrate that there is redundancy in PLMs.
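
To make the teacher/student idea in the abstract above concrete, here is a minimal sketch of one common ingredient of knowledge distillation: a soft cross-entropy between the teacher's and the student's output distributions. It is a generic illustration under stated assumptions, not the Transformer distillation method proposed in the paper; the names `log_softmax`, `soft_cross_entropy` and the `temperature` parameter are chosen for this example.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def soft_cross_entropy(teacher_logits, student_logits, temperature=1.0):
    """Cross-entropy of the student's distribution against the teacher's.

    Hypothetical helper: both sets of logits are divided by the same
    temperature before the softmax, a common choice in distillation.
    """
    t = [math.exp(v) for v in log_softmax([x / temperature for x in teacher_logits])]
    s = log_softmax([x / temperature for x in student_logits])
    return -sum(p * log_q for p, log_q in zip(t, s))


teacher = [2.0, 0.0]
# A student that matches the teacher is penalised less than one that disagrees.
print(soft_cross_entropy(teacher, [2.0, 0.0]))
print(soft_cross_entropy(teacher, [0.0, 2.0]))
```

Minimising this quantity over the student's parameters pulls the student's predictions toward the teacher's; the loss is smallest when the two distributions coincide.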


Learning to Remove: Towards Isotropic Pre-trained BERT Embedding

Learning to Remove: Towards Isotropic Pre-trained BERT Embedding. Yuxin Liang1, Rui Cao1, Jie Zheng⋆1, Jie Ren2, and Ling Gao1. 1 Northwest University, Xi'an, China. 2 Shaanxi Normal University, Xi'an, China. arXiv:2104.05274v2 [cs.CL], 27 Aug 2021. Abstract. Research in word representation shows that isotropic embeddings can significantly improve performance on downstream tasks. However, we measure and analyze the geometry of pre-trained BERT embedding and find that it is far from isotropic. We find that the word vectors are not centered around the origin, and the average cosine similarity between two random words is much higher than zero, which indicates that the word vectors are distributed in a narrow cone and deteriorate the representation capacity of word embedding. We propose a simple, and yet effective method to fix this problem: remove several dominant directions of BERT embedding with a set of learnable weights. We train the weights on word similarity tasks and show that processed embedding is more isotropic. Our method is evaluated on three standardized tasks: word similarity, word analogy, and semantic textual similarity. In all tasks, the word embedding processed by our method consistently outperforms the original embedding (with average improvement of 13% on word analogy and 16% on semantic textual similarity) and two baseline methods. Our method is also proven to be more robust to changes of hyperparameter. Keywords: Natural language processing · Pre-trained embedding · Word representation · Anisotropic. 1 Introduction With the rise of Transformers [12], its derivative model BERT [1] stormed the NLP field due to its excellent performance in various tasks.
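
The geometric claims in the abstract above (vectors not centered on the origin, high average cosine similarity, removal of a direction scaled by a weight) can be illustrated on toy data. The sketch below is an assumption-laden illustration, not the authors' method: the four 2-D vectors are made up, the helper names are hypothetical, and the direction and weight passed to `remove_direction` are supplied by hand rather than learned.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def mean_pairwise_cosine(vectors):
    """Average cosine similarity over all distinct pairs of vectors."""
    pairs = [(i, j) for i in range(len(vectors)) for j in range(i + 1, len(vectors))]
    return sum(cosine(vectors[i], vectors[j]) for i, j in pairs) / len(pairs)


def center(vectors):
    """Subtract the mean vector so the cloud is centered on the origin."""
    dim = len(vectors[0])
    mean = [sum(v[k] for v in vectors) / len(vectors) for k in range(dim)]
    return [[v[k] - mean[k] for k in range(dim)] for v in vectors]


def remove_direction(vectors, direction, weight=1.0):
    """Subtract weight * (projection onto a unit-length direction) from each vector."""
    out = []
    for v in vectors:
        proj = sum(a * b for a, b in zip(v, direction))
        out.append([a - weight * proj * d for a, d in zip(v, direction)])
    return out


# Made-up vectors that all point roughly the same way (a "narrow cone").
toy = [[1.0, 0.1], [1.0, -0.1], [0.9, 0.2], [1.1, -0.2]]
print(mean_pairwise_cosine(toy))          # close to 1: strongly anisotropic
print(mean_pairwise_cosine(center(toy)))  # much lower after centering
```

In the paper's setting the directions would be the dominant directions of the embedding matrix and the weights would be trained; here `weight=1.0` simply removes the component along the given direction entirely.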