Data Mining for Material Flow Analysis: Application in the Territorial Breakdown of French Regions Brinduşa Smaranda

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Towards a Dynamic Assessment of Raw Materials Criticality: Linking Agent-Based Demand--With Material Flow Supply Modelling Approaches

This is a repository copy of Towards a dynamic assessment of raw materials criticality: linking agent-based demand--with material flow supply modelling approaches.. White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/80022/ Version: Accepted Version Article: Knoeri, C, Wäger, PA, Stamp, A et al. (2 more authors) (2013) Towards a dynamic assessment of raw materials criticality: linking agent-based demand--with material flow supply modelling approaches. Science of the Total Environment, 461-46. 808 - 812. ISSN 0048-9697 https://doi.org/10.1016/j.scitotenv.2013.02.001 Reuse Unless indicated otherwise, fulltext items are protected by copyright with all rights reserved. The copyright exception in section 29 of the Copyright, Designs and Patents Act 1988 allows the making of a single copy solely for the purpose of non-commercial research or private study within the limits of fair dealing. The publisher or other rights-holder may allow further reproduction and re-use of this version - refer to the White Rose Research Online record for this item. Where records identify the publisher as the copyright holder, users can verify any specific terms of use on the publisher’s website. Takedown If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/ Towards a dynamic assessment of raw materials criticality: Linking agent-based demand - with material flow supply modelling approaches Christof Knoeri1, Patrick A. -

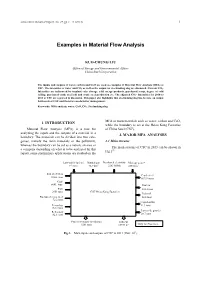

Examples in Material Flow Analysis

China Steel Technical Report, No. 27, pp.1-5, (2014) Kuo-Chung Liu 1 Examples in Material Flow Analysis KUO-CHUNG LIU Office of Energy and Environmental Affairs China Steel Corporation The inputs and outputs of water, carbon and CaO are used as examples of Material Flow Analysis (MFA) at CSC. The intensities of water and CO2 as well as the output for steelmaking slag are discussed. Current CO2- Intensities are influenced by in-plant coke storage, sold energy products, purchased scrap, degree of cold rolling, purchased crude steel/roll and crude steel production etc. The adjusted CO2- Intensities for 2010 to 2013 at CSC are reported in discussion. This paper also highlights that steelmaking slag has become an output bottleneck at CSC and therefore needs better management. Keywords: MFA analysis, water, CaO, CO2, Steelmaking slag MFA of main materials such as water, carbon and CaO, 1. INTRODUCTION while the boundary is set at the Hsiao Kang Factories Material Flow Analysis (MFA) is a tool for of China Steel (CSC). analyzing the inputs and the outputs of a material in a 2. MAJOR MFA ANALYSES boundary. The materials can be divided into two cate- gories, namely the main materials or the pollutants, 2.1 Main streams whereas the boundary can be set as a nation, an area or The main streams of CSC in 2013 can be shown in a company depending on what is to be analyzed. In this Fig.1(1). report, some preliminary applications are studied on the Low-sulfer fuel oil Natural gas Purchased electricity Makeup water* 9.3 tons 76.9 km3 2283 MWh 45554 m3 Iron ore/Pellets Crude steel 13066 tons 8693.6 tons Coal 6801 tons Coal tar Flux 205.2 tons 2951 tons CSC Hsiao Kang Factories Light oil Purchased scrap steel 54.8 tons 72.2 tons Liquid sulfur Ferroalloy 11.3 tons 132.1 tons Iron oxide powder Refractory 82.6 tons 28.7 tons Process residues (wet basis) Effluent 5281 tons 14953 m3 Only for Processes. -

Final Energy and Exergy Flow Portuguese Energy Sector

Primary-to-Final Energy and Exergy Flow s in the Portuguese Energy Sector 1960 to 2009 Dominique Anjo da Silva Thesis to obtain the M aster of Science Degree in Mechanical Engineering Examination Committee Chairperson: Prof. Dr. Mário Manuel Gonçalves da Costa Supervisor: Prof.ª Dr. ª Tânia Alexandra dos Santos Costa e Sousa Co-supervisor : Eng. André González Cabrera Honório Serrenho Members of the committee: Dr. Miguel Perez Neves Águas July 2013 1 Acknowledgements To Prof.ª Dr.ª Tânia Sousa for providing me the opportunity to elaborate my thesis on a topic I am passionate about. Her support and guidance throughout this thesis, as well as her openness to fruitful discussions, made this journey an enjoyable one. To Eng. André Serrenho, for his valuable knowledge and support. His expertise was proved fundamental to understand the workings of the IEA database and our discussions constituted a great learning opportunity. To the team at the EDP Foundation, in particular Eng. Pires Barbosa, Eng. Luis Cruz and Eng. Eduardo Moura for their technical expertise and valuable insight on the history of energy production in Portugal. Also to the team at the Electricity Museum in Lisbon, in particular Raquel Eleutério, for providing the opportunity to undertake a 6-month internship, which helped me develop a better technical, historical and societal understanding of the evolution of energy supply in Portugal from 1920 to the present. To Eng. Ana Pipio and Prof. Dr. José Santos-Victor, for their mentorship and support while I worked at the International Affairs team at Instituto Superior Técnico. To Prof. -

A Review of Circular Economy Development Models in China, Germany and Japan

Review A Review of Circular Economy Development Models in China, Germany and Japan Olabode Emmanuel Ogunmakinde School of Architecture and Built Environment, University of Newcastle, Callaghan, New South Wales 2308, Australia; [email protected], Tel: +61415815561 Received: 15 May 2019; Accepted: 2 July 2019; Published: 3 July 2019 Abstract: The circular economy (CE) concept is gaining traction as a sustainable strategy for reducing waste and enhancing resource efficiency. This concept has been adopted in some countries such as Denmark, Netherlands, Scotland, Sweden, Japan, China, and Germany while it is being considered by others including England, Austria, and Finland. The CE has been employed in the manufacturing, agricultural, textile, and steel industries but its implementation varies. It is against this backdrop that this study seeks to identify CE implementation in three pioneering countries (China, Japan, and Germany). A critical review and analysis of the literature was conducted. The results revealed enabling and core policies/laws for the development of the CE concept. It also identified the implementation structure of the CE in China, Germany, and Japan. In conclusion, the findings of this study are expected to serve as a guide for developing and implementing the CE concept in various sectors of the economy. Keywords: China; circular economy; Germany; implementation; Japan; resource efficiency; waste management 1. Background of the Study The linear economy is a waste-generating model. It is a system where resources become waste due to production and consumption processes [1]. The linear economy model is a “take-make-waste” approach [2,3] that extracts raw materials to manufacture products, which are disposed of by consumers after use. -

Material Flow Analysis of the Forest-Wood

Material flow analysis of the forest-wood supply chain: A consequential approach for log export policies in France Jonathan Lenglet, Jean-Yves Courtonne, Sylvain Caurla To cite this version: Jonathan Lenglet, Jean-Yves Courtonne, Sylvain Caurla. Material flow analysis of the forest-wood supply chain: A consequential approach for log export policies in France. Journal of Cleaner Produc- tion, Elsevier, 2017, 165, pp.1296-1305. 10.1016/j.jclepro.2017.07.177. hal-01612454 HAL Id: hal-01612454 https://hal.archives-ouvertes.fr/hal-01612454 Submitted on 6 Oct 2017 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Material flow analysis of the forest-wood supply chain: a consequential approach for log export policies in France Jonathan Lengleta,∗, Jean-Yves Courtonneb,c,d, Sylvain Caurlae aAgroParisTech, France bSTEEP team, INRIA Grenoble - Rhˆone-Alpes,Montbonnot, France cUniversit´eGrenoble Alpes, France dArtelia Eau et Environnement, Echirolles, France eUMR INRA – AgroParisTech, Laboratoire d’Economie´ Foresti`ere, 54042 Nancy Cedex, France Abstract. Part of the French timber transformation industry suffers from difficulties to adapt to recent changes on global markets. This translates into net exports of raw wood and imports of transformed products, detrimental to both the trade balance and the local creation of wealth. -

The State of the Art of Material Flow Analysis Research Based on Construction and Demolition Waste Recycling and Disposal

buildings Review The State of the Art of Material Flow Analysis Research Based on Construction and Demolition Waste Recycling and Disposal Dongming Guo * and Lizhen Huang Department of Manufacturing and Civil Engineering, Norwegian University of Science and Technology, 2802 Gjovik, Norway; [email protected] * Correspondence: [email protected]; Tel.: +47-925-59-641 Received: 9 August 2019; Accepted: 18 September 2019; Published: 21 September 2019 Abstract: Construction and demolition waste (C&D waste) are widely recognized as the main form municipal solid waste, and its recycling and reuse are an important issue in sustainable city development. Material flow analysis (MFA) can quantify materials flows and stocks, and is a useful tool for the analysis of construction and demolition waste management. In recent years, material flow analysis has been continually researched in construction and demolition waste processing considering both single waste material and mixed wastes, and at regional, national, and global scales. Moreover, material flow analysis has had some new research extensions and new combined methods that provide dynamic, robust, and multifaceted assessments of construction and demolition waste. In this paper, we summarize and discuss the state of the art of material flow analysis research in the context of construction and demolition waste recycling and disposal. Furthermore, we also identify the current research gaps and future research directions that are expected to promote the development of MFA for construction and demolition waste processing in the field of sustainable city development. Keywords: Material flow analysis (MFA); construction and demolition waste (C&D waste); recycling and reuse; environmental impact 1. Introduction The construction and operation of buildings occupy almost 40% of the depletion of natural resources and 25% of global waste [1,2]. -

Industrial Ecology Approaches to Improve Metal Management

Industrial Ecology Approaches to Improve Metal Management Three Modeling Experiments Rajib Sinha Licentiate Thesis Industrial Ecology Department of Sustainable Development, Environmental Science and Engineering (SEED) KTH Royal Institute of Technology Stockholm, Sweden 2014 Title: Industrial Ecology Approaches to Improve Metal Management: Three Modeling Experiments Author: Rajib Sinha Registration: TRITA-IM-LIC 2014:01 ISBN: 978-91-7595-396-0 Contact information: Industrial Ecology Department of Sustainable Development, Environmental Science and Engineering (SEED) School of Architecture and the Built Environment, KTH Royal Institute of Technology Technikringen 34, SE-100 44 Stockholm, Sweden Email: [email protected] www.kth.se Printed by: Universitetetsservice US-AB, Stockholm, Sweden, 2014 ii Preface This licentiate thesis1 attempts to capture specific, but also diverse, research interests in the field of industrial ecology. It therefore has a broader scope than a normal licentiate thesis. Here, I would like to share my academic journey in producing the thesis to guide the reader in understanding the content and the background. At high school (standard 9−12), I was most interested in mathematics and physics. This led me to study engineering, and I completed my B.Sc. in Civil Engineering at BUET, Dhaka, Bangladesh. During my undergraduate studies, I developed a great interest in structural engineering. As a result, I chose finite element analysis of shear stress as my Bachelor's degree project. The title of the dissertation was Analysis of Steel-Concrete Composite Bridges with Special Reference to Shear Connectors. All my close classmates at university and I chose an environmental engineering path for further studies. With my interest in environmental engineering, I completed my M.Sc. -

SHC Project 3.63 Sustainable Materials Management Project Plan

Sustainable & Healthy Communities Research Program Project Plan for Project 3.63 Sustainable Materials Management Project Lead and Deputy Lead Thabet Tolaymat, NRMRL, Project Lead Edwin Barth, NRMRL, Deputy Project Lead Project Period October 1, 2015 (from FY16) to September 30, 2019 (through FY 19) Table of Contents Project Summary ............................................................................................................................. 1 Project Description.......................................................................................................................... 2 Outputs ............................................................................................................................................ 2 Focus Areas ..................................................................................................................................... 2 Focus Area 1: Life Cycle Management of Materials .................................................................. 3 Focus Area 2: Reuse of Organics and Other Materials............................................................... 5 Focus Area 3: Regulatory Support .............................................................................................. 7 Collaboration................................................................................................................................... 8 Task 1 – Tools and Methods for SMM Decision Analytics ......................................................... 10 Task 2 – Beneficial Use of -

Urban Metabolism and Its Applications to Urban Planning and Design

Environmental Pollution xxx (2010) 1e9 Contents lists available at ScienceDirect Environmental Pollution journal homepage: www.elsevier.com/locate/envpol Review The study of urban metabolism and its applications to urban planning and design C. Kennedy a,*, S. Pincetl b, P. Bunje b a Department of Civil Engineering, University of Toronto, Toronto, Canada b Institute of the Environment, UCLA, CA, United States The presents a chronological review of urban metabolism studies and highlights four areas of application. article info abstract Article history: Following formative work in the 1970s, disappearance in the 1980s, and reemergence in the 1990s, Received 12 October 2010 a chronological review shows that the past decade has witnessed increasing interest in the study of Accepted 15 October 2010 urban metabolism. The review finds that there are two related, non-conflicting, schools of urban metabolism: one following Odum describes metabolism in terms of energy equivalents; while the second Keywords: more broadly expresses a city’s flows of water, materials and nutrients in terms of mass fluxes. Four Cities example applications of urban metabolism studies are discussed: urban sustainability indicators; inputs Energy to urban greenhouse gas emissions calculation; mathematical models of urban metabolism for policy Materials Waste analysis; and as a basis for sustainable urban design. Future directions include fuller integration of social, Urban planning health and economic indicators into the urban metabolism framework, while tackling the great Urban design sustainability challenge of reconstructing cities. Greenhouse gas emissions Ó 2010 Elsevier Ltd. All rights reserved. Sustainability indicators 1. Introduction humans, animals and vegetation. Thus, the notion that cities are like ecosystems is also appropriate. -

Scaling up Circular Strategies to Achieve Zero Plastic Waste in Thailand

SCALING UP CIRCULAR STRATEGIES TO ACHIEVE ZERO PLASTIC WASTE IN THAILAND WWF Thailand, November 2020 ABOUT THIS REPORT PURPOSE: This report intends to promote existing circular strategies that support plastic waste management in Thailand, as well as to provide key insights and considerations to guide future strategic development among government and the private sector towards a more collaborative, fair and impactful circular waste management system for all those involved. In doing so, it aims to envision a sustainable, healthy and prosperous ‘zero plastic waste’ Thailand. Desired outcomes: • To serve as a knowledge base for conversations between WWF, national and local government, private sector businesses and supporting organisations (civil society, universities, think tanks, etc.). • To bring together diverse stakeholders to connect, collaborate and be part of an action- led network. • To develop trusted and impactful partnerships in Thailand between WWF and national and local government, private sector businesses and supporting organisations. • To generate awareness on a broader level around the benefits of reducing plastic waste. • To build a case for empowering informal waste collectors in their efforts to support national plastic waste achievements and targets. WWF THAILAND 2020 HOW TO READ THIS REPORT: This is Urgent: The COVID-19 Pandemic is The first half of this report - Sections Escalating Plastic Waste 1 to 3 - is intended to raise awareness While much has been made of the unexpected and empower conversations with environmental benefits of the COVID-19 pandemic and among policymakers, the private (e.g. an initial 5% reduction in greenhouse gas emissions), it has also brought additional challenges sector and supporting organisations. -

Contribution of Material Flow Assessment in Recycling Processes to Environmental Management Information Systems (EMIS)

EnviroInfo 2010 (Cologne/Bonn) Integration of Environmental Information in Europe Copyright © Shaker Verlag 2010. ISBN: 978-3-8322-9458-8 Contribution of Material Flow Assessment in Recycling Processes to Environmental Management Information Systems (EMIS) Alexandra Pehlken, Martin Rolbiecki, André Decker, Klaus-Dieter Thoben Bremen University, Institute of Integrated Product Development Badgasteinerstr. 1, D-28359 Bremen [email protected] Abstract Material Flow Assessment (MFA) is a method of analyzing the material flow of a process in a well-defined system. Referring to the life cycle of a product the Material Flow Assessment is part of a Life Cycle Assessment (LCA) and provides the possibility of assessing the environmental impact of a process and product respectively. Applying these methods to recycling processes the potential of saving primary and secondary resources may be measurable. The presented paper will give an overview on the strategy how MFA can contribute to Environmental Management Information Systems (EMIS). 1. Introduction Recycling processes can contribute to lower environmental impacts because they possess a huge potential of secondary resources. The input of a recycling plant is no longer considered as waste stream; instead it is high valuable material that enters the recycling process and the output is even more valuable. Due to the fact that residues always vary in their composition and material flow only data ranges can be used as input parameter. A simulation of recycling processes is therefore often difficult. Notten and Petrie (2003) substantiate the statement that “different sources of uncertainty require different methods for their assessment”. Therefore, recycling process models rely not only on the quality of the process data but also on the uncertainty assessment. -

Toward Social Material Flow Analysis: on the Usefulness of Boundary Objects in Urban Mining Research

Toward Social Material Flow Analysis: On the Usefulness of Boundary Objects in Urban Mining Research Björn Wallsten Linköping University Post Print N.B.: When citing this work, cite the original article. Original Publication: Björn Wallsten, Toward Social Material Flow Analysis: On the Usefulness of Boundary Objects in Urban Mining Research, 2015, Journal of Industrial Ecology, (19), 5, 742-752. http://dx.doi.org/10.1111/jiec.12361 Copyright: Wiley: No OnlineOpen http://eu.wiley.com/WileyCDA/Brand/id-35.html Postprint available at: Linköping University Electronic Press http://urn.kb.se/resolve?urn=urn:nbn:se:liu:diva-122664 Toward Social Material Flow Analysis – On the Usefulness of Boundary Objects in Urban Mining Research Author: Björn Wallsten* Published in Journal of Industrial Ecology 19(5) 742–752. * Corresponding author. Department of Management and Engineering, Environmental Technology and Management, Linköping University, SE- 581 83 Linköping, Sweden. Tel: +4613285625 Email: [email protected] Summary Material flow analysis (MFA) has been an effective tool to identify the scale of physical activity, the allocation of materials across economic sectors for different purposes and to identify inefficiencies in production systems or in urban contexts. However, MFA relies on the invisibilization of the social drivers of those flows to be able to perform its calculations. In many cases therefor, it remains detached from the for example urban processes that underpin them. This becomes a problem when the purpose of research is to design detailed recycling schemes or the like, for which micro-level practice knowledge on how material flows are mediated by human agency is needed.