F15 Morphcore

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

We Shape the Connected World Automotive Autonomy Generating Energy Effectively Wearable Technology ARM’S Technology Is Cars Are Becoming Mobile Computing Platforms

ARM Holdings plc Annual Report 2015: Strategic Report We shape the connected world Automotive autonomy Generating energy effectively Wearable technology ARM’s technology is Cars are becoming mobile computing platforms. Wind turbines and solar panels can be made Smart watches, biometric-monitors and More sensors and cameras are being included more effective by including technology that augmented reality headsets are intelligent, to assist the driver with lane detection, reading controls and monitors the wind turbine, and connected devices that can give us extra shaping the way we roadside signage and identifying potential hazards aggregates data across the entire wind farm. information to improve our health and or people crossing the road. In time, driver wellness, or just to help us have more fun. all live our lives; in the assistance may lead to a fully automated vehicle. home, as we travel, at school or work, and as we have fun with our friends Mobile computing Smart city streets Intelligent networks Smarter homes ARM-based mobile computers, including City infrastructure from street lights to car Broadband and mobile phone network speeds Cost-efficiency in the home can be improved smartphones, tablets and some laptops are, parking meters can be made more effective by are increasing, and latency decreasing, enabling through learning thermostats that understand for many people, the primary device for their embedding intelligent chips. Street lights that new services for operators to provide to your daily routine, domestic appliances that use work, whether in an office or on the road; can dim when no one is nearby will save energy consumers and enterprises, from delivering advanced algorithms for calculating water and for researching and writing school assignments; and reduce carbon emissions, and prognostics in more movie and TV options to collating and detergent requirements, and smart meters that and for engaging with friends. -

Internet & Connectivity

Cover - 228.qxp 6/10/2009 8:42 AM Page 1 Battery-in-a-Chip Technology p. 62 • Up & Running With C Language p. 70 www.circuitcellar.com CIRCUITTHE MAGAZINE FOR COMPUTER CELLAR APPLICATIONS #228 July 2009 INTERNET & CONNECTIVITY Internet-Based Weather Data Acquisition A Compact Webcam Design From Start to Finish Ethernet-Controlled HERMS Text Library for Real-Time Translation I2C Master Bus Controller $5.95 U.S. ($6.95 Canada) C2.qxp 1/29/2009 10:42 AM Page 1 1.qxp 4/3/2009 10:20 AM Page 1 25.qxp 4/27/2009 8:36 PM Page 1 3.qxp 6/2/2009 1:42 PM Page 1 Let your geek shine. Meet Vanessa Carpenter and Diesel Møbius, SparkFun customers and developers of the Critical Corset. Using a Polar heart rate monitor, an Arduino, and a cleverly hidden air pump system, Vanessa and Diesel designed a corset that explores the rules of attraction. As the user’s heart rate increases, the corset gently tightens, creating a more confident posture. Whether you need a heart rate monitor or just a handful of LEDs, the tools are out there. Create a project you’ll love, and let your geek shine too. Sharing Ingenuity WWW.SPARKFUN.COM ©2009 SparkFun Electronics, Inc. All rights reserved. For more info on Vanessa and Diesel’s project visit www.illutron.dk. SFE-0015-PrintAd05-CircuitCellar.indd 1 5/19/09 4:49 PM Task_Masthead_228.qxp 6/12/2009 8:46 AM Page 4 ASK ® TMANAGER CIRCUIT CELLAR THE MAGAZINE FOR COMPUTER APPLICATIONS ’Net Tech and You FOUNDER/EDITORIAL DIRECTOR Steve Ciarcia CHIEF FINANCIAL OFFICER Jeannette Ciarcia MANAGING EDITOR With each passing year, we receive more and more arti- C. -

ECE 4999: INDEPENDENT STUDY on PORTING AMBER CPU to DE1-SOC and ALTERA BUS Mohammad Saifee Dohadwala

ECE 4999: INDEPENDENT STUDY ON PORTING AMBER CPU TO DE1-SOC AND ALTERA BUS Mohammad Saifee Dohadwala Under the supervision of: Professor Bruce R. Land Fall 2016 Table of Contents Table of Figures ............................................................................................................................................ 2 1. Introduction ........................................................................................................................................... 3 2. FPGA .................................................................................................................................................... 3 3. Amber Core ........................................................................................................................................... 5 3.1 Amber 23 Pipeline Architecture ................................................................................................... 6 3.2 Registers ........................................................................................................................................ 6 3.3 Cache............................................................................................................................................. 7 4. Amber FPGA system ............................................................................................................................ 7 4.1 Clocks and Reset ........................................................................................................................... 8 4.2 BOOT Memory -

Amber : Enabling Precise Full-System Simulation with Detailed Modeling

Amber*: Enabling Precise Full-System Simulation with Detailed Modeling of All SSD Resources Donghyun Gouk1, Miryeong Kwon1, Jie Zhang1, Sungjoon Koh1, Wonil Choi1,2, Nam Sung Kim3, Mahmut Kandemir2 and Myoungsoo Jung1 Yonsei University, 1Computer Architecture and Memory Systems Lab, 2Pennsylvania State University, 3University of Illinois Urbana-Champaign, http://camelab.org Abstract—SSDs become a major storage component in modern While most of the time simulation-based studies are nec- memory hierarchies, and SSD research demands exploring future essary to explore a full design space by taking into account simulation-based studies by integrating SSD subsystems into a different SSD technologies, it is non-trivial to put SSD-based full-system environment. However, several challenges exist to model SSDs under a full-system simulations; SSDs are composed subsystems into a full-system simulation environment. First, upon their own complete system and architecture, which employ even though SSDs are considered as storage or memory all necessary hardware, such as CPUs, DRAM and interconnect subsystems, in contrast to conventional memory technologies, network. Employing the hardware components, SSDs also require they are in practice incarnated on top of a complete, in- to have multiple device controllers, internal caches and software dependent, and complex system that has its own computer modules that respect a wide spectrum of storage interfaces and protocols. These SSD hardware and software are all necessary architecture and system organization. Second, SSDs consist to incarnate storage subsystems under full-system environment, of not only a storage backend (by having multiple flash pack- which can operate in parallel with the host system. -

The Design of a Debugger Unit for a RISC Processor Core

Rochester Institute of Technology RIT Scholar Works Theses 3-2018 The Design of a Debugger Unit for a RISC Processor Core Nikhil Velguenkar [email protected] Follow this and additional works at: https://scholarworks.rit.edu/theses Recommended Citation Velguenkar, Nikhil, "The Design of a Debugger Unit for a RISC Processor Core" (2018). Thesis. Rochester Institute of Technology. Accessed from This Master's Project is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. The Design of a Debugger Unit for a RISC Processor Core by Nikhil Velguenkar Graduate Paper Submitted in partial fulfillment of the requirements for the degree of Master of Science in Electrical Engineering Approved by: Mr. Mark A. Indovina, Lecturer Graduate Research Advisor, Department of Electrical and Microelectronic Engineering Dr. Sohail A. Dianat, Professor Department Head, Department of Electrical and Microelectronic Engineering Department of Electrical and Microelectronic Engineering Kate Gleason College of Engineering Rochester Institute of Technology Rochester, New York March 2018 To my family and friends, for all of their endless love, support, and encouragement throughout my career at Rochester Institute of Technology Abstract Recently, there has been a significant increase in design complexity for Embedded Sys- tems often referred to as Hardware Software Co-Design. Complexity in design is due to both hardware and firmware closely coupled together in-order to achieve features forlow power, high performance and low area. Due to these demands, embedded systems consist of multiple interconnected hardware IPs with complex firmware algorithms running on the device. -

WIRELESS COMMUNICATIONS a Platform for Wireless Sensor Networks

Cover.qxp 1/4/2008 2:43 PM Page 1 TRANSFORMER BASICS P. 70 • MCU-BASED POLL SERVER RESULTS P. 74 • HOT CHIPS REVIEW P. 78 w w w . c i r c u i t c e l l a r . c o m CIRCUITTHE MAGAZINE FOR COMPUTER CELLAR APPLICATIONS #211 February 2008 WIRELESS COMMUNICATIONS A Platform for Wireless Sensor Networks Build an RFID Payment System Ciarcia’s PV System (Part 3) Antenna Technology 101 TCP/IP Socket Communication Firmware Protection & Publishing 02> 7925274 75349 $4.95 U.S. ($5.95 Canada) C2.qxp 10/1/2007 10:27 AM Page 1 1.qxp 10/1/2007 3:50 PM Page 1 It’s showtime. Get Your Ticket to the Ultimate Embedded Design Starter Kit. Fixed-function microcontrollers had their share of the spotlight. It’s time for a revolutionary—and simplified—approach to embedded application development. Get a Cypress PSoC® FirstTouch™ Starter Kit now and discover how much PSoC mixed-signal arrays—powerful, programmable analog and digital blocks, embedded memory and a fast MCU—shorten your time-to-market. This kit includes the easy-to-use PSoC Express™ visual embedded system design tool, and gives you 5 25507595 embedded designs you can evaluate right out of the box. Get yours and step into the spotlight today. M Includes four ready-to-use mixed-signal CAPSENSE CAPSENSE TOUCH TEMPERATURE PROXIMITY applications on a single platform. SENSING SENSING LIGHT SENSING SENSING Buy your PSoC FirstTouch Ultimate Starter Kit now at: www.cypress.com/go/FirstTouch C 5 25 50 75 95 Y 5 25 50 75 95 K 5 25 50 75 95 MY CY CM Buy Now $29.95* Cypress, the Cypress logo and PSoC are registered trademarks, and Programmable System-on-Chip, PSoC Express, and FirstTouch are trademarks of Cypress Semiconductor Corporation. -

CUDA NEW FEATURES and BEYOND Stephen Jones, GTC 2019 a QUICK LOOK BACK This Time Last Year

CUDA NEW FEATURES AND BEYOND Stephen Jones, GTC 2019 A QUICK LOOK BACK This Time Last Year... 3x3 ReLU convolution 5x5 input ReLU concat convolution max 1x1 ReLU pool convolution DGX-2 + Unified Memory Asynchronous Task Graphs S9241 – All You Need To Know About Programming NVIDIA’s DGX-2, Wednesday March 20, 1-2PM 2 ACCELERATED COMPUTING IS FULL-STACK OPTIMIZATION 2X More Performance With Software Optimizations Alone HPC Applications Speedup 24x 20x CUDA 10 CUBLAS 10 16x CUFFT 10 12x 2X CUDA 8 on same CUBLAS 8 hardware 8x CUFFT 8 4x 0x 1 2 3 4 5 2x Broadwell vs 4xP100 2x Broadwell vs 4xV100 3 HPC Apps: AMBER, Chroma, GROMACS, GTC, LAMMPS, MILC, NAMD, QE, RTM, SPECFEM3D, VASP TESLA UNIVERSAL ACCELERATION PLATFORM Single Platform To Drive Utilization and Productivity CUSTOMER USECASES Speech Translate Recommender Healthcare Manufacturing Finance Molecular Weather Seismic Simulations Forecasting Mapping CONSUMER INTERNET INDUSTRIAL APPLICATIONS SUPERCOMPUTING APPS & Amber +550 FRAMEWORKS Applications NAMD MACHINE LEARNING | RAPIDS DEEP LEARNING SUPERCOMPUTING NVIDIA SDK & LIBRARIES cuDF cuML cuGRAPH cuDNN cuBLAS CUTLASS NCCL TensorRT CuBLAS CuFFT OpenACC CUDA TESLA GPUs & SYSTEMS TESLA GPU VIRTUAL GPU NVIDIA DGX FAMILY NVIDIA HGX SYSTEM OEM CLOUD 4 TECHNOLOGY PLATFORM DEVELOPMENT TOOLKIT 5 NEW TURING GPU GREATEST LEAP SINCE 2006 CUDA GPU 6 TESLA T4 WORLD’S MOST ADVANCED SCALE-OUT GPU 320 Turing Tensor Cores 2,560 CUDA Cores 65 FP16 TFLOPS | 130 INT8 TOPS | 260 INT4 TOPS 16GB | 320GB/s 70 W Deep Learning Training & Inference HPC Workloads -

Joel Fernandes 4501 Sunset Drive, Joel Fernandes Charlottesville VA 23059 (214) 516 8050 Linux Kernel Engineer and Systems Engineer

Joel Fernandes 4501 Sunset Drive, Joel Fernandes Charlottesville VA 23059 (214) 516 8050 Linux Kernel Engineer and Systems Engineer. [email protected] EXPERIENCE SKILLS Programming: C, Assembly, Google, Mountain View — Linux Kernel Engineer Embedded C, Lisp, Ruby, SEPTEMBER 2016 - PRESENT Python, Perl, Erlang, LATEX, Android kernel team - Worked on Linux memory management. Verilog. Improved performance of virtual address space manipulations in Linux. Processors: ARM Cortex-A, Worked on EAS (Energy Aware Scheduling) patches of the Completely Cortex-M, ARM9, Amber core, Fair Scheduler (CFS) of the Linux kernel. Performed performance MSP430, x86, x86-64, 8051. OS analysis and improvement of Android’s Linux kernel including optimizing of Binder driver (Android’s IPC mechanism) and locking OS’s: Linux, Android, Windows. mechanisms. Worked on LISA tools to better trace and understand Debug Tools: GDB, KGDB, KDB, Linux kernel scheduler behavior. Worked on tools to detect Long Ftrace, SystemTap, ktap, Critical sections in the kernel, and upstreamed it. Improved CPU Lauterbach, OpenOCD, Eclipse, frequency scaling drivers, and upstreamed fixes and improvements. Wireshark. Other Tools Git, SVN, Vim, Emacs, cscope, Gnuplot, Amazon, Sunnyvale — Senior Systems Engineer Matlab, ModelSim, Pandas, AUGUST 2014 - AUGUST 2016 matplotlib. Worked on device bring up of various products including Amazon Echo, Show, Dot, Kindle etc. Fixed issues in MMC and PMIC driver stack by working closely with Hardware engineers. Worked on a mechanism to COMMUNITY write kernel function execution traces to PSTORE (persistent store) of Contributed patches to various the Linux kernel and upstreamed them. Fixed bugs in the Linux open source projects including kernel’s tracing infrastructure and upstreamed them. -

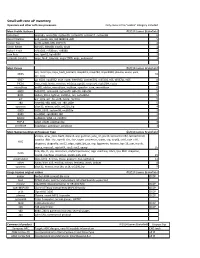

Small Soft Core up Inventory Opencore and Other Soft Core Processors Only Cores in the "Usable" Category Included

Small soft core uP Inventory Opencore and other soft core processors Only cores in the "usable" category included Most Prolific Authors ©2014 James Brakefield John Kent micro8a, micro16b, system05, system09, system11, system68 6 Daniel Wallner ax8, ppx16, t65, t80 (8080 & z80) 5 Shawn Tan ae18, aeMB, k68, DCPU16 4 Ulrich Riedel 68hc05, 68hc08, tiny64, tiny8 4 Robert Finch rtf65002, rtf68ksys, rtf8088 3 Jose Ruiz ion, light52, light8080 3 Lazaridis Dimitris mips_fault_tolerant, mipsr2000, mips_enhanced 3 Most Clones ©2014 James Brakefield ion, minimips, mips_fault_tolerant, misp32r1, misp789, mipsr2000, plasma, ucore, yacc, MIPS 10 m1_core 6502 ag_6502, cpu6502_true_cycle, free6502, lattice6502, m65c02, t65, t6507lp, m65 8 PIC16 free_risc8, lwrisc, minirisc, m16c5x, ppx16, recore54, risc16f84, risc5x 8 microblaze aeMB, mblite, microblaze, myblaze, openfire_core, secretblaze 6 6800 hd63701, system68, system05, 68hc05, 68hc08 5 8051 dalton_8051, light52, mc8051, t51, turbo8051 5 avr avr_core, avr_hp, avr8, navre, riscmcu 5 z80 nextz80, t80, tv80, wb_z80, y80e 5 openrisc altor32, minsoc, or1k, or1200_hp 4 6809 6809_6309, system09, mc6809e 3 8080 cpu8080, light8080, t80 3 68000 ao68000, tg68, v1_coldfire 3 PDP-8 pdp8, pdp8l, pdp8verilog 3 picoblaze copyblaze, pacoblaze, picoblaze 3 Most Numerous Original Processor Type ©2014 James Brakefield alwcpu, atlas_core, ba22, dcpu16, erp, gumnut, jane_nn, jpu16, latticemicro32, latticemicro8, natalius_8bit_risc, open8_risc, rise, sayeh_processor, scarts, szp, tiny64, xr16, cole_c16, RISC diogenes, dragonfly, -

Tesis (5.719Mb)

FRAMEWORK CONCEPTUAL PARA DESARROLLO DE INTERFACES MÓVILES EN SOLUCIONES IOT QUE PERMITAN APROPIACIÓN TECNOLÓGICA EN ZONAS RURALES ALEDAÑAS AL MUNICIPIO DE BUCARAMANGA DESDE LA PERSPECTIVA DE UX. ANDREA CATALINA MARTÍNEZ PINZÓN KEVIN JULIÁN VILLAMIZAR CALDERÓN UNIVERSIDAD AUTÓNOMA DE BUCARAMANGA FACULTAD DE INGENIERÍAS PROGAMA DE INGENIERÍA DE SISTEMAS BUCARAMANGA 2020 FRAMEWORK CONCEPTUAL PARA DESARROLLO DE INTERFACES MÓVILES EN SOLUCIONES IOT QUE PERMITAN APROPIACIÓN TECNOLÓGICA EN ZONAS RURALES ALEDAÑAS AL MUNICIPIO DE BUCARAMANGA DESDE LA PERSPECTIVA DE UX. ANDREA CATALINA MARTÍNEZ PINZÓN KEVIN JULIÁN VILLAMIZAR CALDERÓN TRABAJO DE GRADO PARA OPTAR POR EL TÍTULO DE INGENIERO DE SISTEMAS MsC. MARIA ALEXANDRA ESPINOSA CARREÑO Directora de proyecto MsC. LEIDY YOHANA FLÓREZ GÓMEZ Co-directora de proyecto UNIVERSIDAD AUTÓNOMA DE BUCARAMANGA FACULTAD DE INGENIERÍAS PROGAMA DE INGENIERÍA DE SISTEMAS BUCARAMANGA 2020 2 Nota de Aceptación Presidente del Jurado Jurado Jurado 3 Bucaramanga, 15 Mayo 2020 4 CONTENIDO Pág. INTRODUCCIÓN ................................................................................................... 14 2. OBJETIVOS ....................................................................................................... 16 2.1 OBJETIVO GENERAL .................................................................................. 16 2.2 OBJETIVOS ESPECÍFICOS ....................................................................... 16 3. PLANTEAMIENTO Y JUSTICACIÓN DEL PROBLEMA ................................... 17 4. ANTECEDENTES -

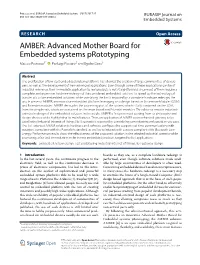

AMBER: Advanced Mother Board for Embedded Systems Prototyping Matteo Petracca1* , Pierluigi Passaro2 and Egidio Gioia3

Petracca et al. EURASIP Journal on Embedded Systems (2017) 2017:32 EURASIP Journal on DOI 10.1186/s13639-017-0080-z Embedded Systems RESEARCH Open Access AMBER: Advanced Mother Board for Embedded systems pRototyping Matteo Petracca1* , Pierluigi Passaro2 and Egidio Gioia3 Abstract The proliferation of low-cost embedded system platforms has allowed the creation of large communities of develo- pers, as well as the development of new advanced applications. Even though some of these applications can be of industrial relevance, their immediate application to real products is not straightforward since most of them require a complete and expensive hardware redesign of the considered embedded solution. To speed up the technological transfer of custom embedded solutions while overtaking the limits imposed by a complete hardware redesign, the article presents AMBER, an innovative embedded platform leveraging on a design based on System-on-Modules (SOM) and Extender modules. AMBER decouples the processing part of the system, which is fully contained on the SOM, from the peripherals, which are contained on the main board and Extender modules. This allows a smooth industrial- oriented redesign of the embedded solution. In the article, AMBER is first presented starting from its philosophy and design choices while highlighting its main features. Then, an application of AMBER as an enhanced gateway to be used in the Industrial Internet of Things (IIoT) scenario is reported by considering a monitoring and actuation use case. The IIoT-oriented AMBER solution is hardware and software configured to support real-time communications with actuators compliant with the Powerlink standard, as well as to interact with sensors compliant with Bluetooth Low Energy. -

A Survey of Open Processor Core Licensing 21

A Survey of Open Processor Core Licensing 21 A Survey of Open Processor Core Licensing Andrew Katz,a (a) Partner, Moorcrofts LLP DOI: 10.5033/ifosslr.v10i1.1 30 Abstract In the Spring of 2018, Western Digital Corporation commissioned Andrew Katz of Moorcrofts LLP to prepare a survey of open processor core licensing. This is an edited version of that report. Keywords Law; information technology; Free and Open Source Software; open hardware licensing; processor cores Foreword by Alan Tse, Western Digital Corporation: Western Digital’s relationship with open source has evolved significantly over the last decade. When I first joined Western Digital, our main focus was on open source compliance. That is because in 2009 we were one of the first major companies sued for our open source use. As a result, the main goal for the next few years was to prevent any litigation happening again and we viewed open source with some trepidation. Over time our view shifted as we had to learn about the importance of the open source community and how to be a good participant in that community. And over the years, we have increased our participation – not to avoid litigation, but because our own business interests have started to align. Over the years we have made multiple contributions to the Linux kernel and other open source projects and we have released internal tools that we thought others could use. Now that it has been almost a decade since that first lawsuit, we would like to think that we have learnt a bit more about the open source community and we are proud to say we are a part of that community.