Waveform Signal Entropy and Compression Study of Whole-Building Energy Datasets

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Lossless Audio Codec Comparison

Contents: Introduction; 1 CD-audio test; 1.1 CD's used; 1.2 Results all CD's together; 1.3 Interesting quirks; 1.3.1 Mono encoded as stereo (Dan Browns Angels and Demons); 1.3.2 Compressibility; 1.4 Convergence of the results; 2 High-resolution audio; 2.1 Nine Inch Nails' The Slip; 2.2 Howard Shore's soundtrack for The Lord of the Rings: The Return of the King; 2.3 Wasted bits; 3 Multichannel audio; 3.1 Howard Shore's soundtrack for The Lord of the Rings: The Return of the King; A Motivation for choosing these CDs; B Test setup; B.1 Scripting and graphing; B.2 Codecs and parameters used; B.3 MD5 checksumming; C Revision history; Bibliography.

Introduction: While testing the efficiency of lossy codecs can be quite cumbersome (as results differ for each person), comparing lossless codecs is much easier. As the last well documented and comprehensive test available on the internet has been a few years ago, I thought it would be a good idea to update. Beside comparing with CD-audio (which is often done to assess codec performance) and spitting out a grand total, this comparison also looks at extremes that occurred during the test and takes a look at 'high-resolution audio' and multichannel/surround audio. While the comparison was made to update the comparison-page on the FLAC website, it aims to be fair and unbiased.

Ardour Export Redesign

Ardour Export Redesign Thorsten Wilms [email protected] Revision 2 2007-07-17

Table of Contents: 1 Introduction; 2 Insights From a Survey; 2.1 Export When?; 2.2 Channel Count; 2.3 Requested File Types; 2.4 Sample Formats and Rates in Use; 2.5 Wish List; 2.5.1 More than one format at once; 2.5.2 Files per Track / Bus; 2.5.3 Optionally store timestamps; 2.6 General Problems; 3 Feature Requests; 3.1 Multichannel; 3.2 Individual Files; 3.3 Realtime Export; 3.4 Range and File Export History; 3.5 Running a Script; 3.6 Export Markers as Text; 4 The Current Dialog; 4.1 Time Span Selection; 4.2 Ranges; 4.3 File vs Directory Selection; 4.4 Container Types; 4.5 Endianness; 4.6 Channel Count; 4.7 Mapping Channels; 4.8 CD Marker Files; 4.9 Trimming; 4.10 Filename Conflicts; 4.11 Peaks; 4.12 Blocking JACK; 4.13 Does it have to be a dialog?; 5 Track Export; 6 MIDI; 7 Steps After Exporting; 7.1 Normalize; 7.2 Trim silence; 7.3 Encode; 7.4 Tag; 7.5 Upload; 7.6 Burn CD / DVD; 7.7 Backup / Archiving; 7.8 Authoring; 8 Container Formats; 8.1 libsndfile, currently offered for Export; 8.2 libsndfile, also interesting; 8.3 libsndfile, rather exotic; 8.4 Interesting; 8.4.1 BWF – Broadcast Wave Format; 8.4.2 Matroska; 8.5 Problematic; 8.6 Not of further interest; 8.7 Check (Todo); 9 Encodings; 9.1 Libsndfile supported; 9.2 Interesting; 9.3 Problematic; 9.4 Not of further interest; 10 Container / Encoding Combinations; 11 Elements; 11.1 Input; 11.2 Output; 12 Specification; 12.1 Core; 12.2 Layout; 12.3 Presets; 12.4 Speed; 12.5 Time span; 12.6 CD Marker Files; 12.7 Mapping; 12.8 Processing; 12.9 Container and Encodings; 12.10 Target Folder; 12.11 Filenames; 12.12 Multiplication; 12.13 Left out; 13 Credits; 14 Todo.

1 Introduction: The basic purpose of Ardour's export functionality is to create mixdowns of multitrack arrangements.

2 Insights From a Survey: I conducted a quick survey on the Linux Audio Users

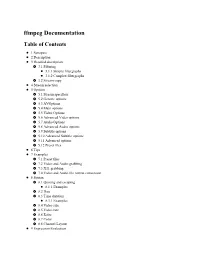

Ffmpeg Documentation Table of Contents

ffmpeg Documentation Table of Contents 1 Synopsis 2 Description 3 Detailed description 3.1 Filtering 3.1.1 Simple filtergraphs 3.1.2 Complex filtergraphs 3.2 Stream copy 4 Stream selection 5 Options 5.1 Stream specifiers 5.2 Generic options 5.3 AVOptions 5.4 Main options 5.5 Video Options 5.6 Advanced Video options 5.7 Audio Options 5.8 Advanced Audio options 5.9 Subtitle options 5.10 Advanced Subtitle options 5.11 Advanced options 5.12 Preset files 6 Tips 7 Examples 7.1 Preset files 7.2 Video and Audio grabbing 7.3 X11 grabbing 7.4 Video and Audio file format conversion 8 Syntax 8.1 Quoting and escaping 8.1.1 Examples 8.2 Date 8.3 Time duration 8.3.1 Examples 8.4 Video size 8.5 Video rate 8.6 Ratio 8.7 Color 8.8 Channel Layout 9 Expression Evaluation 10 OpenCL Options 11 Codec Options 12 Decoders 13 Video Decoders 13.1 rawvideo 13.1.1 Options 14 Audio Decoders 14.1 ac3 14.1.1 AC-3 Decoder Options 14.2 ffwavesynth 14.3 libcelt 14.4 libgsm 14.5 libilbc 14.5.1 Options 14.6 libopencore-amrnb 14.7 libopencore-amrwb 14.8 libopus 15 Subtitles Decoders 15.1 dvdsub 15.1.1 Options 15.2 libzvbi-teletext 15.2.1 Options 16 Encoders 17 Audio Encoders 17.1 aac 17.1.1 Options 17.2 ac3 and ac3_fixed 17.2.1 AC-3 Metadata 17.2.1.1 Metadata Control Options 17.2.1.2 Downmix Levels 17.2.1.3 Audio Production Information 17.2.1.4 Other Metadata Options 17.2.2 Extended Bitstream Information 17.2.2.1 Extended Bitstream Information - Part 1 17.2.2.2 Extended Bitstream Information - Part 2 17.2.3 Other AC-3 Encoding Options 17.2.4 Floating-Point-Only AC-3 Encoding -

Codec Is a Portmanteau of Either

What is a Codec? Codec is a portmanteau of either "Compressor-Decompressor" or "Coder-Decoder," which describes a device or program capable of performing transformations on a data stream or signal. Codecs encode a stream or signal for transmission, storage or encryption and decode it for viewing or editing. Codecs are often used in videoconferencing and streaming media solutions. A video codec converts analog video signals from a video camera into digital signals for transmission. It then converts the digital signals back to analog for display. An audio codec converts analog audio signals from a microphone into digital signals for transmission. It then converts the digital signals back to analog for playing. The raw encoded form of audio and video data is often called essence, to distinguish it from the metadata information that together make up the information content of the stream and any "wrapper" data that is then added to aid access to or improve the robustness of the stream. Most codecs are lossy, in order to get a reasonably small file size. There are lossless codecs as well, but for most purposes the almost imperceptible increase in quality is not worth the considerable increase in data size. The main exception is if the data will undergo more processing in the future, in which case the repeated lossy encoding would damage the eventual quality too much. Many multimedia data streams need to contain both audio and video data, and often some form of metadata that permits synchronization of the audio and video. Each of these three streams may be handled by different programs, processes, or hardware; but for the multimedia data stream to be useful in stored or transmitted form, they must be encapsulated together in a container format. -

Input Formats & Codecs

Input Formats & Codecs Pivotshare offers upload support to over 99.9% of codecs and container formats. Please note that video container formats are independent codec support. Input Video Container Formats (Independent of codec) 3GP/3GP2 ASF (Windows Media) AVI DNxHD (SMPTE VC-3) DV video Flash Video Matroska MOV (Quicktime) MP4 MPEG-2 TS, MPEG-2 PS, MPEG-1 Ogg PCM VOB (Video Object) WebM Many more... Unsupported Video Codecs Apple Intermediate ProRes 4444 (ProRes 422 Supported) HDV 720p60 Go2Meeting3 (G2M3) Go2Meeting4 (G2M4) ER AAC LD (Error Resiliant, Low-Delay variant of AAC) REDCODE Supported Video Codecs 3ivx 4X Movie Alaris VideoGramPiX Alparysoft lossless codec American Laser Games MM Video AMV Video Apple QuickDraw ASUS V1 ASUS V2 ATI VCR-2 ATI VCR1 Auravision AURA Auravision Aura 2 Autodesk Animator Flic video Autodesk RLE Avid Meridien Uncompressed AVImszh AVIzlib AVS (Audio Video Standard) video Beam Software VB Bethesda VID video Bink video Blackmagic 10-bit Broadway MPEG Capture Codec Brooktree 411 codec Brute Force & Ignorance CamStudio Camtasia Screen Codec Canopus HQ Codec Canopus Lossless Codec CD Graphics video Chinese AVS video (AVS1-P2, JiZhun profile) Cinepak Cirrus Logic AccuPak Creative Labs Video Blaster Webcam Creative YUV (CYUV) Delphine Software International CIN video Deluxe Paint Animation DivX ;-) (MPEG-4) DNxHD (VC3) DV (Digital Video) Feeble Files/ScummVM DXA FFmpeg video codec #1 Flash Screen Video Flash Video (FLV) / Sorenson Spark / Sorenson H.263 Forward Uncompressed Video Codec fox motion video FRAPS: -

Name Description Synopsis Options

xcfa_cli(1) Manual: 0.0.6 xcfa_cli(1) NAME xcfa_cli - This program is an implementation of xcfa in command line. DESCRIPTION xcfa_cli is an application for conversion, normalization, reconfiguring wav files and cut audio files ... What xcfa_cli can do: -replaygain on files: flac, mp3, ogg, wavpack -conversions: -from files: wav, flac, ape, wavpack, ogg, m4a, mpc, mp3, wma, shorten, rm, dts, aif, ac3 -to files: wav, flac, ape, wavpack, ogg, m4a, mpc, mp3, aac -conversion settings for file management: flac, ape, wavpack, ogg, m4a, aac, mpc, mp3 -management tags -management cue wav file -manipulation of the frequency, track and bit wav files -standardization on files: wav, mp3, ogg -cuts (split) wav files -displaying information on files SYNOPSIS xcfa_cli [ -i "file.*" ][ -d wav,mpc,... ][ OPTIONS ] OPTIONS --verbose Verbose mode -h --help Print help mode and quit -i <"file.type"> --input <"file.type"> Input name file to convert in inverted commas: --input "*.flac" Type input files: wav, flac, ape, wavpack, ogg, m4a, mpc, mp3, wma, shorten, rm, dts, aif, ac3 -o <path_dest/> --output <path_dest/> Destination folder. By default in the source file folder. -d <wav,flac,ape,...> --dest <wav,flac,ape,...> Destination file: wav, flac, ape, wavpack, ogg, m4a, mpc, mp3, aac -r --recursion Recursive search -e --ext2src Extract in the source folder. This option is useful with '--recursion' --nice <priority> Change the priority of running processes in the interval: 0 .. 20 Management options with default parameters: --op_flac <"-5"> --op_ape <"c2000"> --op_wavpack <"-y -j1"> 0.0.6 Thu, 06

Supported Codecs and Formats Codecs

Supported Codecs and Formats Codecs: D..... = Decoding supported .E.... = Encoding supported ..V... = Video codec ..A... = Audio codec ..S... = Subtitle codec ...I.. = Intra frame-only codec ....L. = Lossy compression .....S = Lossless compression ------- D.VI.. 012v Uncompressed 4:2:2 10-bit D.V.L. 4xm 4X Movie D.VI.S 8bps QuickTime 8BPS video .EVIL. a64_multi Multicolor charset for Commodore 64 (encoders: a64multi ) .EVIL. a64_multi5 Multicolor charset for Commodore 64, extended with 5th color (colram) (encoders: a64multi5 ) D.V..S aasc Autodesk RLE D.VIL. aic Apple Intermediate Codec DEVIL. amv AMV Video D.V.L. anm Deluxe Paint Animation D.V.L. ansi ASCII/ANSI art DEVIL. asv1 ASUS V1 DEVIL. asv2 ASUS V2 D.VIL. aura Auravision AURA D.VIL. aura2 Auravision Aura 2 D.V... avrn Avid AVI Codec DEVI.. avrp Avid 1:1 10-bit RGB Packer D.V.L. avs AVS (Audio Video Standard) video DEVI.. avui Avid Meridien Uncompressed DEVI.. ayuv Uncompressed packed MS 4:4:4:4 D.V.L. bethsoftvid Bethesda VID video D.V.L. bfi Brute Force & Ignorance D.V.L. binkvideo Bink video D.VI.. bintext Binary text DEVI.S bmp BMP (Windows and OS/2 bitmap) D.V..S bmv_video Discworld II BMV video D.VI.S brender_pix BRender PIX image D.V.L. c93 Interplay C93 D.V.L. cavs Chinese AVS (Audio Video Standard) (AVS1-P2, JiZhun profile) D.V.L. cdgraphics CD Graphics video D.VIL. cdxl Commodore CDXL video D.V.L. cinepak Cinepak DEVIL. cljr Cirrus Logic AccuPak D.VI.S cllc Canopus Lossless Codec D.V.L. -
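The six-character flag legend at the start of this listing can be decoded mechanically. The sketch below is only an illustration: it assumes each codec entry sits on its own line (the listing above has been flattened into one run), and `CodecEntry` and `parse_codec_line` are hypothetical names, not part of any tool.

```python
from dataclasses import dataclass

# Position 3 of the flag string identifies the codec type.
_KINDS = {"V": "video", "A": "audio", "S": "subtitle"}


@dataclass(frozen=True)
class CodecEntry:
    name: str
    description: str
    decoding: bool    # 'D' in position 1
    encoding: bool    # 'E' in position 2
    kind: str         # from position 3, or "unknown"
    intra_only: bool  # 'I' in position 4
    lossy: bool       # 'L' in position 5
    lossless: bool    # 'S' in position 6


def parse_codec_line(line: str) -> CodecEntry:
    """Parse one entry such as 'D.VI.S 8bps QuickTime 8BPS video'."""
    parts = line.split(None, 2)
    if len(parts) < 2 or len(parts[0]) != 6:
        raise ValueError(f"not a codec entry: {line!r}")
    flags, name = parts[0], parts[1]
    description = parts[2] if len(parts) > 2 else ""
    return CodecEntry(
        name=name,
        description=description,
        decoding=flags[0] == "D",
        encoding=flags[1] == "E",
        kind=_KINDS.get(flags[2], "unknown"),
        intra_only=flags[3] == "I",
        lossy=flags[4] == "L",
        lossless=flags[5] == "S",
    )


if __name__ == "__main__":
    for raw in ("D.VI.S 8bps QuickTime 8BPS video", "DEVIL. amv AMV Video"):
        print(parse_codec_line(raw))
```

Reading the flags by position rather than by searching for letters matters here, because `S` means "subtitle" in the third position and "lossless" in the sixth.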

Computer Audio Demystified 2.0 December 2012

Computer Audio Demystified 2.0 December 2012 What is Computer Audio? From iTunes®, streaming music and podcasts to watching YouTube videos, TV shows or movies, today’s computers are the hub that connects people to a vast universe of digital entertainment content. Computer audio playback can be as simple as playing those files or streams on a portable media player like an iPod®, iPad®, or a smart phone device such as the iPhone® or an Android™ phone. Or it can be as sophisticated as a component-based home entertainment system using a high-end Digital-Audio Converter (aka DAC). In this brave new frontier of computer-based digital audio, the current reality is that a lossless 16-bit/44.1kHz digital music file can sound far better than the CD it was ripped from when each is played in real time, and a high-resolution 24-bit/88.2kHz digital music file can truly compete with vinyl’s sonic beauty without our beloved vinyl’s flaws. Notice there is a bunch of “cans” in the line above. Great audio is possible in this new computer audio world, but it is not automatic. Just as adjusting the stylus rake angle properly is critical to getting the best performance from a turntable, knowledge is required to optimize the hardware and software that will define the performance boundaries of your computer audio experience. AudioQuest’s Computer Audio Demystified is a guide created to dispel some of the myths about computer audio and establish some “best practices” in streaming, acquiring and managing digital music. -

Performance of Lossless Audio Encoders and a Tool That Simplifies Their Analysis

PERFORMANCE OF LOSSLESS AUDIO ENCODERS AND A TOOL THAT SIMPLIFIES THEIR ANALYSIS Marengo Rodriguez, F. A.; Roveri, E. A.; Rodríguez Guerrero, J. M.; Treffiló, M.; Miyara, F. Laboratorio de Acústica y Electroacústica, Escuela de Ingeniería Electrónica, Facultad de Ciencias Exactas, Ingeniería y Agrimensura, Universidad Nacional de Rosario, Rosario. E-mail: [email protected] Abstract – Owing to the greater transmission capacity and storage resources now available, many users of audio playback equipment have turned to lossless audio coding in place of perceptual coding (that is, coding such as MP3 that discards information not perceived by the average ear). Many comparisons among lossless codecs have been made so far, but none of them indicates which codec is best for compressing a given audio file in stereo PCM format. This work aims to fill that need with an exhaustive comparison of several popular codecs in terms of compression factor and encoding speed, for musical pieces of different genres taken from commercial CDs. The genres analyzed are folk, tango, classical music, rock and jazz. Live rock concerts were also included as an additional category, in order to evaluate the influence of ambient noise on coding performance. This work shows that the compression achieved depends essentially on the musical genre, and that each encoder is characterized by its speed. This is due to a technological basis common to all the codecs analyzed, consisting of predictors and entropy-coding blocks.

Video Encoding with Open Source Tools

Video Encoding with Open Source Tools. Steffen Bauer, 26/1/2010, LUG Linux User Group Frankfurt am Main.

Overview: Basic concepts of video compression; Video formats and codecs; How to do it with Open Source and Linux.

1. Basic concepts of video compression

Characteristics of video streams

Framerate: Number of still pictures per unit of time of video; up to 120 frames/s for professional equipment. PAL video specifies 25 frames/s.

Interlacing / Progressive Video: Interlaced: Lines of one frame are drawn alternatively in two half-frames. Progressive: All lines of one frame are drawn in sequence.

Resolution: Size of a video image (measured in pixels for digital video). 768/720×576 for PAL resolution. Up to 1920×1080p for HDTV resolution.

Aspect Ratio: Dimensions of the video screen; ratio between width and height. Pixels used in digital video can have non-square aspect ratios! Usual ratios are 4:3 (traditional TV) and 16:9 (anamorphic widescreen).

Why video encoding? Example: 52 seconds of DVD PAL movie (16:9, 720x576p, 25 fps, progressive scan)

| Video codec | Raw Size | Compression factor | Comment |
| --- | --- | --- | --- |
| Raw frames | 1.1 GB | - | 1300 single frames, MotionTarga, uncompressed |
| HUFFYUV | 459 MB | 2.2 / 55% | Lossless compression |
| MJPEG | 60 MB | 20 / 95% | Motion JPEG; lossy; intraframe only |
| lavc MPEG-2 | 24 MB | 50 / 98% | Standard DVD quality |
| X.264 MPEG-4 AVC | 5.3 MB | 200 / 99.5% | High efficient video codec |

Basic principles of multimedia encoding

Video compression: Lossy compression (irreversible; using shortcomings in human perception). Lossless (reversible; statistical encoding). Intraframe encoding
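The paired figures quoted in the example above (2.2 / 55%, 20 / 95%, 50 / 98%, 200 / 99.5%) appear to relate a compression factor to the fraction of space saved. A minimal sketch of that relation, assuming saving = 1 - 1/factor; the function name `space_saving` is hypothetical and the slides do not state the formula explicitly:

```python
def space_saving(factor: float) -> float:
    """Fraction of the original size removed at a given compression factor.

    Assumes factor = original_size / compressed_size, so the compressed
    file occupies 1/factor of the original.
    """
    if factor <= 0:
        raise ValueError("compression factor must be positive")
    return 1.0 - 1.0 / factor


if __name__ == "__main__":
    # Factors taken from the example; the percentages in the slides are rounded.
    for factor in (2.2, 20, 50, 200):
        print(f"factor {factor}: {space_saving(factor) * 100:.1f}% smaller")
```

Under this assumption a factor of 2.2 gives about 54.5%, which matches the 55% quoted once rounded. The factors themselves look rounded relative to the listed file sizes, so they are best read as approximate.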

Design Considerations for Audio Compression Alexander Benjamin

Design Considerations for Audio Compression Alexander Benjamin 8/01/10 The world of music is very different than it was ten years ago, which was markedly changed from ten years before that. Just as the ways in which people listen to music have evolved steadily over the last couple of decades, so have the demands and considerations of music listeners. People want music to have optimal sound quality while requiring as little space as possible (so they can listen to it on an MP3 player, for example). This paper will discuss the considerations and tradeoffs that go into audio compression as a determinant of audio quality and the amount of memory that the file occupies. Music files are compressed to reduce the amount of data needed to play a song while minimizing the loss (if any) of perceptible sound quality. A decade ago, hard drive storage was much more expensive, and so the norm was to compress music. With the advent of digital audio players (MP3 players), compression is desired so that people can fit more songs onto a device. In addition, music compression is very useful for transferring the data over the internet or between devices. While download and transfer speeds have increased notably over the years, it takes much longer to download an uncompressed music file over the internet in comparison to a compressed one. Different types of compression algorithms have been developed that aim to reduce or alter the digital audio data in order to reduce the number of bits needed for playback. The compression process is called encoding while the reverse is called decoding. -

Supported Codecs and Format of Their Codecprivate Blocks

Supported codecs and format of their CodecPrivate blocks Video (MEDIATYPE_Video) CodecID CodecPrivate format DirectShow mediatype format V_MS/VFW/FOURCC BITMAPINFOHEADER structure Subtype FOURCCMap(dwCompression) Avi compatibility, followed by opaque codec init data Format VideoInfo/VideoInfo2 any VfW codec can CodecPrivate is copied verbatim to the format block starting at the bmiHeader be stored in member of VIDEOINFOHEADER/VIDEOINFOHEADER2 Matroska using this mode V_REAL/RVxy Opaque codec init data Subtype FOURCCMap(‘R’,’V’,x,y) RealVideo, xx is the Format VideoInfo/VideoInfo2 codec version (two CodecPrivate is appended to VIDEOINFOHEADER/VIDEOINFOHEADER2 decimal digits) V_MPEG1 MPEG-1 sequence header extracted Subtype: MPEG1Payload ISO/IEC 11172-2 from elementary stream, the stream Format: MPEGVideo itself does not contain any sequence/gop CodecPrivate is copied to the format block starting at bSequenceHeader member headers anymore of MPEG1VIDEOINFO V_MPEG2 MPEG-2 sequence header extracted Subtype MPEG2_VIDEO ISO/IEC 13818-2 from elementary stream, the stream Format MPEG2Video itself does not contain any sequence/gop CodecPrivate is copied to the format block starting at dwSequence header headers anymore member of MPEG2VIDEOINFO V_THEORA Xiph-lace-encoded sizes of two initial Subtype FOURCCMap(‘THEO’) Theora video codec theora packets followed by three Format MPEG2Video packets. Size of the last packet is Three big-endian 16-bit words with packet sizes followed by the packets calculated from the total CodecPrivate themselves are stored