22C/55:181 1 Predicate Logic Definition

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Verifying the Unification Algorithm In

Verifying the Unification Algorithm in LCF. Lawrence C. Paulson, Computer Laboratory, Corn Exchange Street, Cambridge CB2 3QG, England. August 1984. Abstract. Manna and Waldinger's theory of substitutions and unification has been verified using the Cambridge LCF theorem prover. A proof of the monotonicity of substitution is presented in detail, as an example of interaction with LCF. Translating the theory into LCF's domain-theoretic logic is largely straightforward. Well-founded induction on a complex ordering is translated into nested structural inductions. Correctness of unification is expressed using predicates for such properties as idempotence and most-generality. The verification is presented as a series of lemmas. The LCF proofs are compared with the original ones, and with other approaches. It appears difficult to find a logic that is both simple and flexible, especially for proving termination. Contents: 1 Introduction; 2 Overview of unification; 3 Overview of LCF; 3.1 The logic PPLAMBDA; 3.2 The meta-language ML; 3.3 Goal-directed proof; 3.4 Recursive data structures; 4 Differences between the formal and informal theories; 4.1 Logical framework; 4.2 Data structure for expressions; 4.3 Sets and substitutions; 4.4 The induction principle; 5 Constructing theories in LCF; 5.1 Expressions -

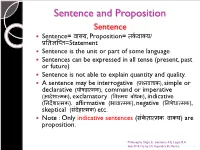

Logic- Sentence and Propositions

Sentence and Proposition. (Proposition = Statement.) Sentence: A sentence is a unit or part of some language. Sentences can be expressed in any tense (present, past or future). A sentence cannot express quantity and quality. A sentence may be interrogative, simple or declarative, a command or imperative, exclamatory, indicative, affirmative, negative, skeptical, etc. Note: only indicative sentences are propositions. (Philosophy Dept, E-contents-01 (Logic-B.A Sem. III & IV) by Dr Rajendra Kr. Verma.) Not all kinds of sentences are propositions; only those sentences that can be evaluated as true or false are called propositions. Sentences are governed by the grammar of their own language (for example, sentences of Hindi are governed by Hindi grammar). Sentences are correct or incorrect, pure or impure. Sentences may be either true or false. Proposition: Propositions are regarded as the material of our reasoning, and propositions and statements are regarded as the same. The proposition is the unit of logic. A proposition always comes in the present tense (sentences come in all tenses). A proposition can express quantity and quality (sentences cannot). The meaning of a sentence is called a proposition. Sometimes more than one sentence can express a single proposition. Example: 1. पानी बरस रहा है (Hindi) 2. ऩावुष तऩड़तोत (Sanskrit) 3. It is raining (English). All of the above sentences have only one meaning, that is, one proposition. -

First Order Logic and Nonstandard Analysis

First Order Logic and Nonstandard Analysis. Julian Hartman. September 4, 2010. Abstract: This paper is intended as an exploration of nonstandard analysis, and the rigorous use of infinitesimals and infinite elements to explore properties of the real numbers. I first define and explore first order logic, and model theory. Then, I prove the compactness theorem, and use this to form a nonstandard structure of the real numbers. Using this nonstandard structure, it is easy to do various proofs without the use of limits that would otherwise require their use. Contents: 1 Introduction; 2 An Introduction to First Order Logic; 2.1 Propositional Logic; 2.2 Logical Symbols; 2.3 Predicates, Constants and Functions; 2.4 Well-Formed Formulas; 3 Models; 3.1 Structure; 3.2 Truth; 3.2.1 Satisfaction; 4 The Compactness Theorem; 4.1 Soundness and Completeness; 5 Nonstandard Analysis; 5.1 Making a Nonstandard Structure; 5.2 Applications of a Nonstandard Structure; 6 Sources. 1 Introduction. The founders of modern calculus had a less than perfect understanding of the nuts and bolts of what made it work. Both Newton and Leibniz used the notion of infinitesimal, without a rigorous understanding of what they were. Infinitely small real numbers that were still not zero were a hard thing for mathematicians to accept, and with the rigorous development of limits by the likes of Cauchy and Weierstrass, the discussion of infinitesimals subsided. Now, using first order logic for nonstandard analysis, it is possible to create a model of the real numbers that has the same properties as the traditional conception of the real numbers, but also has rigorously defined infinite and infinitesimal elements. -

Unification: a Multidisciplinary Survey

Unification: A Multidisciplinary Survey. KEVIN KNIGHT, Computer Science Department, Carnegie-Mellon University, Pittsburgh, Pennsylvania 15213-3890. The unification problem and several variants are presented. Various algorithms and data structures are discussed. Research on unification arising in several areas of computer science is surveyed; these areas include theorem proving, logic programming, and natural language processing. Sections of the paper include examples that highlight particular uses of unification and the special problems encountered. Other topics covered are resolution, higher order logic, the occur check, infinite terms, feature structures, equational theories, inheritance, parallel algorithms, generalization, lattices, and other applications of unification. The paper is intended for readers with a general computer science background; no specific knowledge of any of the above topics is assumed. Categories and Subject Descriptors: E.1 [Data Structures]: Graphs; F.2.2 [Analysis of Algorithms and Problem Complexity]: Nonnumerical Algorithms and Problems: Computations on discrete structures, Pattern matching; I.1.3 [Algebraic Manipulation]: Languages and Systems; I.2.3 [Artificial Intelligence]: Deduction and Theorem Proving; I.2.7 [Artificial Intelligence]: Natural Language Processing. General Terms: Algorithms. Additional Key Words and Phrases: Artificial intelligence, computational complexity, equational theories, feature structures, generalization, higher order logic, inheritance, lattices, logic programming, natural language -
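
The excerpt above mentions first-order unification and the occur check. As a rough illustration only, here is a minimal Python sketch of syntactic unification. It is not taken from the survey. The term encoding is an assumption made for this example: variables are capitalised strings, constants are lower-case strings, and compound terms are tuples of the form `(symbol, arg1, ...)`. The helper names `walk`, `occurs`, `unify` and `resolve` are hypothetical.

```python
def is_var(t):
    """Assumed convention: a variable is a string starting with an upper-case letter."""
    return isinstance(t, str) and t[:1].isupper()

def walk(t, subst):
    """Follow variable bindings until reaching a non-variable or an unbound variable."""
    while is_var(t) and t in subst:
        t = subst[t]
    return t

def occurs(v, t, subst):
    """Occur check: does variable v appear inside term t under subst?"""
    t = walk(t, subst)
    if t == v:
        return True
    if isinstance(t, tuple):
        return any(occurs(v, arg, subst) for arg in t[1:])
    return False

def unify(s, t, subst=None):
    """Return a substitution (dict) unifying s and t, or None if none exists."""
    subst = {} if subst is None else subst
    s, t = walk(s, subst), walk(t, subst)
    if s == t:
        return subst
    if is_var(s):
        return None if occurs(s, t, subst) else {**subst, s: t}
    if is_var(t):
        return unify(t, s, subst)
    if (isinstance(s, tuple) and isinstance(t, tuple)
            and s[0] == t[0] and len(s) == len(t)):
        for a, b in zip(s[1:], t[1:]):
            subst = unify(a, b, subst)
            if subst is None:
                return None
        return subst
    return None  # clash between different symbols or arities

def resolve(t, subst):
    """Apply the substitution to t all the way down."""
    t = walk(t, subst)
    if isinstance(t, tuple):
        return (t[0],) + tuple(resolve(arg, subst) for arg in t[1:])
    return t

left = ("f", "X", ("g", "Y"))
right = ("f", "a", ("g", "X"))
theta = unify(left, right)
print(resolve(left, theta) == resolve(right, theta))  # True
print(unify("X", ("f", "X")))                         # None (occur check fails)
```

The substitution is kept in "triangular" form (a binding may point at another bound variable), which is why `resolve` is needed before comparing results. This is one common design choice among several.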

First-Order Logic

Lecture 14: First-Order Logic 1 Need For More Than Propositional Logic In normal speaking we could use logic to say something like: If all humans are mortal (α) and all Greeks are human (β) then all Greeks are mortal (γ). So if we have α = (H → M) and β = (G → H) and γ = (G → M) (where H, M, G are propositions) then we could say that ((α ∧ β) → γ) is valid. That is called a Syllogism. This is good because it allows us to focus on a true or false statement about one person. This kind of logic (propositional logic) was adopted by Boole (Boolean Logic) and was the focus of our lectures so far. But what about using “some” instead of “all” in β in the statements above? Would the statement still hold? (After all, Zeus had many children that were half gods and Greeks, so they may not necessarily be mortal.) We would then need to say that “Some Greeks are human” implies “Some Greeks are mortal”. Unfortunately, there is really no way to express this in propositional logic. This points out that we really have been abstracting out the concept of all in propositional logic. It has been assumed in the propositions. If we want to now talk about some, we must make this concept explicit. Also, we should be able to reason that if Socrates is Greek then Socrates is mortal. Also, we should be able to reason that Plato’s teacher, who is Greek, is mortal. First-Order Logic allows us to do this by writing formulas like the following. -
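
To make the "all" versus "some" distinction in the excerpt above concrete, the following Python sketch brute-forces every interpretation of three unary predicates over a small finite domain. It is an illustration under stated assumptions, not part of the lecture: the names `subsets`, `valid`, `all_syllogism` and `some_variant` are hypothetical, and checking a three-element domain can only exhibit counter-models, it does not prove validity in general.

```python
from itertools import combinations, product

def subsets(domain):
    """All subsets of the domain, each as a frozenset."""
    items = list(domain)
    return [frozenset(c)
            for r in range(len(items) + 1)
            for c in combinations(items, r)]

def valid(formula, domain):
    """True if formula holds for every choice of Greeks G, humans H, mortals M."""
    return all(formula(G, H, M)
               for G, H, M in product(subsets(domain), repeat=3))

def all_syllogism(G, H, M):
    """(all H are M) and (all G are H) implies (all G are M)."""
    alpha = all(x in M for x in H)
    beta = all(x in H for x in G)
    gamma = all(x in M for x in G)
    return (not (alpha and beta)) or gamma

def some_variant(G, H, M):
    """(all H are M) and (some G are H) implies (all G are M)."""
    alpha = all(x in M for x in H)
    beta = any(x in H for x in G)
    gamma = all(x in M for x in G)
    return (not (alpha and beta)) or gamma

DOMAIN = range(3)
print(valid(all_syllogism, DOMAIN))  # True: no counter-model of this size
print(valid(some_variant, DOMAIN))   # False: a counter-model exists
```

One counter-model for the second form is G = {0, 1}, H = {0}, M = {0}: some Greek is human and every human is mortal, yet individual 1 is a Greek who is not mortal.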

Accuracy Vs. Validity, Consistency Vs. Reliability, and Fairness Vs

Accuracy vs. Validity, Consistency vs. Reliability, and Fairness vs. Absence of Bias: A Call for Quality. Paper Presented at the Annual Meeting of the American Association of Colleges of Teacher Education (AACTE), New Orleans, LA, February 2008. W. Steve Lang, Ph.D., Professor, Educational Measurement and Research, University of South Florida St. Petersburg. Email: [email protected] Judy R. Wilkerson, Ph.D., Associate Professor, Research and Assessment, Florida Gulf Coast University. Email: [email protected] Abstract: The National Council for Accreditation of Teacher Education (NCATE, 2002) requires teacher education units to develop assessment systems and evaluate both the success of candidates and unit operations. Because of a stated, but misguided, fear of statistics, NCATE fails to use accepted terminology to assure the quality of institutional evaluative decisions with regard to the relevant standard (#2). Instead of “validity” and “reliability,” NCATE substitutes “accuracy” and “consistency.” NCATE uses the accepted terms of “fairness” and “avoidance of bias” but confuses them with each other and with validity and reliability. It is not surprising, therefore, that this Standard is the most problematic standard in accreditation decisions. This paper seeks to clarify the terms, using scholarly work and measurement standards as a basis for differentiating and explaining the terms. The paper also provides examples to demonstrate how units can seek evidence of validity, reliability, and fairness with either statistical or non-statistical methodologies, disproving the NCATE assertion that statistical methods provide the only sources of evidence. The lack of adherence to professional assessment standards and the knowledge base of the educational profession in both the rubric and web-based resource materials for this standard are discussed. -

Algebraic Structuralism

Algebraic structuralism December 29, 2016 1 Introduction This essay is about how the notion of “structure” in ontic structuralism might be made precise. More specifically, my aim is to make precise the idea that the structure of the world is (somehow) given by the relations inhering in the world, in such a way that the relations are ontologically prior to their relata. The central claim is the following: one can do so by giving due attention to the relationships that hold between those relations, by making use of certain notions from algebraic logic. In the remainder of this introduction, I sketch two motivations for structuralism, and make some preliminary remarks about the relationship between structuralism and ontological dependence; in the next section, I outline my preferred way of unpacking the notion of “structure”; in §3, I compare this view to Dasgupta’s algebraic generalism; and finally, I evaluate the view, by considering how well it can be defended against objections, and how well it discharges the motivations with which we began. 1.1 Motivations for structuralism I don’t intend to spend a lot of time discussing the motivations for structuralism: this essay is more about what the best way might be to understand structuralism, rather than about whether or not structuralism is a compelling position. Nevertheless, whether a given proposal is the best way to understand structuralism will clearly depend on the extent to which that proposal recovers motivations for structuralism and renders them compelling. So in this section, I outline -

Modularity of Simple Termination of Term Rewriting Systems with Shared Constructors

Theoretical Computer Science 103 (1992) 273-282, Elsevier. Modularity of simple termination of term rewriting systems with shared constructors. Masahito Kurihara and Azuma Ohuchi, Department of Information Engineering, Hokkaido University, Sapporo, 060 Japan. Communicated by M. Takahashi. Received August 1990; revised March 1991. Abstract. Kurihara, M. and A. Ohuchi, Modularity of simple termination of term rewriting systems with shared constructors, Theoretical Computer Science 103 (1992) 273-282. A term rewriting system is simply terminating if there exists a simplification ordering showing its termination. Let R0 and R1 be term rewriting systems which share no defined symbol (but may share constructors). Constructors are function symbols which do not occur at the leftmost position in left-hand sides of rewrite rules; the rest of the function symbols are defined symbols. In this paper, we prove that R0 ∪ R1 is simply terminating if and only if both R0 and R1 are so. 1. Introduction. A property P of term rewriting systems (TRSs) [4,6] is modular if R0 ∪ R1 has the property P if and only if both R0 and R1 have that property. Starting with Toyama [14], several authors studied modular aspects of TRSs under the disjointness condition, which requires that R0 and R1 share no function symbols. Toyama [14] proved the modularity of confluence. Middeldorp [9] studied the modularity of three properties related to normal forms. In [15] Toyama refuted the modularity of termination. Barendregt and Klop refuted the modularity of completeness (i.e., termination of confluent systems). -
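
The split between constructors and defined symbols described in the abstract above can be sketched in a few lines of Python. This is only an illustration under assumptions of this example: variables are plain strings, other terms are tuples `(symbol, arg1, ...)`, a rule is a pair `(lhs, rhs)`, and the function names and the two sample systems `r0` and `r1` are hypothetical. The sharing check reads "share no defined symbol" as "no symbol common to both systems is defined in either one", which is one interpretation, not a quotation of the paper's formal definition.

```python
def function_symbols(term):
    """Set of function symbols occurring in a term (variables are plain strings)."""
    if isinstance(term, str):
        return set()
    head, *args = term
    result = {head}
    for arg in args:
        result |= function_symbols(arg)
    return result

def signature(rules):
    """All function symbols used anywhere in a list of (lhs, rhs) rules."""
    symbols = set()
    for lhs, rhs in rules:
        symbols |= function_symbols(lhs) | function_symbols(rhs)
    return symbols

def defined_symbols(rules):
    """Symbols at the leftmost (root) position of some left-hand side."""
    return {lhs[0] for lhs, _ in rules}

def constructors(rules):
    """Every other function symbol of the system."""
    return signature(rules) - defined_symbols(rules)

def share_only_constructors(rules_a, rules_b):
    """True if no symbol common to both systems is defined in either one."""
    shared = signature(rules_a) & signature(rules_b)
    return shared.isdisjoint(defined_symbols(rules_a) | defined_symbols(rules_b))

zero = ("0",)
# r0: add(0, y) -> y ; add(s(x), y) -> s(add(x, y))
r0 = [(("add", zero, "y"), "y"),
      (("add", ("s", "x"), "y"), ("s", ("add", "x", "y")))]
# r1: double(0) -> 0 ; double(s(x)) -> s(s(double(x)))
r1 = [(("double", zero), zero),
      (("double", ("s", "x")), ("s", ("s", ("double", "x"))))]

print(sorted(defined_symbols(r0)))      # ['add']
print(sorted(constructors(r0)))         # ['0', 's']
print(share_only_constructors(r0, r1))  # True
```

Here the two systems share only `0` and `s`, both of which are constructors in each system.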

Traditional Logic and Computational Thinking

philosophies. Article. Traditional Logic and Computational Thinking. J.-Martín Castro-Manzano, Faculty of Philosophy, UPAEP University, Puebla 72410, Mexico; [email protected] Abstract: In this contribution, we try to show that traditional Aristotelian logic can be useful (in a non-trivial way) for computational thinking. To achieve this objective, we argue in favor of two statements: (i) that traditional logic is not classical and (ii) that logic programming emanating from traditional logic is not classical logic programming. Keywords: syllogistic; aristotelian logic; logic programming. 1. Introduction. Computational thinking, like critical thinking [1], is a sort of general-purpose thinking that includes a set of logical skills. Hence logic, as a scientific enterprise, is an integral part of it. However, unlike critical thinking, when one checks what “logic” means in the computational context, one cannot help but be surprised to find out that it refers primarily, and sometimes even exclusively, to classical logic (cf. [2–7]). Classical logic is the logic of Boolean operators, the logic of digital circuits, the logic of Karnaugh maps, the logic of Prolog. It is the logic that results from discarding the traditional, Aristotelian logic in order to favor the Fregean–Tarskian paradigm. Classical logic is the logic that defines the standards we typically assume when we teach, research, or apply logic: it is the received view of logic. This state of affairs, of course, has an explanation: classical logic works wonders! However, this is not, by any means, enough warrant to justify the absence or the abandon of traditional logic within the computational thinking literature. Citation: Castro-Manzano, J.-M. -

First Order Syntax

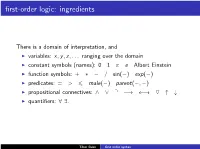

first-order logic: ingredients. There is a domain of interpretation, and: variables x, y, z, ... ranging over the domain; constant symbols (names): 0, 1, π, e, Albert Einstein; function symbols: +, ∗, −, /, sin(−), exp(−); predicates: =, >, ≤, male(−), parent(−, −); propositional connectives: ∧, ∨, ¬, →, ↑, ↓; quantifiers: ∀, ∃. (Tibor Beke, first order syntax.) sentences: Let the domain of interpretation be the set of real numbers. ∀a ∀b ∀c ∃x (ax² + bx + c = 0). This formula is an example of a sentence from first-order logic. It has no free variables: every one of the variables a, b, c, x is in the scope of a quantifier. A sentence has a well-defined truth value that can be discovered (at least, in principle). The sentence above (interpreted over the real numbers) is False. sentences: Let the domain of discourse be a set of people, let F(x) mean “x is female” and let P(x, y) mean “x is a parent of y”. … “Every woman has two children” but is, in fact, logically equivalent to every woman having at least one child. That child could play the role of both y and z. -
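
The excerpt above states that the quadratic sentence (for all real a, b, c there exists a real x with ax² + bx + c = 0) is false over the real numbers. A universally quantified sentence is refuted by a single counterexample, and the Python sketch below checks one. The function name `has_real_root` and the chosen coefficients are assumptions of this example, not part of the slides.

```python
def has_real_root(a, b, c):
    """True if a*x**2 + b*x + c == 0 has at least one real solution x."""
    if a != 0:
        # Genuine quadratic: a real root exists iff the discriminant is non-negative.
        return b * b - 4 * a * c >= 0
    if b != 0:
        # Linear equation b*x + c == 0 always has the solution x = -c / b.
        return True
    # Constant equation c == 0: solvable (by any x) only when c is zero.
    return c == 0

# Counterexample to the universal claim: x**2 + 1 = 0 has no real solution.
print(has_real_root(1, 0, 1))   # False
# The existential part does hold for other coefficients, e.g. x**2 - 1 = 0.
print(has_real_root(1, 0, -1))  # True
```

The degenerate choice a = 0, b = 0, c = 1 is an even simpler counterexample, since the equation then reads 1 = 0.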

Programming with Term Logic

Programming with Term Logic. J. Martín Castro-Manzano, Faculty of Philosophy & Humanities, UPAEP, Av 9 Pte 1908, Barrio de Santiago, 72410 Puebla, Pue., Mexico, +52 (222) 229 94 00, [email protected] L. Ignacio Lozano-Cobos, Faculty of Philosophy & Humanities, UPAEP, Av 9 Pte 1908, Barrio de Santiago, 72410 Puebla, Pue., Mexico, +52 (222) 229 94 00, [email protected] Paniel O. Reyes-Cárdenas, Faculty of Philosophy & Humanities, UPAEP, Av 9 Pte 1908, Barrio de Santiago, 72410 Puebla, Pue., Mexico, +52 (222) 229 94 00, [email protected] Abstract: Given the core tenets of Term Functor Logic and Aristotelian Databases, in this contribution we present the current advances of a novel logic programming language we call Term Functor Logic Programming Language. Keywords: Syllogistic, logic programming, Aristotelian Database. 1. Introduction. Under direct influence of (Sommers, 1967; Sommers, 1982; Sommers & Englebretsen, 2000; Thompson, 1982; Mostowski, 1957), we have elsewhere offered an intermediate term logic for relational syllogistic that is able to deal with a wide range of common sense inference patterns (we call it the system TFL+); and under direct influence of (Englebretsen, 1987; Englebretsen, 1991; Englebretsen, 1996; Englebretsen & Sayward, 2011) and TFL+, we have elsewhere presented the diagrammatic counterpart of TFL+ so as to perform visual reasoning (we call it the system TFL⊕) (Castro-Manzano & Pacheco-Montes, 2018). Now, under the influence of this couple of systems and the notion of Aristotelian Database (Mozes, 1989), here we introduce the current advances of a novel logic programming language we call Term Functor Logic Programming Language (TFLPL). -

Nominal Unification

Theoretical Computer Science 323 (2004) 473–497, www.elsevier.com/locate/tcs. Nominal unification. Christian Urban (a), Andrew M. Pitts (a,*), Murdoch J. Gabbay (b). (a) University of Cambridge, Marconi Laboratory, William Gates Bldng, JJ Thomson Ave., Cambridge CB3 0FD, UK; (b) INRIA, Paris, France. Received 3 October 2003; received in revised form 8 April 2004; accepted 11 June 2004. Communicated by P.L. Curien. Abstract: We present a generalisation of first-order unification to the practically important case of equations between terms involving binding operations. A substitution of terms for variables solves such an equation if it makes the equated terms α-equivalent, i.e. equal up to renaming bound names. For the applications we have in mind, we must consider the simple, textual form of substitution in which names occurring in terms may be captured within the scope of binders upon substitution. We are able to take a “nominal” approach to binding in which bound entities are explicitly named (rather than using nameless, de Bruijn-style representations) and yet get a version of this form of substitution that respects α-equivalence and possesses good algorithmic properties. We achieve this by adapting two existing ideas. The first one is terms involving explicit substitutions of names for names, except that here we only use explicit permutations (bijective substitutions). The second one is that the unification algorithm should solve not only equational problems, but also problems about the freshness of names for terms. There is a simple generalisation of classical first-order unification problems to this setting which retains the latter’s pleasant properties: unification problems involving α-equivalence and freshness are decidable; and solvable problems possess most general solutions.
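
The two ingredients named in the abstract above, name permutations and freshness, can be illustrated on ground terms with a short Python sketch that decides equality up to renaming of bound names (α-equivalence). It is not the paper's unification algorithm: there are no unification variables here. The term encoding is an assumption of this example: atoms are strings, `("abs", a, body)` binds atom `a`, and `("app", t, u)` is an application. The names `swap`, `fresh` and `alpha_eq` are hypothetical.

```python
def swap(a, b, term):
    """Apply the transposition (a b) to every atom in term, binders included."""
    if isinstance(term, str):
        return b if term == a else a if term == b else term
    if term[0] == "abs":
        _, bound, body = term
        return ("abs", swap(a, b, bound), swap(a, b, body))
    _, left, right = term
    return ("app", swap(a, b, left), swap(a, b, right))

def fresh(a, term):
    """Freshness a # term: atom a does not occur free in term."""
    if isinstance(term, str):
        return a != term
    if term[0] == "abs":
        _, bound, body = term
        return a == bound or fresh(a, body)
    _, left, right = term
    return fresh(a, left) and fresh(a, right)

def alpha_eq(t, u):
    """Decide whether two ground terms are equal up to renaming bound atoms."""
    if isinstance(t, str) or isinstance(u, str):
        return t == u
    if t[0] != u[0]:
        return False
    if t[0] == "abs":
        _, a, t_body = t
        _, b, u_body = u
        if a == b:
            return alpha_eq(t_body, u_body)
        # Different binders: a must be fresh for u_body, then compare after swapping.
        return fresh(a, u_body) and alpha_eq(t_body, swap(a, b, u_body))
    return alpha_eq(t[1], u[1]) and alpha_eq(t[2], u[2])

identity_a = ("abs", "a", "a")
identity_b = ("abs", "b", "b")
print(alpha_eq(identity_a, identity_b))                  # True
print(alpha_eq(("abs", "a", "b"), ("abs", "b", "a")))    # False
```

Using swaps rather than capture-avoiding substitution keeps the comparison purely structural, which is the design point the abstract attributes to explicit permutations.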