Unification: a Multidisciplinary Survey

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Free Operated Monoids and Rewriting Systems (arXiv:1804.04307v1 [math.RA], 12 Apr 2018)

FREE OPERATED MONOIDS AND REWRITING SYSTEMS JIN ZHANG AND XING GAO Abstract. The construction of bases for quotients is an important problem. In this paper, applying the method of rewriting systems, we give a unified approach to construct sections (an alternative name for bases in semigroup theory) for quotients of free operated monoids. As applications, we capture sections of free ∗-monoids and free groups, respectively. Contents 1. Introduction 2. Rewriting systems and sections 2.1. Operated monoids and operated congruences 2.2. Relationship between rewriting systems and sections 3. Applications 3.1. Free ∗-monoids 3.2. Free groups References 1. Introduction In 1960, A.G. Kurosh [23] first introduced the concept of algebras with one or more linear operators. An example of such algebras is the differential algebra, which arises from the algebraic abstraction of the differential operator in analysis. Differential algebra studies differentiation and nonlinear differential equations from a purely algebraic viewpoint, without using an underlying topology, and has been largely successful in many crucial areas, such as uncoupling of nonlinear systems, classification of singular components, and detection of hidden equations [22, 28]. Another important example of such algebras is the Rota-Baxter algebra (first called Baxter algebra), which is the algebraic abstraction of the integral operator in analysis [17]. Rota-Baxter algebra, which originated from the study of probability [5], is related beautifully to the classical Yang-Baxter equation, as well as to operads, to combinatorics and, through the Hopf algebra framework of Connes and Kreimer, to the renormalization of quantum field theory [1, 3, 4, 11, 12, 19].

Lectures on Algebraic Rewriting

Lectures on Algebraic Rewriting. Philippe Malbos, Univ Lyon, Université Claude Bernard Lyon 1, CNRS UMR 5208, Institut Camille Jordan, 43 blvd. du 11 novembre 1918, F-69622 Villeurbanne cedex, France. December 6, 2019. Contents 1 Abstract Rewriting 1.1 Abstract Rewriting Systems 1.2 Confluence 1.3 Normalisation 1.4 From local to global confluence 2 String Rewriting 2.1 Preliminaries: one and two-dimensional categories 2.2 String rewriting systems 2.3 The word problem 2.4 Branchings 2.5 Completion 2.6 Existence of finite convergent presentations 3 Coherent presentations and syzygies 3.1 Introduction 3.2 Categorical preliminaries 3.3 Coherent presentation of categories 3.4 Finite derivation type 3.5 Coherence from convergence 4 Two-dimensional homological syzygies 4.1 Preliminaries on modules 4.2 Monoids of finite homological type 4.3 Squier's homological theorem 4.4 Homology of monoids with integral coefficients 4.5 Historical notes 5 Linear rewriting 5.1 Linear 2-polygraphs 5.2 Linear rewriting steps 5.3 Termination for linear 2-polygraphs 5.4 Monomial orders 5.5 Confluence and convergence 5.6 The Critical Branchings Theorem 5.7 Coherent presentations of algebras 6 Paradigms of linear rewriting 6.1 Composition Lemma 6.2 Reduction operators

Thue Systems as Rewriting Systems

J. Symbolic Computation (1987) 3, 39-68 Thue Systems as Rewriting Systems RONALD V. BOOK Department of Mathematics, University of California at Santa Barbara, Santa Barbara, California 93106, USA This paper is a survey of recent results on Thue systems, where the systems are viewed as rewriting systems on strings over a finite alphabet. The emphasis is on Thue systems with the Church-Rosser property, where the notion of reduction is based on length-decreasing rewriting rules. The main effort is expended in outlining the properties of such systems, paying close attention to the issue of the possible decidability of properties and to the issue of the computational complexity of decidable properties. Since Thue systems may also be considered as presentations of monoids, the language of monoids (and groups) is used to describe some of these properties. 1. Introduction Replacement systems arise in the study of formula manipulation systems such as theorem provers, program optimisers, and algebraic simplifiers. These replacement systems take such forms as term rewriting systems, tree manipulating systems, graph grammars, etc. The study of abstract data types is another area where such systems are useful. The principal problem in this context is the word problem: given a system and two objects, are the two objects equivalent? From a slightly different viewpoint the problem can be stated as follows: can one of these objects be transformed into the other by means of a finite number of applications of the rewriting rules in the given system? This leads naturally to the question of the decidability of the word problem for a given class of systems.
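As a rough illustration of the word problem described in this excerpt, the following sketch decides equivalence of two strings by reducing both to normal form and comparing the results. It assumes a system that is both length-decreasing and Church-Rosser, so every reduction terminates and normal forms are unique. The rule set `RULES` (cancelling a letter against its inverse, as in a free group on one generator) and the function names are illustrative choices, not taken from the paper.

```python
def normal_form(word, rules):
    """Apply length-decreasing rules until no left-hand side occurs in the word.

    Termination relies on the assumption that every rule shortens the word.
    """
    changed = True
    while changed:
        changed = False
        for lhs, rhs in rules:
            idx = word.find(lhs)
            if idx != -1:
                # Replace the leftmost occurrence of lhs by rhs.
                word = word[:idx] + rhs + word[idx + len(lhs):]
                changed = True
                break
    return word


# Hypothetical example system: "A" plays the role of the inverse of "a".
RULES = [("aA", ""), ("Aa", "")]


def equivalent(u, v, rules=RULES):
    """Two words are equivalent iff they share a normal form.

    This test is only sound when the system is Church-Rosser.
    """
    return normal_form(u, rules) == normal_form(v, rules)


print(normal_form("aAaaA", RULES))
print(equivalent("aaA", "Aaa"))
```

For a system without the Church-Rosser property, comparing normal forms is not a valid decision procedure, since one word may reduce to several distinct irreducible strings.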

Strategy Independent Reduction Lengths in Rewriting and Binary Arithmetic

Strategy Independent Reduction Lengths in Rewriting and Binary Arithmetic Hans Zantema University of Technology, Eindhoven, The Netherlands Radboud University, Nijmegen, The Netherlands In this paper we give a criterion by which one can conclude that every reduction of a basic term to normal form has the same length. As a consequence, the number of steps to reach the normal form is independent of the chosen strategy. In particular this holds for TRSs computing addition and multiplication of natural numbers, both in unary and binary notation. 1 Introduction For many term rewriting systems (TRSs) the number of rewrite steps to reach a normal form strongly depends on the chosen strategy. For instance, in using the standard if-then-else rules if(true, x, y) → x and if(false, x, y) → y, it is a good strategy to first rewrite the leftmost (boolean) argument of the symbol if until this argument is rewritten to false or true and then apply the corresponding rewrite rule for if. In this way redundant reductions in the third argument are avoided in case the condition rewrites to true, and redundant reductions in the second argument are avoided in case the condition rewrites to false. More generally, choosing a good reduction strategy is essential for doing efficient computation by rewriting. Roughly speaking, for erasing rules, that is, rules in which some variable in the left-hand side does not appear in the right-hand side, it seems a good strategy to postpone rewriting this possibly erasing argument, as is the case in the above if-then-else example.
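The effect of the strategy on the number of steps can be sketched with a small step counter. The code below is a minimal illustration under stated assumptions: terms are closed and built only from `"true"`, `"false"`, `("if", c, x, y)` and a hypothetical extra symbol `("not", t)` with rules not(true) → false and not(false) → true, which is added here only to give the arguments something to reduce. The function names `condition_first` and `innermost` are likewise illustrative.

```python
def condition_first(term):
    """Reduce the condition of `if` first, then only the selected branch.

    Returns (normal_form, number_of_rewrite_steps).
    """
    if isinstance(term, str):
        return term, 0
    if term[0] == "not":
        arg, n = condition_first(term[1])
        return ("false" if arg == "true" else "true"), n + 1
    _, cond, x, y = term  # term is ("if", cond, x, y)
    c, n = condition_first(cond)
    chosen = x if c == "true" else y
    result, m = condition_first(chosen)
    return result, n + 1 + m  # +1 for the if-rule itself


def innermost(term):
    """Reduce every argument to normal form before applying a rule at the root."""
    if isinstance(term, str):
        return term, 0
    if term[0] == "not":
        arg, n = innermost(term[1])
        return ("false" if arg == "true" else "true"), n + 1
    _, cond, x, y = term
    c, n = innermost(cond)
    xv, nx = innermost(x)
    yv, ny = innermost(y)
    return (xv if c == "true" else yv), n + nx + ny + 1


t = ("if", ("not", "false"), "true", ("not", ("not", "true")))
print(condition_first(t))
print(innermost(t))
```

Both strategies reach the same normal form on this term, but the innermost strategy also spends steps on the third argument, which the if-rule then erases.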

Verifying the Unification Algorithm in LCF

Verifying the Unification Algorithm in LCF Lawrence C. Paulson Computer Laboratory Corn Exchange Street Cambridge CB2 3QG England August 1984 Abstract. Manna and Waldinger's theory of substitutions and unification has been verified using the Cambridge LCF theorem prover. A proof of the monotonicity of substitution is presented in detail, as an example of interaction with LCF. Translating the theory into LCF's domain-theoretic logic is largely straightforward. Well-founded induction on a complex ordering is translated into nested structural inductions. Correctness of unification is expressed using predicates for such properties as idempotence and most-generality. The verification is presented as a series of lemmas. The LCF proofs are compared with the original ones, and with other approaches. It appears difficult to find a logic that is both simple and flexible, especially for proving termination. Contents 1 Introduction 2 Overview of unification 3 Overview of LCF 3.1 The logic PPLAMBDA 3.2 The meta-language ML 3.3 Goal-directed proof 3.4 Recursive data structures 4 Differences between the formal and informal theories 4.1 Logical framework 4.2 Data structure for expressions 4.3 Sets and substitutions 4.4 The induction principle 5 Constructing theories in LCF 5.1 Expressions
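For readers unfamiliar with the algorithm being verified, here is a minimal sketch of first-order syntactic unification with an occurs check. It is a generic textbook-style formulation, not Manna and Waldinger's derivation or Paulson's LCF code. The term representation is an assumption made for this example: a variable is a Python string, and a compound term or constant is a tuple `(functor, arg1, ..., argn)`.

```python
def walk(t, s):
    """Follow variable bindings in substitution s until an unbound term is reached."""
    while isinstance(t, str) and t in s:
        t = s[t]
    return t


def occurs(v, t, s):
    """Occurs check: does variable v appear in term t under substitution s?"""
    t = walk(t, s)
    if t == v:
        return True
    if isinstance(t, tuple):
        return any(occurs(v, a, s) for a in t[1:])
    return False


def unify(a, b, s=None):
    """Return a substitution (dict) unifying a and b, or None if none exists."""
    s = {} if s is None else s
    a, b = walk(a, s), walk(b, s)
    if a == b:
        return s
    if isinstance(a, str):
        return None if occurs(a, b, s) else {**s, a: b}
    if isinstance(b, str):
        return None if occurs(b, a, s) else {**s, b: a}
    if a[0] != b[0] or len(a) != len(b):
        return None  # clash of functor or arity
    for x, y in zip(a[1:], b[1:]):
        s = unify(x, y, s)
        if s is None:
            return None
    return s


def apply(t, s):
    """Apply substitution s to term t, resolving bindings completely."""
    t = walk(t, s)
    if isinstance(t, tuple):
        return (t[0],) + tuple(apply(a, s) for a in t[1:])
    return t


left = ("f", "X", ("g", "Y"))
right = ("f", ("a",), ("g", "X"))
s = unify(left, right)
print(apply(left, s), apply(right, s))
```

The idempotence property mentioned in the abstract shows up here as the fact that applying the computed substitution a second time changes nothing: `apply(apply(t, s), s) == apply(t, s)`.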

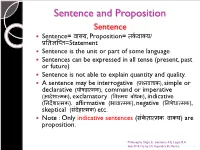

Logic- Sentence and Propositions

Sentence and Proposition. Proposition = Statement. Sentence: A sentence is a unit or part of some language. Sentences can be expressed in all tenses (present, past or future). A sentence is not able to express quantity and quality. A sentence may be interrogative, simple or declarative, a command or imperative, exclamatory, indicative, affirmative, negative, skeptical, etc. Note: only indicative sentences are propositions. Philosophy Dept, E-contents-01 (Logic, B.A. Sem. III & IV) by Dr Rajendra Kr. Verma. Not all kinds of sentences are propositions; only those sentences that can be evaluated in terms of truth or falsity are called propositions. Sentences are governed by their own grammar (for example, sentences of the Hindi language are governed by Hindi grammar). Sentences are correct or incorrect, pure or impure. Sentences may be either true or false. Proposition: Propositions are regarded as the material of our reasoning, and propositions and statements are regarded as the same. A proposition is the unit of logic. A proposition always comes in the present tense (sentences come in all tenses). A proposition can express quantity and quality (sentences cannot). The meaning of a sentence is called a proposition. Sometimes more than one sentence expresses only one proposition. Example: the Hindi sentence for "It is raining", the corresponding Sanskrit sentence, and the English sentence "It is raining" all have one meaning, that is, they express one proposition.

First Order Logic and Nonstandard Analysis

First Order Logic and Nonstandard Analysis Julian Hartman September 4, 2010 Abstract This paper is intended as an exploration of nonstandard analysis, and the rigorous use of infinitesimals and infinite elements to explore properties of the real numbers. I first define and explore first order logic, and model theory. Then, I prove the compactness theorem, and use this to form a nonstandard structure of the real numbers. Using this nonstandard structure, it is easy to do various proofs without the use of limits that would otherwise require their use. Contents 1 Introduction 2 An Introduction to First Order Logic 2.1 Propositional Logic 2.2 Logical Symbols 2.3 Predicates, Constants and Functions 2.4 Well-Formed Formulas 3 Models 3.1 Structure 3.2 Truth 3.2.1 Satisfaction 4 The Compactness Theorem 4.1 Soundness and Completeness 5 Nonstandard Analysis 5.1 Making a Nonstandard Structure 5.2 Applications of a Nonstandard Structure 6 Sources 1 Introduction The founders of modern calculus had a less than perfect understanding of the nuts and bolts of what made it work. Both Newton and Leibniz used the notion of infinitesimals, without a rigorous understanding of what they were. Infinitely small real numbers that were still not zero were a hard thing for mathematicians to accept, and with the rigorous development of limits by the likes of Cauchy and Weierstrass, the discussion of infinitesimals subsided. Now, using first order logic for nonstandard analysis, it is possible to create a model of the real numbers that has the same properties as the traditional conception of the real numbers, but also has rigorously defined infinite and infinitesimal elements.

Strategies for Linear Rewriting Systems: Link with Parallel Rewriting and Involutive Divisions

Strategies for linear rewriting systems: link with parallel rewriting and involutive divisions Cyrille Chenavier, Maxime Lucas arXiv:2005.05764v2 [cs.LO] 7 Jul 2020 Abstract We study rewriting systems whose underlying set of terms is equipped with a vector space structure over a given field. We introduce parallel rewriting relations, which are rewriting relations compatible with the vector space structure, as well as rewriting strategies, which consist in choosing one rewriting step for each reducible basis element of the vector space. Using these notions, we introduce the S-confluence property and show that it implies confluence. We deduce a proof of the diamond lemma, based on strategies. We illustrate our general framework with rewriting systems over rational Weyl algebras, that are vector spaces over a field of rational functions. In particular, we show that involutive divisions induce rewriting strategies over rational Weyl algebras, and using the S-confluence property, we show that involutive sets induce confluent rewriting systems over rational Weyl algebras. Keywords: confluence, parallel rewriting, rewriting strategies, involutive divisions. M.S.C 2010 - Primary: 13N10, 68Q42. Secondary: 12H05, 35A25. Contents 1 Introduction 2 Rewriting strategies over vector spaces 2.1 Confluence relative to a strategy 2.2 Strategies for traditional rewriting relations 3 Rewriting strategies over rational Weyl algebras 3.1 Rewriting systems over rational Weyl algebras 3.2 Involutive divisions and strategies 4 Conclusion and perspectives 1 Introduction Rewriting systems are computational models given by a set of syntactic expressions and transformation rules used to simplify expressions into equivalent ones.

Rewriting Via Coinserters

Nordic Journal of Computing 10(2003), 290–312. REWRITING VIA COINSERTERS NEIL GHANI University of Leicester, Department of Mathematics and Computer Science University Road, Leicester LE1 7RH, United Kingdom CHRISTOPH LÜTH Universität Bremen, FB 3, Mathematik und Informatik P.O. Box 330 440, D-28334 Bremen, Germany Abstract. This paper introduces a semantics for rewriting that is independent of the data being rewritten and which, nevertheless, models key concepts such as substitution which are central to rewriting algorithms. We demonstrate the naturalness of this construction by showing how it mirrors the usual treatment of algebraic theories as coequalizers of monads. We also demonstrate its naturalness by showing how it captures several canonical forms of rewriting. ACM CCS Categories and Subject Descriptors: F.4.m [Mathematical Logic and Formal Languages]: Miscellaneous – rewrite systems, term rewriting systems; F.4.1 [Mathematical Logic and Formal Languages]: Mathematical Logic – lambda calculus and related systems Key words: rewriting, monads, computation, category theory 1. Introduction Rewrite systems offer a flexible and general notion of computation that has appeared in various guises over the last hundred years. The first rewrite systems were used to study the word problem in group theory at the start of the twentieth century [Epstein 1992], and were subsequently further developed in the 1930s in the study of the λ-calculus and combinatory logic [Church 1940]. In the 1950s, Gröbner bases were developed to solve the ideal membership problem for polynomials [Buchberger and Winkler 1998]. In the 1970s, starting with Knuth-Bendix completion [Knuth and Bendix 1970], term rewriting systems (TRSs) have played a prominent role in the design and implementation of logical and functional programming languages, the theory of abstract data types, and automated theorem proving [Baader and Nipkow 1998].

Finite Complete Rewriting Systems for Regular Semigroups

Theoretical Computer Science 412 (2011) 654–661 Finite complete rewriting systems for regular semigroups R. Gray (a), A. Malheiro (a, b) (a) Centro de Álgebra da Universidade de Lisboa, Av. Prof. Gama Pinto, 2, 1649-003 Lisboa, Portugal (b) Departamento de Matemática, Faculdade de Ciências e Tecnologia, Universidade Nova de Lisboa, 2829-516 Caparica, Portugal Article history: Received 11 May 2010. Received in revised form 4 October 2010. Accepted 19 October 2010. Communicated by Z. Esik. Keywords: Rewriting systems, Finitely presented groups and semigroups, Finite complete rewriting systems, Regular semigroups, Ideal extensions, Completely 0-simple semigroups. Abstract: It is proved that, given a (von Neumann) regular semigroup with finitely many left and right ideals, if every maximal subgroup is presentable by a finite complete rewriting system, then so is the semigroup. To achieve this, the following two results are proved: the property of being defined by a finite complete rewriting system is preserved when taking an ideal extension by a semigroup defined by a finite complete rewriting system; a completely 0-simple semigroup with finitely many left and right ideals admits a presentation by a finite complete rewriting system provided all of its maximal subgroups do. © 2010 Elsevier B.V. All rights reserved. 1. Introduction This paper is designed to answer a problem posed in [16, Remark and Open Problem 4.5].

Lecture 9: Recurrence Relations

Lecture 9: Recurrence Relations Matthew Fricke July 15, 2013 This Lecture 1 Definition 2 Examples 3 Guess and Check 4 Binary Search 5 Characteristic Equation Method 6 The Fibonacci Sequence 7 Golden Ratio 8 Gambler's Ruin What are Recurrence Relations? • A recurrence relation is an equation that defines a value in a sequence using previous values in the sequence. • Recurrence relations are closely tied to differential equations because they are both self-referential. • A differential equation relates a function to its own derivative. • The discrete version of a differential equation is a difference equation. • Sometimes people call recurrence relations difference equations, but really difference equations are just a type of recurrence relation. • Some of the techniques for solving recurrence relations are almost the same as those used to solve differential equations.
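The definition above can be made concrete with the Fibonacci sequence, one of the topics listed in the lecture outline. The sketch below computes values directly from the recurrence F(n) = F(n-1) + F(n-2) and compares them with the closed form obtained from the characteristic equation r² = r + 1, whose roots are the golden ratio and its conjugate. The initial conditions F(0) = 0 and F(1) = 1 and the function names are assumptions made for this example; the excerpt does not state them.

```python
from math import sqrt


def fib_recurrence(n):
    """Compute F(n) by iterating the recurrence F(n) = F(n-1) + F(n-2)."""
    a, b = 0, 1  # assumed initial conditions F(0) = 0, F(1) = 1
    for _ in range(n):
        a, b = b, a + b
    return a


def fib_closed_form(n):
    """Closed form from the characteristic equation r^2 = r + 1.

    Uses floating point, so it is only reliable for moderate n.
    """
    phi = (1 + sqrt(5)) / 2  # golden ratio
    psi = (1 - sqrt(5)) / 2  # conjugate root
    return round((phi**n - psi**n) / sqrt(5))


print([fib_recurrence(n) for n in range(11)])
print(all(fib_recurrence(n) == fib_closed_form(n) for n in range(30)))
```

The iterative version uses exact integer arithmetic, whereas the closed form is a floating-point approximation that is rounded to the nearest integer.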

Equations and Rewrite Rules: A Survey

Stanford Verification Group Report No. 15, January 1980. Computer Science Department Report No. STAN-CS-80-785. EQUATIONS AND REWRITE RULES: A Survey by Gerard Huet and Derek C. Oppen. Research sponsored by National Science Foundation, Air Force Office of Scientific Research. COMPUTER SCIENCE DEPARTMENT, Stanford University. ABSTRACT Equations occur frequently in mathematics, logic and computer science. In this paper, we survey the main results concerning equations, and the methods available for reasoning about them and computing with them. The survey is self-contained and unified, using traditional abstract algebra. Reasoning about equations may involve deciding if an equation follows from a given set of equations (axioms), or if an equation is true in a given theory. When used in this manner, equations state properties that hold between objects. Equations may also be used as definitions; this use is well known in computer science: programs written in applicative languages, abstract interpreter definitions, and algebraic data type definitions are clearly of this nature. When these equations are regarded as oriented "rewrite rules", we may actually use them to compute. In addition to covering these topics, we discuss the problem of "solving" equations (the "unification" problem), the problem of proving termination of sets of rewrite rules, and the decidability and complexity of word problems and of combinations of equational theories. We restrict ourselves to first-order equations, and do not treat equations which define non-terminating computations or recent work on rewrite rules applied to equational congruence classes.