PEGASUS: a Policy Search Method for Large Mdps and Pomdps

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Ancient Greece. ¡ the Basilisc Was an Extremely Deadly Serpent, Whose Touch Alone Could Wither Plants and Kill a Man

Ancient Greece. ¡ The Basilisc was an extremely deadly serpent, whose touch alone could wither plants and kill a man. ¡ The creature is later shown in the form of a serpent- tailed bird. ¡ Cerberus was the gigantic hound which guarded the gates of Haides. ¡ He was posted to prevent ghosts of the dead from leaving the underworld. ¡ Cerberus was described as a three- headed dog with a serpent's tail, a mane of snakes, and a lion's claws. The Chimera The Chimera was a monstrous beast with the body and maned head of a lion, a goat's head rising from its back, a set of goat-udders, and a serpents tail. It could also breath fire. The hero Bellerophon rode into battle to kill it on the back of the winged horse Pegasus. ¡ The Gryphon or Griffin was a beast with the head and wings of an eagle and the body of a lion. ¡ A tribe of the beasts guarded rich gold deposits in certain mountains. HYDRA was a gigantic, nine-headed water-serpent. Hercules was sent to destroy her as one of his twelve labours, but for each of her heads that he decapitated, two more sprang forth. So he used burning brands to stop the heads regenerating. The Gorgons The Gorgons were three powerful, winged daemons named Medusa, Sthenno and Euryale. Of the three sisters only Medousa was mortal, and so it was her head which the King commanded the young hero Perseus to fetch. He accomplished this with the help of the gods who equipped him with a reflective shield, curved sword, winged boots and helm of invisibility. -

Pegasus Back from Kenya Sgt

Hawaii Marine CCE Graduates Bodybuilder A-5 Volume 29, Number 23 Serving Marine Corps Base Hawaii June 8, 2000 B-1 A1111111111111111W 1111111111- .111111111111164 Pegasus back from Kenya Sgt. Robert Carlson Natural Fire provided valuable training for "Communications with the JTF headquar- Press Chief the detachment, according to Capt. ters was difficult at times, as was getting Christopher T. Cable, detachment mainte- maintenance parts here all the way from The Marines of Marine Heavy Helicopter nance officer. They not only experienced Hawaii," Cable explained. "Everyone did a Squadron 463 returned to Hawaii Saturday breaking down and deploying their CH-53D great job though, and we were able to get our after their month-long deployment to Kenya "Sea Stallions," they gained the experience of job done without any problems." in support of Operation Natural Fire. overcoming challenges inherent while con- Cable said the Marines in the detachment Working side-by-side with the U.S. Army, ducting operations in a foreign country. are happy to be back in Hawaii. Navy, and Air Force, Pegasus provided airlift "We were located on an airfield in the city "There are a lot of experienced Marines support for medical assistance and humanitar- of Mombasa, Kenya, about 70 miles south of here, but there are also many who are new to ian operations during the joint-combined the Joint Task Force headquarters in Malindi," the squadron," he said. "This evolution operation. said Cable. "The crew had the chance to see allowed the newer members of the squadron a The detachment of Hawaii Marines assist- what it takes to have two helicopters on-call chance to experience everything involved in ed in training Kenyan, Ugandan and 24 hours a day." deploying to a foreign country. -

Greek Myths - Creatures/Monsters Bingo Myfreebingocards.Com

Greek Myths - Creatures/Monsters Bingo myfreebingocards.com Safety First! Before you print all your bingo cards, please print a test page to check they come out the right size and color. Your bingo cards start on Page 3 of this PDF. If your bingo cards have words then please check the spelling carefully. If you need to make any changes go to mfbc.us/e/xs25j Play Once you've checked they are printing correctly, print off your bingo cards and start playing! On the next page you will find the "Bingo Caller's Card" - this is used to call the bingo and keep track of which words have been called. Your bingo cards start on Page 3. Virtual Bingo Please do not try to split this PDF into individual bingo cards to send out to players. We have tools on our site to send out links to individual bingo cards. For help go to myfreebingocards.com/virtual-bingo. Help If you're having trouble printing your bingo cards or using the bingo card generator then please go to https://myfreebingocards.com/faq where you will find solutions to most common problems. Share Pin these bingo cards on Pinterest, share on Facebook, or post this link: mfbc.us/s/xs25j Edit and Create To add more words or make changes to this set of bingo cards go to mfbc.us/e/xs25j Go to myfreebingocards.com/bingo-card-generator to create a new set of bingo cards. Legal The terms of use for these printable bingo cards can be found at myfreebingocards.com/terms. -

Press Release

Press Release German Workmanship for the USA Pegasus & Dragon at Gulfstream Park, Miami, USA: Strassacker art foundry creates largest bronze horse sculpture in the world The Strassacker art foundry has implemented the vision of numerous architects, town plan- ners and artists. The list of national and international references is just as long. After all, the complete processing of small-scale and complex art projects from conceptualisation through coordination of the artists, architects, authorities and companies involved, right through to the final assembly stage is among the core competencies of the company from Süßen. "But when the first sketches of Pegasus & Dragon arrived in Süßen for review around three years ago, even the most experienced members of staff initially looked at it in disbelief", recollect Edith Strassacker and Günter Czasny. "The bronze statue Pegasus & Dragon was already one of the greatest challenges in our company's history, which spans close to a century", report the presiding managing director and the deputy managing direc- tor of the family-owned company that employs 500 members of staff. Even motorists travelling along the busy U.S. Highway 1 in Miami, Florida, over the past few months have wondered, in astonishment, what was being constructed close to Hallandale Beach in Gulfstream Park. The 33-metre-high metal structure that gleams in the sun and that took up to 100 workers to create, all of them working in tandem, was the talk of the town on the legendary federal highway that runs the length of the East Coast of the USA. The result that emerged upon conclusion of construction in November 2014 is no less spec- tacular. -

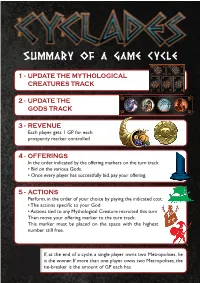

Summary of a Game Cycle

SUMMARY OF A GAME CYCLE 1 - UPDATE THE MYTHOLOGICAL CREATURES TRACK 2 - UPDATE THE GODS TRACK 3 - REVENUE Each player gets 1 GP for each prosperity marker controlled 4 - OFFERINGS In the order indicated by the offering markers on the turn track: s"IDONTHEVARIOUS'ODS s/NCEEVERYPLAYERHASSUCCESSFULLYBID PAYYOUROFFERING 5 - ACTIONS 0ERFORM INTHEORDEROFYOURCHOICEBYPAYINGTHEINDICATEDCOST s4HEACTIONSSPECIlCTOYOUR'OD s!CTIONSTIEDTOANY-YTHOLOGICAL#REATURERECRUITEDTHISTURN 4HENMOVEYOUROFFERINGMARKERTOTHETURNTRACK 4HIS MARKER MUST BE PLACED ON THE SPACE WITH THE HIGHEST NUMBERSTILLFREE )F ATTHEENDOFACYCLE ASINGLEPLAYEROWNSTWO-ETROPOLISES HE ISTHEWINNER)FMORETHANONEPLAYEROWNSTWO-ETROPOLISES THE TIE BREAKERISTHEAMOUNTOF'0EACHHAS MYTHOLOGICAL CREATURES THE FATES SATYR DRYAD Recieve your revenue again, just like Steal a Philosopher from the player Steal a Priest from the player of SIREN PEGASUS GIANT at the beginning of the Cycle. of your choice. your choice. Remove an opponent’s fleet from Designate one of your isles and Destroy a building. This action can the board and replace it with one move some or all of the troops on be used to slow down an opponent of yours. If you no longer have any it to another isle without having to or remove a troublesome Fortress. The Kraken, the Minotaur, Chiron, The following 4 creatures work the same way: place the figurine on the isle of fleets in reserve, you can take one have a chain of fleets. This creature The Giant cannot destroy a Metro- Medusa and Polyphemus have a your choice. The power of the creature is applied to the isle where it is until from somewhere else on the board. is the only way to invade an oppo- polis. figurine representing them as they the beginning of your next turn. -

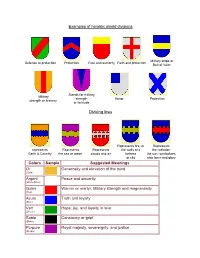

Examples of Heraldic Shield Divisions Dividing Lines Colors Sample

Examples of heraldic shield divisions Military strips or Defense or protection Protection Rule and authority Faith and protection Belt of Valor Stands for military Military strength Honor Protection strength or bravery. or fortitude. Dividing lines Represents fire, or Represents represents Represents Represents the walls of a the radiation Earth & Country the sea or water clouds and air fortress the sun. symbolizes or city also fame and glory Colors Sample Suggested Meanings Or Generosity and elevation of the mind (Gold) Argent Peace and sincerity (White/Silver) Gules Warrior or martyr; Military strength and magnanimity (Red) Azure Truth and loyalty (Blue) Vert Hope, joy, and loyalty in love (Green) Sable Constancy or grief (Black) Purpure Royal majesty, sovereignty, and justice (Purple) Tenne Worthy ambition (Orange) Sanguine Patient in battle, and yet victorious (Maroon) v Charges: Suggested Meanings: Acacia Branch Eternal and affectionate remembrance both for the living and the dead. Acorn Life, immortality and perseverence Anchor Christian emblem of hope and refuge; awarded to sea warriors for special feats performed Also signifies steadfastness and stability. In seafaring nations, the anchor is a symbol of good luck, of safety, and of security Annulet Emblem of fidelity; Also a mark of Cadency of the fifth son Antelope Represents action, agility and sacrifice and a very worthy guardian that is not easily provoked, but can be fierce when challenged Antlers Strength and fortitude Anvil Honour and strength; chief emblem of the smith's trade Arrow Readiness; if with a cross it denotes affliction; a bow and arrow signifies a man resolved to abide the uttermost hazard of battle. -

Pegasus 300 Moons Wyvern 314 Moons Manticore 251 Moons

Pegasus 300 moons XF-TQL Serpentis_Prime sec -0.012 sec -0.125 12 moons 7 moons 4-EP12 YZS5-4 sec -0.048 sec -0.015 54 moons 67 moons Centaur 186 moons 3WE-KY Z9PP-H sec -0.107 sec -0.028 51 moons 14 moons A8-XBW IR-WT1 9-VO0Q 7-8S5X EI-O0O sec -0.170 sec -0.101 sec -0.121 sec -0.029 sec -0.028 40 moons 63 moons 14 moons 71 moons 7 moons Manticore Sphinx Wyvern 251 moons 470 moons 314 moons E-BWUU 5-D82P PNQY-Y J5A-IX 7X-02R sec -0.091 sec -0.092 sec -0.132 sec -0.004 sec -0.092 64 moons 30 moons 26 moons 34 moons 8 moons to Cloud Ring Y-1W01 8ESL-G RP2-OQ D2AH-Z sec -0.128 sec -0.027 sec -0.151 B-DBYQ sec -0.216 57 moons 40 moons 42 moons 51 moons 9R4-EJ JGOW-Y R3W-XU YVBE-E sec -0.104 sec -0.048 sec -0.106 sec -0.284 29 moons 10 moons 28 moons 89 moons Q-XEB3 SPLE-Y APM-6K AL8-V4 BYXF-Q sec -0.062 sec -0.111 sec -0.059 sec -0.072 sec -0.241 28 moons 51 moons 65 moons 46 moons 40 moons Taurus 351 moons K8L-X7 9O-ORX RE-C26 KCT-0A OW-TPO AC2E-3 C-C99Z P5-EFH sec -0.087 sec -0.121 sec -0.062 sec -0.021 sec -0.066 sec -0.307 sec -0.321 sec -0.321 22 moons 17 moons 50 moons 29 moons 70 moons 6 moons 37 moons 52 moons Kraken Satyr 238 moons 275 moons UAYL-F 00GD-D N2-OQG IGE-RI CL-BWB L-A5XP sec -0.034 sec -0.204 sec -0.014 sec -0.049 sec -0.285 sec -0.379 7 moons 26 moons 60 moons 53 moons 47 moons 50 moons Unicorn 188 moons ESC-RI C1XD-X B17O-R YRNJ-8 3ZTV-V D4KU-5 sec -0.024 sec -0.453 sec -0.120 sec -0.611 sec -0.280 sec -0.110 38 moons 34 moons 44 moons 55 moons 54 moons 85 moons to Aridia Chimera 373 moons H-S80W 671-ST A-HZYL G95F-H -

Animal Creatures and Creation

Curriculum Units by Fellows of the Yale-New Haven Teachers Institute 1998 Volume II: Cultures and Their Myths Universal Myths and Symbols: Animal Creatures and Creation Curriculum Unit 98.02.05 by Pedro Mendia-Landa Mythology and mythological ideas permeate all languages, cultures and lives. Myths affect us in many ways, from the language we use to how we tell time; mythology is an integral presence. The influence mythology has in our most basic traditions can be observed in the language, customs, rituals, values and morals of every culture, yet the limited extent of our knowledge of mythology is apparent. In general we have today a poor understanding of the significance of myths in our lives. One way of studying a culture is to study the underlying mythological beliefs of that culture, the time period of the origins of the culture’s myths, the role of myth in society, the symbols used to represent myths, the commonalties and differences regarding mythology, and the understanding a culture has of its myths. Such an exploration leads to a greater understanding of the essence of a culture. As an elementary school teacher I explore the role that mythology plays in our lives and the role that human beings play in the world of mythology. My objective is to bring to my students’ attention stories that explore the origins of the universe and the origins of human kind, and to encourage them to consider the commonalties and differences in the symbols represented in cross-cultural universal images of the creation of the world. I begin this account of my unit by clarifying the differences between myth, folklore, and legend, since they have been at times interchangeably and wrongly used. -

Pegasus XL Development and L-1011 Pegasus Carrier Aircraft

I PEGASUS<iI XL DEVELOPMENT AND L-1011 I PEGASUS CARRIER AIRCRAFT I by Marty Mosier' Ed Rutkowski2 I Orbital Sciences Corporation Space Systems Division I Dulles, VA Abstract Pegasus XL vehicle design, capability, develop ment program, and payload interfaces. The L- I The Pegasus air-launched space booster has 1011 carrier aircraft is described, including its established itself as America's standard small selection process, release mechanism vehicle and launch vehicle. Since its first flight on April 5, 1990 payload support capabilities, and certification pro Pegasus has delivered 13 payloads to orbit in the gram. Pegasus production facilities are described. I four launches conducted to-date. To improve ca pability and operational flexibility, the Pegasus XL development program was initiated in late 1991. Background I The Pegasus XL vehicle has increased propellant, improved avionics, and a number of design en The Pegasus air-launched space booster (Fig hancements. To increase the Pegasus launch ure 1), which first flew on April 5, 1990, provides a I system's flexibility, a Lockheed L-1 011 aircraft has flexible, and cost effective means for delivering been modified to serve as a carrier aircraft for the satellites into low earth orbit. 1 Four launches vehicle. In addition, the activation of two new have occurred to-date, delivering a total of 13 Pegasus production facilities is underway at payloads to orbit. Launches have been conducted I Vandenberg AFB, California and the NASA Wal from both the Eastern (Kennedy Space Center, lops Flight Facility, Wallops, Virginia. The Pe Florida) and Western (Vandenberg, California) gasus XL vehicle, L-1011 carrier aircraft, and Ranges. -

Contract Is Eligible?

Run Date : 09/27/2021 @ 03:30 AM City of Chicago Contracts in Scope for CIP PO End Vendor Name PO Number Description Date 1140 NORTH BRANCH DEVELOPMENT LLC 28314 TIF Reimbursement 11/16/2028 1319 S SPAULDING LLC 21219 1319 S SPAULDING (Group 69A) Multi-Family 07/30/2034 1525 HP LLC 33056 TIF - RDA - 1525 HP LLC 12/31/2036 18TH STREET DEVELOPMENT CORP 24130 Facade Rebate 07/30/2034 2128 NORTH CALIFORNIA LLC 17424 CSPAN - 2809 W. Shakespeare Ave 03/12/2025 2650 MILWAUKEE LLC 80686 2650 MILWAUKEE LLC /LOGAN SQUARE THEATRE 12/31/2023 2657 NORTH KEDZIE LLC 21697 2657 N. Kedzie Facade Rebate 07/30/2034 3339 W DOUGLAS LLC 21558 Multi-Family 07/30/2034 3 ARTS INC 141994 Grant Agreement, CityArts Small, 3Arts 12/31/2021 45th COTTAGE LLC 95516 45th/COTTAGE, LLC -"4400 GROVE" 12/31/2043 4800 N DAMEN, LLC 20724 Residential Development: 4800 N. Damen 12/31/2024 4832 S VINCENNES LP 6535 Multi 07/25/2023 550 ADAMS LLC 9683 Redevelopment Agreement 12/31/2022 550 JACKSON ASSOCIATES, LLC 8222 Redevelopment Agreement: 550 W. Jackson 12/31/2022 601W COMPANIES CHICAGO LLC 104989 601 W. COMPANIES CHICAGO LLC 05/17/2069 7131 JEFFREY DEVELOPMENT LLC 130192 7131 JEFFREY DEVELOPMENT, LLC - JEFFREY PLAZA RDA 05/07/2030 7742-48 S STONY LLC 24088 Facade Rebate 07/30/2034 79TH STREET LIMITED PARTNERSHIP 20590 Wrightwood Senior Apartments Multi Program 10/03/2026 79TH STREET LIMITED PARTNERSHIP 21748 Redevelopment Agreement: 2815 W. 79th St. and 2751-57 W. 79th St. 12/31/2024 826CHI INC NFP 142430 Grant Agreement, CityArts Project, 826CHI INC NFP 12/31/2021 901 W 63RD LIMITED PARTNERSHIP 19742 Construction at 901-923 W. -

The Symbolisms of Heraldry : Or, a Treatise on the Meanings And

THE SYMBOLISMS OF HERALDRY " Unto the very points and prickes, here are to be found great misteries." NICHOLAS FLAMMEL, 1399. " And if aught else great bards beside In sage and solemn tunes have sung, Of turneys, and of trophies hung, Of forests, and enchantments drear, Where more is meant than meets the ear." MILTON. THE SYMBOLISMS OF HERALDRY OR A TREATISE ON THE MEANINGS AND DERIVATIONS OF ARMORIAL BEARINGS BY W. CECIL WADE " Yet my attempt is not of presumption to teache, (/ myselfe be hewing most need to taught',) but only to the intent that gentle- men that seeke to knovoe all good thinges, and woulde have an entrie into this, may notfind here a thing expedient but rather a poor help thereto." LEIGH'S Accedence of Armorie, London, 1576. WITH 95 ILLUSTRATIONS SECOND EDITION LONDON GEORGE REDWAY 1898 PREFACE " THE Daily News very recently remarked, Heraldry is lost art the for almost a ; very rage old book- plates shows that it takes its rank amongst anti- " and not this quities ; though we may agree with writer's remarks in their entirety, certain it is that the collecting of book-plates has really helped to revive the taste for heraldic studies, and now seems to invite the publication of my researches on the subject of armorial symbols. Besides the chief modern authorities whom I have consulted, and whose names are given in the text, I may mention the following older heraldic writers whose tomes I have examined and com- : "Accedence of Guillim's pared Leigh's Arms," 1576 ; " Heraldry," editions of 1610 and 1632, which in- clude the chief points to be found in Juliana Berners, " Bosworth, and Sir John Feme's Glorie of Gene- " also Peacham's rositie ; "Compleat Gentleman," 1626; Camden's "Remaines," 1623; Nisbet's vi Preface " Froissart's trans- Heraldry," .1722 ; "Chronicle," lated " Her- by Lord Berners, 1525 ; Edmonson's aldry,"1780; Dallaway's "Heraldry," 1793; Forney's "Heraldry," 1771; and Brydson's "History of Chivalry," 1786. -

Constellation Legends

Constellation Legends by Norm McCarter Naturalist and Astronomy Intern SCICON Andromeda – The Chained Lady Cassiopeia, Andromeda’s mother, boasted that she was the most beautiful woman in the world, even more beautiful than the gods. Poseidon, the brother of Zeus and the god of the seas, took great offense at this statement, for he had created the most beautiful beings ever in the form of his sea nymphs. In his anger, he created a great sea monster, Cetus (pictured as a whale) to ravage the seas and sea coast. Since Cassiopeia would not recant her claim of beauty, it was decreed that she must sacrifice her only daughter, the beautiful Andromeda, to this sea monster. So Andromeda was chained to a large rock projecting out into the sea and was left there to await the arrival of the great sea monster Cetus. As Cetus approached Andromeda, Perseus arrived (some say on the winged sandals given to him by Hermes). He had just killed the gorgon Medusa and was carrying her severed head in a special bag. When Perseus saw the beautiful maiden in distress, like a true champion he went to her aid. Facing the terrible sea monster, he drew the head of Medusa from the bag and held it so that the sea monster would see it. Immediately, the sea monster turned to stone. Perseus then freed the beautiful Andromeda and, claiming her as his bride, took her home with him as his queen to rule. Aquarius – The Water Bearer The name most often associated with the constellation Aquarius is that of Ganymede, son of Tros, King of Troy.