Who Moderates the Social Media Giants? A Call to End Outsourcing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


TCPID Update February 2020

Update from the Trinity Centre for People with Intellectual Disabilities School of Education, Trinity College Dublin February 2020 Trinity College Dublin, The University of Dublin Update from the Trinity Centre for People with Intellectual Disabilities, School of Education, Trinity College Dublin February 2020 Dear Partners, Thank you all as always for your continued support for the Trinity Centre for People with Intellectual Disabilities. We now have more than 30 TCPID Business Partners and Business Patrons which is an incredible achievement and something that we are very grateful to you all for. Thanks to your very generous support, we are able to secure the future of the TCPID and create many exciting opportunities for our students and graduates. We launched our TCPID online mentor training programme at the end of last year. We hope that you have found it useful so far and we would greatly welcome any feedback that you may have at any stage. We are very much looking forward to continuing working with you all in 2020. Here are just a few of our highlights over the past few months here in the TCPID. With warmest thanks as always for your support, TCPID Pathways Coordinator Email: [email protected] Tel: 01 8963885 Please follow all our latest news on our website at www.tcd.ie/tcpid as well as on Facebook @InclusionTCD, Twitter @IDTCD Instagram inclusiontcd as well as on LinkedIn www.linkedin.com/school/inclusiontcd Trinity College Dublin, The University of Dublin ASIAP Graduation Dr. Mary-Ann O’Donovan, Course Coordinator and Assistant Professor in Intellectual Disability and Inclusion: Friday January 31st 2020 was a very proud day for all of us in the TCPID as it was graduation day for our Level 5 Certificate in Arts, Science and Inclusive Applied Practice. -

JULIAN ASSANGE: When Google Met Wikileaks

JULIAN ASSANGE JULIAN +OR Books Email Images Behind Google’s image as the over-friendly giant of global tech when.google.met.wikileaks.org Nobody wants to acknowledge that Google has grown big and bad. But it has. Schmidt’s tenure as CEO saw Google integrate with the shadiest of US power structures as it expanded into a geographically invasive megacorporation... Google is watching you when.google.met.wikileaks.org As Google enlarges its industrial surveillance cone to cover the majority of the world’s / WikiLeaks population... Google was accepting NSA money to the tune of... WHEN GOOGLE MET WIKILEAKS GOOGLE WHEN When Google Met WikiLeaks Google spends more on Washington lobbying than leading military contractors when.google.met.wikileaks.org WikiLeaks Search I’m Feeling Evil Google entered the lobbying rankings above military aerospace giant Lockheed Martin, with a total of $18.2 million spent in 2012. Boeing and Northrop Grumman also came below the tech… Transcript of secret meeting between Julian Assange and Google’s Eric Schmidt... wikileaks.org/Transcript-Meeting-Assange-Schmidt.html Assange: We wouldn’t mind a leak from Google, which would be, I think, probably all the Patriot Act requests... Schmidt: Which would be [whispers] illegal... Assange: Tell your general counsel to argue... Eric Schmidt and the State Department-Google nexus when.google.met.wikileaks.org It was at this point that I realized that Eric Schmidt might not have been an emissary of Google alone... the delegation was one part Google, three parts US foreign-policy establishment... We called the State Department front desk and told them that Julian Assange wanted to have a conversation with Hillary Clinton... -

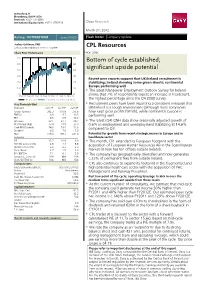

CPL Resources

www.davy.ie Bloomberg: DAVY<GO> Research: +353 1 6148997 Institutional Equity Sales: +353 1 6792816 Davy Research March 21, 2012 Rating: OUTPERFORM Issued 30/06/09 Flash Note: Company update Joshua Goldman, PhD [email protected] / +353 1 6148997 CPL Resources Price: 298c Bottom of cycle established; significant upside potential [Chart: Share Price Performance, Mar 09 to Mar 12; CPL price (c) and Rel to ISEQ overall index (rhs)] Recent peer reports suggest that UK/Ireland recruitment is stabilizing; Ireland showing some green shoots; continental Europe performing well • The latest Manpower Employment Outlook Survey for Ireland shows that 7% of respondents expect an increase in headcount, the highest percentage since the Q4 2008 survey. • Recruitment peers have been reporting a consistent message that UK/Ireland is a tough environment (although most companies have kept gross profits flattish), while continental Europe is performing well. • The latest (Q4) QNH data show seasonally adjusted growth of 0.6% in employment and unemployment stabilizing at 14.6% compared to Q3. Potential for growth from recent strategic moves in Europe and in healthcare sector • This month, CPL extended its European footprint with the acquisition of European Human Resources AB in the Scandinavian market (it now has ten offices outside Ireland). Key financials (€m), year end Jun12E / Jun13F / Jun14F: Group Turnover 292.3 / 310.9 / 335.8; EBITDA 8.9 / 9.7 / 10.5; PBT 8.9 / 9.5 / 10.4; EPS Basic 23.1 / 27.1 / 29.7; EPS Diluted (Adj) 23.4 / 27.5 / 30.0; Cash EPS (Diluted) 24.9 / 29.0 / 31.6; Dividend 6.0 / 7.0 / 7.0; NBV 179.0 / 198.7 / 221.0. Valuation: P/E 12.7 / 10.9 / 9.9; FCF Yld (pre div) (%) 2.8 / 7.4 / 8.8; Dividend Yield (%) 2.0 / 2.3 / 2.3; Price / Book 1.7 / 1.5 / 1.3. -

Human Capital Management Industry Update Winter 2019

HUMAN CAPITAL MANAGEMENT STAFFING & RECRUITMENT – INDUSTRY UPDATE | Winter 2019 Houlihan Lokey Human Capital Management Houlihan Lokey is pleased to present its third Human Capital Management (HCM) Industry Update. Once again, we are happy to share industry insights, a public markets overview, a snapshot of relevant macroeconomic indicators, transaction announcements, and related detail. We believe this newsletter will provide you with the most important and relevant information you need to stay up to date with the HCM industry. We would also like to encourage you to meet with us at the SIA Executive Forum in Austin, Texas on February 25-28, 2019 where we would be happy to share recent market developments and further insights. If there is additional content that you would find useful for future updates, please do not hesitate to contact us with your suggestions. Regards, Thomas Bailey Jon Harrison Andrew Shell Managing Director Managing Director Vice President [email protected] [email protected] [email protected] 404.495.7056 +44 (0) 20 7747 7564 404.495.7002 Additional Human Capital Management Contacts Larry DeAngelo Pat O’Brien Alex Scott Bennett Tullos Mike Bertram Head of Business Services Associate Financial Analyst Financial Analyst Financial Analyst [email protected] [email protected] [email protected] [email protected] [email protected] 404.495.7019 404.495.7042 404.926.1609 404.926.1619 404.495.7040 Human Capital Management – Coverage by Subsector Staffing & VMS/MSP/RPO Talent Payroll/ HR Consulting/ Recruitment Management & PEO Benefits Admin Development -

Barefoot Into Cyberspace Adventures in Search of Techno-Utopia

Barefoot into Cyberspace Adventures in search of techno-Utopia By Becky Hogge July 2011 http://www.barefootintocyberspace.com Read This First This text is distributed by Barefoot Publishing Limited under a Creative Commons Attribution-ShareAlike 2.0 UK: England & Wales Licence. That means: You are free to copy, distribute, display, and perform the work; to make derivative works; to make commercial use of the work. Under the following conditions: Attribution. You must attribute the work in the manner specified by the author or licensor (but not in any way that suggests that they endorse you or your use of the work). Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under the same or similar licence to this one. For any reuse or distribution, you must make clear to others the licence terms of this work. The best way to do this is with a link to http://barefootintocyberspace.com/book/hypertext Any of these conditions may be waived by seeking permission from Barefoot Publishing Limited. To contact Barefoot Publishing Limited, email barefootpublishing [AT] gmail [DOT] com. More information available at http://creativecommons.org/licenses/by-sa/2.0/uk/. See the end of this file for complete legalese Contents Prologue: Fierce Dancing Chapter 1: Digging the command line -

Scheme Document. the Scheme Meeting Will Start at 12 Noon on That Date and the EGM at 12.15 P.M

174368 Proof 6 Tuesday, November 24, 2020 22:39 THIS DOCUMENT IS IMPORTANT AND REQUIRES YOUR IMMEDIATE ATTENTION. If you are in any doubt about the contents of this Scheme Document and what action you should take, you should consult your stockbroker, bank manager, solicitor, accountant or other independent financial adviser who, if you are taking advice in Ireland, is authorised or exempted under the European Union (Markets in Financial Instruments) Regulations 2017 (S.I. No. 375 of 2017) or the Investment Intermediaries Act 1995 (as amended) or, if you are taking such advice in the United Kingdom, is authorised pursuant to the Financial Services and Markets Act 2000 of the United Kingdom or, if you are taking advice elsewhere, is an appropriately authorised independent financial adviser. If you have sold or otherwise transferred all your Cpl Shares, please send this Scheme Document and the accompanying documents at once to the purchaser or transferee, or to the stockbroker, bank or other agent through whom the sale or transfer was effected for delivery to the purchaser or transferee. The release, publication or distribution of this Scheme Document in or into jurisdictions other than Ireland and the United Kingdom may be restricted by law and therefore persons into whose possession this Scheme Document comes should inform themselves about and observe such restrictions. Any failure to comply with these restrictions may constitute a violation of the securities laws of any such jurisdiction. To the fullest extent permitted by applicable Law, the companies involved in the Acquisition disclaim any responsibility or liability for the violation of any such restrictions by any person. -

An Examination of Social Media's Role in the Egyptian Arab Spring

Butler University Digital Commons @ Butler University Undergraduate Honors Thesis Collection Undergraduate Scholarship 5-2014 Revolutionizing the Revolution: An Examination of Social Media's Role in the Egyptian Arab Spring Needa A. Malik Butler University Follow this and additional works at: https://digitalcommons.butler.edu/ugtheses Part of the International and Area Studies Commons, Mass Communication Commons, and the Social Media Commons Recommended Citation Malik, Needa A., "Revolutionizing the Revolution: An Examination of Social Media's Role in the Egyptian Arab Spring" (2014). Undergraduate Honors Thesis Collection. 197. https://digitalcommons.butler.edu/ugtheses/197 This Thesis is brought to you for free and open access by the Undergraduate Scholarship at Digital Commons @ Butler University. It has been accepted for inclusion in Undergraduate Honors Thesis Collection by an authorized administrator of Digital Commons @ Butler University. For more information, please contact [email protected]. 1 Revolutionizing the Revolution: An Examination of Social Media’s Role in the Egyptian Arab Spring Revolution A Thesis Presented to the Department of International Studies College of Liberal Arts and Sciences And The Honors Program Of Butler University In Partial Fulfillment of the Requirements for Graduation Honors Needa A. Malik 4/28/14 2 “The barricades today do not bristle with bayonets and rifles, but with phones.”1 Introduction Revolutions are born out of human existence intertwining with synergetic movements. They are the products of deeply disgruntled and dissatisfied individuals seeking an alternative to the present status quo. Revolutions emerge from a number of situations and come in the form of wars, of movements, of social, cultural, and technological upheavals. -

Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media

See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/327186182 Custodians of the internet: Platforms, content moderation, and the hidden decisions that shape social media Book · January 2018 CITATIONS READS 268 6,850 1 author: Tarleton Gillespie Microsoft 37 PUBLICATIONS 3,116 CITATIONS SEE PROFILE All content following this page was uploaded by Tarleton Gillespie on 20 December 2019. The user has requested enhancement of the downloaded file. Custodians of the Internet platforms, content moderation, and the hidden decisions that shape social media Tarleton Gillespie CUSTODIANS OF THE INTERNET CUSTODIANS OF THE INTERNET platforms, content moderation, and the hidden decisions that shape social media tarleton gillespie Copyright © 2018 by Tarleton Gillespie. All rights reserved. Subject to the exception immediately following, this book may not be reproduced, in whole or in part, including illustrations, in any form (beyond that copying permitted by Sections 107 and 108 of the U.S. Copyright Law and except by reviewers for the public press), without written permission from the publishers. The Author has made this work available under the Creative Commons Attribution-Noncommercial-ShareAlike 4.0 International Public License (CC BY-NC-SA 4.0) (see https://creativecommons.org/licenses/by-nc-sa/4.0/). An online version of this work is available; it can be accessed through the author’s website at http://www.custodiansoftheinternet.org. Yale University Press books may be purchased in quantity for educational, business, or promotional use. For information, please e-mail sales.press@yale.edu (U.S. office) or [email protected] (U.K. -

Michelle Zilio – Rapporteur December 16

Michelle Zilio – Rapporteur December 16, 2011 Conference Report (DRAFT 2) Executive Summary The University of Victoria’s Centre for Global Studies hosted the Networks, Social Media and Political Change in the Middle East and North Africa (MENA) conference from November 29 to December 1, 2011 in Victoria, British Columbia, Canada. A group of 27 academics, activists, government and private sector representatives, and social media experts gathered for three days to discuss this timely subject matter. The conference was structured into five informal discussion forums and included a web conference with individuals from the MENA region. The conference began with a review of the role of social media in MENA since the start of the phenomenon known popularly as the Arab Spring. After canvassing the types of hardware/software which might be characterized as constituting “social media”, participants agreed that the role of those media varied from country to country, largely depending on the degree of Internet penetration. A lively discussion ensued on the relationship between traditional (printed press, television networks) and new/social media in MENA over the last year. Participants agreed that the old and new media were interacting and evolving in relation to each other. A combination of old and new media often significantly affected specific event outcomes, although the level of impact depended on circumstances. They also agreed that the media as a whole contributed to a demonstration or cascade effect from one country to another. The role of journalism in MENA was examined, including the differing practices and approaches of the two Al Jazeera networks. The general parameters of social media effects were agreed upon. -

Creating Impact – Achieving Results

30% Club Ireland CEO & Chairs Third Annual Conference Creating Impact – Achieving Results 25 January 2017 #30pcImpact National Gallery of Ireland Clare Street, Dublin 2 3.45pm Registration 4pm Seminar 6:15-7.30pm Reception & Networking @30percentclubIE www.30percentclub.org 30% Club Ireland Creating Impact Achieving Results 25 January 2017 2 Creating Impact Achieving Results 25 January 2017 Agenda 4.00pm Opening Remarks An Tánaiste and Minister for Justice and Equality, Frances Fitzgerald TD 4.15pm Welcome Marie O’Connor, Partner, PwC, Country Lead, 30% Club Ireland 4.25pm Painting the Picture: Research Update 2016 Facilitator: Conor O’Leary, Group Company Secretary, Greencore Anne-Marie Taylor, Management Consultant - Women in Management and Women on Boards: the Irish picture Darina Barrett, Partner, KPMG - The Think Future Study 2016 Dr Sorcha McKenna, Partner, McKinsey – Women Matter: Women in the Workplace 2016 4.45pm Sasha Wiggins introduces Lady Barbara Judge Sasha Wiggins, CEO, Barclays Bank Ireland introduces Lady Barbara Judge, Chair, Institute of Directors 5.00pm Taking Action Facilitator: Melíosa O’Caoimh, Senior Vice President, Northern Trust Anne Heraty, CEO, CPL Resources and President of Ibec Gareth Lambe, Head of Facebook Ireland Pat O’Doherty, CEO, ESB Brian O’Gorman, Managing Partner, Arthur Cox 5.25pm Leadership Commitment and Accountability - Perspective of the Chair Facilitator: Bríd Horan, Former Deputy CEO, ESB Rose Hynes, Chair, Shannon Group and Origin Enterprises Gary Kennedy, Chair, Greencore Gary McGann, Chair, Paddy Power Betfair 5.45pm Diplomacy, Diaspora and Diversity Anne Anderson, Ambassador of Ireland to the United States 6.00pm Future Plans & Closing Remarks Carol Andrews, Global Head of Client Service and Prime Custody (AIS), BNY Mellon Please note that this is an on the record event and views expressed are not necessarily representative of all 30% Club members. -

2020.11.05 Q3 FY12/20 Presentation1.93MB

Financial Results for the 3rd Quarter of Fiscal Year Ending December 31, 2020 November 2020 OUTSOURCING Inc. Securities Code: 2427/TSE 1st Section Copyright (C) 2020 OUTSOURCING Inc. All Rights Reserved. Contents ⚫ P. 2 Our Group’s Social Responsibility and Significance ⚫ P. 5 Consolidated Financial Results for 3Q FY12/20 (IFRS) ⚫ P. 19 The Revised Full-Year Consolidated Financial Forecasts (IFRS) and Dividend Forecast ⚫ P. 25 Status of Group Companies Whose Goodwill is Recorded on OS Account ⚫ P. 37 The Group’s Advantage in Discovering New Demands in Response to COVID-19 ⚫ P. 42 Acquisition Announcement Made on November 4, 2020 ⚫ P. 44 Reference Materials Our Group’s Social Responsibility and Significance New Initiatives in Social Responsibility Due to large, worldwide changes caused by rapid globalization, significant changes have been starting to occur in what the society demands from HR service companies and their roles in society. By redefining our management philosophy, we as the Outsourcing Group will create a framework that will allow us to contribute widely to society through our business activities. Group Mission Management Philosophy Vision of a society to be achieved through our businesses Universal principles for realizing our group mission that underpin our group’s business activities Compliance Implementation Execution Execution Sustainability Policy Business Vision Upward spiral through the Code of Conduct power of employees Business Policy (individual) and the power of the company (unity) -

Cyberactivism in the Egyptian Revolution: How Civic Engagement and Citizen Journalism Tilted the Balance

Cyberactivism in the Egyptian Revolution: How Civic Engagement and Citizen Journalism Tilted the Balance by Dr Sahar Khamis and Katherine Vaughn published in Issue 14 of Arab Media and Society, Summer 2011 Introduction “If you want to free a society, just give them Internet access.” These were the words of 30-year-old Egyptian activist Wael Ghonim in a CNN interview on February 9, 2011, just two days before long-time dictator Hosni Mubarak was forced to step down under pressure from a popular, youthful, and peaceful revolution. This revolution was characterized by the instrumental use of social media, especially Facebook, Twitter, YouTube, and text messaging by protesters, to bring about political change and democratic transformation. This article focuses on how these new types of media acted as effective tools for promoting civic engagement, through supporting the capabilities of the democratic activists by allowing forums for free speech and political networking opportunities; providing a virtual space for assembly; and supporting the capability of the protestors to plan, organize, and execute peaceful protests. Additionally, it explores how these new media avenues enabled an effective form of citizen journalism, through providing forums for ordinary citizens to document the protests; to spread the word about ongoing activities; to provide evidence of governmental brutality; and to disseminate their own words and images to each other, and, most importantly, to the outside world through both regional and transnational media.
In discussing these aspects, special attention will be paid to the communication struggle which erupted between the people and the government, through shedding light on how the Egyptian people engaged in both a political struggle to impose their own agendas and ensure the fulfillment of their demands, while at the same time engaging in a communication struggle to ensure that their authentic voices were heard and that their side of the story was told, thus asserting their will, exercising their agency, and empowering themselves.