Introduction of an Advanced Caching Layer Leveraging the Varnish Technology Stack and Integrating It to the Existing Web Platform

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Guide to Open Source Solutions

White paper: “Guide to open source solutions”, by Smile. PREAMBLE. Smile is a company of engineers specialising in the implementation of open source solutions and the integration of systems relying on open source. Smile is a member of APRIL, the association for the promotion and defence of free software, Alliance Libre, PLOSS, and PLOSS RA, which are regional cluster associations of free software companies. Smile has 600 throughout the World which makes it the largest company in Europe specialising in open source. Since approximately 2000, Smile has been actively monitoring developments in technology, which enables it to discover the most promising open source products, and to qualify and assess them so as to offer its clients the most accomplished, robust and sustainable products. This approach has led to a range of white papers covering various fields of application: content management (2004), portals (2005), business intelligence (2006), PHP frameworks (2007), virtualisation (2007), and electronic document management (2008), as well as PGIs/ERPs (2008). Among the works published in 2009, we would also cite “open source VPN’s”, “Firewall open source flow control”, and “Middleware”, within the framework of the “System and Infrastructure” collection. Each of these works presents a selection of the best open source solutions for the domain in question, their respective qualities, and operational feedback. As open source solutions continue to acquire new domains, Smile will be there to help its clients benefit from these in a risk-free way. Smile is present in the European IT landscape as the integration architect of choice to support the largest companies in the adoption of the best open source solutions.

Freebsd's Firewall Feast

FreeBSD’s Firewall Feast, by Michael W Lucas. FreeBSD is famous for all sorts of fantastic features, such as ZFS, jails, bhyve virtualization, and the Ports Collection. It’s somewhat infamous, however, for having three different firewalls: PF, IPFilter, and IPFW. Where did all these firewalls come from, and why are they all still in the system? The IT industry has repeatedly abused, stretched, and tormented the word firewall to fit all sorts of different products. When someone uses firewall, ask them exactly what they’re talking about. Do they mean a caching HTTP proxy like Squid or Varnish? A generic proxy like relayd? Or a TCP/IP packet filter? All of FreeBSD’s firewalls are packet filters. They control which TCP/IP addresses and ports can connect to the host. [...] gets silently dropped, and which is sent back to the source with a letter of complaint. A packet-forwarding packet filter is the original firewall. The firewalls all have a common core feature set considered the minimum for a modern packet filter. They can track the state of a TCP/IP connection and permit traffic based on existing connections. They can all return resets or silently drop connections. All can manage non-routable addresses and perform network address translation. They all work with lists of rules defining how to respond to traffic from different IP addresses and network ports. Incoming packets are compared to the list of rules until they are permitted or rejected. The firewalls have their own unique features,

Learning HTTP 2.Pdf

Learning HTTP/2: A Practical Guide for Beginners, by Stephen Ludin and Javier Garza. Copyright © 2017 Stephen Ludin, Javier Garza. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected]. Acquisitions Editor: Brian Anderson. Editors: Virginia Wilson and Dawn Schanafelt. Production Editor: Shiny Kalapurakkel. Copyeditor: Kim Cofer. Proofreader: Sonia Saruba. Indexer: Wendy Catalano. Interior Designer: David Futato. Cover Designer: Karen Montgomery. Illustrator: Rebecca Demarest. June 2017: First Edition. Revision History for the First Edition: 2017-05-14: First Release; 2017-10-27: Second Release. See http://oreilly.com/catalog/errata.csp?isbn=9781491962442 for release details. The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Learning HTTP/2, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc. While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work.

Varnish Documentation Release 3.0.2

Varnish Documentation, Release 3.0.2. Varnish Project, January 09, 2012. Contents: 1 Varnish Installation: 1.1 Prerequisites; 1.2 Installing Varnish; 1.3 Compiling Varnish from source; 1.4 Getting hold of us; 1.5 Reporting bugs; 1.6 Upgrading from Varnish 2.1 to 3.0. 2 Using Varnish: 2.1 Backend servers; 2.2 Starting Varnish; 2.3 Logging in Varnish; 2.4 Sizing your cache; 2.5 Put Varnish on port 80; 2.6 Varnish Configuration Language - VCL; 2.7 Statistics; 2.8 Achieving a high hitrate; 2.9 Cookies; 2.10 Vary; 2.11 Pitfall - Vary: User-Agent; 2.12 Purging and banning; 2.13 Edge Side Includes; 2.14 Running inside a virtual machine (VM); 2.15 Advanced Backend configuration

Varnish Http Accelerator

Varnish HTTP accelerator - A 2006 software design. Poul-Henning Kamp, [email protected]
Varnish Cheat-Sheet ● Web acceleration for slow CMS systems ● Narrow focus on server-side speedup – No FTP etc. – Content provider features ● High performance – 32 & 64 bit, large RAM, sendfile, accept filters – SMP/multicore-friendly architecture – 2006 software design – 11 syscalls + 7 locks per cache hit
dictionary:Varnish ● tr. v. var·nished, var·nish·ing, var·nish·es – 1. To cover with varnish. – 2. To give a smooth and glossy finish to. – 3. To give a deceptively attractive appearance to.
RFC2616 and Varnish [Diagram: server domain (RFC2616 origin server, Varnish cache) and client domain (shared HTTP cache, private HTTP cache, HTTP clients), linked over HTTP.]
Client Cache Situation ● Origin servers are adversarial. ● Anything the origin server says is law – ... if we can make sense of it. ● If in doubt: don't cache. ● Be semantically transparent at any cost. ● If origin server does not reply: error.
Server Cache Situation ● Backend (origin server) is on our side – More precisely: we are on its side. ● We might be responsible for modifying the origin server's instructions. – Change TTL, rewrite URLs etc. ● Whatever happens: protect the backend. ● If backend does not reply: do something!
Content Provider Features ● Instant URL invalidation – Regexp matching – Easy integration with CMS systems ● Each object checked max 1 time – When used next time – Many objects will expire without being checked.
Content Provider Features ● Varnish Configuration Language – Full expiry time control – Load/situation mitigation – Content substitution – URL editing ● Prefetching (v2 feature) – Inspect object usage stats – Compression for bandwidth savings
Varnish Config Language ● Simple domain-specific language – Compiled via C language to binary ● Transparently handled by manager process.

An Introduction to Apache Traffic Server

An introduction to Apache Traffic Server. Leif Hedstrom, December 2011.

Who am I? • One of the drivers to open source Traffic Server (at Yahoo) • Committer for Apache Traffic Server • VP of Apache Traffic Server PMC • ASF member • Go Daddy’s Principal Architect for Hosting. [email protected] @zwoop +zwoop

History of Traffic Server [Timeline, 1995 to 2010: Inktomi Traffic Server, Yahoo Traffic Server, Apache Traffic Server.]

Plenty of FOSS Proxy Servers

| Features | ATS | HAproxy | nginx | Squid | Varnish | mod_proxy |
|---|---|---|---|---|---|---|
| Worker Threads | Y | N | N | N | Y | Y |
| Multi-Process | N | Y | Y | N | Y | Y |
| Event-driven | Y | Y | Y | Y | sometimes | Y |
| Plugin APIs | Y | N | Y | part | Y | Y |
| Forward Proxy | Y | N | N | Y | N | Y |
| Reverse Proxy | Y | Y | Y | Y | Y | Y |
| Transp. Proxy | Y | Y | N | Y | N | N |
| Load Balancer | weak | Y | Y | Y | Y | Y |
| Persistent Cache | Y | N | Y | Y | sorta | Y |
| ESI | Y | N | N | Y | kinda | N |
| ICP | soon | N | N | Y | N | N |
| Keep-Alive | Y | N | Y | Y | Y | Y |
| SSL | Y | N | Y | Y | N | Y |
| Pipeline | Y | N | Y | Y | N | Y |

Mandatory useless benchmark … [Chart: throughput (req/sec) for ATS 2.1.9, Nginx 0.8.53, and Varnish 2.1.5.]

Less useless benchmark … [Chart: throughput (req/sec) and time to first response (latency, ms) for ATS 2.1.9, Nginx 0.8.53, and Varnish 2.1.5.]

Intermediaries - Forward Proxy. The browser (UA) is explicitly configured to use the FP server as a proxy. [Diagram: the browser sends "GET http://c.com/ HTTP/1.1" to the forward proxy, which sends "GET / HTTP/1.1" with "Host: c.com" to Web site C; Web sites A and B are also shown.]

Intermediaries - Reverse Proxy. The reverse proxy has explicit rules for a.com, b.com and c.com.

Caching Techniques for High-Performance Web Map Services

Multidisciplinary Research on Geographical Information in Europe and Beyond. Proceedings of the AGILE'2012 International Conference on Geographic Information Science, Avignon, April 24-27, 2012. ISBN: 978-90-816960-0-5. Editors: Jérôme Gensel, Didier Josselin and Danny Vandenbroucke. Caching techniques for high-performance Web Map Services. Alexander J. Loechel, University of the Bundeswehr, Munich, Germany, [email protected]; Stephan Schmid, University of the Bundeswehr, Munich, Germany, [email protected]. Abstract: The demand for digital maps on the Internet has increased considerably in the last few years. Therefore the performance of web mapping services is becoming more and more important. This paper introduces different caching techniques for high performance serving of standardized OGC WMS. It describes and examines different caching mechanisms based on tile caching, reverse proxy caching and web application acceleration. Furthermore it demonstrates benefits and problems and data can be modified for different caching techniques. The paper outlines the advantages of WMS caching systems and investigates the behavior of these systems with an increasing number of concurrent requests using benchmarking tests. This includes the examination of applicability of the INSPIRE level of service agreement for view services. Keywords: Caching, INSPIRE, Benchmark, Generic approach, WMS. 1 Introduction: The use of maps on web pages has increased in the last few years. Professional mapping services like Google Maps, Bing Maps and other companies dominate the market. The [...] - Transaction time represents the time that passes while the web service is completing one complete transaction. - Latency is the round-trip delay (RTD) between sending a request and receiving the response.

Web Acceleration Mechanics

Web Acceleration Mechanics. Alexander Krizhanovsky, Tempesta Technologies, Inc. [email protected]
Who am I? CEO at Tempesta Technologies, Inc. Custom software development since 2008: ● Network security: WAF, VPN, DPI etc., e.g. Positive Technologies AF, “Visionar” Gartner magic quadrant’15 ● Databases: one of the top MariaDB contributors ● Performance tuning. Tempesta FW – Linux Application Delivery Controller
Tempesta FW: Application Delivery Controller (ADC): Web accelerator; Load balancing; Backend connections management; TLS termination; Protocol downgrade; Cache responses
Agenda & Disclaimer. Mechanics: ● HTTP connections & messages management ● HTTP decoders & parsers ● Web caches ● Network I/O ● Multitasking ● TLS. The web accelerators are mentioned only as implementation examples. Some software is just more familiar to me.
HTTP connections & messages management. Server connections: New connections or persistent connections (usual); HTTP keep-alive connections; Keep-Alive: timeout=5, max=10000; Reestablish closed KA connection; New connections if all are busy; N backend connections = N backend requests in-flight; DDoS and flash crowds: as many server connections as client connections; Run out of port numbers
HTTP/1 message pipelining: Mostly unused by proxies; Squid, Tempesta FW, Polipo; Messages multiplexing; Forwarding and reforwarding issues; Security issues ● Breaking authentication ● HTTP Response splitting
HTTP Response Splitting attack (Web cache poisoning) [CRLF CRLF] Client: /redir_lang.jsp?lang=foobar%0d%0aContent-Length:%200%0d%0a%0d%0a HTTP/1.1%20200%20OK%0d%0aContent-Type:%20text/html%0d%0a

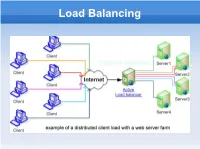

Load Balancing

Load Balancing. Contents: What is Load Balancing; Load Balancer and Back-End; Layer-2 Load Balancing; Layer-4 Load Balancing; Layer-7 Load Balancing; Persistence; DNS Load Balancing; Database Load Balancing; Load-Balancing Clusters; More Software Solutions
What is Load Balancing? Load balancing is a computer networking methodology to distribute workload across multiple resources to: achieve optimal resource utilization; maximize throughput; minimize response time; avoid overload
Load Balancer and Back-End: A Load Balancer (Front-End Server) is usually used; Receives computed outputs from several Back-End Servers; In Multitier architecture, terminology of designs may include Stovepipes, Bowties
Load Balancer and Back-End: Redirects traffic according to algorithm/availability/traffic but stays as a mediator between the user and the back-end servers; Server Health Checking through Ping/TCP Connect/HTTP Get Header/HTTP Get Content; Custom checks can be designed too
Layer-2 Load Balancing ● Bonding ● Bond two or more links into a single, higher-bandwidth logical link ● NIC Teaming ● Link Aggregation Control Protocol (LACP)
Layer 4 Load Balancer/Router: MultiLayer Switch; Practically a NAT with port and transaction awareness; Modern load balancing routers can use rules: least load, fastest response times, balancing requests out; if one machine is not up, the router will not send traffic to it; IPVS in the Linux Virtual Server (LVS)
Layer-7 Load Balancing: Reverse Proxy; Parse requests in application layer and distribute requests to servers based on different types of request contents; LoadBalancing.org recommends HAProxy for open-source; Citrix NetScaler and F5 are also said to dominate this market; Also KTCPVS; Layer-7 Load Switching
Persistence: When a user is directed to one server, the user is kept redirected to that server; Source IP Persistence: not good for multiple access behind one IP; Cookie Based: good for HTTP, but there are famous vendors that also do RDP Cookies (HAProxy)
DNS Load Balancing: Using the Zone File; When the Server is down..

HTTP/2: Analysis and Measurements

Universidad Carlos III de Madrid, Escuela Politécnica Superior, Departamento de Ingeniería Telemática. ITT: Sistemas de Telecomunicaciones. Proyecto Fin de Carrera. HTTP/2: Analysis and measurements. Author: José Fernando Calcerrada Cano. Tutor: Marcelo Bagnulo Braun. Cotutor: Anna Maria Mandalari. January 2016. “This, Jen, is the Internet.” Maurice Moss, IT Crowd. Abstract: The upgrade of HTTP, the protocol that powers the Internet of the people, was published as an RFC in May 2015. HTTP/2 aims to improve the user experience by solving well-known problems of HTTP/1.1 and also introducing new features. The main goal of this project is to study the HTTP/2 protocol, its support in software, its deployment and implementation on the Internet, and how the network reacts to an upgrade of the existing protocol. To shed some light on this question we build two experiments. We build a crawler to monitor HTTP/2 adoption across the Internet using the Alexa top 1 million websites as a sample. We find that 22,653 servers announce support for HTTP/2, but only 10,162 websites are served over it. The support for HTTP/2 Upgrade is minimal: just 16 servers support it and only 10 of them load the content of the websites over HTTP/2 on plain TCP. Motivated by those numbers, we investigate how the new protocol behaves with the middleboxes along the path in the network.

Optimization of Apache Web Server Using Varnish Web Cache and Nginx Reverse Proxy (Optimasi Apache Web Server Menggunakan Varnish Web Cache Dan Reverse Proxy Nginx)

OPTIMIZATION OF APACHE WEB SERVER USING VARNISH WEB CACHE AND NGINX REVERSE PROXY. Undergraduate thesis submitted as one of the requirements for the degree of Sarjana Komputer (Bachelor of Computer Science), Informatics Engineering Study Program. By Anindya Putri Arunawati, 4611416030. Department of Computer Science, Faculty of Mathematics and Natural Sciences, Universitas Negeri Semarang, 2020. MOTTO AND DEDICATION. MOTTO • If all you can do is crawl, then start crawling (Rumi) • The more one trusts in Allah, the less one complains in life (Ust. Hanan Attaki) • Never tire of praying and entrust everything to Allah; Allah arranges everything (Anindya). DEDICATION: I dedicate this thesis to: • My parents, Mr. Achmad Daserun and Mrs. Watini, who have poured out their sweat to pay for my education and who have always given their love, prayers, and support. • My younger sibling, Hilal Hubdin, who has given support and constant prayers. • My friends in the Department of Computer Science, Faculty of Mathematics and Natural Sciences, and my friends at Universitas Negeri Semarang. • All those who cannot be named one by one and who helped until the writing of this thesis was completed. • My alma mater, Universitas Negeri Semarang. PREFACE: The author offers praise and thanks to Allah Subhanahu wa ta'ala, by whose grace and guidance the author was able to complete the thesis entitled “Optimasi Apache Web Server Menggunakan Varnish Web Cache Dan Reverse Proxy Nginx”. The author realizes that this thesis would not have been completed without the support and help of various parties. The author therefore wishes to thank: 1. Prof. Dr. Fathur Rokhman, M.Hum., Rector of Universitas Negeri Semarang. 2. Dr. Sugianto M.Si., Dean of the Faculty of Mathematics and Natural Sciences, Universitas Negeri Semarang.

Varnish-Book.Pdf

Authors: Tollef Fog Heen (Varnish Software), Kristian Lyngstøl (Varnish Software), Jérôme Renard (39Web). Copyright: Varnish Software AS 2010-2013, Redpill Linpro AS 2008-2009. Versions: Documentation version-4.7-58-g56edce8-dirty / Tested for Varnish 3.0.4. Date: 2013-07-08. License: The material is available under a CC-BY-NC-SA license. See http://creativecommons.org/licenses/by-nc-sa/3.0/ for the full license. For questions regarding what we mean by non-commercial, please contact [email protected]. Contact: For any questions regarding this training material, please contact [email protected]. Web: http://www.varnish-software.com/book/ Source: http://github.com/varnish/Varnish-Book/ Contents: 1 Introduction: 1.1 About the course; 1.2 Goals and Prerequisites; 1.3 Introduction to Varnish; 1.4 Design principles; 1.5 How objects are stored. 2 Getting started: 2.1 Configuration; 2.2 Command line configuration; 2.3 Configuration files; 2.4 Defining a backend in VCL; 2.5 Exercise: Installation; 2.6 Exercise: Fetch data through Varnish; 2.7 Log data; 2.8 varnishlog; 2.9 Varnishlog tag examples; 2.10 varnishlog options; 2.11 varnishstat; 2.12 The management interface; 2.13 Exercise: Try out the tools. 3 Tuning: 3.1 Process Architecture (3.1.1 The management process; 3.1.2 The child process; 3.1.3 VCL compilation); 3.2 Storage backends; 3.3 The shared memory log; 3.4 Tunable parameters; 3.5 Threading model; 3.6 Threading parameters (3.6.1 Details of threading parameters