Tropical Cyclone Intensity Estimation Using Multi-Dimensional Convolutional Neural Networks from Geostationary Satellite Data

Recommended publications

Dropsonde Observations of Intense Typhoons in 2017 and 2018 in the T-PARCII

EGU General Assembly 2020, May 6, 2020, Online, 4-8 May 2020. Tropical meteorology and tropical cyclones (AS1.22). Kazuhisa Tsuboki (1), Hiroyuki Yamada (2), Tadayasu Ohigashi (3), Taro Shinoda (1), Kosuke Ito (2), Munehiko Yamaguchi (4), Tetsuo Nakazawa (4), Hisayuki Kubota (5), Yukihiro Takahashi (5), Nobuhiro Takahashi (1), Norio Nagahama (6), and Kensaku Shimizu (6). Affiliations: (1) Institute for Space-Earth Environmental Research, Nagoya University, Nagoya, 464-8601 Japan; (2) University of the Ryukyus, Okinawa, Japan; (3) National Research Institute for Earth Science and Disaster Resilience, Tsukuba, Japan; (4) Meteorological Research Institute, Japan Meteorological Agency, Tsukuba, Japan; (5) Hokkaido University, Sapporo, Japan; (6) Meisei Electric Co. Ltd., Isesaki, Japan. Violent winds and heavy rainfall associated with typhoons cause severe disasters in East Asia, including Japan. To prevent and mitigate typhoon disasters, accurate estimation and prediction of typhoon intensity are as important as track forecasts. However, intensity data for the most intense typhoon categories, such as supertyphoons, have had large errors since US aircraft reconnaissance was terminated in 1987. Typhoon intensity prediction has also not improved sufficiently over the last few decades. To address these problems, in situ aircraft observations of typhoons are indispensable. The main objective of the T-PARCII (Tropical cyclone-Pacific Asian Research Campaign for Improvement of Intensity estimations/forecasts) project is to improve typhoon intensity estimations and forecasts. Slide captions: payment of insurance due to disasters in Japan; flooding of the Kinu River on Sept.

Environmental Influences on Sinking Rates and Distributions Of

www.nature.com/scientificreports (open access). Environmental influences on sinking rates and distributions of transparent exopolymer particles after a typhoon surge at the Western Pacific. M. Shahanul Islam (1,3), Jun Sun (2,3,*), Guicheng Zhang (3), Zhuo Chen (3) & Hui Zhou (4). A multidisciplinary approach was used to investigate the causes of the distributions and sinking rates of transparent exopolymer particles (TEPs) during September–October 2017 in the Western Pacific Ocean (WPO); the study period fell close to the date of a northwest typhoon surge. The study discusses the impact of biogeophysical features on TEPs and their sinking rates (sTEP) at depths of 0–150 m. TEP concentrations were higher in areas adjacent to the Kuroshio current and in the bottom water layer of the Mindanao upwelling zone, owing to the widespread distribution of cyanobacteria, i.e., Trichodesmium hildebrandti and T. theibauti. The significant positive regressions of TEP concentrations on Chl-a contents in eddy-driven areas (R² = 0.73, especially at 100 m (R² = 0.75)) support this hypothesis. However, low TEP concentrations and low sTEP were observed at mixed layer depths (MLDs) in the upwelling zone (Mindanao). Conversely, high TEP concentrations and high sTEP were found at the bottom of the downwelling zone (Halmahera). The geophysical directions of the eddies may have caused these conditions. In demonstrating these relations, the averaged results showed a negative linear relationship between TEP concentrations and sTEP (R² = 0.41–0.65) at such eddies. Additionally, regression curves (R² = 0.78) indicated that atmospheric pressure played a key role in the changes in TEPs throughout the study area.

4. the TROPICS—HJ Diamond and CJ Schreck, Eds

a. Overview: H. J. Diamond and C. J. Schreck. The Tropics in 2017 were dominated by neutral El Niño–Southern Oscillation (ENSO) conditions during most of the year, with the onset of La Niña conditions occurring during boreal autumn. Although the year began ENSO-neutral, it initially featured cooler-than-average sea surface temperatures (SSTs) in the central and east-central equatorial Pacific, along with lingering La Niña impacts in the atmospheric circulation. These conditions followed the abrupt end of a weak and short-lived La Niña during 2016, which lasted from the July–September season until late December. Equatorial Pacific SST anomalies warmed considerably during the first several months of 2017 and by late boreal spring and early summer, the anomalies were just shy of reaching El Niño thresh[...]

[...] Pacific, South Indian, and Australian basins were all particularly quiet, each having about half their median ACE. Three tropical cyclones (TCs) reached the Saffir–Simpson scale category 5 intensity level, two in the North Atlantic and one in the western North Pacific basins. This number was less than half of the eight category 5 storms recorded in 2015 (Diamond and Schreck 2016), and was one fewer than the four recorded in 2016 (Diamond and Schreck 2017). The editors of this chapter would like to insert two personal notes recognizing the passing of two giants in the field of tropical meteorology. Charles J. Neumann passed away on 14 November 2017, at the age of 92. Upon graduation from MIT in 1946, Charlie volunteered as a weather officer in the Navy’s first airborne typhoon reconnaissance unit in the Pacific.

Action Proposed

ESCAP/WMO Typhoon Committee, Fiftieth Session, 28 February - 3 March 2018, Ha Noi, Viet Nam. FOR PARTICIPANTS ONLY. WRD/TC.50/7.2, 28 February 2018. ENGLISH ONLY. SUMMARY OF MEMBERS’ REPORTS 2017 (submitted by AWG Chair). Summary and Purpose of Document: This document presents an overall view of the progress and issues in meteorology, hydrology and DRR aspects among TC Members with respect to tropical cyclones and related hazards in 2017. Action Proposed: The Committee is invited to: (a) take note of the major progress and issues in meteorology, hydrology and DRR aspects under the Key Result Areas (KRAs) of TC as reported by Members in 2017; and (b) review the Summary of Members’ Reports 2017 in APPENDIX B with the aim of adopting an “Executive Summary” for distribution to Members’ governments and other collaborating or potential sponsoring agencies for information and reference. APPENDICES: 1) Appendix A – DRAFT TEXT FOR INCLUSION IN THE SESSION REPORT 2) Appendix B – SUMMARY OF MEMBERS’ REPORTS 2017. APPENDIX A: DRAFT TEXT FOR INCLUSION IN THE SESSION REPORT. 6.2 SUMMARY OF MEMBERS’ REPORTS. 1. The Committee took note of the Summary of Members’ Reports 2017 as submitted for the 12th IWS in Jeju, Republic of Korea, highlighting the key tropical cyclone impacts on Members in 2017 and the major activities undertaken by Members under the various KRAs and components during the year. 2. The Committee expressed its appreciation to the AWG Chair for preparing the Summary of Members’ Reports. It noted that the new KRA and supporting Priorities structure contained in the new TC Strategic Plan 2017-2021 caused some confusion in the format of the Member Reports.

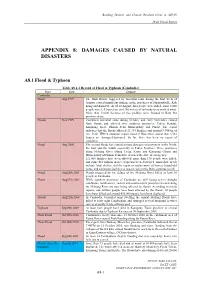

Appendix 8: Damages Caused by Natural Disasters

Building Disaster and Climate Resilient Cities in ASEAN, Draft Final Report. APPENDIX 8: DAMAGES CAUSED BY NATURAL DISASTERS. A8.1 Flood & Typhoon. Table A8.1.1 Record of Flood & Typhoon (Cambodia). Columns: Place, Date, Damage.

Cambodia, Flood, Aug 1999: The flash floods, triggered by torrential rains during the first week of August, caused significant damage in the provinces of Sihanoukville, Koh Kong and Kam Pot. As of 10 August, four people had been killed, some 8,000 people were left homeless, and 200 meters of railroad were washed away. More than 12,000 hectares of rice paddies were flooded in Kam Pot province alone.

Cambodia, Floods, Nov 1999: Continued torrential rains during October and early November caused flash floods and affected five southern provinces: Takeo, Kandal, Kampong Speu, Phnom Penh Municipality and Pursat. The report indicates that the floods affected 21,334 families and around 9,900 ha of rice fields. IFRC's situation report dated 9 November stated that 3,561 houses were damaged or destroyed. So far, there has been no report of casualties.

Cambodia, Flood, Aug 2000: The second flood caused serious damage to provinces in the North, the East and the South, especially Takeo Province. Three provinces along the Mekong River (Stung Treng, Kratie and Kompong Cham) and the Municipality of Phnom Penh declared a state of emergency. 121,000 families were affected, more than 170 people were killed, and some $10 million in rice crops was destroyed. Immediate needs include food, shelter, and the repair or replacement of homes, household items, and sanitation facilities as water levels in the Delta continue to fall.

Research Article Application of Buoy Observations in Determining Characteristics of Several Typhoons Passing the East China Sea in August 2012

Hindawi Publishing Corporation, Advances in Meteorology, Volume 2013, Article ID 357497, 6 pages. http://dx.doi.org/10.1155/2013/357497. Research Article. Ningli Huang (1), Zheqing Fang (2), and Fei Liu (1). (1) Shanghai Marine Meteorological Center, Shanghai, China; (2) Department of Atmospheric Science, Nanjing University, Nanjing, China. Correspondence should be addressed to Zheqing Fang. Received 27 February 2013; Revised 5 May 2013; Accepted 21 May 2013. Academic Editor: Lian Xie. Copyright © 2013 Ningli Huang et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. The buoy observation network in the East China Sea is used to help determine the characteristics of tropical cyclone structure in August 2012. When super typhoon “Haikui” made landfall in northern Zhejiang province, it passed over three buoys, the East China Sea Buoy, the Sea Reef Buoy, and the Channel Buoy, which were located within the radii of the 13.9 m/s winds, 24.5 m/s winds, and 24.5 m/s winds, respectively. These buoy observations verified the accuracy of the typhoon intensity determined by the China Meteorological Administration (CMA). The East China Sea Buoy closely observed typhoons “Bolaven” and “Tembin”; these observations provided real-time guidance that helped forecasters better understand the typhoon structure and were also used to quantify the air-sea interface heat exchange during the passage of the storms.

Tropical Cyclones in 2017

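Section 4 TROPICAL CYCLONE STATISTICS AND TABLES

TABLE 4.1 is a list of tropical cyclones in 2017 in the western North Pacific and the South China Sea (i.e. the area bounded by the Equator, 45°N, 100°E and 180°). The dates cited are the residence times of each tropical cyclone within the above-mentioned region and as such might not cover the full life-span. This limitation applies to all other elements in the table.

TABLE 4.2 gives the number of tropical cyclone warnings for shipping issued by the Hong Kong Observatory in 2017, the durations of these warnings and the times of issue of the first and last warnings for all tropical cyclones in Hong Kong's area of responsibility (i.e. the area bounded by 10°N, 30°N, 105°E and 125°E). Times are given in hours and minutes in UTC.

TABLE 4.3 presents a summary of the occasions/durations of the issuing of tropical cyclone warning signals in 2017. The sequence of the signals displayed and the number of tropical cyclone warning bulletins issued for each tropical cyclone are also given. Times are given in Hong Kong Time.

TABLE 4.4 presents a summary of the occasions/durations of the issuing of tropical cyclone warning signals from 1956 to 2017.

TABLE 4.5 gives the annual number of tropical cyclones in Hong Kong's area of responsibility and the annual number of tropical cyclones that required the Observatory to issue tropical cyclone warning signals, from 1956 to 2017.

TABLE 4.6 gives the maximum, minimum and mean durations of each type of tropical cyclone warning signal issued by the Observatory from 1956 to 2017.

TABLE 4.7 is a summary of the meteorological observations recorded in Hong Kong when tropical cyclones affected Hong Kong in 2017. The information includes the position and time of each tropical cyclone's closest approach to Hong Kong and the estimated minimum pressure near its centre at that time, the maximum wind speeds recorded at King's Park, Hong Kong International Airport and Waglan Island, the minimum mean sea-level pressure recorded at the Hong Kong Observatory, and the maximum storm surge (i.e. the excess of the actual water level over that predicted in the tide tables, in metres) recorded at tide stations in Hong Kong.

TABLE 4.8.1 lists the tropical cyclones that came within 600 km of Hong Kong in 2017 and the rainfall they brought to Hong Kong.

TABLE 4.8.2 lists the ten tropical cyclones that brought the most rainfall to Hong Kong in 1884-1939 and 1947-2017, with the associated rainfall data.

TABLE 4.9 gives the meteorological information recorded when the Observatory issued the Hurricane Signal No. 10 from 1946 to 2017, including the distance and bearing of each tropical cyclone's nearest approach to Hong Kong, the minimum mean sea-level pressure recorded at the Observatory, and the maximum 60-minute mean wind speeds and maximum gusts recorded at stations in Hong Kong.

TABLE 4.10 lists the damage caused by tropical cyclones in Hong Kong in 2017. The information is based on reports from government departments and public utility companies and on local newspaper reports.

TABLE 4.11 lists the casualties and damage caused by tropical cyclones in Hong Kong from 1960 to 2017. The information is based on reports from government departments and public utility companies and on local newspaper reports.

TABLE 4.12 presents the verification of the tropical cyclone track forecasts issued by the Observatory in 2017.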

Double Warm-Core Structure of Typhoon Lan (2017) Observed by Dropsondes During T-PARCII

AAS03-15, Japan Geoscience Union Meeting 2018. *Hiroyuki Yamada (1), Kazuhisa Tsuboki (2), Norio Nagahama (3), Kensaku Shimizu (3), Tadayasu Ohigashi (4), Taro Shinoda (2), Kosuke Ito (1), Munehiko Yamaguchi (5), Tetsuo Nakazawa (5). (1) University of the Ryukyus, (2) Nagoya University, (3) Meisei Electric, (4) Kyoto University, (5) Meteorological Research Institute. A jet airplane (Gulfstream-II) with two newly developed GPS dropsonde receivers was used to examine the inner core of Typhoon Lan (2017), as part of the Tropical Cyclones-Pacific Asian Research Campaign for the Improvement of Intensity Estimations/Forecasts (T-PARCII). During the first flight, which included several eye soundings at 0500-0700 UTC on 21 October, the typhoon was in its mature stage with a central pressure of 915 hPa. Satellite imagery showed the annular structure of the typhoon, with an eye roughly 90 km in diameter and surrounding axisymmetric eyewall convection. The flight route was initially designed to go around the eyewall convection, but was modified to go into the eye. This decision was made in flight using a weather avoidance radar. The airplane flew at an altitude of 43,000 feet (~13.7 km), and a total of 8 and 10 dropsondes were deployed in the eye and around the eyewall, respectively. Deployment from this altitude enabled us to examine thermodynamic features of the eye in the lower and middle troposphere and marginally in the upper troposphere. The vertical profile of the eye soundings showed a warm-core structure extending from the lower through the upper troposphere, with two peaks near 3 km (~700 hPa) and above 12 km MSL (< 200 hPa).

2019 Insurance Fact Book

TO THE READER. Imagine a world without insurance. Some might say, “So what?” or “Yes to that!” when reading the sentence above. And that’s understandable, given that often the best experience one can have with insurance is not to receive the benefits of the product at all, after a disaster or other loss. And others, who already have some understanding or even appreciation for insurance, might say it provides protection against financial aspects of a premature death, injury, loss of property, loss of earning power, legal liability or other unexpected expenses. All that is true. We are the financial first responders. But there is so much more. Insurance drives economic growth. It provides stability against risks. It encourages resilience. Recent disasters have demonstrated the vital role the industry plays in recovery, and that without insurance, the impact on individuals, businesses and communities can be devastating. As insurers, we know that even with all that we protect now, the coverage gap is still too big. We want to close that gap. That desire is reflected in changes to this year’s Insurance Information Institute (I.I.I.) Insurance Fact Book. We have added new information on coastal storm surge risk and hail as well as reinsurance and the growing problem of marijuana and impaired driving. We have updated the section on litigiousness to include tort costs and compensation by state, and assignment of benefits litigation, a growing problem in Florida. As always, the book provides valuable information on:
• World and U.S. catastrophes
• Property/casualty and life/health insurance results and investments
• Personal expenditures on auto and homeowners insurance
• Major types of insurance losses, including vehicle accidents, homeowners claims, crime and workplace accidents
• State auto insurance laws
The I.I.I.

The Contribution of Forerunner to Storm Surges Along the Vietnam Coast

Journal of Marine Science and Engineering, Article. Tam Thi Trinh (1,2,*), Charitha Pattiaratchi (2) and Toan Bui (2,3). (1) Vietnam National Centre for Hydro-Meteorological Forecasting, No. 8 Phao Dai Lang Street, Lang Thuong Commune, Dong Da District, Hanoi 11512, Vietnam; (2) Oceans Graduate School and the UWA Oceans Institute, The University of Western Australia, 35 Stirling Highway, Perth WA 6009, Australia; (3) Faculty of Marine Sciences, Hanoi University of Natural Resource and Environment, No. 41A Phu Dien Road, Phu Dien Commune, North-Tu Liem District 11916, Hanoi, Vietnam. * Correspondence: Tel.: +84-988-132-520. Received: 3 May 2020; Accepted: 7 July 2020; Published: 10 July 2020. Abstract: Vietnam, located in the tropical region of the northwest Pacific Ocean, is frequently impacted by tropical storms. Extreme water level events associated with tropical storms are often unpredicted and put coastal infrastructure and the safety of coastal populations at risk. Hence, an improved understanding of the nature of storm surges and their components along the Vietnam coast is required. For example, a higher than expected extreme storm surge during Typhoon Kalmegi (2014) highlighted the lack of understanding of the characteristics of storm surges in Vietnam. Physical processes that influence the non-tidal water level associated with tropical storms can persist for up to 14 days, beginning 3–4 days prior to storm landfall and ceasing up to 10 days after the landfall of the typhoon.

Recent Advances in Research and Forecasting of Tropical Cyclone Rainfall

TROPICAL CYCLONE RESEARCH AND REVIEW, VOLUME 7, NO. 2. KEVIN CHEUNG (1), ZIFENG YU (2), RUSSELL L. ELSBERRY (3), MICHAEL BELL (4), HAIYAN JIANG (5), TSZ CHEUNG LEE (6), KUO-CHEN LU (7), YOSHINORI OIKAWA (8), LIANGBO QI (9), ROBERT F. ROGERS (10), KAZUHISA TSUBOKI (11). (1) Macquarie University, Sydney, Australia; (2) Shanghai Typhoon Institute, Shanghai, China; (3) University of Colorado Colorado Springs, Colorado Springs, USA; (4) Colorado State University, Fort Collins, USA; (5) Florida International University, Miami, USA; (6) Hong Kong Observatory, Hong Kong, China; (7) Pacific Science Association; (8) RSMC Tokyo/Japan Meteorological Agency, Tokyo, Japan; (9) Shanghai Meteorological Service, Shanghai, China; (10) NOAA/AOML/Hurricane Research Division, Miami, USA; (11) Nagoya University, Nagoya, Japan. ABSTRACT: In preparation for the Fourth International Workshop on Tropical Cyclone Landfall Processes (IWTCLP-IV), a summary of recent research studies and the forecasting challenges of tropical cyclone (TC) rainfall has been prepared. The extreme rainfall accumulations in Hurricane Harvey (2017) near Houston, Texas and Typhoon Damrey (2017) in southern Vietnam are examples of the TC rainfall forecasting challenges. Some progress is being made in understanding the internal rainfall dynamics via case studies. Environmental effects such as vertical wind shear and terrain-induced rainfall have been studied, as well as the rainfall relationships with TC intensity and structure. Numerical model predictions of TC-related rainfall have been improved via data assimilation, microphysics representation, improved resolution, and ensemble quantitative precipitation forecast techniques. Some attempts have been made to improve the verification techniques as well. A basic forecast challenge for TC-related rainfall is monitoring the existing rainfall distribution via satellite or coastal radars, or from over-land rain gauges.

Innovation for Water Infrastructure Development in the Mekong Region

The Development Dimension. Innovation for Water Infrastructure Development in the Mekong Region. This work is published under the responsibility of the Secretary-General of the OECD. The opinions expressed and arguments employed herein do not necessarily reflect the official views of the member countries of the OECD or its Development Centre, of the ADBI or of the Mekong Institute. By making any designation of or reference to a particular territory, city or geographic area, or by using the term “country” in this document, neither the OECD, ADBI, or the Mekong Institute intend to make any judgments as to the legal or other status of any territory, city or area. This publication, as well as any data and any map included herein are without prejudice to the status of or sovereignty over any territory, to the delimitation of international frontiers and boundaries and to the name of any territory, city or area. The names of countries and territories used in this joint publication follow the practice of the OECD. Please cite this publication as: OECD/ADBI/Mekong Institute (2020), Innovation for Water Infrastructure Development in the Mekong Region, The Development Dimension, OECD Publishing, Paris, https://doi.org/10.1787/167498ea-en. ISBN 978-92-64-81904-7 (print), ISBN 978-92-64-90245-9 (pdf). The Development Dimension: ISSN 1990-1380 (print), ISSN 1990-1372 (online). Photo credits: Cover design by Aida Buendía (OECD Development Centre) on the basis of an image from © Macrovector/Shutterstock.com. Corrigenda to publications may be found on line at: www.oecd.org/about/publishing/corrigenda.htm.