UC Berkeley Electronic Theses and Dissertations

Recommended publications

References Please Help Making This Preliminary List As Complete As Possible!

Cypraeidae - important references. Please help making this preliminary list as complete as possible!

ABBOTT, R.T. (1965) Cypraea arenosa Gray, 1825. Hawaiian Shell News 14(2):8.
ABREA, N.S. (1980) Strange goings on among the Cypraea ziczac. Hawaiian Shell News 28(5):4.
ADEGOKE, O.S. (1973) Paleocene mollusks from Ewekoro, southern Nigeria. Malacologia 14:19-27, figs. 1-2, pls. 1-2.
ADEGOKE, O.S. (1977) Stratigraphy and paleontology of the Ewekoro Formation (Paleocene) of southeastern Nigeria. Bulletins of American Paleontology 71(295):1-379, figs. 1-6, pls. 1-50.
AIKEN, R.P. (2016) Description of two undescribed subspecies and one fossil species of the Genus Cypraeovula Gray, 1824 from South Africa. Beautifulcowries Magazine 8:14-22.
AIKEN, R., JOOSTE, P. & ELS, M. (2010) Cypraeovula capensis - A specie of Diversity and Beauty. Strandloper 287:16 ff.
AIKEN, R., JOOSTE, P. & ELS, M. (2014) Cypraeovula capensis. A species of diversity and beauty. Beautifulcowries Magazine 5:38-44.
ALLAN, J. (1956) Cowry Shells of World Seas. Georgian House, Melbourne, Australia, 170 p., pls. 1-15.
AMANO, K. (1992) Cypraea ohiroi and its associated molluscan species from the Miocene Kadonosawa Formation, northeast Japan. Bulletin of the Mizunami Fossil Museum 19:405-411, figs. 1-2, pl. 57.
ANCEY, C.F. (1901) Cypraea citrina Gray. The Nautilus 15(7):83.
ANONYMOUS. (1971) Malacological news. La Conchiglia 13(146-147):19-20, 5 unnumbered figs.
ANONYMOUS. (1925) Index and errata. The Zoological Journal 1:[593]-[603], January.
ANONYMOUS. (1889) Cypraea venusta Sowb. The Nautilus 3(5):60.
ANONYMOUS. (1893) Remarks on a new species of Cypraea. …

An Unusual Ovulid from the Bartonian (Middle Eocene) of Catalonia (Spain)

Olianatrivia riberai n. gen., n. sp. (Mollusca, Caenogastropoda), an unusual ovulid from the Bartonian (middle Eocene) of Catalonia (Spain)

Luc DOLIN, 1, rue des Sablons, Mesvres, F-37150 Civray-de-Touraine (France)
Josep BIOSCA-MUNTS, Universitat Politècnica de Catalunya, Museu de Geologia « Valenti Masachs », Av. Bases de Manresa, 61-73, E-08242 Manresa (Spain)
David PARCERISA, Universitat Politècnica de Catalunya, Dpt. d'Enginyeria Minera i Recursos Naturals, Av. Bases de Manresa, 61-73, E-08242 Manresa (Spain)

Dolin L., Biosca-Munts J. & Parcerisa D. 2013. Olianatrivia riberai n. gen., n. sp. (Mollusca, Caenogastropoda), une Ovulidae singulière du Bartonien (Éocène moyen) de Catalogne (Espagne). Geodiversitas 35 (4): 931-939. http://dx.doi.org/10.5252/g2013n4aX

ABSTRACT
Shells of an ovulid species with entirely unusual characters were collected in the axial part of the Oliana anticline, in Catalonia: Olianatrivia riberai n. gen., n. sp. (Mollusca, Caenogastropoda), whose shape and ornamentation recall certain Triviinae. In agreement with the known chronostratigraphic dates established in the basin, the molluscan fauna, which was displaced from a reef facies, is dated to the upper Bartonian (middle Eocene). It is proposed to place O. riberai n. gen., n. sp. within the Pediculariinae, a subfamily of the Ovulidae (Cypraeoidea), close to Cypropterina ceciliae (De Gregorio, 1880) from the lower Lutetian of the Verona area.

KEYWORDS
Mollusca, Gastropoda, Cypraeoidea, Ovulidae, Pediculariinae, Bartonian, South Pyrenean Basin, Spain, new genus, new species.

GEODIVERSITAS • 2013 • 35 (4) © Publications Scientifiques du Muséum national d'Histoire naturelle, Paris. www.geodiversitas.com

Contributions to the Knowledge of the Ovulidae. XVI. The Higher Systematics

ZOBODAT (www.zobodat.at), Zoological-Botanical Database, Digital Literature. Journal: Spixiana, Zeitschrift für Zoologie; Year: 2007; Volume: 030; Author: Fehse, Dirk. © Zoologische Staatssammlung München/Verlag Friedrich Pfeil; download www.pfeil-verlag.de

SPIXIANA 30 (1): 121–125, München, 1 May 2007, ISSN 0341–8391

Contributions to the knowledge of the Ovulidae. XVI. The higher systematics. (Mollusca: Gastropoda)

Dirk Fehse

Fehse, D. (2007): Contributions to the knowledge of the Ovulidae. XVI. The higher systematics. (Mollusca: Gastropoda). Spixiana 30/1: 121-125

The higher systematics of the family Ovulidae is reorganised on the basis of recently published studies of the radulae, shell and animal morphology and the 16S rRNA gene. The family is divided into four subfamilies. Two new subfamilies are introduced: Prionovolvinae nov. and Aclyvolvinae nov. Because the apomorphies and the results of the 16S rRNA gene study conflict with regard to the Pediculariidae, the Pediculariidae are excluded from the Ovulidae as a subfamily.

Dirk Fehse, Nippeser Str. 3, D-12524 Berlin, Germany

Introduction
In conclusion of the recently published studies on the shell morphology, radulae, anatomy and 16S rRNA gene (Fehse 2001, 2002, Simone 2004, Schia- … funiculum. At first sight, a greater surprise seemed to be the genetic similarity of Ovula ovum (Linnaeus, 1758) and Volva volva (Linnaeus, 1758), but a closer examination of the shells already indicates that O. …

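Contributions to the Knowledge of the Pediculariidae (Mollusca, Gastropoda, Cypraeoidea). 2. On the Occurrence of the Genus Eotrivia in the Eocene of the Ukraine, with the Description of a New Species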

Cainozoic Research, 8(1-2), pp. 29-34, December 2011

Contributions to the knowledge of the Pediculariidae (Mollusca, Gastropoda, Cypraeoidea) 2. On the occurrence of the genus Eotrivia Schilder, 1924 in the Eocene of the Ukraine, with the description of a new species

Dirk Fehse, Nippeser Str. 3, D-12524 Berlin, Germany

Received 18 August 2010; revised version accepted 16 August 2011

The genus Eotrivia, formerly known from West European Eocene deposits, is now confirmed for the easternmost Paratethys. A second, still unknown Eotrivia besides Eotrivia faracii is described from the Eocene of the Ukraine as Eotrivia procera sp. nov.

KEY WORDS: Cypraeoidea, Eocypraeidae, Pediculariidae, Eotrivia, new species, new combinations, fossil, Eocene, Ukraine.

Introduction
Recently some shells from Mandrikovka, Dnepropetrovsk region of Ukraine, became available for study. Among those shells, several species of the families Eocypraeidae Schilder, 1924 and Pediculariidae Adams & Adams, 1854 were found. These species, formerly known from the Middle Eocene of Western Europe, are unusually common at Mandrikovka. The species of the Eocypraeidae are Apiocypraea sellei (de Raincourt, 1874), Oxycypraea delphinoides (Cossmann, 1886), Oxycypraea fourtaui (Oppenheim, 1906) comb. nov., Cyproglobina parvulorbis (de Gregorio, 1880), Cyproglobina pisularia (de Gregorio, … clarified by geological studies. In the following, the term ‘Mandrikovka beds, Eocene’ is therefore used.

In Part 1 (Fehse & Vician, 2008) of this small series of papers on the Pediculariidae, the identity of Projenneria neumayri (Hilber, 1879) is discussed and Projenneria albopunctata Fehse & Vician, 2008 is described.

Abbreviations: To denote the repositories of material referred to in the text, the following abbreviations are used:
DFB: collection Dirk Fehse, Berlin, Germany.
ZSM: Zoological State Collection, Munich, Germany.

Molecular Diversity, Phylogeny, and Biogeographic Patterns of Crustacean Copepods Associated with Scleractinian Corals of the Indo-Pacific

Dissertation by Sofya Mudrova, in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy of Science, King Abdullah University of Science and Technology, Thuwal, Kingdom of Saudi Arabia, November 2018

EXAMINATION COMMITTEE PAGE
The dissertation of Sofya Mudrova is approved by the examination committee.
Committee Chairperson: Dr. Michael Lee Berumen
Committee Co-Chair: Dr. Viatcheslav Ivanenko
Committee Members: Dr. James Davis Reimer, Dr. Takashi Gojobori, Dr. Manuel Aranda Lastra

COPYRIGHT PAGE
© November 2018 Sofya Mudrova. All rights reserved.

ABSTRACT
Molecular diversity, phylogeny and biogeographic patterns of crustacean copepods associated with scleractinian corals of the Indo-Pacific. Sofya Mudrova

Coral reefs are more biodiverse than any other marine ecosystem, and significant research has focused on coral taxonomy, physiology, ecology, and coral-associated fauna. Yet little is known about symbiotic copepods, the abundant microscopic crustaceans that inhabit almost every living coral colony. In this thesis, I investigate the genetic diversity of different groups of copepods associated with reef-building corals in distinct parts of the Indo-Pacific, determine species boundaries, and reveal patterns of biogeography, endemism, and host specificity in these symbiotic systems. A non-destructive method of DNA extraction allowed me to use an integrated approach, combining molecular and taxonomic methods, to assess the diversity of different copepod groups and to determine species boundaries. Overall, for this thesis, I processed and analyzed 1,850 copepod specimens, representing 269 MOTUs (molecular operational taxonomic units), collected from 125 colonies of 43 species of scleractinian corals at 11 locations in the Indo-Pacific.

CONE SHELLS - CONIDAE MNHN Koumac 2018

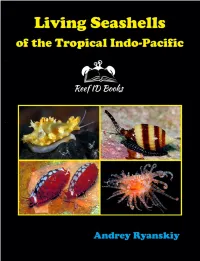

Living Seashells of the Tropical Indo-Pacific: Photographic guide with 1500+ species covered. Andrey Ryanskiy

INTRODUCTION, COPYRIGHT, ACKNOWLEDGMENTS

INTRODUCTION
Seashells, or sea shells, are the hard exoskeletons of mollusks such as snails, clams, and chitons. For most people, acquaintance with mollusks began with empty shells, which often delight the eye with their variety of shapes and colors. Conchology, the study of mollusk shells, dates back to the 17th century; modern malacology, by contrast, studies mollusks as whole organisms. Today more and more people interact with the ocean as divers, snorkelers and beachgoers, and they often find not empty shells but live mollusks: living shells whose appearance differs significantly from museum specimens. This book serves as a tool for identifying such animals. It covers the region from the Red Sea to Hawaii, the Marshall Islands and Guam. Inside the book:
• Photographs of 1500+ species, including one hundred cowries (Cypraeidae) and more than one hundred twenty allied cowries (Ovulidae) of the region;
• Live photos of hundreds of species that have never before appeared in field guides or popular books;
• A convenient pictorial guide at the beginning and an index at the end of the book.

ACKNOWLEDGMENTS
A significant part of the photographs in this book was taken by Jeanette Johnson and Scott Johnson during decades of diving and exploring the beautiful reefs of the Indo-Pacific, from Indonesia and the Philippines to Hawaii and the Solomons. They have provided readers not only with great photos but also with in-depth knowledge of the fascinating world of living seashells. Sincere thanks to Philippe Bouchet, National Museum of Natural History (Paris), for inviting the author to participate in the La Planète Revisitée expedition program and for permission to use some of the NMNH photos.

Xenophoridae, Cypraeoidea, Mitriforms and Terebridae (Caenogastropoda)

Taxonomic study on the molluscs collected in Marion-Dufresne expedition (MD55) to SE Brazil: Xenophoridae, Cypraeoidea, mitriforms and Terebridae (Caenogastropoda)

Luiz Ricardo L. SIMONE and Carlo M. CUNHA, Museu de Zoologia da Universidade de São Paulo, caixa postal 42494, 04218-970 São Paulo, SP (Brazil)

Simone L. R. L. & Cunha C. M. 2012. Taxonomic study on the molluscs collected in Marion-Dufresne expedition (MD55) to SE Brazil: Xenophoridae, Cypraeoidea, mitriforms and Terebridae (Caenogastropoda). Zoosystema 34 (4): 745-781. http://dx.doi.org/10.5252/z2012n4a6

ABSTRACT
The deep-water molluscs collected during the MD55 expedition off SE Brazil have been studied progressively in previous papers. The present paper focuses on samples belonging to the caenogastropod taxa Xenophoridae Troschel, 1852, Cypraeoidea Rafinesque, 1815, mitriforms and Terebridae Mörch, 1852. In the Xenophoridae, Onustus aquitanus n. sp. is described as a new species, collected off the coast of Espírito Santo and Rio de Janeiro, Brazil, at 430-637 m depth (continental slope). Its main characters are the small size (c. 20 mm), the proportionally wide shell, the white colour, the short peripheral flange, the weakly developed oblique riblets and a brown multispiral protoconch. It appears to be the smallest living species of the family, resembling fossil species in this respect. In the Cypraeoidea, the following results were obtained. Family Cypraeidae Rafinesque, 1815: Erosaria acicularis (Gmelin, 1791) and Luria cinerea (Gmelin, 1791) had their deepest records, at 607-620 m and 295-940 m respectively, although all the samples were dead, eroded shells. Family Lamellariidae d'Orbigny, 1841: a total of three lots were collected, provisionally identified as Lamellaria spp.

Caenogastropoda

13 Caenogastropoda

Winston F. Ponder, Donald J. Colgan, John M. Healy, Alexander Nützel, Luiz R. L. Simone, and Ellen E. Strong

Caenogastropods comprise about 60% of living gastropod species and include a large number of ecologically and commercially important marine families. They have undergone an extraordinary adaptive radiation, resulting in considerable morphological, ecological, physiological, and behavioral diversity. There is a wide array of often convergent shell morphologies (Figure 13.1), with the typically coiled shell being tall-spired to globose or flattened, with some uncoiled or limpet-like and others with the shells reduced or, rarely, lost. There are also considerable modifications to the head-foot and mantle through the group (Figure 13.2) and major dietary specializations. It is our aim in this chapter to review the phylogeny of this group, with emphasis on the areas of expertise of the authors.

The first records of undisputed caenogastropods are from the middle and upper Paleozoic, and there were significant radiations during the …

Many caenogastropods are well-known marine snails and include the Littorinidae (periwinkles), Cypraeidae (cowries), Cerithiidae (creepers), Calyptraeidae (slipper limpets), Tonnidae (tuns), Cassidae (helmet shells), Ranellidae (tritons), Strombidae (strombs), Naticidae (moon snails), Muricidae (rock shells, oyster drills, etc.), Volutidae (balers, etc.), Mitridae (miters), Buccinidae (whelks), Terebridae (augers), and Conidae (cones). There are also well-known freshwater families such as the Viviparidae, Thiaridae, and Hydrobiidae and a few terrestrial groups, notably the Cyclophoroidea.

Although there are no reliable estimates of named species, living caenogastropods are one of the most diverse metazoan clades. Most families are marine, and many (e.g., Strombidae, Cypraeidae, Ovulidae, Cerithiopsidae, Triphoridae, Olividae, Mitridae, Costellariidae, Terebridae, Turridae, Conidae) have large numbers of tropical taxa.

01-03 Simone 2018 Malacopedia Limacization.Pdf

Malacopedia, São Paulo, SP, Brazil. ISSN 2595-9913. Volume 1(3): 12-22, September 2018

Main processes of body modification in gastropods: the limacization

Luiz Ricardo L. Simone, Museu de Zoologia da Universidade de São Paulo. ORCID: 0000-0002-1397-9823

Abstract
Limacization is the evolutionary process that transforms a snail into a slug. The modifications and implications of this process are presented and discussed herein, emphasizing the modification of the visceral mass, which migrates into the head-foot haemocoel; the pallial structures are similarly modified or disappear. Limacization is divided into two degrees: degree 1, with remnants of the visceral mass and pallial cavity in a dorsal hump; and degree 2, lacking any vestige of them, the animal being secondarily bilaterally symmetrical. Although several gastropod branches have undergone limacization, degree 2 is reached only by the Systellommatophora and part of the Nudibranchia (doridaceans). Some related issues are also discussed, such as the position of the mantle in slugs, whether slugs undergo detorsion (they do not), the absence of slugs in some main taxa, such as Caenogastropoda and the archaeogastropod grade, and the main branches that have representatives with limacization.

Introduction
The process called "limacization" is the transformation of a snail into a slug. The name is derived from the Latin limax, meaning slug. Limacization consists of the evolutionary relocation of the visceral mass into a region inside the cephalo-pedal mass, together with the reduction and even loss of the pallial cavity and its organs.

Biodiversity of Gastropods in Intertidal Zone of Krakal Beach, Gunungkidul, Yogyakarta

1st Bioinformatics and Biodiversity Conference, Volume 2021. http://dx.doi.org/10.11594/nstp.2021.0703. Conference Paper

G. A. B. Y. P. Cahyadi, Ragil Pinasti, Assyafiya Salwa, Maghfira Aulia Devi, Lutfiyah Rizqi Fajriana, Nila Qudsiyati, Pinkan Calista, Rury Eprilurahman*
Faculty of Biology, Gadjah Mada University, Jl. Teknika Selatan, Sekip Utara, Yogyakarta, 55281, Indonesia
*Corresponding author

ABSTRACT
Krakal Beach is located in Gunungkidul Regency, Yogyakarta Special Region. The beach is formed by coral reefs, an ecosystem that supports a wide variety of biota by providing habitat for many species, such as molluscs. Mollusca is the second-largest animal phylum after Arthropoda: approximately 60,000 living species and 15,000 fossil species belong to it. The phylum is divided into seven classes, one of which is Gastropoda. Gastropods are molluscs that move by means of a muscular foot on the ventral side of the body. Because molluscs are so diverse, this research aimed to study the biodiversity of molluscs in the intertidal zone of Krakal Beach, Gunungkidul, Yogyakarta. The research was conducted on October 4th, 2019 at 03.10 WIB, when the water temperature was about 21.3 °C, the salinity about 3.6% and the pH 7.5. The samples were collected by purposive sampling, preserved by dry preservation, and identified from the morphological characteristics of the shell with reference to the literature. This study found seven families of the class Gastropoda in the intertidal zone of Krakal Beach: Aplustridae, Conidae, Cypraeidae, Mitridae, Muricidae, Nacellidae, and Turbinidae.

Novitates 37

Durban Natural Science Museum Novitates, Volume 37, 2014

EDITOR
D.G. Allan, Curator of Birds, Durban Natural Science Museum, P.O. Box 4085, Durban 4000, South Africa

EDITORIAL BOARD
Dr A.J. Armstrong, Animal Scientist (Herpetofauna & Invertebrates), Ezemvelo KZN Wildlife
G.B.P. Davies, Curator of Birds, Ditsong National Museum of Natural History
Dr L. Richards, Curator of Mammals
Prof. P.J. Taylor, School of Mathematical and Natural Sciences, University of Venda
Dr K.A. Williams, Curator of Entomology

SUBSCRIPTION DETAILS
Librarian, Durban Natural Science Museum, P.O. Box 4085, Durban 4000, South Africa

COVER IMAGE
Cape grass lizard Chamaesaura anguina. Photo: Johan Marais

Published by the Durban Natural Science Museum

EDITORIAL
SOUTH AFRICAN NATURAL SCIENCE MUSEUM JOURNALS - THE PAST 50 YEARS (1964 - 2013): GOING DOWN SWINGING OR ADJUSTING TO THE RIGHT FIGHTING WEIGHT?

This editorial briefly reviews publication trends in the scientific journals published by South African museums that are solely or partially focused on the natural sciences. It is based on an oral presentation given at the conference of the International Council of Museums – South Africa (ICOM-SA) in August 2014. The conference was hosted at the Durban Natural Science Museum Research Centre on 26–27 August, and its theme was "Museum research in South Africa – relevance and future".

… component (Fig. 1). Four of these are national museums: Ditsong National Museum of Natural History (Pretoria), National Museum – Bloemfontein, KwaZulu-Natal Museum (Pietermaritzburg) and Iziko South African Museum (Cape Town). Five are provincial museums: McGregor Museum (Kimberley) and a cluster of four museums (making up the 'Eastern Cape Provincial Museums') in the Eastern Cape Province: Amatole Museum (King Williams Town), East London …

The Life History and Feeding Ecology of Velvet Shell, Velutina velutina (Gastropoda: Velutinidae), a Specialist Predator of Ascidians

Canadian Journal of Zoology

The life history and feeding ecology of velvet shell, Velutina velutina (Gastropoda: Velutinidae), a specialist predator of ascidians

Journal: Canadian Journal of Zoology
Manuscript ID: cjz-2018-0327.R1
Manuscript Type: Article
Date Submitted by the Author: 03-Jun-2019
Complete List of Authors:
Sargent, Philip; Northwest Atlantic Fisheries Centre, Fisheries and Oceans Canada
Hamel, Jean-Francois; Society for the Exploration and Valuing of the Environment
Mercier, Annie; Memorial University of Newfoundland, Ocean Sciences
Is your manuscript invited for consideration in a Special Issue?: Not applicable (regular submission)
Keywords: Velutina velutina, velvet shell, velutinid, gastropod, invasive species, specialist predator, ascidian
https://mc06.manuscriptcentral.com/cjz-pubs

P. S. Sargent*, J-F. Hamel, and A. Mercier

P. S. Sargent¹, Department of Ocean Sciences, Memorial University, St. John's (Newfoundland and Labrador) Canada A1C 5S7
J-F Hamel, Society for the Exploration and Valuing of the Environment (SEVE), Portugal Cove-St. Philips (Newfoundland and Labrador) Canada A1M 2B7
A. Mercier, Department of Ocean Sciences, Memorial University, St. John's (Newfoundland and Labrador) Canada A1C 5S7

* Corresponding Author: Philip S. Sargent, Department of Fisheries and Oceans Canada, Northwest Atlantic Fisheries Centre, 80 East White Hills Road, St. John's, Newfoundland and Labrador, Canada, A1C 4N1. Phone: 1 (709) 772-4278. Fax: 1 (709) 772-5315

¹ Current Contact Information for P. …