Luis Espinosa-Anke

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

2ANGLAIS.Pdf

ANGLAIS domsdkp.com HERE WITHOUT YOU 3 DOORS DOWN KRYPTONITE 3 DOORS DOWN IN DA CLUB 50 CENT CANDY SHOP 50 CENT WHAT'S UP ? 4 NON BLONDES TAKE ON ME A-HA MEDLEY ABBA MONEY MONEY MONEY ABBA DANCING QUEEN ABBA FERNANDO ABBA THE WINNER TAKES IT ALL ABBA TAKE A CHANCE ON ME ABBA I HAVE A DREAM ABBA CHIQUITITA ABBA GIMME GIMME GIMME ABBA WATERLOO ABBA KNOWING ME KNOWING YOU ABBA TAKE A CHANCE ON ME ABBA THANK YOU FOR THE MUSIC ABBA SUPER TROUPER ABBA VOULEZ VOUS ABBA UNDER ATTACK ABBA ONE OF US ABBA HONEY HONEY ABBA HAPPY NEW YEAR ABBA HIGHWAY TO HELL AC DC HELLS BELLS AC DC BACK IN BLACK AC DC TNT AC DC TOUCH TOO MUCH AC DC THUNDERSTRUCK AC DC WHOLE LOTTA ROSIE AC DC LET THERE BE ROCK AC DC THE JACK AC DC YOU SHOOK ME ALL NIGHT LONG AC DC WAR MACHINE AC DC PLAY BALL AC DC ROCK OR DUST AC DC ALL THAT SHE WANTS ACE OF BASE MAD WORLD ADAM LAMBERT ROLLING IN THE DEEP ADELE SOMEONE LIKE YOU ADELE DON'T YOU REMEMBER ADELE RUMOUR HAS IT ADELE ONLY AND ONLY ADELE SET FIRE TO THE RAIN ADELE TURNING TABLES ADELE SKYFALL ADELE WALK THIS WAY AEROSMITH WALK THIS WAY AEROSMITH BECAUSE I GOT HIGH AFROMAN RELEASE ME AGNES LONELY AKON EYES IN THE SKY ALAN PARSON PROJECT THANK YOU ALANIS MORISSETTE YOU LEARN ALANIS MORISSETTE IRONIC ALANIS MORISSETTE THE BOY DOES NOTHING ALESHA DIXON NO ROOTS ALICE MERTON FALLIN' ALICIA KEYS NO ONE ALICIA KEYS IF I AIN'T GOT YOU ALICIA KEYS DOESN'T MEAN ANYTHING ALICIA KEYS SMOOTH CRIMINAL ALIEN ANT FARM NEVER EVER ALL SAINTS SWEET FANTA DIALO ALPHA BLONDY A HORSE WITH NO NAME AMERICA KNOCK ON WOOD AMII STEWART THIS -

Mpub10110094.Pdf

An Introduction to Chaghatay: A Graded Textbook for Reading Central Asian Sources Eric Schluessel Copyright © 2018 by Eric Schluessel Some rights reserved This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, California, 94042, USA. Published in the United States of America by Michigan Publishing Manufactured in the United States of America DOI: 10.3998/mpub.10110094 ISBN 978-1-60785-495-1 (paper) ISBN 978-1-60785-496-8 (e-book) An imprint of Michigan Publishing, Maize Books serves the publishing needs of the University of Michigan community by making high-quality scholarship widely available in print and online. It represents a new model for authors seeking to share their work within and beyond the academy, offering streamlined selection, production, and distribution processes. Maize Books is intended as a complement to more formal modes of publication in a wide range of disciplinary areas. http://www.maizebooks.org Cover Illustration: "Islamic Calligraphy in the Nasta`liq style." (Credit: Wellcome Collection, https://wellcomecollection.org/works/chengwfg/, licensed under CC BY 4.0) Contents Acknowledgments v Introduction vi How to Read the Alphabet xi 1 Basic Word Order and Copular Sentences 1 2 Existence 6 3 Plural, Palatal Harmony, and Case Endings 12 4 People and Questions 20 5 The Present-Future Tense 27 6 Possessive -

Ka'ahea, Kekuhoumana Hi'ilauleleonalani, Jan. 29, 2013

Ka‘ahea, Kekuhoumana Hi‘ilauleleonalani, Jan. 29, 2013 Kekuhoumana Hi‘ilauleleonalani Ka‘ahea, 61, of San Luis Obispo, Calif., died. She was born in Honolulu. She is survived by husband William Woods, brothers Henry K. and Edward K., and sisters Enid N. Simao, Lee P. Downes and Susan L. Ng. Services pending. Flowers welcome. [Honolulu Star-Advertiser 16 February 2013] Ka‘anapu, James Imailani, April 1, 2013 James Imailani Ka‘anapu, 86, of Kailua, a retired Honolulu Police Department officer, died at home. He was born in Honolulu. He is survived by sons Mark I. and James-Scott P., daughters Terry-Ann Kaolulo and Anna M. Ka‘anapu, eight grandchildren, 14 great-grandchildren and two great-great-grandchildren. Visitation: 5 p.m. Friday at the Church of Jesus Christ of Latter-day Saints, Kailua second ward. Services: 6 p.m. Aloha attire. No flowers. [Honolulu Star-Advertiser 16 April 2013] KAAHANUI SR., WILSON FRIDENBERG KUKINIOKALA, 71, of Kaneohe, Retired Bulldozer Operator with the College of Tropical Agriculture and HR at The University of Hawaii at Manoa, Waimanalo Research Station, died January 14, 2013 at Aloha Nursing Home. He was born in Honolulu. He is survived by sons, Wilson Kukiniokala “Willy” Kaahanui Jr., Joseph Kaaumoana “Joey” Kaahanui, Winslow Fridenberg Kaahanui; daughter, Sheryl Uilani “Ui” Kaahanui; 10 grandchildren, 5 great grandchildren; brothers, Robert Kaahanui, John Kaahanui, Chris Kaahanui, John Nuuhiwa; sisters, Elaine Gomes, Annie Dow-Leslie. Visitation at 9 a.m. on Saturday, February 2, 2013 at Nuuanu Memorial Park & Mortuary; service at 11 a.m. Burial at 2:30 p.m. -

Karaoke Song Book Karaoke Nights Frankfurt’s #1 Karaoke

KARAOKE SONG BOOK KARAOKE NIGHTS FRANKFURT’S #1 KARAOKE SONGS BY TITLE THERE’S NO PARTY LIKE AN WAXY’S PARTY! Want to sing? Simply find a song and give it to our DJ or host! If the song isn’t in the book, just ask, we may have it! We do get busy, so we may only be able to take 1 song! Sing, dance and be merry, but please take care of your belongings! Are you celebrating something? Let us know! Enjoying the party? Fancy trying out hosting or KJ (karaoke jockey)? Then speak to a member of our karaoke team. Most importantly grab a drink, be yourself and have fun! Contact [email protected] for any other information... YOU ARE THE GIN TO MY TONIC IRISH PUB o'reilly's Englische Titel / English Songs 10CC 30H!3 & Ke$ha A Perfect Circle Donna Blah Blah Blah A Stranger Dreadlock Holiday My First Kiss Pet I'm Mandy 311 The Noose I'm Not In Love Beyond The Gray Sky A Tribe Called Quest Rubber Bullets 3Oh!3 & Katy Perry Can I Kick It Things We Do For Love Starstrukk A1 Wall Street Shuffle 3OH!3 & Ke$ha Caught In Middle 1910 Fruitgum Factory My First Kiss Caught In The Middle Simon Says 3T Everytime 1975 Anything Like A Rose Girls 4 Non Blondes Make It Good Robbers What's Up No More Sex.... -

The Ambiguity of “Weeping” in William Blake's Poetry

Central Washington University ScholarWorks@CWU All Master's Theses Master's Theses 1968 The Ambiguity of “Weeping” in William Blake’s Poetry Audrey F. Lytle Central Washington University Follow this and additional works at: https://digitalcommons.cwu.edu/etd Part of the Liberal Studies Commons, and the Scholarship of Teaching and Learning Commons Recommended Citation Lytle, Audrey F., "The Ambiguity of “Weeping” in William Blake’s Poetry" (1968). All Master's Theses. 1026. https://digitalcommons.cwu.edu/etd/1026 This Thesis is brought to you for free and open access by the Master's Theses at ScholarWorks@CWU. It has been accepted for inclusion in All Master's Theses by an authorized administrator of ScholarWorks@CWU. For more information, please contact [email protected]. THE AMBIGUITY OF "WEEPING" IN WILLIAM BLAKE'S POETRY A Thesis Presented to the Graduate Faculty Central Washington State College In Partial Fulfillment of the Requirements for the Degree Master of Education by Audrey F. Lytle August, 1968 SPECIAL COLLECTION 172428 Library Central Washington State College Ellensburg, Washington APPROVED FOR THE GRADUATE FACULTY H. L. Anshutz, COMMITTEE CHAIRMAN Robert Benton John N. Terrey TABLE OF CONTENTS CHAPTER PAGE I. INTRODUCTION 1 Method 1 Review of the Literature 4 II. "WEEPING" IMAGERY IN SELECTED WORKS 10 The Marriage of Heaven and Hell 10 Songs of Innocence 11 The Book of Thel 21 Songs of Experience 22 Poems from the Pickering Manuscript 30 Jerusalem 39 III. CONCLUSION 55 BIBLIOGRAPHY 57 APPENDIX 58 CHAPTER I INTRODUCTION I. -

Radio Itapema FM Floria

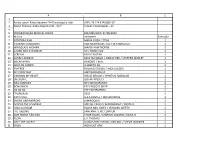

A B C 1 2 Company name: Radio Itapema FM Florianópolis Ltda CNPJ: 79.224.614/0001-07 3 Trade name: Rádio Itapema FM - 93.7 City: Florianópolis - SC 4 5 DAILY MUSIC PROGRAMMING DAY/MONTH/YEAR: 01/08/2020 6 Song Artist Plays 7 SONÍFERA ILHA MARIA LUIZA / TITAS 2 8 EVENING SHADOWNS VAN MORRISON / JOEY DEFRANCESCO 2 9 WINEGLASS WOMAN MAYER HAWTHORNE 2 10 A MAN AND A WOMAN U2 / BONO VOX 2 11 SCREAM PAOLO NUTINI 2 12 LONELY AVENUE MICK HUCKNALL / SIMPLY RED / STEPHEN MARLEY 2 13 BREAK APART BONOBO / RHYE 2 14 MEIO DE CAMPO GILBERTO GIL 2 15 I'M FREE ROLLING STONES / MICK JAGGER 2 16 MY OWN WAY AMY WINEHOUSE 2 17 UNCHAIN MY HEART WILLIE NELSON / WYNTON MARSALIS 2 18 DIFERENTE GOTAN PROJECT 2 19 NÃO CONSIGO NEY MATOGROSSO 2 20 BOHANNON FAT FREDDYS DROP 2 21 HA HA HA THE EXCITEMENTS 2 22 TROPICALIA BECK 2 23 FOTO VIVA LEILA PANTEL / MO'HORIZONS 2 24 SMOKE AND MIRRORS JAMIROQUAI 2 25 ACROSS THE UNIVERSE SPEECH / RUFUS WAINWRIGHT / BEATLES 2 26 I HAD A DREAM BLANK AND JONES / HOWARD WERTH 2 27 THE CALLING SANTANA / ERIC CLAPTON 2 28 QUE HORAS NÃO SÃO VITOR RAMIL / MARCOS SUZANO / KATIA B 2 29 FLOW L.A. THOMAS 2 30 ISN'T SHE LOVELY LIVINGSTON TAYLOR / KEB'MO' / STEVIE WONDER 2 31 VIDEO INDIA DOT ARIE 2 32 ESCAPE JACK JOHNSON 2 33 SE EU FOSSE UM DIA O TEU OLHAR NUNO MINDELIS 2 34 I FEEL THE EARTH MOVE THE MOLESKINS 2 35 FORGET BEN WATT / EVERYTHING BUT THE GIRL 2 36 LOVE IS GONNA BRING US BACK ALIVE RICKIE LEE JONES 2 37 HUSH ANGUS AND JULIA STONE 2 38 RONCO DA CUÍCA CEU / JOAO BOSCO 2 39 HERE THERE AND EVERYWHERE DAVID GILMOUR / PINK FLOYD 2 40 BLUE ZUCCHERO / SHERYL CROW 2 41 A IDADE DO CEU PAULINHO MOSKA / ZELIA DUNCAN 2 42 HUMAN LOSS AND GAIN CLUB DES BELUGAS / BAJKA 2 43 DO I QUALIFY? LYNDEN DAVID HALL 2 44 HYPNOSIS DAMIEN RICE 2 45 MY BABY JUST CARES FOR ME IMELDA MAY / BLUE HARLEM / NINA SIMONE 2 46 I WILL BE FOUND JOHN MAYER 2 47 BREAK ON THROUGH DJ DISSE / THE DOORS 2 48 ANIMA MILTON NASCIMENTO 2 49 GOOD THINGS SPENGLER 2 50 SWEET L.A. -

Mind Your Biscuits Freudland Gio Game Daily

Daily Racing Form Watch List 2. Aqueduct. 6 Furlongs. Dirt. Thoroughbred Ch. c. 4 (Mar) KEEJAN14 $47,000 Life 19 6 7 3 $2,509,166 107 D.Fst 15 4 6 2 $1,235,966 107 Mind Your Biscuits Sire: Posse (Silver Deputy) Wet(367) 4 2 1 1 $1,273,200 80 Own: Head of Plains LLC J Stables LLC, Sum 2017 6 2 2 1 $1,713,800 107 Dam:Jazzmane (Toccet) Synth 0 0 0 0 $0 - Br: Jumping Jack Racing LLC (NY) 2016 9 4 3 1 $740,400 107 Turf(243) 0 0 0 0 $0 - Tr: Summers Chad(9 0 4 1 .00) 2017:(81 9 .11) Aqu 3 1 1 0 $195,000 107 Dst(355) 6 2 2 2 $1,709,966 104 2ã17=10Aqu fst 1 ý 22¨ :45©1:10©1:35 3ÎCigarMiH-G1 107 1 /10 5¤ô 5¬ô 4§ 2¨ 2ªõ Ortiz I Jr L122b 7.30 91=15 SharpAztecaªõ MindYourBiscuits§ö PrcticlJoke¦õ 2w,ask 5/16,5w upper 4ä17=8Dmr fst 6f 21© :44¨ :56§1:08¨ 3ÎBCSprint-G1 104 6 /10 10 10®õ 10¬õ 7ªõ 3¨ Rosario J L126b 9.40 92=12 Roy H¦ Imperial Hint§ Mind Your Biscuits¦õ Off slow, swung 7 wide 26Ý17=9Sar fst 7f 22© :45§1:08©1:21 3ÎForego-G1 91 6 /10 5 5© 5© 8¤ 6¤ Rosario J L124b 2.65 89=06 Drefong© Awesome Slew¦ô Tom's ReadyÇ 3w trn,4w upr,empty 8Û17=7Bel fst 7f 22¨ :45¨1:09©1:21© 3ÎBelSprnt-G2 106 2 /7 3 4ª 4© 1¦ô 1¨ô Rosario J L124b *1.40 90=09 MindYourBiscuits¨ô AwesomSlw¦ TommyMcho©ö 3w,4w & asked 1/8pl 25à17 Meydan(UAE) my Í6f LH 1:10© 3Î Dubai Golden Shaheen-G1 14 1¨ Rosario J 132 b - Mind Your Biscuits¨ Comicasó Morawijô Timeform Rating: 125 Stk 2000000 To lead 100y out, drew clear 25á17=12GP fst 6ôf 22¦ :44¨1:08©1:15¦ 4ÎGpSprint-G3 101 6 /6 6 5¬ 5©ô 2¨ô 2É Rosario J L123b *.90 97=09 UnifiedÉ Mind Your Biscuits©õ Squadron A¦õ Gaining fast to wire Previously -

Voicebox Songbook by Title - Chinese

Voicebox Songbook by Title - Chinese 失憶周末 17歲的異想世界 許志安 21932 丁文琪 24381 ! 18 MON MON 41129 Twins 34613 (WAS IT)JUST ONLY THE DREAM 1874 林憶蓮 31404 陳奕迅 22177 (妳沒有)好結果 18愛不愛 李蕙敏 19115 翼勢力企鵝 41133 +-*:- 1984 Beyond組合 23083 Swing 23481 -我只想知道你是否真的愛我 1985 黃小琥 30840 郭顶 40067 0 1996窮得沸亮 Twins 24106 陳輝雄 19853 0204 1ST ALBUM 張震嶽 27277 BEEGA 40872 0932 1天一萬年 陳小春 27278 游鴻明 29901 1 TYM 20 30 40 HOT 35918 Unknown Artist 33630 1+1=0 20.30.40 溫嵐 30234 劉若英+張艾嘉 27280 1.2.3.4.5.6.7 200% 咒語 周華健 30193 Twins 23403 10 2000年 左光平 41130 王力宏 31720 1000朵玫瑰 2001 太空漫遊 罗百吉 38801 陳奕迅 22304 100巴仙(%)愛上妳 2001 太空漫遊(演唱会) 梁漢文 22099 陳奕迅 23579 100樣可能 2002 黎明 21916 Unknown Artist 33634 34561 101 2002年的第一場雪 梁靜茹 41131 刀郎 30235 101封情書 2005 歐得洋 41132 Unknown Artist 33055 102% 2006 Unknown Artist 34027 Unknown Artist 33530 107号牢房 2006冠军情歌 林冠吟 38123 MC HOTDOG 34779 10点10分 2008 张芯瑜 39695 Unknown Artist 33728 1127 2039 玫瑰事件 LMF 23281 林東松 20042 1127(演唱会) 20年长岛黄昏 LMF 23270 刘若英 36517 123 木頭人 21個人 BMG 全體歌手 23354 游鴻明 29897 1234567 22098全城效應 FIR 34777 鄭伊健 22078 Unknown Artist 33799 24 小時 123木头人 王秀琳 21087 刘德华 34778 24HRS 123木頭人 莫文蔚 39006 BMG 歌手 27279 24樓 123站起来 劉若英 27281 杨坤 38093 281 公裡 127日 謝霆鋒 29794 吴建豪 37178 39506 281 公裡(演唱会) 13131 謝霆鋒 22305 陈琳 40368 281公裡 16 謝霆鋒 27282 Unknown Artist 34170 2999年的聖誕節 16楼的演唱会 光良 27283 唐雅明 37029 2nd 17歲 FIN K L 35119 劉德華 21796 Voicebox Songbook by Title - Chinese 2NIGHT藏爱 A BETTER DAY 陶喆 40398 劉德華 27288 2月30号见 A BRAND NEW DAY 飞轮海 34780 莫文蔚 23801 3 7 20 1 A FOR APPLE B FOR BOY 曹格 35342 Twins 23186 33轉 A LOVE BEFORE TIME 溫拿 41134 李玟 37953 365天爱你不变 A THOUSAND DREAMS -

112 Dance with Me 112 Peaches & Cream 213 Groupie Luv 311

112 DANCE WITH ME 112 PEACHES & CREAM 213 GROUPIE LUV 311 ALL MIXED UP 311 AMBER 311 BEAUTIFUL DISASTER 311 BEYOND THE GRAY SKY 311 CHAMPAGNE 311 CREATURES (FOR A WHILE) 311 DON'T STAY HOME 311 DON'T TREAD ON ME 311 DOWN 311 LOVE SONG 311 PURPOSE ? & THE MYSTERIANS 96 TEARS 1 PLUS 1 CHERRY BOMB 10 M POP MUZIK 10 YEARS WASTELAND 10,000 MANIACS BECAUSE THE NIGHT 10CC I'M NOT IN LOVE 10CC THE THINGS WE DO FOR LOVE 112 FT. SEAN PAUL NA NA NA NA 112 FT. SHYNE IT'S OVER NOW (RADIO EDIT) 12 VOLT SEX HOOK IT UP 1TYM WITHOUT YOU 2 IN A ROOM WIGGLE IT 2 LIVE CREW DAISY DUKES (NO SCHOOL PLAY) 2 LIVE CREW DIRTY NURSERY RHYMES (NO SCHOOL PLAY) 2 LIVE CREW FACE DOWN *** UP (NO SCHOOL PLAY) 2 LIVE CREW ME SO HORNY (NO SCHOOL PLAY) 2 LIVE CREW WE WANT SOME ***** (NO SCHOOL PLAY) 2 PAC 16 ON DEATH ROW 2 PAC 2 OF AMERIKAZ MOST WANTED 2 PAC ALL EYEZ ON ME 2 PAC AND, STILL I LOVE YOU 2 PAC AS THE WORLD TURNS 2 PAC BRENDA'S GOT A BABY 2 PAC CALIFORNIA LOVE (EXTENDED MIX) 2 PAC CALIFORNIA LOVE (NINETY EIGHT) 2 PAC CALIFORNIA LOVE (ORIGINAL VERSION) 2 PAC CAN'T C ME 2 PAC CHANGED MAN 2 PAC CONFESSIONS 2 PAC DEAR MAMA 2 PAC DEATH AROUND THE CORNER 2 PAC DESICATION 2 PAC DO FOR LOVE 2 PAC DON'T GET IT TWISTED 2 PAC GHETTO GOSPEL 2 PAC GHOST 2 PAC GOOD LIFE 2 PAC GOT MY MIND MADE UP 2 PAC HATE THE GAME 2 PAC HEARTZ OF MEN 2 PAC HIT EM UP FT. -

Voicebox Songbook by Artist - Chinese

Voicebox Songbook by Artist - Chinese 感受 20667 2個女生 我行我素+我的生活2+安可+我愛台妹 41748 讓我忘了你 28310 最心疼是你 29008 3EP美少女 留一點愛來愛自己 25816 ALL RIGHT 27289 當我想起你 41647 4 in love 衣服 42084 FALL IN LOVE 29020 野姜花的回憶 28775 PARTY NIGHT 29024 0 PRINT MY HEART 29019 UNKNOWN 19797 一千零一個願望 31031 183 Club 什麼都在意 31024 甜蜜約定 30766 他到底哪一點好 29022 真愛 30982 命中注定 31033 迷魂計 30598 圍巾 29028 魔法 30607 好朋友 31028 183 Club+七朵花 小秘密 29029 閉上眼晴默唸3遍 30352 已經過去的愛情 29025 183Club 愛還在 29021 迷魂計《王子變青蛙》主題曲 33160 我怕你再搖晃 31021 183CLUB 我要快樂一點 31030 BOMBA BOMBA 34785 把愛情放進冰箱 29027 FEELING SO RIGHT 35035 改變自己 31025 I AM SO HOT 34802 最好的就在眼前 31026 MAGIC ROOM 34807 最驕傲的事 31022 一把伞 34825 有你真好 31027 两个人的西洋棋 34831 為你唱的歌 31023 为你征服全世界 34835 等你打電話給我 29026 你永远是最美的 34845 觸電 29023 再一遍 34850 說再見 31029 她不是你 35036 35319 誰怕誰 31032 好爱她好想他 36135 4IN LOVE 好爱她好想她 34823 你休想 34842 感情线 34912 再见中国海 34851 折磨 35031 5566 拜金女孩 35028 35029 COME ON 29045 爱情特卖 35027 CRYING IN THE RAIN 34787 爱的奇迹 38341 EASY COME EASY GO 30243 相信永远 35030 FOR YOU 35811 1983 IT IS OUR PART TIME 35812 好孤单 35011 MORE THAN WORDS 29048 无证狗 35034 ONE MORE TRY 27376 等爱走了以后 35032 OPEN ARMS 34809 1983組合 THANK YOU FOR LOVING ME 35813 好孤單 33107 WE WILL ROCK YOU 35814 愛情木瓜 33622 WITHOUT YOUR LOVE 29041 2MORO X'MAS 29034 SHABU SHABU 34814 一光年 29037 初吻 30396 一次 29035 刺激2006 34858 传说 34840 塔基拉 BON 41506 偷心 YES I LOVE YOU 30774 塔基拉BON 40945 偷心YES I LOVE YOU 33399 33661 少了 34902 冷风过境 34855 找到你 34926 勇士們 29044 牽著妳 30665 单眼皮女生 35815 被骗了 35001 原點 30959 2R 同一個世界 30772 SHINING FRIENDS 24164 哇沙米 29040 我兩個 23468 大意 29036 第29天 24165 好久不見 30468 33759 2个女生 存在 30406 FARAWAY -

Fairy Inc. Sheet Music Products List Last Updated [2013/03/018] Price (Japanese Yen) a \525 B \788 C \683

Fairy inc. Sheet Music Products list Last updated [2013/03/018] Price (Japanese Yen) A \525 B \788 C \683 ST : Standard Version , OD : On Demand Version , OD-PS : Piano solo , OD-PV : Piano & Vocal , OD-GS : Guitar solo , OD-GV : Guitar & Vocal A Band Score Piano Guitar Title Artist Tie-up ST OD ST OD-PS OD-PV ST OD-GS OD-GV A I SHI TE RU no Sign~Watashitachi no Shochiku Distributed film "Mirai Yosouzu ~A I DREAMS COME TRUE A A A Mirai Yosouzu~ SHI TE RU no Sign~" Theme song OLIVIA a little pain - B A A A A inspi'REIRA(TRAPNEST) A Song For James ELLEGARDEN From the album "BRING YOUR BOARD!!" B a walk in the park Amuro Namie - A a Wish to the Moon Joe Hisaishi - A A~Yokatta Hana*Hana - A A Aa Superfly 13th Single A A A Aa Hatsu Koi 3B LAB.☆ - B Aa, Seishun no Hibi Yuzu - B Abakareta Sekai thee michelle gun elephant - B Abayo Courreges tact, BABY... Kishidan - B abnormalize Rin Toshite Shigure Anime"PSYCHO-PASS" Opening theme B B Acro no Oka Dir en grey - B Acropolis ELLEGARDEN From the album "ELEVEN FIRE CRACKERS" B Addicted ELLEGARDEN From the album "Pepperoni Quattro" B ASIAN KUNG-FU After Dark - B GENERATION again YUI Anime "Fullmetal Alchemist" Opening theme A B A A A A A A Again 2 Yuzu - B again×again miwa From 2nd album "guitarium" B B Ageha Cho PornoGraffitti - B Ai desita. Kan Jani Eight TBS Thursday drama 9 "Papadoru!" Theme song B B A A A Ai ga Yobu Hou e PornoGraffitti - B A A Ai Nanda V6 - A Ai no Ai no Hoshi the brilliant green - B Ai no Bakudan B'z - B Ai no Kisetsu Angela Aki NHK TV novel series "Tsubasa" Theme song A A -

DJ Song List by Song

A Case of You Joni Mitchell A Country Boy Can Survive Hank Williams, Jr. A Dios le Pido Juanes A Little Bit Me, a Little Bit You The Monkees A Little Party Never Killed Nobody (All We Got) Fergie, Q-Tip & GoonRock A Love Bizarre Sheila E. A Picture of Me (Without You) George Jones A Taste of Honey Herb Alpert & The Tijuana Brass A Ti Lo Que Te Duele La Senorita Dayana A Walk In the Forest Brian Crain A*s Like That Eminem A.M. Radio Everclear Aaron's Party (Come Get It) Aaron Carter ABC Jackson 5 Abilene George Hamilton IV About A Girl Nirvana About Last Night Vitamin C About Us Brook Hogan Abracadabra Steve Miller Band Abracadabra Sugar Ray Abraham, Martin and John Dillon Abriendo Caminos Diego Torres Absolutely (Story Of A Girl) Nine Days Absolutely Not Deborah Cox Absynthe The Gits Accept My Sacrifice Suicidal Tendencies Accidentally In Love Counting Crows Ace In The Hole George Strait Ace Of Hearts Alan Jackson Achilles Last Stand Led Zeppelin Achy Breaky Heart Billy Ray Cyrus Across The Lines Tracy Chapman Across the Universe The Beatles Across the Universe Fiona Apple Action [12" Version] Orange Krush Adams Family Theme The Hit Crew Adam's Song Blink-182 Add It Up Violent Femmes Addicted Ace Young Addicted Kelly Clarkson Addicted Saving Abel Addicted Simple Plan Addicted Sisqó Addicted (Sultan & Ned Shepard Remix) [feat. Hadley] Serge Devant Addicted To Love Robert Palmer Addicted To You Avicii Adhesive Stone Temple Pilots Adia Sarah McLachlan Adíos Muchachos New 101 Strings Orchestra Adore Prince Adore You Miley Cyrus Adorn Miguel