Project Periodic Report

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Changchun Mayor: Better Quality of Life Needed

CHINA DAILY MONDAY, MARCH 14, 2011 sports 23 scoreboard ALPINE SKIING Fall of wkts: 1-41 (Smith), 2-127 (Amla), NEW JERSEY 3 NY Islanders 2 (OT) Nice 1 (Mouloungui 62) Auxerre 0 India’s Harbhajan Singh is bowled 3-173 (Kallis), 4-223 (de Villiers), 5-238 Atlanta 5 PHILADELPHIA 4 (OT) Sochaux 0 Lyon 2 (Lisandro 22, Pjanic 64) by South Africa’s Dale Steyn during Women’s World Cup slalom (Duminy), 6-247 (van Wyk), 7-279 (Botha). Columbus 3 CAROLINA 2 Arles-Avignon 3 (Meriem 6, Kermorgant Saturday’s results: Bowling: Zaheer 10-0-43-1, Nehra 8.4- FLORIDA 4 Tampa Bay 3 (OT) 64, Cabella 80) Lorient 3 (Amalfi tano 19, their World Cup group B match in 0-65-0, Patel 10-0-65-2, Pathan 4-0-20-0, Detroit 5 ST. LOUIS 3 Diarra 45, Gameiro 53) 1. Marlies Schild (AUT) 1:43.85 Nagpur on Saturday. REUTERS 2. Kathrin Zettel (AUT) 1:44.78 Yuvraj 8-0-47-0, Harbhajan 9-0-53-3 (w1). NASHVILLE 4 Colorado 2 Lens 0 Toulouse 1 (Santander 75) 3. Tina Maze (SLO) 1:45.01) Result: South Africa win by three wickets Vancouver 4 CALGARY 3 Saint-Etienne 2 (Sako 35-pen, Payet 88) 4. Maria Pietilae-holmner (SWE) 1:45.41 NY Rangers 3 SAN JOSE 2 (SO) Brest 0 5. Veronika Zuzulova (SVK) 1:45.42 BIATHLON (OT indicates overtime win) 6. Manuela Moelgg (ITA) 1:45.60 German league World Cup overall standings (after 30 of 35 World championship NORDIC SKIING Saturday’s results: events): Saturday’s results (penalties for missed VfL Wolfsburg 1 (Mandzukic 22) Nurem- 1. -

Steckbriefe „So Bin Ich“/„So Bist Du“ G 1.0

STECKBRIEFE „SO BIN ICH“/„SO BIST DU“ G 1.0 STECKBRIEFE „SO BIN ICH“/„SO BIST DU“ Dein DAS BIN ICH! Foto! Mein Name: So alt bin ich: Dort wohne ich: Mit mir leben dort: Meine Lieblingssportart(en): Das spiele ich besonders gerne: Beim Spielen brauche ich die anderen, weil: Wenn ich gewinne, fühle ich mich so: Wenn ich verliere, fühle ich mich so: So lautet meine wichtigste Spielregel: Das würde ich gerne meinem besten Freund / meiner besten Freundin beibringen: Mein Lieblingssportler heißt: Das kann ich besonders gut und werde darin ja vielleicht mal Olympiasieger: Olympia ruft: Mach mit! Tokio 2020 Unterrichtsmaterialien Sekundarstufe Deutsche Olympische Akademie 1 / 2 STECKBRIEFE „SO BIN ICH“/„SO BIST DU“ G 1.0 Dein Foto! DAS BIST DU! Dein Name: So alt bist Du: Das weiß ich über dich: Das mag ich ganz besonders an dir: Deine Lieblingssportart(en): Beim Spielen brauche ich dich, weil: Wenn wir gewinnen, würden wir den Sieg so genießen und feiern: Wenn wir mal verlieren, reagierst du so: Das würde ich dir gerne beibringen: Das würde ich gerne von dir lernen: Dein Lieblingssportler heißt: Das kannst du besonders gut und würdest darin ja vielleicht mal Olympiasieger werden: Olympia ruft: Mach mit! Tokio 2020 Unterrichtsmaterialien Sekundarstufe Deutsche Olympische Akademie 2 / 2 CHECKLISTE ZUR VORBEREITUNG EINES BIBLIOTHEKBESUCHS G 2.0 CHECKLISTE ZUR VORBEREITUNG EINES BIBLIOTHEKBESUCHS Verteilt die anfallenden Aufgaben untereinander. Jeder soll sich dort einbringen, wo er am besten unterstützen kann: • Bibliothek auswählen • Terminfindung • Genehmigung des Unterrichtsganges einholen • Thema „Freundschaft“ im Unterricht zum Beispiel mittels eigener Bücher vorbereiten • Aufgabenstellungen vorbereiten • Unterrichtsgangplanung und -durchführung • Analyse bzgl. -

Athletes' Commission Elections Candidate Profiles Akvile

Athletes’ Commission Elections Candidate Profiles Akvile STAPUSAITYTE (Lithuania) Date of Birth – 25 March 1986 Current Ranking ‐ Women’s Singles: 224 Achievements o Played at the Beijing 2008 and London 2012 London Olympic Games Languages spoken/written Lithuanian, English, Russian Statement from the Player Akvile Stapusaityte is a professional badminton player from Lithuania. She has been national champion multiple times in singles and other disciplines as well. Akville has represented her nation at two Olympic Games – the first was in the year 2008 in Beijing in China and the second one in the 2012 London Games. Her badminton career has seen her play at many international tournaments hosted in many different countries far and wide. From her travels, Akville has observed that conditions at various tournaments were generally good but there are many things that could be further improved. Among them, she listed the following areas that can be worked on ‐ individual entry, tournament time schedules, better understanding between players and empires, more efficient price money distribution and player’s lounge. Hence, it is her wish to be able to offer her contribution to the game as a candidate such that there can be improvements in the future. Athletes’ Commission Elections 2017 – Candidate Profiles Athletes’ Commission Elections Candidate Profiles Edwin Ekiring (Uganda) Date of Birth – 22 December 1983 Current Ranking ‐ Men’s Singles: 331 Achievements o Former African No 1 o Represented Uganda at two Olympic Games and two BWF World Championships Languages spoken/written English, Dutch, and a little bit of German Statement from the Player A profession badminton player and former African No 1, Edwin Ekiring has the honour of representing his country at two Olympic Games – Beijing 2008 and London 2012 as well as two BWF World Championships, three Commonwealth Games, three African Games plus a long list of international competitions. -

Badminton Er Fællessproget

Sommerlejre i Danmark (som D BÎ,,kender til) Lejrnavn Dato Arrangør Sted Niveau Andre inform ationer DBF Badmintonlejr 23. juni - 25. juni DBF Sæby Hallen U11-U13 Www.badminton.dk DBF Badm intonlejr 26. juni - 29. juni DBF Sæby Hallen U15-U17 Www.badminton.dk DBF Badmintonlejr 30. juni - 2. juli DBF Sakskøbing Hallen U13-U15 Www.badminton.dk DBF Badmintonlejr 23. juni - 25. juni DBF Sorø Hallerne U11-U13 Www.badminton.dk DBF Badmintonlejr 26. juni - 29. juni DBF Sorø Hallen U15-U17 W w w . b ad m i n t o n . d k DBF Badmintonlejr 28. juni - 30. juni DBF Lyngby Hallerne U11-U13 Www.badminton.dk DBF Badmintonlejr 31. juni - 3. august DBF Lyngby Hallerne U15-U17 Www.badminton.dk NetCam p 1: 7. -11. juli Badmintonsiden.dk Højbjerg U11A - U13EM A www.badmintonsiden.dk NetCamp 2: 12. -16. juli Badmlntonsiden.dk Højbjerg U13E - U15EM www.badmintonsiden.dk Viby Lejr 1 23. juni - 29. juni Viby Badminton Klub Viby J. U15 E + U17 og U19 EM A www.vibybk.dk Viby Lejr 2 30. juni - 5. juli Viby Badminton Klub Vib y J. U11-U 15 EM A www.vibybk.dk Glam sbjerg Lejr 1 22. juni - 25. juni Fyns Badminton Kreds Glam sbjerg U11 www.glam sbjerglejr.dk Glam sbjerg Lejr 2 25. juni - 29. juni Fyns Badminton Kreds Glam sbjerg U13-U15 www.glam sbjerglejr.dk Glam sbjerg Lejr 3 12. juli - 16. juli Fyns Badminton Kreds Glam sbjerg U15-U17 www.glam sbjerglejr.dk Glam sbjerg Lejr 4 16. juli - 20. juli Fyns Badminton Kreds Glam sbjerg U13-U15 www.glam sbjerglejr.dk Glam sbjerg Lejr 5 26. -

ROAD to BAKU – the Singles

ROAD TO BAKU – the singles This is an unofficial list of the qualifiers for the inaugural 2015 Baku European Games – if the qualification was decided now! Men’s singles Women’s singles Qual. Rank. NOC Player In / Out Qual. Rank. NOC Player In / Out 1 #3 Jan Ø. Jørgensen - 1 #7 Carolina Marin - 2 #9 Hans-Kristian Vittinghus In 2 #23 Beatriz Corrales - - #11 Viktor Axelsen Out 3 #26 Kirsty Gilmour - 3 #18 Marc Zwiebler - 4 #34 Karin Schnaase - 4 #24 Rajiv Ouseph - 5 #36 Line Kjærsfeldt - 5 #28 Brice Leverdez - 6 #38 Linda Zetchiri - 6 #32 Scott Evans - 7 #41 Chloe Magee - 7 #36 Eric Pang - 8 #42 Anna Thea Madsen - 8 #41 Henri Hurskainen - 9 #43 Sashina Vignes Waran - 9 #46 Ville Lång - 10 #47 Natalia Perminova - 10 #51 Misha Zilberman - 11 #48 Sabrina Jaquet - 11 #52 Petr Koukal - 12 #53 Kristina Gavnholt - 12 #53 Pablo Abian - 13 #59 Özge Bayrak - 13 #56 Vladimir Malkov - 14 #63 Jeanine Cicognini - 14 #69 Dmytro Zavadsky - 15 #76 Marija Ulitina - 15 #72 Luka Wraber - 16 #83 Kati Tolmoff - 16 #75 Adrian Dziolko - 17 #85 Soraya d.v. Eijbergen - 17 #79 Yuhan Tan - 18 #88 Elisabeth Baldauf In 18 #81 Raul Must - 19 #89 Akvile Stapusaityte - 19 #82 Kestutis Navickas - 20 #93 Lianne Tan - 20 #96 Iztok Utrosa - 21 #98 Anna Narel - 21 #111 Rosario Maddaloni - 22 #102 Jana Ciznarova - 22 #131 Blagovest Kisyov - - #103 Simone Prutsch Out 23 #141 Jarolim Vicen In 23 #110 Matilda Petersen - - #150 Matej Hlinican Out 24 #113 Nanna Vainio - 24 #153 Zvonimir Durkinjak - 25 #126 Laura Sarosi - 25 #161 Marius Myhre - 26 #133 Alesia Zaitsava - 26 #191 Enis Sesalan - 27 #144 Dorotea Sutara - 27 #213 Anthony Dumartheray - 28 #200 Telma Santos - 28 #304 Gergely Krausz In 29 #220 Monika Radovska - - #314 Rudolf Dellenbach Out 30* #249 Milica Simic - 29 #444 Pedro Martin - 31* #262 Ioanna Karkantzia In 30* #567 Ilija Pavlovic - AZE** #503 Alizada Parvin - 31* #633 Kari Gunnarsson In Res. -

YONEX German Open 2017 GERMAN OPEN INSIDE Donnerstag, 2

Event-Magazin der YONEX German Open 2017 GERMAN OPEN INSIDE Donnerstag, 2. März 2017 „Danke für sechs wunderschöne Jahre!“ Birgit Overzier und Michael Fuchs verabschiedeten sich am Mittwoch mit einem Klassematch von der internationalen Bühne und ihren Fans. GERMAN OPEN INSIDE - Donnerstag 3 Match of the Day 4 - 5 Höhepunkte am Donnerstag 129 - 13-13 Bericht XXXX Mittwoch 6 - 7 Turniergeschichten 14 -18 Ergebnisse 8 Foto des Tages 19 Spielplan Donnerstag Die innogy Sporthalle – seit 2005 werden die YONEX German Open in Mülheim an der Ruhr ausgetragen. Ergebnisse und Infos auf YONEX German Open german-open-badminton.de auf Facebook Impressum Herausgeber des Eventmagazins: Hinweise: Vermarktungsgesellschaft Der Herausgeber des Eventmaga- Badminton zins haftet nicht für den Inhalt der Deutschland mbH (VBD) veröffentlichten Anzeigen Südstraße 25a, D-45470 Mülheim an der Ruhr Team: Tel.: 0208/308 2717 Claudia Pauli (cp) E-Mail: janet.bourakkadi @ Sven Heise (sh) vbd-badminton.de Stephan Wilde (sw) Copyright: Papier & Druck: Nachdruck, auch auszugsweise, Konica Minolta Business nur mit ausdrücklicher Einwilli- Solutions Deutschland GmbH gung des Herausgebers und unter Alexanderstraße 38 voller Quellenangabe 45472 Mülheim an der Ruhr 2 German Open Inside | 2.3.2017 Match of the Day Achtelfinale Herreneinzel 19.55 Uhr auf Spielfeld 2 Fabian Roth – Takuma Ueda Fast ein Jahr musste Fabian Roth nach ei deutsche Team im Halbfinale gegen Däne ner Hüftoperation pausieren, dann legte er mark 1:3 und gewann Bronze. Den einzigen ein großartiges Comeback hin. Bei seinem Sieg steuerte Fabian bei – mit einer sensa ersten Turnier, den Belgian International im tionellen Leistung bezwang er den Europa September 2016 belegte er Platz drei. -

Auf Dem Sprung Nach Olympia: Vor Zwei Jahren Hatte Marc Zwiebler ('Links) Noch So Starke Rucken Schmerzen, Dass Er Nicht Allein in Die Hose Kam

Auf dem Sprung nach Olympia: Vor zwei Jahren hatte Marc Zwiebler ('links) noch so starke Rucken schmerzen, dass er nicht allein in die Hose kam. Dann land er einen guten Arzt und zu neuen Erfol gen: Peking l Da will er hin, genauso wie Ju liane Domscheid (oben) und Laura Darimont (ganz oben) II Bound for Beijing: Two years back, Marc Zwiebler (left) was in so much pain he couldn't even dress him self. Then a good doctor put him back on the road: to the Olympics! He's headed for China just like Juliane Dom scheid (above) and Laura Darimont (top) Nah am Wasser gebaut: Als Juliane Domscheid beim Schwimmen schei lerte, heulte sie tagelang. Dann begann sie vor drei Jahren zu rudern - und konnte schon jetzt Olym piasiegerin werden II Water baby: Juliane Domscheid didn'l quite make the grade as a swimmer and cried for days. Then three years ago, she took up rowing - and could well be in line for Olympic Gold ,ansa M,:gazin 07/08 People Olympia + - ~ 51 her dabei zu. Wenn sie mit einer Bleiweste von neun Kilo many victories. She accom the French cross the border It; und sie springt und springt und springt. Wenn sie lacht, panies Laura to training ses for a drink and people are n sie weint, wenn sie einfach nur ein mutiges Madchen ist, sions nearly every day and proud of where they come 3 sich von seiner Anatomie nicht unterkriegen lassen will. watches from the benches in from and will tell you with a Letztes Jahr gewann Laura Oarimont Silber und Bronze all weather. -

Turniermagazin YGO 2016

Den schnellsten t der Welt Racket-Spor live erleben Die besten Badmintonspieler kämpfen um 120.000 US$ Preisgeld RWE-Sporthalle in Mülheim an der Ruhr Mehr Infos unter www.german-open-badminton.de Veranstalter: Vermarktungsgesellschaft Badminton Deutschland mbH (VBD) für den Deutschen Badminton-Verband e.V. (DBV) Ausrichter: 1. Badminton-Verein Mülheim an der Ruhr e.V. YONEX.DE IMPOSANTE KONTROLLE Überliste deine Gegner mit der überragenden Präzision und den perfekten Kontrollqualitäten eines ARCSABER Rackets. MARC ZWIEBLER ARCSABER 11 ARCSABER Schläger geben dir genau die Performance für Kontrolle, Präzision und Feeling, die du brauchst, um deinen Gegner bei jedem Schlag in Bedrängnis zu bringen. Nutze diesen Vorteil, damit du als Sieger vom Feld gehst. YONEX GMBH • 47877 Willich • Tel. 0 21 54 / 9 18 60 • Fax 0 21 54 / 91 86 99 • e-mail: [email protected] INHALT / IMPRESSUM | CONTENT / IMPRINT Inhalt | Content* Geleitwort/Grußworte | Foreword/Greetings Historie | History Ulrich Scholten, Oberbürgermeister Mülheim a. d. Ruhr 4 Austragungsorte seit 1955 16 Christina Kampmann, Sportministerin Land NRW 4 Venues since 1955 Poul-Erik Høyer, President Badminton World Federation 5 Die Finalspiele der YONEX German Open 2015 19 Finals of YONEX German Open 2015 Poul-Erik Høyer, Präsident Badminton-Weltverband 5 Letzter „Heimsieg“ im Jahr 1975 22 Karl-Heinz Kerst, Präsident Deutscher Badminton-Verband 6 Deutsche Titelträger seit 1955 22 Kusaki Hayashida, President YONEX Co., Ltd. 6 Marc Zwiebler schrieb Sportgeschichte 23 Kusaki Hayashida, Präsident YONEX Co., Ltd. 7 Erfolge der DBV-Asse seit März 2015 24 Frank Thiemann, 1. Vorsitzender 1. BV Mülheim 7 Ulrich Schaaf, Präsident Badminton-Landesverband NRW 8 Martina Ellerwald, Leiterin Mülheimer SportService 8 Hintergrund Deutsches Badminton-Zentrum Mülheim a. -

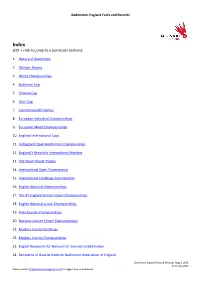

Facts and Records

Badminton England Facts and Records Index (cltr + click to jump to a particular section): 1. History of Badminton 2. Olympic Games 3. World Championships 4. Sudirman Cup 5. Thomas Cup 6. Uber Cup 7. Commonwealth Games 8. European Individual Championships 9. European Mixed Championships 10. England International Caps 11. All England Open Badminton Championships 12. England’s Record in International Matches 13. The Stuart Wyatt Trophy 14. International Open Tournaments 15. International Challenge Tournaments 16. English National Championships 17. The All England Seniors’ Open Championships 18. English National Junior Championships 19. Inter-County Championships 20. National Leisure Centre Championships 21. Masters County Challenge 22. Masters County Championships 23. English Recipients for Honours for Services to Badminton 24. Recipients of Awards made by Badminton Association of England Badminton England Facts & Records: Page 1 of 86 As at May 2021 Please contact [email protected] to suggest any amendments. Badminton England Facts and Records 25. English recipients of Awards made by the Badminton World Federation 1. The History of Badminton: Badminton House and Estate lies in the heart of the Gloucestershire countryside and is the private home of the 12th Duke and Duchess of Beaufort and the Somerset family. The House is not normally open to the general public, it dates from the 17th century and is set in a beautiful deer park which hosts the world-famous Badminton Horse Trials. The Great Hall at Badminton House is famous for an incident on a rainy day in 1863 when the game of badminton was said to have been invented by friends of the 8th Duke of Beaufort. -

YGO-Turniermagazin 2019

Den schnellsten Racketsport der Welt live erleben YONEX GERMAN OPEN 2019 part of the HSBC BWF World Tour Die besten Badmintonspieler kämpfen um 150.000 US$ Preisgeld 26.02.-03.03.2019 innogy Sporthalle in Mülheim an der Ruhr Mehr Infos unter www.german-open-badminton.de Veranstalter: Vermarktungsgesellschaft Badminton Deutschland mbH (VBD) für den Deutschen Badminton-Verband e.V. (DBV) Ausrichter: 1. Badminton-Verein Mülheim an der Ruhr e.V. Kento Momota, Sieger 2018 BWF World Championships FAR BEYOND ORDINARY YONEX Rackets und weiteres Badminton Equipment erhalten Sie an unseren Verkaufsständen. INHALT / IMPRESSUM | CONTENT / IMPRINT Inhalt | Content¹ Geleitwort/Grußworte | Foreword/Greetings Historie | History Ulrich Scholten, Oberbürgermeister Mülheim a. d. Ruhr 4 Große Herausforderungen beim Heimturnier 16 Andrea Milz, Staatssekretärin für Sport und Ehrenamt 4 Titelgewinne durch DBV-Asse seit 1955 16 in der Staatskanzlei des Landes NRW Podestplätze durch DBV-Asse seit 1955 17 Poul-Erik Høyer, President Badminton World Federation 5 Deutsche Einzelmeisterschaften 2019 19 Poul-Erik Høyer, Präsident Badminton-Weltverband 5 Die Finalspiele der YONEX German Open 2018 23 Thomas Born, Präsident Deutscher Badminton-Verband 6 Finals of YONEX German Open 2018 Kusaki Hayashida, President YONEX Co., Ltd. 6 Kusaki Hayashida, Präsident YONEX Co., Ltd. 7 Portrait Frank Thiemann, 1. Vorsitzender 1. BV Mülheim 7 Ulrich Schaaf, Präsident Badminton-Landesverband NRW 8 1. BVM: 2018 – das Jahr der Schüler 24 und Jugendlichen Martina Ellerwald, Leiterin -

Nikolaus-Turnier Mit Prominenter Besetzung

Mit der VOLTRIC Technologie haben die YONEX Ingenieure eine neue Racket-Generation entwickelt, die in bisher nicht gekannter Weise Power- und Handlingsqualitäten in einem Racket vereint. Damit bieten die VOLTRIC Schläger beste Voraussetzungen, um dem Spieler im offensiv orientieren Rally-Point Spielsystem einen nahezu unschlagbaren Vorteil zu verschaffen. Testen Sie jetzt selbst, dass nur YONEX Ihr Partner No 1 für hochwertiges Badminton-Equipment ist. Carsten Mogensen (DEN) VOLTRIC 80 Das Top-Racket der neuen VOLTRIC Schlägerserie mit unglaublicher Power und fantastischem Handling. VOLTRIC 70 Das extrem flinke Racket-Handling und gewaltige Power-Ressourcen machen dieses VOLTRIC Racket zum perfekten Partner für das offensive Badmintonspiel. YONEX GMBH • D - 47877 Willich • Tel. 0 21 54 / 9 18 60 • Fax 0 21 54 / 91 86 99 • e-mail: [email protected] INHALT / IMPRESSUM Inhalt Geleitwort/Grußworte Internationales Dagmar Mühlenfeld, Oberbürgermeisterin von Mülheim a. d. Ruhr 4 Das Turniersystem der BWF 15 Karl-Heinz Kerst, Präsident des DBV 4 Punkte für die Weltrangliste 16 Yoshihiro Yano, Geschäftsführer der YONEX GmbH 5 Die Preisgeldverteilung bei den YGO 2011 16 Thomas van Emmenes, Vorsitzender des 1. BV Mülheim 5 Siege fürs Geschichtsbuch 18 Ulrich Schaaf, Präsident des BLV-NRW 6 Die Medaillengewinner/innen 19 Martina Ellerwald, Stellv. Leiterin des Mülheimer SportService 6 der Individual-WM 2010 Hintergrund Turnierinformationen Ein silbernes Jubiläum 21 Veranstalter, Haupt- und Namenssponsor 7 Siegerpreise 7 Portrait Turnierablauf und Ticketpreise -

Men's Singles Results Gold Silver Bronze Bronze World Championships Chen Jin Taufik Hidayat Park Sung Hwan Peter Gade

⇧ 2011 Back to Badzine Results Page ⇩ 2009 2010 Men's Singles Results Gold Silver Bronze Bronze World Championships Chen Jin Taufik Hidayat Park Sung Hwan Peter Gade Super Series Korea Open Lee Chong Wei Peter Gade Chen Jin Chen Long Malaysia Open Lee Chong Wei Boonsak Ponsana Nguyen Tien Minh Peter Gade All England Lee Chong Wei Kenichi Tago Peter Gade Bao Chunlai Swiss Open Chen Jin Chen Long Du Pengyu Peter Gade Singapore Open Sony Dwi Kuncoro Boonsak Ponsana Kashyap Parupalli Peter Gade Indonesia Open Lee Chong Wei Taufik Hidayat Sony Dwi Kuncoro Nguyen Tien Minh Japan Open Lee Chong Wei Lin Dan Boonsak Ponsana Peter Gade China Masters Lin Dan Chen Long Bao Chunlai Wang Zhengming Denmark Open Jan O Jorgensen Taufik Hidayat Hu Yun Du Pengyu French Open Taufik Hidayat Joachim Persson Peter Gade Boonsak Ponsana China Open Chen Long Bao Chunlai Du Pengyu Chen Jin Hong Kong Open Lee Chong Wei Taufik Hidayat Nguyen Tien Minh Chen Long Superseries Finals Lee Chong Wei Peter Gade Boonsak Ponsana Chen Long Grand Prix Gold India Open GP Gold Alamsyah Yunus R. M. V. Gurusaidatt Chetan Anand Kashyap Parupalli Malaysia GP Gold Lee Chong Wei Wong Choong Hann Ajay Jayaram Taufik Hidayat U.S. Open GP Gold Rajiv Ouseph Brice Leverdez Yuichi Ikeda Derek Wong Macau Open Lee Chong Wei Lee Hyun Il Simon Santoso Boonsak Ponsana Chinese Taipei Open Simon Santoso Son Wan Ho Nguyen Tien Minh Dionysius Hayom Rumbaka Bitburger Open Chen Long Hans-Kristian Vittinghus Carl Baxter Petr Koukal Indonesia GP Gold Taufik Hidayat Dionysius Hayom Rumbaka Andre Kurniawan