Using Color Infrared (CIR) Imagery

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Radiant Heating with Infrared

WATLOW RADIANT HEATING WITH INFRARED: A TECHNICAL GUIDE TO UNDERSTANDING AND APPLYING INFRARED HEATERS

Contents: The Advantages of Radiant Heat; The Theory of Radiant Heat Transfer; Problem Solving; Controlling Radiant Heaters; Tips On Oven Design; Watlow RAYMAX® Heater Specifications.

The purpose of this technical guide is to assist customers in their oven design process, not to put Watlow in the position of designing (and guaranteeing) radiant ovens. The final responsibility for an oven design must remain with the equipment builder. This technical guide will provide you with an understanding of infrared radiant heating theory and application principles. It also contains examples and formulas used in determining specifications for a radiant heating application. To further understand electric heating principles, thermal system dynamics, infrared temperature sensing, temperature control and power control, the following information is also available from Watlow:

• Watlow Product Catalog
• Watlow Application Guide
• Watlow Infrared Technical Guide to Understanding and Applying Infrared Temperature Sensors
• Infrared Technical Letter #5-Emissivity Table
• Radiant Technical Letter #11-Energy Uniformity of a Radiant Panel

© Watlow Electric Manufacturing Company, 1997

The Advantages of Radiant Heat

Electric radiant heat has many benefits over the alternative heating methods of conduction and convection:

• Non-Contact Heating: Radiant heaters have the ability to heat a product without physically contacting it. This can be advantageous when the product must be heated while in motion or when physical contact would contaminate or mar the product’s surface finish.
• Fast Response: Low thermal inertia of an infrared radiation heating system eliminates the need for long pre-heat cycles.

Understanding Infrared Light

TEACHER/PARENT ACTIVITY MANUAL

Joint Polar Satellite System: Understanding Infrared Light

This activity educates students about the electromagnetic spectrum, or different forms of light detected by Earth observing satellites. The Joint Polar Satellite System (JPSS), a collaborative effort between NOAA and NASA, detects various wavelengths of the electromagnetic spectrum including infrared light to measure the temperature of Earth’s surface, oceans, and atmosphere. The data from these measurements provide the nation with accurate weather forecasts, hurricane warnings, wildfire locations, and much more! Provided is a list of materials that can be purchased to complete several learning activities, including simulating infrared light by constructing homemade infrared goggles.

Learning Objectives: Next Generation Science Standards (Grades 5–8)

• Performance Expectation 4-PS4-1, Waves and Their Applications in Technologies for Information Transfer. Disciplinary Core Idea PS4.A: Wave Properties. Waves, which are regular patterns of motion, can be made in water by disturbing the surface. When waves move across the surface of deep water, the water goes up and down in place; there is no net motion in the direction of the wave except when the water meets a beach. (Note: This grade band endpoint was moved from K–2.) Waves of the same type can differ in amplitude (height of the wave) and wavelength (spacing between wave peaks).
• Performance Expectation 4-PS4-2, Waves and Their Applications in Technologies for Information Transfer. Disciplinary Core Idea PS4.B: Electromagnetic Radiation. An object can be seen when light reflected from its surface enters the eyes.
• Performance Expectation 4-PS3-2. Disciplinary Core Idea PS3.B. Light also transfers energy from place to place.

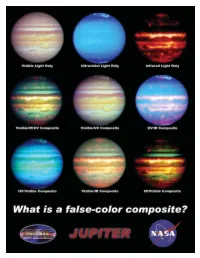

Jupiter Litho

What Is a False-Color Composite? When most of us think of light, we naturally think about the light we can see with our eyes. But did you know that visible light is only a tiny fraction of the electromagnetic spectrum? You see, the human eye is only sensitive to certain wavelengths of light, and there are many other wavelengths, including radio waves, infrared light, ultraviolet light, x-rays, and gamma rays. When astronomers observe the cosmos, some objects are better observed at wavelengths that we cannot see. In order to help us visualize what we're looking at, sometimes scientists and artists will create a false-color image. Basically, the wavelengths of light that our eyes can't see are represented by a color (or a shade of grey) that we can see. Even though these false-color images do not show us what the object we're observing would actually look like, it can still provide us with a great deal of insight about the object. A composite is an image which combines different wavelengths into the same image. Since a composite isn't what an object really looks like, the same object can be represented countless different ways to emphasize different features. On the reverse are nine images of the planet Jupiter. The first image is a visible-light image, and shows the planet as it might look to our eyes. This image is followed by two false-color images, one showing the planet in the ultraviolet spectrum, and one showing it in the infrared spectrum. The six composites that follow are all made from the first three images, and you can see how different the planet can look! Definitions composite – An image that combines several different wavelengths of light that humans cannot see into one picture. -

False Color Photography Effect Using Hoya UV&IR Cut Filters

MATEC Web of Conferences 197, 15008 (2018) https://doi.org/10.1051/matecconf/201819715008 AASEC 2018 False Color Photography Effect using Hoya UV&IR Cut Filters Feature White Balance Setya Chendra Wibawa1,6*, Asep Bayu Dani Nandyanto2, Dodik Arwin Dermawan3, Alim Sumarno4, Naim Rochmawati5, Dewa Gede Hendra Divayana6, and Amadeus Bonaventura6 1Universitas Negeri Surabaya, Educational in Information Technology, Informatics Engineering, 20231, Indonesia, 2Universitas Pendidikan Indonesia, Chemistry Department, Bandung, 40154, Indonesia, 3Universitas Negeri Surabaya, Informatics Engineering, 60231, Indonesia, 4Universitas Negeri Surabaya, Educational Technology, 60231, Indonesia, 5Universitas Negeri Surabaya, Informatics Engineering, 60231, Indonesia, 6Universitas Pendidikan Ganesha, Educational in Information Technology, Informatics Engineering, 81116, Indonesia Abstract. The research inspired by modification DSLR camera become False Color Photography effect. False Color Photography is a technique to give results like near-infrared. Infrared photography engages capturing invisible light to produces a striking image. The objective of this research to know the effect by change a digital single-lens reflex (D-SLR) camera to be false color. The assumption adds a filter and make minor adjustments or can modify the camera permanently by removing the hot mirror. This experiment confirms change usual hot mirror to Hoya UV&IR Cut Filter in front of sensor CMOS. The result is false color effect using feature Auto White Balance such as the color of object photography change into reddish, purplish, old effect, in a pint of fact the skin of model object seems to be smoother according to White Balance level. The implication this study to get more various effect in photography. 1 Introduction The camera sensor is, in essence, monochromatic. -

Study of X-Ray Image Via Pseudo Coloring Algorithm

Sharma Komal et al.; International Journal of Advance Research, Ideas and Innovations in Technology. ISSN: 2454-132X, Impact factor: 4.295 (Volume 5, Issue 1). Available online at: www.ijariit.com

Study of X-ray image via pseudo coloring algorithm

Komal Sharma ([email protected]), Rayat Bahra University, Mohali, Punjab; Karamvir Singh Rajpal ([email protected]), Neonex Technology, Mohali, Punjab; Ravi Kant ([email protected]), Rayat Bahra University, Mohali, Punjab

ABSTRACT: The objective of this paper is to comprehensively study the concept of pseudo colouring techniques with Repetitive Line Tracking Method.

Keywords: False coloring, MATLAB, Ferro magnetic, Medical images

1. INTRODUCTION

Medical imaging refers to techniques and processing used to create images of unanimous parts of the body. It is not always easy to analyze and interpret X-ray images. The human eye can distinguish only a limited number of gray scale values but can distinguish thousands of colors. So it is clear that the human eye can extract more information from a colored image than from a gray image, and pseudo coloring is very useful in improving the visibility of an image. As we know, in X-ray images we can only perceive gray shades. In detecting edges of dark hues like Violet, Indigo, Blue and Green [2]. Organs and tissues within the body contain magnetic properties. MRI involves radio waves and a magnetic field (instead of X-rays) and, on the other side, a computer is used to manipulate the magnetic elements and generate highly detailed images of organs in the body.

Fig. 2: X-Ray Image of hand before repetitive line tracking method is applied

Fig. 3: X-Ray Image of hand after repetitive line tracking method is applied
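The idea that a gray level can be shown as a color the eye separates more easily can be sketched in a few lines. This is a minimal illustration only: the blue-to-green-to-red ramp and the function name `pseudo_color` are assumptions made here, and the sketch is not the Repetitive Line Tracking Method named in the excerpt.

```python
def pseudo_color(gray):
    """Map an 8-bit gray level to an (R, G, B) tuple.

    Hypothetical colormap: dark values are blue, mid values green,
    bright values red, with linear blends in between.
    """
    if not 0 <= gray <= 255:
        raise ValueError("gray level must be in 0..255")
    if gray < 128:
        t = gray / 127            # 0.0 at black, 1.0 at mid gray
        return (0, round(255 * t), round(255 * (1 - t)))
    t = (gray - 128) / 127        # 0.0 at mid gray, 1.0 at white
    return (round(255 * t), round(255 * (1 - t)), 0)


# Apply the map to a tiny made-up grayscale image.
xray = [[0, 127], [128, 255]]
colored = [[pseudo_color(v) for v in row] for row in xray]
```

Any monotone mapping would serve the same purpose; the choice of ramp decides which gray ranges end up most distinguishable.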

Multidisciplinary Design Project Engineering Dictionary Version 0.0.2

Multidisciplinary Design Project Engineering Dictionary, Version 0.0.2, February 15, 2006. DRAFT. Cambridge-MIT Institute Multidisciplinary Design Project

This Dictionary/Glossary of Engineering terms has been compiled to complement the work developed as part of the Multi-disciplinary Design Project (MDP), which is a programme to develop teaching material and kits to aid the running of mechatronics projects in Universities and Schools. The project is being carried out with support from the Cambridge-MIT Institute undergraduate teaching programme. For more information about the project please visit the MDP website at http://www-mdp.eng.cam.ac.uk or contact:

• Dr. Peter Long, Cambridge University Engineering Department, Trumpington Street, Cambridge, CB2 1PZ. e-mail: [email protected], tel: +44 (0) 1223 332779
• Prof. Alex Slocum, Massachusetts Institute of Technology, 77 Massachusetts Ave., Cambridge MA 02139-4307, USA. e-mail: [email protected], tel: +1 617 253 0012

For information about the CMI initiative please see the Cambridge-MIT Institute website: http://www.cambridge-mit.org

• CMI, University of Cambridge, 10 Miller’s Yard, Mill Lane, Cambridge, CB2 1RQ. tel: +44 (0) 1223 327207, fax: +44 (0) 1223 765891
• CMI, Massachusetts Institute of Technology, 77 Massachusetts Ave., Cambridge MA 02139-4307, USA. tel: +1 617 253 7732, fax: +1 617 258 8539

1 Introduction

This dictionary/glossary has not been developed as a definitive work but as a useful reference book for engineering students to search when looking for the meaning of a word/phrase. It has been compiled from a number of existing glossaries together with a number of local additions.

INTRODUCTION to ERDAS IMAGINE Goals: 1. Display a Raster Image

ERDAS 1: INTRODUCTION TO ERDAS IMAGINE

Goals:

1. Display a raster image using panchromatic, normal and false color options.
2. Use the zoom tool/buttons to properly display an image and the inquire cursor to identify pixel X, Y locations and digital numbers (DN).
3. Display an image automatically scaled with DNs stretched from 0-255, or with natural spectral variation. Use the histogram to view spectral variability and frequency/proportion of DNs within each class.
4. Perform a linear contrast stretch.
5. Measure distances and areas within an image.
6. Create spatial and spectral profiles of an image.

GETTING STARTED

To begin your Imagine Session, double-click on the ERDAS Imagine / Imagine Icon or select it from the start-up menu. Erdas Imagine 2011 with a blank 2D View #1 will appear.

1. DISPLAYING AN IMAGE

To open and display a raster image you can do either of the following:

• From the ERDAS Application select File - Open - Raster Layer (Data will be in Vender Data; ERDAS folder; Example Data_201; lanier.img). Do not click OK yet. Select the Raster Options tab. This is a Landsat Thematic Mapper (TM) image that has 7 wavebands of information with 512 rows and columns of raster information. In the Layers to Colors section, use the following band/color assignments: Red 4, Green 3, Blue 2. This is a False Color Composite which sends Near Infrared (NIR) Light in TM Band 4 to the Red color gun, Red Light in TM Band 3 to the Green gun, and Green Light in TM Band 2 to the Blue gun.
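The band-to-gun assignment and the linear contrast stretch named in the goals can be sketched outside ERDAS with plain Python lists. This is a hedged illustration only: the 2x2 band values are made up, and `linear_stretch` and `false_color_composite` are hypothetical helper names, not ERDAS IMAGINE functions.

```python
# Toy 2x2 scene: one grid of digital numbers (DN) per band. Values are invented.
nir = [[200, 180], [60, 40]]     # stands in for TM Band 4
red = [[50, 60], [90, 100]]      # stands in for TM Band 3
green = [[70, 80], [85, 95]]     # stands in for TM Band 2


def linear_stretch(band, out_min=0, out_max=255):
    """Linearly rescale a band so its lowest and highest DN map to out_min and out_max."""
    flat = [v for row in band for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:                 # flat band: nothing to stretch
        return [[out_min for _ in row] for row in band]
    scale = (out_max - out_min) / (hi - lo)
    return [[round(out_min + (v - lo) * scale) for v in row] for row in band]


def false_color_composite(nir_band, red_band, green_band):
    """Build (R, G, B) display pixels: NIR -> red gun, red -> green gun, green -> blue gun."""
    return [
        [(n, r, g) for n, r, g in zip(n_row, r_row, g_row)]
        for n_row, r_row, g_row in zip(nir_band, red_band, green_band)
    ]


composite = false_color_composite(
    linear_stretch(nir), linear_stretch(red), linear_stretch(green)
)
```

In this toy scene the pixel with the strongest NIR response comes out pure red, which is why healthy vegetation typically appears red in a 4-3-2 composite.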

False Color Suppression in Demosaiced Color Images

False Color Suppression in Demosaiced Color Images

Jayanta Mukherje, Department of Computer Science & Engg., Indian Institute of Technology, Kharagpur, INDIA-721302, [email protected]; Manfred K. Lan, Institute for Human-Machine Communication, Technical University of Munich, D-80290, Munich, Germany, [email protected]; S. K. Mitra, Department of Electrical & Computer Engg., University of California, Santa Barbara, 93106, USA, [email protected]

Abstract

In a single-chip digital color camera, a color filter array (CFA) is used to obtain sampled spectral components (red, green and blue) in an interleaved fashion. A color demosaicing operation is then carried out to determine the missing spectral components at every location. One of the problems in color demosaicing is that many of the interpolated images are affected by colored artifacts near the edges creating false colors. The problem is more severe if the edges are achromatic. In this paper we propose the use of median filtering for suppressing this phenomenon. We have considered a set of existing interpolation algorithms and presented their performances in interpolating mosaiced patterns. Next we have carried out median filtering of the chrominance components of the demosaiced images. In each case, the post-processing has remarkably improved the quality of the reconstructions. The observations are verified by both quantitative measures for expressing the quality of reconstruction as well as by visual examinations of the reconstructed images.

Figure 1: The Bayer pattern.

or color demosaicing. One of the major concerns of this interpolation task is to keep the hardware cost as well as the computation time as small as possible. This is to make the digital color imaging (for both still and video images) cost-effective and technologically viable. Hence the methods usually employed in practice are based on low storage requirement (storing two or three rows of the captured image during scanning and processing) and simple computations (such as averag-

Undersea Warfare

THE EMERGING ERA IN UNDERSEA WARFARE

BRYAN CLARK, www.csbaonline.org

Introduction

U.S. defense strategy depends in large part on America’s advantage in undersea warfare. Quiet submarines are one of the U.S. military’s most viable means of gathering intelligence and projecting power in the face of mounting anti-access/area-denial (A2/AD) threats being fielded by a growing number of countries. As a result, undersea warfare is an important, if not essential, element of current and future U.S. operational plans. America’s rivals worry in particular about the access submarines provide for U.S. power-projection operations, which can help offset an enemy’s numerical or geographic advantages.¹

Broadly speaking, undersea warfare is the employment of submarines and other undersea systems in military operations within and from the underwater domain. These missions may be both offensive and defensive and include surveillance, insertion of Special Forces, and destroying or neutralizing enemy military forces and undersea infrastructure.

America’s superiority in undersea warfare is the product of decades of research and development (R&D), a sophisticated defense industrial base, operational experience, and high-fidelity training. This superiority, however, is far from assured. U.S. submarines are the world’s quietest, but new detection techniques are emerging that do not rely on the noise a submarine makes, and that may render traditional manned submarine operations far riskier in the future. America’s competitors are likely pursuing these technologies while also expanding their own undersea forces.

Study of IRS 1C-LISS III Image and Identification of Land Cover Features Based on Spectral Responses

7-9 April 2020 /// Amsterdam, www.geospatialworldforum.org, #GWF2020

Study of IRS 1C-LISS III Image and Identification of land cover features based on Spectral Responses

Rubina Parveen, Research Scholar, VTU, Belgavi, India, [email protected]; Dr. Subhash Kulkarni, PESIT, Bangaluru (South Campus), India, [email protected]; Dr. V.D. Mytri, AIET Kalburgi, India, [email protected]

Abstract— Satellite Remote sensing with repetitive and pan viewing and multispectral capabilities, is a powerful tool for mapping and monitoring the ecological changes. Analysis of the remote sensing data is faced with a number of challenges ranging from type of sensors, number of sensors, spectral responses of satellite sensors, resolutions in different domains and qualitative and quantitative interpretation. Any analysis of satellite imagery directly depends on the uniqueness of above features. The multispectral image from IRS LISS-III sensor has been used as the primary data to produce land cover classification. This paper reports on the study of LISS III image, with emphasis on spectral responses of satellite sensors.

earth surface features using remotely sensed data are based on the basic premise that different objects have unique reflectance/emittance properties in different parts of the electromagnetic spectrum [3]. The term “Signature” is defined as any set of observable characteristics, which directly or indirectly leads to the identification of an object and/or its condition. Signatures are statistical in nature with a certain mean value and some dispersion around it. Spectral variations: Spectral variations are the changes in the reflectance or emittance of objects as a function of wavelength. Spatial variations: Spatial arrangements of terrain features, providing attributes such as shape,

Color Filter Array Demosaicking: New Method and Performance Measures Wenmiao Lu, Student Member, IEEE, and Yap-Peng Tan, Member, IEEE

1194 IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 12, NO. 10, OCTOBER 2003

Color Filter Array Demosaicking: New Method and Performance Measures

Wenmiao Lu, Student Member, IEEE, and Yap-Peng Tan, Member, IEEE

Abstract—Single-sensor digital cameras capture imagery by covering the sensor surface with a color filter array (CFA) such that each sensor pixel only samples one of three primary color values. To render a full-color image, an interpolation process, commonly referred to as CFA demosaicking, is required to estimate the other two missing color values at each pixel. In this paper, we present two contributions to the CFA demosaicking: a new and improved CFA demosaicking method for producing high quality color images and new image measures for quantifying the performance of demosaicking methods. The proposed demosaicking method consists of two successive steps: an interpolation step that estimates missing color values by exploiting spatial and spectral correlations among neighboring pixels, and a post-processing step that suppresses noticeable demosaicking artifacts by adaptive

demosaicking or CFA interpolation method, is required to estimate for each pixel its two missing color values. An immense number of demosaicking methods have been proposed in the literature [1]–[15]. The simplest one is probably bilinear interpolation, which fills missing color values with weighted averages of their neighboring pixel values. Although computationally efficient and easy to implement, bilinear interpolation introduces severe demosaicking artifacts and smears sharp edges. To obtain more visually pleasing results, many adaptive CFA demosaicking methods have been proposed to exploit the spectral and spatial correlations among neighboring pixels.
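Bilinear interpolation, the baseline mentioned above, can be sketched for the green channel alone. The sketch assumes an RGGB Bayer layout and equal weights over the in-bounds horizontal and vertical neighbors; the layout, the sample values, and the function names are illustrative assumptions, and this is not the method proposed in the paper.

```python
def bayer_color(y, x):
    """Color sampled at (y, x) in an assumed RGGB layout: R at even/even, B at odd/odd."""
    if (y + x) % 2 == 1:
        return "G"
    return "R" if y % 2 == 0 else "B"


def interpolate_green(mosaic):
    """Fill in green at red and blue sites by averaging the adjacent green samples.

    Expects a mosaic of at least 2x2 so every site has a green neighbor.
    """
    h, w = len(mosaic), len(mosaic[0])
    green = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if bayer_color(y, x) == "G":
                green[y][x] = float(mosaic[y][x])   # measured value, keep as is
            else:
                # The 4-connected neighbors of an R or B site are all green sites.
                neighbors = [
                    mosaic[ny][nx]
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                    if 0 <= ny < h and 0 <= nx < w
                ]
                green[y][x] = sum(neighbors) / len(neighbors)
    return green


# Made-up 4x4 raw mosaic; one sensor value per pixel.
mosaic = [
    [10, 20, 10, 40],
    [60, 90, 80, 90],
    [10, 100, 10, 120],
    [140, 90, 160, 90],
]
green = interpolate_green(mosaic)
```

Averaging straight across neighbors ignores edge direction, which is the source of the smearing and color artifacts the excerpt attributes to bilinear interpolation.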

Color and False-Color Films for Aerial Photography

Color and False-Color Films for Aerial Photography

RATFE G. TARKINGTON and ALLAN L. SOREM, Research Laboratories, Eastman Kodak Company, Rochester, N. Y.

ABSTRACT: Color reproduction by the photographic process using three primary colors is discussed, and the use of these photographic and optical principles for false-color reproduction is explained. The characteristics of two new aerial films, Kodak Ektachrome Aero Film (Process E-3) and a false-color type, Kodak Ektachrome Infrared Aero Film (Process E-3), are compared with those of the older products they replace. The new films have higher speed, improved definition, and less granularity.

Popular processes of color photography are based upon the facts that (1) the colors perceived by the human eye can be produced by mixtures of only three suitably chosen colors called primaries; (2) photographic emulsions can be made to respond selectively to each of these three colors; and (3) chemical reactions exist which can produce three individual colorants, each capable of absorbing essentially only one of the chosen primary colors. Although theory imposes no single unique set of three primary colors, in practice the colors chosen are those produced by light from successive thirds of the visible spectrum: red, green, and blue. When these

[FIG. 1. Schematic representation of a multilayer color film (Kodak Ektachrome Aero Film, Process E-3). Blue-sensitive layer: yellow positive image. Green-sensitive layer: magenta positive image. Red-sensitive layer: cyan positive image.]

in a scene, but the results obtained with modern color photographic materials are remarkably realistic representations of the original scene.