A False Color Image Fusion Method Based on Multi-Resolution Color

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

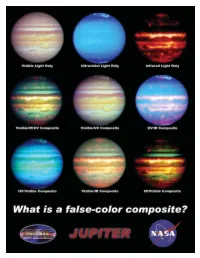

Jupiter Litho

What Is a False-Color Composite?

When most of us think of light, we naturally think about the light we can see with our eyes. But did you know that visible light is only a tiny fraction of the electromagnetic spectrum? The human eye is sensitive only to certain wavelengths of light, and there are many other wavelengths, including radio waves, infrared light, ultraviolet light, x-rays, and gamma rays. When astronomers observe the cosmos, some objects are better observed at wavelengths that we cannot see. To help us visualize what we're looking at, scientists and artists sometimes create a false-color image: the wavelengths of light that our eyes can't see are represented by a color (or a shade of grey) that we can see. Even though these false-color images do not show us what the object would actually look like, they can still provide a great deal of insight about it.

A composite is an image which combines different wavelengths into the same image. Since a composite isn't what an object really looks like, the same object can be represented in countless different ways to emphasize different features.

On the reverse are nine images of the planet Jupiter. The first image is a visible-light image, and shows the planet as it might look to our eyes. It is followed by two false-color images, one showing the planet in the ultraviolet spectrum and one showing it in the infrared spectrum. The six composites that follow are all made from the first three images, and you can see how different the planet can look!

Definitions

composite – An image that combines several different wavelengths of light that humans cannot see into one picture.
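The idea of building several composites from the same three source images can be sketched in a few lines of Python. This is only an illustration: the 2x2 brightness maps and the function names `normalize` and `composite` are made up for the example and do not come from the litho.

```python
def normalize(band):
    """Rescale a 2-D list of numbers to the 0..255 display range."""
    flat = [v for row in band for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1  # avoid dividing by zero on a flat image
    return [[round(255 * (v - lo) / span) for v in row] for row in band]


def composite(red_src, green_src, blue_src):
    """Assign three single-wavelength images to the R, G and B channels."""
    r, g, b = normalize(red_src), normalize(green_src), normalize(blue_src)
    return [list(zip(rr, gr, br)) for rr, gr, br in zip(r, g, b)]


# Hypothetical 2x2 brightness maps, one per wavelength range
infrared = [[0, 10], [20, 40]]
visible = [[5, 5], [10, 15]]
ultraviolet = [[1, 3], [2, 1]]

# The same data, shown two different ways
ir_as_red = composite(infrared, visible, ultraviolet)
uv_as_red = composite(ultraviolet, visible, infrared)
```

Swapping which source feeds which channel changes the colors without changing the underlying measurements, which is why one object can yield many different composites.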

False Color Photography Effect Using Hoya UV&IR Cut Filters Feature White Balance

MATEC Web of Conferences 197, 15008 (2018), https://doi.org/10.1051/matecconf/201819715008, AASEC 2018

False Color Photography Effect using Hoya UV&IR Cut Filters Feature White Balance

Setya Chendra Wibawa1,6*, Asep Bayu Dani Nandyanto2, Dodik Arwin Dermawan3, Alim Sumarno4, Naim Rochmawati5, Dewa Gede Hendra Divayana6, and Amadeus Bonaventura6

1Universitas Negeri Surabaya, Educational in Information Technology, Informatics Engineering, 20231, Indonesia
2Universitas Pendidikan Indonesia, Chemistry Department, Bandung, 40154, Indonesia
3Universitas Negeri Surabaya, Informatics Engineering, 60231, Indonesia
4Universitas Negeri Surabaya, Educational Technology, 60231, Indonesia
5Universitas Negeri Surabaya, Informatics Engineering, 60231, Indonesia
6Universitas Pendidikan Ganesha, Educational in Information Technology, Informatics Engineering, 81116, Indonesia

Abstract. This research was inspired by modifying a DSLR camera to produce a false color photography effect. False color photography is a technique that gives results similar to near-infrared photography. Infrared photography involves capturing invisible light to produce a striking image. The objective of this research is to determine the effect of converting a digital single-lens reflex (D-SLR) camera to false color. The assumption is that one can either add a filter and make minor adjustments or modify the camera permanently by removing the hot mirror. This experiment replaces the usual hot mirror with a Hoya UV&IR Cut Filter in front of the CMOS sensor. The result is a false color effect obtained with the Auto White Balance feature: the colors of the photographed object change to reddish, purplish, or an aged look, and in point of fact the model's skin appears smoother, depending on the White Balance level. The implication of this study is a wider variety of effects in photography.

1 Introduction

The camera sensor is, in essence, monochromatic.

Study of X-Ray Image Via Pseudo Coloring Algorithm

Sharma Komal et al.; International Journal of Advance Research, Ideas and Innovations in Technology. ISSN: 2454-132X, Impact factor: 4.295 (Volume 5, Issue 1). Available online at: www.ijariit.com

Komal Sharma (Rayat Bahra University, Mohali, Punjab), Karamvir Singh Rajpal (Neonex Technology, Mohali, Punjab), Ravi Kant (Rayat Bahra University, Mohali, Punjab)

ABSTRACT

The objective of this paper is to comprehensively study the concept of pseudo colouring techniques with the Repetitive Line Tracking Method.

Keywords: False coloring, MATLAB, Ferro magnetic, Medical images

1. INTRODUCTION

Medical imaging refers to the techniques and processes used to create images of parts of the body. It is not always easy to analyze and interpret X-ray images. The human eye can distinguish only a limited number of gray scale values but can distinguish thousands of colors, so it can extract more information from a colored image than from a gray image. Pseudo coloring is therefore very useful in improving the visibility of an image. As we know, in X-ray images we can perceive only gray shades. In detecting edges of dark hues like Violet, Indigo, Blue and Green [2]. Organs and tissues within the body contain magnetic properties. MRI involves radio waves and a magnetic field (instead of X-rays), and a computer is used to manipulate the magnetic elements and generate highly detailed images of organs in the body.

Fig. 2: X-ray image of hand before repetitive line tracking method is applied

Fig. 3: X-ray image of hand after repetitive line tracking method is applied
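As a rough illustration of pseudo coloring in general (not of the Repetitive Line Tracking Method, which the excerpt does not describe), each gray level can be looked up in a color ramp. The blue-green-red anchor points and the name `pseudo_color` below are assumptions chosen for the example.

```python
# Assumed colour ramp: gray 0 -> blue, 128 -> green, 255 -> red
ANCHORS = [(0, (0, 0, 255)), (128, (0, 255, 0)), (255, (255, 0, 0))]


def pseudo_color(gray):
    """Map a gray level in 0..255 to an (R, G, B) triple by linear interpolation."""
    for (g0, c0), (g1, c1) in zip(ANCHORS, ANCHORS[1:]):
        if g0 <= gray <= g1:
            t = (gray - g0) / (g1 - g0)
            return tuple(round(a + t * (b - a)) for a, b in zip(c0, c1))
    raise ValueError("gray level must be in 0..255")


# Precompute a lookup table, then recolour a tiny hypothetical gray image
LUT = [pseudo_color(g) for g in range(256)]
gray_image = [[0, 128], [200, 255]]
color_image = [[LUT[g] for g in row] for row in gray_image]
```

Neighbouring gray levels that are hard to tell apart on a monochrome display land on visibly different hues, which is the visibility gain the introduction describes.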

INTRODUCTION to ERDAS IMAGINE Goals: 1. Display a Raster Image

ERDAS 1: INTRODUCTION TO ERDAS IMAGINE

Goals:

1. Display a raster image using panchromatic, normal and false color options.
2. Use the zoom tool/buttons to properly display an image and the inquire cursor to identify pixel X, Y locations and digital numbers (DN).
3. Display an image automatically scaled with DNs stretched from 0-255, or with natural spectral variation.
   - Use the histogram to view spectral variability and frequency/proportion of DNs within each class.
4. Perform a linear contrast stretch.
5. Measure distances and areas within an image.
6. Create spatial and spectral profiles of an image.

GETTING STARTED

To begin your Imagine session, double-click on the ERDAS Imagine / Imagine icon or select it from the start-up menu. ERDAS Imagine 2011 with a blank 2D View #1 will appear.

1. DISPLAYING AN IMAGE

To open and display a raster image you can do either of the following:

- From the ERDAS Application select File - Open – Raster Layer (data will be in Vender Data; ERDAS folder; Example Data_201; lanier.img). Do not click OK yet. Select the Raster Options tab. This is a Landsat Thematic Mapper (TM) image that has 7 wavebands of information with 512 rows and columns of raster information. In the Layers to Colors section, use the following band/color assignments: Red 4, Green 3, Blue 2. This is a False Color Composite, which sends Near Infrared (NIR) light in TM Band 4 to the red color gun, red light in TM Band 3 to the green gun, and green light in TM Band 2 to the blue gun.
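Outside ERDAS, the 4-3-2 band assignment and a linear contrast stretch can be sketched as follows. This is not ERDAS code: the digital numbers, the stretch ranges and the function names are hypothetical, and only the band-to-gun mapping is taken from the exercise.

```python
from collections import Counter

# Band-to-gun assignment from the exercise: TM4 (NIR) -> red, TM3 -> green, TM2 -> blue
ASSIGNMENT = {"red": 4, "green": 3, "blue": 2}


def stretch(dn, in_min, in_max):
    """Linear contrast stretch: map in_min..in_max onto 0..255, clipping outside."""
    if dn <= in_min:
        return 0
    if dn >= in_max:
        return 255
    return round(255 * (dn - in_min) / (in_max - in_min))


def to_display(pixel, ranges):
    """pixel: {band: DN}; ranges: {band: (min DN, max DN)} -> (R, G, B)."""
    rgb = []
    for gun in ("red", "green", "blue"):
        band = ASSIGNMENT[gun]
        lo, hi = ranges[band]
        rgb.append(stretch(pixel[band], lo, hi))
    return tuple(rgb)


def histogram(band):
    """Count how often each DN occurs in a 2-D band."""
    return Counter(dn for row in band for dn in row)


# Hypothetical vegetation pixel: high NIR (band 4), low red and green DNs
pixel = {2: 30, 3: 25, 4: 90}
ranges = {2: (20, 60), 3: (15, 55), 4: (10, 110)}
display_rgb = to_display(pixel, ranges)  # (204, 64, 64)
```

With these made-up values the pixel displays as a strong red, consistent with NIR being routed to the red gun.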

False Color Suppression in Demosaiced Color Images

Jayanta Mukherje (Department of Computer Science & Engg., Indian Institute of Technology, Kharagpur, INDIA-721302), Manfred K. Lan (Institute for Human-Machine Communication, Technical University of Munich, D-80290, Munich, Germany), S. K. Mitra (Department of Electrical & Computer Engg., University of California, Santa Barbara, 93106, USA)

Abstract

In a single-chip digital color camera, a color filter array (CFA) is used to obtain sampled spectral components (red, green and blue) in an interleaved fashion. A color demosaicing operation is then carried out to determine the missing spectral components at every location. One of the problems in color demosaicing is that many of the interpolated images are affected by colored artifacts near the edges, creating false colors. The problem is more severe if the edges are achromatic. In this paper we propose the use of median filtering for suppressing this phenomenon. We have considered a set of existing interpolation algorithms and presented their performances in interpolating mosaiced patterns. Next we have carried out median filtering of the chrominance components of the demosaiced images. In each case, the post-processing has remarkably improved the quality of the reconstructions. The observations are verified by both quantitative measures for expressing the quality of reconstruction as well as by visual examinations of the reconstructed images.

Figure 1: The Bayer pattern.

... or color demosaicing. One of the major concerns of this interpolation task is to keep the hardware cost as well as the computation time as small as possible. This is to make digital color imaging (for both still and video images) cost-effective and technologically viable. Hence the methods usually employed in practice are based on low storage requirement (storing two or three rows of the captured image during scanning and processing) and simple computations (such as averag-
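A minimal sketch of median filtering applied to chrominance after demosaicing is given below. The excerpt does not say which chrominance representation the authors use, so the color differences R-G and B-G, the 3x3 window and the edge handling are all assumptions made for illustration.

```python
from statistics import median


def median3x3(plane):
    """3x3 median filter; border pixels reuse the nearest edge value."""
    h, w = len(plane), len(plane[0])

    def at(y, x):
        return plane[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [[median(at(y + dy, x + dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1))
             for x in range(w)] for y in range(h)]


def suppress_false_color(rgb):
    """Median-filter the colour differences R-G and B-G, keeping G unchanged."""
    h, w = len(rgb), len(rgb[0])
    g = [[p[1] for p in row] for row in rgb]
    rg = median3x3([[p[0] - p[1] for p in row] for row in rgb])
    bg = median3x3([[p[2] - p[1] for p in row] for row in rgb])
    return [[(g[y][x] + rg[y][x], g[y][x], g[y][x] + bg[y][x])
             for x in range(w)] for y in range(h)]


# Hypothetical gray patch with one reddish false-colour pixel in the middle
patch = [[(100, 100, 100)] * 3 for _ in range(3)]
patch[1][1] = (180, 100, 100)
cleaned = suppress_false_color(patch)
```

Because the outlier affects only one value in each 3x3 window of the R-G plane, the median discards it and the patch returns to neutral gray, while the green channel is left as it was.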

7-9 April 2020 /// Amsterdam

7-9 April 2020 /// Amsterdam, www.geospatialworldforum.org, #GWF2020

Study of IRS 1C-LISS III Image and Identification of land cover features based on Spectral Responses

Rubina Parveen (Research Scholar, VTU, Belgavi, India), Dr. Subhash Kulkarni (PESIT, Bangaluru (South Campus), India), Dr. V.D. Mytri (AIET Kalburgi, India)

Abstract: Satellite remote sensing, with repetitive and pan viewing and multispectral capabilities, is a powerful tool for mapping and monitoring ecological changes. Analysis of remote sensing data is faced with a number of challenges ranging from type of sensors, number of sensors, spectral responses of satellite sensors, and resolutions in different domains to qualitative and quantitative interpretation. Any analysis of satellite imagery directly depends on the uniqueness of the above features. The multispectral image from the IRS LISS-III sensor has been used as the primary data to produce land cover classification. This paper reports on the study of the LISS III image, with emphasis on spectral responses of satellite sensors.

... earth surface features using remotely sensed data are based on the basic premise that different objects have unique reflectance/emittance properties in different parts of the electromagnetic spectrum [3]. The term “Signature” is defined as any set of observable characteristics which directly or indirectly leads to the identification of an object and/or its condition. Signatures are statistical in nature, with a certain mean value and some dispersion around it.

Spectral variations: Spectral variations are the changes in the reflectance or emittance of objects as a function of wavelength.

Spatial variations: Spatial arrangements of terrain features, providing attributes such as shape,

Color Filter Array Demosaicking: New Method and Performance Measures Wenmiao Lu, Student Member, IEEE, and Yap-Peng Tan, Member, IEEE

IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 12, NO. 10, OCTOBER 2003, p. 1194

Abstract: Single-sensor digital cameras capture imagery by covering the sensor surface with a color filter array (CFA) such that each sensor pixel only samples one of three primary color values. To render a full-color image, an interpolation process, commonly referred to as CFA demosaicking, is required to estimate the other two missing color values at each pixel. In this paper, we present two contributions to CFA demosaicking: a new and improved CFA demosaicking method for producing high quality color images and new image measures for quantifying the performance of demosaicking methods. The proposed demosaicking method consists of two successive steps: an interpolation step that estimates missing color values by exploiting spatial and spectral correlations among neighboring pixels, and a post-processing step that suppresses noticeable demosaicking artifacts by adaptive

... demosaicking or CFA interpolation method, is required to estimate for each pixel its two missing color values. An immense number of demosaicking methods have been proposed in the literature [1]–[15]. The simplest one is probably bilinear interpolation, which fills missing color values with weighted averages of their neighboring pixel values. Although computationally efficient and easy to implement, bilinear interpolation introduces severe demosaicking artifacts and smears sharp edges. To obtain more visually pleasing results, many adaptive CFA demosaicking methods have been proposed to exploit the spectral and spatial correlations among neighboring pixels.
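The bilinear baseline mentioned above can be sketched as follows; this is the simple method the paper improves on, not the paper's proposed algorithm. The RGGB layout, the equal weights and the handling of image borders are assumptions for this example, and the input is assumed to be at least 2x2 pixels.

```python
def channel_at(y, x):
    """Colour sampled at (y, x) in an assumed RGGB Bayer layout."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def demosaic_bilinear(cfa):
    """Fill each missing colour with the mean of same-colour samples in the 3x3 window."""
    h, w = len(cfa), len(cfa[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            sums = {"R": 0, "G": 0, "B": 0}
            counts = {"R": 0, "G": 0, "B": 0}
            for yy in range(max(0, y - 1), min(h, y + 2)):
                for xx in range(max(0, x - 1), min(w, x + 2)):
                    c = channel_at(yy, xx)
                    sums[c] += cfa[yy][xx]
                    counts[c] += 1
            own = channel_at(y, x)
            # Keep the measured sample; interpolate only the two missing colours
            row.append(tuple(cfa[y][x] if c == own else sums[c] / counts[c]
                             for c in "RGB"))
        out.append(row)
    return out


# Hypothetical mosaic of a uniformly coloured scene (R=200, G=100, B=50)
scene = {"R": 200, "G": 100, "B": 50}
cfa = [[scene[channel_at(y, x)] for x in range(4)] for y in range(4)]
full = demosaic_bilinear(cfa)
```

On a flat scene the reconstruction is exact. The artifacts the paper describes appear at sharp edges, where averaging across the edge mixes samples from both sides.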

Color and False-Color Films for Aerial Photography

RATFE G. TARKINGTON and ALLAN L. SOREM, Research Laboratories, Eastman Kodak Company, Rochester, N. Y.

ABSTRACT: Color reproduction by the photographic process using three primary colors is discussed, and the use of these photographic and optical principles for false-color reproduction is explained. The characteristics of two new aerial films, Kodak Ektachrome Aero Film (Process E-3) and a false-color type, Kodak Ektachrome Infrared Aero Film (Process E-3), are compared with those of the older products they replace. The new films have higher speed, improved definition, and less granularity.

Popular processes of color photography are based upon the facts that (1) the colors perceived by the human eye can be produced by mixtures of only three suitably chosen colors called primaries; (2) photographic emulsions can be made to respond selectively to each of these three colors; and (3) chemical reactions exist which can produce three individual colorants, each capable of absorbing essentially only one of the chosen primary colors. Although theory imposes no single unique set of three primary colors, in practice the colors chosen are those produced by light from successive thirds of the visible spectrum: red, green, and blue. When these

... in a scene, but the results obtained with modern color photographic materials are remarkably realistic representations of the original scene.

FIG. 1. Schematic representation of a multilayer color film. Kodak Ektachrome Aero Film (Process E-3): blue-sensitive layer, yellow positive image; green-sensitive layer, magenta positive image; red-sensitive layer, cyan positive image.

EAN-False Color

2021-09-08

Sales: [email protected] Exports: Export Summary Sheet Support: [email protected] EULA: End User License Agreement Phone: +1 (541) 716-5137 Web: sightlineapplications.com

1 Overview
1.1 Additional Support Documentation
1.2 SightLine Software Requirements
1.3 Application Bit Requirements
2 False Color Guidelines
2.1 Frequently Used Modes
3 Related SightLine Commands
4 False Color Palettes
4.1 Custom Color User Palette
4.2 Multiple Color Range Example
4.3 Sepia Tone Example
5 Questions and Additional Support

CAUTION: Alerts to a potential hazard that may result in personal injury, or an unsafe practice that causes damage to the equipment if not avoided.

IMPORTANT: Identifies crucial information that is important to setup and configuration procedures.

Used to emphasize points or reminds the user of something. Supplementary information that aids in the use or understanding of the equipment or subject that is not critical to system use.

© SightLine Applications, Inc.

1 Overview

Using false

False Color Images - a Js9 Activity

Purpose: To use the tools in js9 to examine features of a supernova remnant.

Background: This activity builds on tools and techniques learned in

- De-Coding Starlight: From Pixels to Images (Introduction to Imaging)
- 3-Color Composite Images
- X-Ray Spectroscopy of Supernova Remnants
- Star Formation and U/HLX’s in the Cartwheel Galaxy
- Analysis of Two Pulsating X-ray Sources

The above activities should be done first. Also, read about false color images at https://chandra.si.edu/photo/false_color.html

To save your images, go to File>Save>JPEG or PNG. You may also take a screenshot. Images can then be pasted into a Google doc or any other kind of document or presentation.

Procedure (using colormaps, contrast, bias, and scale):

1. Go to https://js9.si.edu.
2. The default image of Cas A (a type 2 supernova) is made up of pixels, each one with a number of X-rays that were recorded to hit each pixel during a given observation. Use Zoom until you can see individual pixels. Move your mouse over the picture and look at the first green number in the top left, which corresponds to pixel intensity. You can also go to View>Pixel Table. This looks much like the grid we used in “Decoding Starlight” to make an image of Cas A. Try various colormaps under Color. Compare the pixel numbers to the numbers on the color bar under the image.
3. Go back to Zoom>Zoom 1 to see the whole image.
4. Contrast refers to the rate of change of color with color level.

A False Color Fusion Strategy for Drusen and Geographic Atrophy Visualization in Optical Coherence Tomography Images

QIANG CHEN, PHD,* THEODORE LENG, MD, MS,† SIJIE NIU, MS,* JIAJIA SHI, BS,* LUIS DE SISTERNES, PHD,‡ DANIEL L. RUBIN, MD, MS‡

Purpose: To display drusen and geographic atrophy (GA) in a single projection image from three-dimensional spectral domain optical coherence tomography images based on a novel false color fusion strategy.

Methods: We present a false color fusion strategy to combine drusen and GA projection images. The drusen projection image is generated with a restricted summed-voxel projection (axial sum of the reflectivity values in a spectral domain optical coherence tomography cube, limited to the region where drusen is present). The GA projection image is generated by incorporating two GA characteristics: bright choroid and thin retina pigment epithelium. The false color fusion method was evaluated in 82 three-dimensional optical coherence tomography data sets obtained from 7 patients, for which 2 readers independently identified drusen and GA as the gold standard. The mean drusen and GA overlap ratio was used as the metric to determine the accuracy of visualization of the proposed method when compared with the conventional summed-voxel projection technique (axial sum of the reflectivity values in the complete spectral domain optical coherence tomography cube) and color fundus photographs (CFP).

Results: Comparative results demonstrate that the false color image is more effective in displaying drusen and GA than summed-voxel projection and CFP. The mean drusen/GA overlap ratios based on the conventional summed-voxel projection method, color fundus photographs, and the false color fusion method were 6.4%/100%, 64.1%/66.7%, and 85.6%/100%, respectively.

Design of False Color Palettes for Grayscale Reproduction

Filip A. Sala, Warsaw University of Technology, Faculty of Physics, Koszykowa 75, 00-662 Warsaw, Poland

Abstract

Design of false color palette is quite easy but some effort has to be done to achieve good dynamic range, contrast and overall appearance of the palette. Such palettes, for instance, are commonly used in scientific papers for presenting the data. However, to lower the cost of the paper most scientists decide to let the data to be printed in grayscale. The same applies to e-book readers based on e-ink where most of them are still grayscale. For majority of false color palettes reproducing them in grayscale results in ambiguous mapping of the colors and may be misleading for the reader. In this article design of false color palettes suitable for grayscale reproduction is described. Due to the monotonic change of luminance of these palettes grayscale representation is very similar to the data directly presented with a grayscale palette. Some suggestions and examples how to design such palettes are provided.

Keywords: false-color palettes; data visualization

False color palettes are used to display information in variety of fields like physical sciences (e.g. astronomy, optics) [1, 2, 3], medicine (e.g. in different imaging systems) [4] and industry [5]. In most applications palettes are optimized for better contrast, dynamic range and overall appearance of the palette [6, 7]. They are adjusted for particular data that have to be presented. There is no unique algorithm or easy rules to make a good false color palette.
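One way to check whether a palette survives grayscale printing is to test that its luminance changes monotonically from one end to the other. The sketch below is only an illustration of that idea: the Rec. 601 luma weights and the two example palettes are assumptions, not taken from the article.

```python
def luminance(rgb):
    """Approximate luminance using Rec. 601 luma weights (an assumed choice)."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def is_grayscale_safe(palette):
    """True if luminance strictly increases along the palette."""
    lum = [luminance(c) for c in palette]
    return all(a < b for a, b in zip(lum, lum[1:]))


# Two hypothetical palettes, given as a few anchor colours each
rainbow = [(0, 0, 255), (0, 255, 255), (0, 255, 0), (255, 255, 0), (255, 0, 0)]
heat = [(0, 0, 0), (128, 0, 0), (255, 0, 0), (255, 128, 0), (255, 255, 0), (255, 255, 255)]

rainbow_ok = is_grayscale_safe(rainbow)  # False: cyan is brighter than green, red darker than yellow
heat_ok = is_grayscale_safe(heat)        # True: brightness rises steadily
```

A palette that fails this check maps different data values to the same gray level when printed, which is the ambiguity the abstract warns about.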