Ask the Crowd to Find out What's Important

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Media Guide Template

MOST CHAMPIONSHIP TITLES

Following are the records for championships achieved in all of the five major events constituting the U.S. championships since 1881. (Active players are in bold.)

MOST TOTAL TITLES, ALL EVENTS

MEN

| Rank | Name | No. | Years (first to last title) |
|---|---|---|---|
| 1 | Bill Tilden | 16 | 1913-29 |
| 2 | Richard Sears | 13 | 1881-87 |
| T3 | Bob Bryan | 8 | 2003-12 |
| T3 | John McEnroe | 8 | 1979-89 |
| T3 | Neale Fraser | 8 | 1957-60 |
| T3 | Billy Talbert | 8 | 1942-48 |
| T3 | George M. Lott Jr. | 8 | 1928-34 |
| T8 | Jack Kramer | 7 | 1940-47 |
| T8 | Vincent Richards | 7 | 1918-26 |
| T8 | Bill Larned | 7 | 1901-11 |
| T8 | Holcombe Ward | 7 | 1899-1906 |

OPEN ERA*

| Rank | Name | No. | Years (first to last title) |
|---|---|---|---|
| T1 | Bob Bryan | 8 | 2003-12 |
| T1 | John McEnroe | 8 | 1979-89 |
| T3 | Todd Woodbridge | 6 | 1990-2003 |
| T3 | Jimmy Connors | 6 | 1974-83 |
| T5 | Roger Federer | 5 | 2004-08 |
| T5 | Max Mirnyi | 5 | 1998-2013 |
| T5 | Pete Sampras | 5 | 1990-2002 |
| T5 | Marty Riessen | 5 | 1969-80 |

Photos: Bill Tilden, John McEnroe

* All Open Era records include only titles won in 1968 and beyond

WOMEN Name No. -

United States Vs. Czech Republic

United States vs. Czech Republic

Fed Cup by BNP Paribas 2017 World Group Semifinal

Saddlebrook Resort, Tampa Bay, Florida * April 22-23

TABLE OF CONTENTS

- PREVIEW NOTES
- PLAYER BIOGRAPHIES (U.S. AND CZECH REPUBLIC)
- U.S. FED CUP TEAM RECORDS
- U.S. FED CUP INDIVIDUAL RECORDS
- ALL-TIME U.S. FED CUP TIES
- RELEASES/TRANSCRIPTS

2017 World Group (8 nations)

First Round (February 11-12)

- Czech Republic d. Spain, 3-2, at Ostrava, Czech Republic
- USA d. Germany, 4-0, at Maui, Hawaii
- Belarus d. Netherlands, 4-1, at Minsk, Belarus
- Switzerland d. France, 4-1, at Geneva, Switzerland

Semifinals (April 22-23)

- Czech Republic vs. USA at Tampa Bay, Florida
- Belarus vs. Switzerland at Minsk, Belarus

Final (November 11-12)

- Champion Nation

For more information, contact: Amanda Korba, (914) 325-3751, [email protected]

PREVIEW NOTES

The United States will face the Czech Republic in the 2017 Fed Cup by BNP Paribas World Group Semifinal. The best-of-five match series will take place on an outdoor clay court at Saddlebrook Resort in Tampa Bay. The United States is competing in its first Fed Cup Semifinal since 2010. Captain Rinaldi named 2017 Australian Open semifinalist and world No. 24 CoCo Vandeweghe, No. 36 Lauren Davis, No. 49 Shelby Rogers, and world No. 1 doubles player and 2017 Australian Open women’s doubles champion Bethanie Mattek-Sands to the U.S. team. Vandeweghe, Rogers, and Mattek-Sands were all part of the team that swept Germany, 4-0, earlier this year in Maui. -

Tournament Will Be Played with Only Three Groups

2D | EL SIGLO DE DURANGO | TUESDAY, JUNE 29, 2004 | SPORTS

SUPPORT | HENMAN TAKES ALL THE APPLAUSE AT THE ALL ENGLAND CLUB

The host is still alive

The British player reached the Wimbledon quarterfinals for the ninth time in his career

LONDON, ENGLAND (AGENCIES).- Britain's Tim Henman reached the Wimbledon quarterfinals for the ninth time in his career by beating Australia's Mark Philippoussis, last year's finalist, 6-2, 7-5, 6-7 (3), 7-6 (5). The player from Oxford needed five match points to overcome "Scud", to the delight of the nearly 14,000 spectators who filled Centre Court and never stopped cheering him on, even when Henman wasted two points to close out his victory in the eighth game of the third set (5-2). Philippoussis, who hit 22 aces, the same number as Henman, one of them at 217.2 kilometers per hour, felt wronged by a controversial call on a ball in the eleventh game of the first set that cost him his serve, and had a heated exchange with the chair umpire ...

Photo caption: Dutch striker Ruud van Nistelrooy trains in Albufeira ahead of the match against Portugal.

Van Nistelrooy linked with Real Madrid, according to reports from England

LONDON, ENGLAND (AGENCIES).- "Operation Van Nistelrooy" is back on. It has long been known that the Dutch player is almost an obsession for Florentino Pérez, president of Real Madrid. Last November it was reported that the Manchester United striker was the "galáctico" chosen for the 2004-2005 project. -

WTA Tour Statistical Abstract 2002

WTA Tour Statistical Abstract 2002. Robert B. Waltz. ©2002 by Robert B. Waltz. Reproduction and/or distribution for profit prohibited.

Contents: Introduction; 2002 In Review: The Top Players; The Final Top Thirty; The Beginning Top Twenty-Five; Summary of Changes, Beginning to End of 2002; Top Players Analysed; All the Players in the Top Ten in 2002: The Complete Top Ten Based on WTA (Best 17) Statistics; The Complete Top Ten under the 1996 Ranking System; Ranking Fluctuation; Highest Ranking of 2002; Top Players Sorted by Median Ranking; Short Summary: The Top Eighty; The Top 200, in Numerical Order; The Top 200, in Alphabetical Order; Idealized Ranking Systems; Surface-Modified Divisor (Minimum 16); Idealized Rankings/Proposal 2: Adjusted Won/Lost; Adjusted Winning Percentage, No Bonuses; Percentage of Possible Points Earned; Head to Head; The Top 20 Head to Head; Wins Over Top Players; Matches Played/Won against the (Final) Top Twenty; Won/Lost Versus the Top Players (Based on Rankings at the Time of the Match); Won/Lost Versus the Top Players (Based on Final Rankings); Statistics/Rankings Based on Head-to-Head -

2017 Miami Open Presented by Itau Doubles Final

DAILY MATCH NOTES: MIAMI OPEN PRESENTED BY ITAU

MIAMI, FL, USA | MARCH 21 - APRIL 2, 2017 | $6,993,450 PREMIER MANDATORY

WTA Information: www.wtatennis.com | @WTA | facebook.com/wta

Tournament Info: www.miamiopen.com | @MiamiOpen | facebook.com/MiamiOpenTennis

WTA Communications: Jeff Watson ([email protected]), Alexander Prior ([email protected])

2017 MIAMI OPEN PRESENTED BY ITAU DOUBLES FINAL

[3] SANIA MIRZA (IND #7) / BARBORA STRYCOVA (CZE #10) vs. GABRIELA DABROWSKI (CAN #34) / XU YIFAN (CHN #26)

Head-to-Head: First meeting

A LOOK AT THE DOUBLES FINALISTS

| PLAYER | DOUBLES RANK | NAT | AGE | YTD PRIZE $ | YTD DOUBLES W-L | YTD DOUBLES TITLES | CAREER PRIZE $ | CAREER DOUBLES W-L | CAREER DOUBLES TITLES |
|---|---|---|---|---|---|---|---|---|---|
| [3] Sania Mirza | 7 | IND | 30 | 129,085 | 19-5 | 1 | 6,570,862 | 474-201 | 41 |
| [3] Barbora Strycova | 16 | CZE | 31 | 345,900 | 15-5 | 0 | 6,029,977 | 391-219 | 20 |
| Gabriela Dabrowski | 34 | CAN | 25 | 52,669 | 15-7 | 0 | 667,873 | 196-165 | 3 |
| Xu Yifan | 26 | CHN | 28 | 49,578 | 10-6 | 0 | 777,373 | 284-169 | 3 |

Players listed in order of doubles ranking; match records up-to-date through SF; prize money figures do not include this tournament

BEST PREVIOUS MIAMI DOUBLES RESULTS

| PLAYER | BEST MIAMI RESULTS |
|---|---|
| [3] Sania Mirza | WON (1): 2015 (w/Hingis) |
| [3] Barbora Strycova | FINAL (1): 2017 (w/Mirza) |
| Gabriela Dabrowski | FINAL (1): 2017 (w/Xu) |
| Xu Yifan | FINAL (1): 2017 (w/Dabrowski) |

ROAD TO THE FINAL

[3] SANIA MIRZA (IND #7) / BARBORA STRYCOVA (CZE #16)

R32: d. Schuurs/Voracova (NED/CZE) 6-3 7-6(3)

GABRIELA DABROWSKI (CAN #34) / XU YIFAN (CHN #26)

R32: d. -

WTA Tour Statistical Abstract 2000

WTA Tour Statistical Abstract 2000. Robert B. Waltz. ©2000 by Robert B. Waltz. Reproduction and/or distribution for profit prohibited.

Contents: 2000 In Review: Top Players; The Final Top Twenty-Five; The Beginning Top Twenty-Five; Summary of Changes 2000; All the Players in the Top Ten in 2000; The Complete Top Ten Based on WTA (Best 18) Statistics; The Complete Top Ten under the 1996 Ranking System; Ranking Fluctuation; Top Players Sorted by Median Ranking; Tournament Results; Tournaments Played/Summary of Results for Top Players; Wins Over Top Players; Matches Played/Won against the (Final) Top Twenty; Won/Lost Versus the Top Players (Based on Rankings at the Time of the Match); Won/Lost Versus the Top Players (Based on Final Rankings); Statistics/Rankings Based on Head-to-Head Numbers; Total Wins over Top Ten Players; Winning Percentage against Top Ten Players; How They Earned Their Points; Fraction of Points Earned in Slams; Quality Versus Round Points; Tournament Wins by Surface; Assorted Statistics; The Busiest Players on the Tour; Total Matches Played by Top Players; Total Events Played by the Top 150; The Biggest Tournaments; Tournament Strength Based on the Four Top Players Present; The Top Tournaments Based on Top Players Present, Method 1; The Top Tournaments Based on Top Players Present, Method 2; Strongest Tournaments Won; Strongest Tournament Performances; The Rankings (Top 192, as of November 20, 2000. -

Prize Top 100 Money YTD SD.Txt

Prize Top 100 Money YTD S-D.txt

WTA TOUR PRIZE MONEY YTD

| Rank | Player | Singles | Doubles | Total |
|---|---|---|---|---|
| 1 | MARTINA HINGIS | 2,936,425 | 355,355 | 3,291,780 |
| 2 | LINDSAY DAVENPORT | 2,426,756 | 303,660 | 2,734,205 |
| 3 | SERENA WILLIAMS | 2,270,946 | 317,540 | 2,605,102 |
| 4 | VENUS WILLIAMS | 1,990,887 | 317,540 | 2,316,005 |
| 5 | STEFFI GRAF | 1,219,289 | 13,097 | 1,248,867 |
| 6 | MARY PIERCE | 857,078 | 129,465 | 996,442 |
| 7 | NATHALIE TAUZIAT | 723,648 | 140,859 | 864,507 |
| 8 | MONICA SELES | 744,741 | 77,477 | 822,218 |
| 9 | ARANTXA SANCHEZ-VICARIO | 617,433 | 190,488 | 807,921 |
| 10 | ANNA KOURNIKOVA | 389,325 | 325,205 | 748,424 |
| 11 | JANA NOVOTNA | 524,662 | 216,792 | 741,454 |
| 12 | BARBARA SCHETT | 633,068 | 92,617 | 725,685 |
| 13 | AMELIE MAURESMO | 551,825 | 30,643 | 582,468 |
| 14 | ELENA LIKHOVTSEVA | 336,719 | 156,567 | 525,307 |
| 15 | JULIE HALARD-DECUGIS | 457,930 | 56,140 | 514,070 |
| 16 | SANDRINE TESTUD | 359,847 | 123,155 | 488,496 |
| 17 | CONCHITA MARTINEZ | 394,876 | 91,516 | 486,392 |
| 18 | AMANDA COETZER | 425,814 | 58,525 | 486,120 |
| 19 | LISA RAYMOND | 207,110 | 179,550 | 465,664 |
| 20 | DOMINIQUE VAN ROOST | 394,995 | 52,967 | 450,401 |
| 21 | NATASHA ZVEREVA | 267,917 | 170,660 | 438,577 |
| 22 | CORINA MORARIU | 161,771 | 242,256 | 413,980 |
| 23 | ANKE HUBER | 361,439 | 50,548 | 411,987 |
| 24 | AI SUGIYAMA | 226,719 | 105,967 | 405,148 |
| 25 | CHANDA RUBIN | 229,078 | 126,643 | 355,721 |
| 26 | PATTY SCHNYDER | 277,567 | 59,030 | 336,597 |
| 27 | LARISA NEILAND | 57,096 | 231,563 | 324,300 |
| 28 | RUXANDRA DRAGOMIR | 261,606 | 47,616 | 309,222 |
| 29 | IRINA SPIRLEA | 190,680 | 102,963 | 299,143 |
| 30 | MIRJANA LUCIC | 271,801 | 12,535 | 286,259 |
| 31 | MARY JOE FERNANDEZ | 168,557 | 95,043 | 263,600 |
| 32 | MARIAAN DE SWARDT | 107,991 | 113,525 | 263,287 |
| 33 | SILVIA FARINA | 186,691 | 71,457 | 258,148 |
| 34 | AMY FRAZIER | 221,647 | 36,039 | 257,686 |
| 35 | ANNE-GAELLE SIDOT | 175,877 | 66,731 | 246,939 |

36 JENNIFER CAPRIATI -

2003 Australian Open

2019 FED CUP BY BNP PARIBAS

WORLD GROUP FIRST ROUND / WORLD GROUP II FIRST ROUND

9-10 February: TIE NOTES

WORLD GROUP FIRST ROUND

- Czech Republic (s) (c) v Romania: Ostravar Arena, Ostrava, CZE (hard, indoors)
- Belgium (c) v France (s): Country Hall du Sart-Tilman, Liège, BEL (hard, indoors)
- Germany (c) v Belarus (s): Volkswagen Halle, Braunschweig, GER (hard, indoors)
- USA (s) (c) v Australia: US Cellular Arena, Asheville, NC, USA (hard, indoors)

WORLD GROUP II FIRST ROUND

- Switzerland (s) (c)* v Italy: Swiss Tennis Arena, Biel, SUI (hard, indoors)
- Latvia (c)* v Slovakia (s): Arena Riga, Riga, LAT (hard, indoors)
- Japan (s) (c) v Spain: Sogo Gymnastic Hall, Kita-kyushu, JPN (hard, indoors)
- Netherlands (s) (c)* v Canada: Maaspoort Sports & Events, ’s-Hertogenbosch, NED (clay, indoors)

(s) = seed; (c) = choice of ground; * = choice of ground was decided by lot

CHOICE OF GROUND: WORLD GROUP SEMIFINALS

The winners of this weekend’s World Group first round ties advance to the semifinals on 20-21 April. Below is the choice of ground for all potential semifinal match-ups. The venue details are due to be submitted to the ITF by 25 February.

| | BELGIUM | FRANCE |
|---|---|---|
| CZECH REPUBLIC | CZE | CZE |
| ROMANIA | BEL | FRA* |

| | USA | AUSTRALIA |
|---|---|---|
| GERMANY | GER | AUS |
| BELARUS | USA | AUS* |

* choice of ground decided by lot

WORLD GROUP & WORLD GROUP II PLAY-OFFS

The World Group play-offs and World Group II play-offs will also take place on 20-21 April. The draw for the World Group play-offs and World Group II play-offs will be held at the ITF offices in London on Tuesday 12 February. -

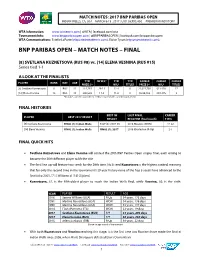

BNP Paribas Open – Match Notes – Final

MATCH NOTES: 2017 BNP PARIBAS OPEN

INDIAN WELLS, CA, USA | MARCH 8-19, 2017 | USD $6,993,450 | PREMIER MANDATORY

WTA Information: www.wtatennis.com | @WTA | facebook.com/wta

Tournament Info: www.bnpparibasopen.com | @BNPPARIBASOPEN | facebook.com/bnpparibasopen

WTA Communications: Estelle LaPorte ([email protected]), Eloise Tyson ([email protected])

BNP PARIBAS OPEN: MATCH NOTES, FINAL

[8] SVETLANA KUZNETSOVA (RUS #8) vs. [14] ELENA VESNINA (RUS #15)

Series tied 1-1

A LOOK AT THE FINALISTS

| PLAYER | RANK | NAT | AGE | YTD PRIZE $^ | IW W/L* | YTD W/L* | YTD TITLES | CAREER PRIZE $^ | CAREER W/L* | CAREER TITLES |
|---|---|---|---|---|---|---|---|---|---|---|
| [8] Svetlana Kuznetsova | 8 | RUS | 31 | 214,749 | 28-13 | 12-4 | 0 | 22,071,750 | 611-293 | 17 |
| [14] Elena Vesnina | 15 | RUS | 30 | 280,558 | 11-8 | 11-6 | 0 | 9,228,165 | 393-305 | 2 |

*Includes current tournament / ^Does not include current tournament

FINAL HISTORIES

| PLAYER | BEST 2017 RESULT | BEST IW RESULT | LAST FINAL REACHED (final result) | CAREER F W/L |
|---|---|---|---|---|
| [8] Svetlana Kuznetsova | FINAL (1): Indian Wells | R-UP (2): 2007-08 | 2016 Moscow (WON) | 17-22 |
| [14] Elena Vesnina | FINAL (1): Indian Wells | FINAL (1): 2017 | 2016 Charleston (R-Up) | 2-7 |

FINAL QUICK HITS

- Svetlana Kuznetsova and Elena Vesnina will contest the 29th BNP Paribas Open singles final, each aiming to become the 20th different player to lift the title.
- The final line-up will feature two seeds for the 26th time. No. 8 seed Kuznetsova is the highest ranked, meaning that for only the second time in the tournament’s 29-year history none of the Top 5 seeds have advanced to the final (also 2001, [7] S. Williams d. -

WTA Tour Statistical Abstract 2001

WTA Tour Statistical Abstract 2001. Robert B. Waltz. ©2001 by Robert B. Waltz and Tennis News. Reproduction and/or distribution for profit prohibited.

Contents: Introduction; 2001 In Review: The Top Players; The Final Top Thirty; The Beginning Top Twenty-Five; Summary of Changes, Beginning to End of 2001; Top Players Analysed; All the Players in the Top Ten in 2001; Complete Top Ten under the 1996 Ranking System; Ranking Fluctuation; Highest Ranking of 2001; Top Players Sorted by Median Ranking; Short Summary: The Top Eighty; The Top 200, in Numerical Order; The Top 200, in Alphabetical Order; Tournament Results; Head to Head; The Top 20 Head to Head; Wins Over Top Players; Matches Played/Won against the (Final) Top Twenty; Won/Lost Versus the Top Players (Based on Rankings at the Time of the Match); Won/Lost Versus the Top Players (Based on Final Rankings); Statistics Based on Head-to-Heads; Total Wins over Top Ten Players; Winning Percentage against Top Ten Players; How They Earned Their Points; Fraction of Points Earned in Slams; Quality -

Italy Wins/Finals

GROUP: GROUP 1: FRANCESCA SCHIAVONE

BEST RANKING: 4 (2011) | Titles: 6 | Italy

GRAND SLAM TOURNAMENTS (Wins/Finals, W L)

- AUSTRALIAN OPEN: QF
- ROLAND GARROS: 1 W, 1 L
- WIMBLEDON: QF
- US OPEN: QF
- Total: 1 W, 1 L

PARTICIPANTS

| Player | Club | Class. |
|---|---|---|
| BIANCHI Ethel | Tt Vianello | 35 |
| FANUELE Chiara | La Torre | 42 |
| PISCOPO Beatrice | Villa Pamphili | 43 |
| COLARIETI Antonella | Gli Ulivi | 43 |

https://youtu.be/_X9vMofSAek

FRANCESCA SCHIAVONE

Francesca Schiavone (Milan, June 23, 1980) is an Italian tennis player. Gifted with an excellent all-court game, she remains to this day the only female player in the world to have won a Slam using a one-handed backhand, considered by many to be one of the best in the world. Winner of Roland Garros in 2010, she was the first Italian woman to win a Grand Slam singles tournament; over her career she has won a total of six WTA singles titles and seven doubles titles, earning more than $10,000,000 in prize money and beating, over the years, top-level players such as Serena Williams, Justine Henin, Caroline Wozniacki, Amelie Mauresmo, etc.

SINGLES MATCHES PLAYED

- WINS: 563 (57%)
- LOSSES: 417 (43%)
- Total: 980

HONOURS

- ATP TOUR tournament wins, singles: 6. First: Bad Gastein, Austria, 2007
- ATP TOUR tournament wins, doubles: 7. Last: Marrakech, Morocco, 2013

STANDINGS

| Pos. | Played | Won | Lost | Sets Won | Sets Lost | Points | Set Diff. | G. W. | G. L. |
|---|---|---|---|---|---|---|---|---|---|
| 1st | | | | | | | | | |
| 2nd | | | | | | | | | |
| 3rd | | | | | | | | | |
| 4th | | | | | | | | | |

GROUP STANDINGS are determined by awarding:

a) TWO POINTS for each match won, including by walkover or retirement;
b) ONE POINT for each match played and lost;
c) ZERO POINTS for each match lost by walkover or retirement. -

Indian Wells 1997

Indian Wells 1997 | March 16 | State Farm Evert Cup | Hard | Tier I

- F: Lindsay Davenport (4) d. Irina Spirlea (6) 6-2 6-1
- SF: Lindsay Davenport (4) d. Mary Joe Fernandez (9) 6-1 6-1
- SF: Irina Spirlea (6) d. Arantxa Sanchez-Vicario (1) 4-6 6-3 6-3
- QF: Lindsay Davenport (4) d. Venus Williams (Q) 6-4 5-7 7-6(1)
- QF: Mary Joe Fernandez (9) d. Conchita Martinez (2) 6-4 6-4
- QF: Irina Spirlea (6) d. Nathalie Tauziat (13) 6-4 6-2
- QF: Arantxa Sanchez-Vicario (1) d. Sandrine Testud 3-6 6-2 6-2
- 3: Lindsay Davenport (4) d. Ruxandra Dragomir Ilie (14) 6-2 6-1
- 3: Conchita Martinez (2) d. Chanda Rubin (15) 6-1 1-6 7-5
- 3: Mary Joe Fernandez (9) d. Kimberly Po (11) 6-2 6-3
- 3: Irina Spirlea (6) d. Elena Likhovtseva (12) 6-3 6-4
- 3: Nathalie Tauziat (13) d. Anke Huber (3) 6-4 6-3
- 3: Sandrine Testud d. Florencia Labat 6-2 6-2
- 3: Arantxa Sanchez-Vicario (1) d. Asa Svensson 6-1 6-3
- 3: Venus Williams (Q) d. Iva Majoli (5) 7-5 3-6 7-5
- 2: Asa Svensson d. Barbara Paulus (10) 6-4 6-4
- 2: Lindsay Davenport (4) d. Tami Jones (Q) 6-4 6-0
- 2: Ruxandra Dragomir Ilie (14) d.