High-Performance Computing and NERSC

Recommended publications

What Are Your Best Computer Options for Teleworking?

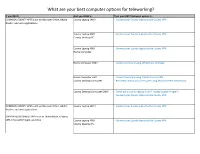

What are your best computer options for teleworking?

| If you NEED… | And you HAVE a… | Then your BEST telework option is… |
| --- | --- | --- |
| COMMON COUNTY APPS such as Microsoft Office, Adobe Reader, and web applications | County Laptop ONLY | Connect your County laptop to the County VPN |
| | County Laptop AND County Desktop PC | Connect your County laptop to the County VPN |
| | County Laptop AND Home Computer | Connect your County laptop to the County VPN |
| | Home Computer ONLY | Connect remotely using VDI (Virtual Desktop) |
| | Home Computer AND County Desktop Computer | Connect remotely using Dakota County VPN; remotely control your computer using Windows Remote Desktop |
| | County Desktop Computer ONLY | Check out a County laptop from IT Laptop Loaner Program; connect your County laptop to the County VPN |
| COMMON COUNTY APPS such as Microsoft Office, Adobe Reader, and web applications; SUPPORTED BUSINESS APPS such as OneSolution, OnBase, SIRE, Microsoft Project, and Visio | County Laptop ONLY | Connect your County laptop to the County VPN |
| | County Laptop AND County Desktop PC | Connect your County laptop to the County VPN |
| | County Laptop AND Home Computer | Connect your County laptop to the County VPN |
| | Home Computer ONLY | Contact the County IT Help Desk at 651/438-4346 |
| | Home Computer AND County Desktop Computer | Connect remotely using Dakota County VPN; remotely control your computer using Windows Remote Desktop |
| | County Desktop Computer ONLY | Check out a County laptop from IT Laptop Loaner Program; connect your County laptop - |

Scaling the Digital Divide: Home Computer Technology and Student Achievement

Scaling the Digital Divide: Home Computer Technology and Student Achievement. Jacob Vigdor and Helen Ladd. Working Paper 48, June 2010. Jacob L. Vigdor [email protected] Duke University; Helen F. Ladd, Duke University.

Contents: Acknowledgements; Abstract; Introduction; Basic Evidence on Home Computer Use by North Carolina Public School Students (Access to home computers; Access to broadband internet service); How Home Computer Technology Might Influence Academic Achievement (An adolescent's time allocation problem; Adapting the rational model to ten-year-olds; Estimation strategy); The Impact of Home Computer Technology on Test Scores (Comparing across- and within-student estimates; Extensions and robustness checks; Testing for effect heterogeneity; Examining the mechanism: broadband access and homework effort); Conclusions; References; Appendix Table 1.

Acknowledgements: The authors are grateful to the William T. Grant Foundation and the National Center for Analysis of Longitudinal Data in Education Research (CALDER), supported through Grant R305A060018 to the Urban Institute from the Institute of Education Sciences, U.S. Department of Education for research support. They wish to thank Jon Guryan, Jesse Shapiro, Tim Smeeding, Andrew Leigh, and seminar participants at the University of Michigan, the University of Florida, Cornell University, the University of Missouri-Columbia, the University of Toronto, Georgetown University, Harvard University, the University of Chicago, Syracuse University, the Australian National University, the University of South Australia, and Deakin University for helpful comments on earlier drafts. -

Setup Outlook on Your Home Computer

Setup Outlook on your Home Computer

Office 2010:

1. Run Microsoft Outlook 2010.
2. When the startup wizard opens, click Next.
3. When prompted to configure an E-mail Account, select Yes and then click Next.
4. The Auto Account Setup will display next. Select E-mail Account and fill in the information. Your password is your network password, the same one you use to log in to computers on campus. After you fill in the information, click Next.
5. Outlook will begin to set up your account; it may take a few minutes before the next window pops up.
6. You will be prompted with a login screen. The one shown here offers 3 choices (select the middle one if this is the case), but others may offer only 1. Type in the same username you use to log in to campus computers, but precede it with sdsmt\. Your password will be the same one you use to log in on campus. Click OK to continue.
7. Outlook will finish setting up your account; this may take a few minutes. When it is done, press the Finish button.
8. Outlook will start up and begin to download your emails, contacts, and calendar from the server. This may take several minutes; there will be a green loading bar at the bottom of the window.

Office 2007:

1. Run Microsoft Outlook 2007.
2. When the startup wizard opens, click Next.
3. When prompted to configure an E-mail Account, select Yes and then click Next.
4. The Auto Account Setup will display next. Select E-mail Account and fill in the information. -

The Cost of Using Computers

Cost of Computers

Summary: Students calculate and compare electrical costs of computers in various modes.

Grade Level: 5-8

Subject Areas: English (Media and Technology, Research and Inquiry), Mathematics, Science (Physical)

Setting: Classroom

Time: Preparation: 1 hour. Activity: two 50-minute periods.

Vocabulary: Blended rate, CPU, Electric rate, Kilowatt-hour, Sleep mode, Standby consumption, Watt, Watt meter

Major Concept Areas:
• Quality of life
• Management of energy

Objectives: By the end of this activity, students will be able to
• explain that different types of computers use different amounts of electricity;
• compare and contrast computer mode settings and their electricity use; and
• identify ways to conserve electricity when using computers.

Rationale: Calculating the cost of computers will help students realize potential energy savings both at school and at home.

Materials:
• Copies of Calculating Computer Costs Activity Sheet
• Calculator (optional)

[...] computers, lights, and monitors when they’re not in use reduces the number of comfort complaints and air conditioning costs.

There are many computer myths that have been dispelled over the years. One myth is that screen-savers save energy. In fact, it takes the same amount of electricity to move that fish around your screen as it does to do any word processing.

Another myth is that it takes more energy to turn off and restart your computer than it does to just leave it on all the time. It is more likely that your computer will be outdated before it is worn out by turning it off and on. There are some school districts that require computers to be left on at night for software upgrades and network maintenance; these units are often automatically shut down after the upgrades are complete. -

OpenBSD Gaming Resource

OPENBSD GAMING RESOURCE. A continually updated resource for playing video games on OpenBSD. Mr. Satterly. Updated August 7, 2021. P11U17A3B8

Title: OpenBSD Gaming Resource. Author: Mr. Satterly. Publisher: Mr. Satterly. Date: Updated August 7, 2021. Copyright: Creative Commons Zero 1.0 Universal. Email: [email protected] Website: https://MrSatterly.com/

Contents: 1 Introduction; 2 Ways to play the games (2.1 Base system; 2.2 Ports/Editors; 2.3 Ports/Emulators: Arcade emulation, Computer emulation, Game console emulation, Operating system emulation; 2.4 Ports/Games: Game engines, Interactive fiction; 2.5 Ports/Math; 2.6 Ports/Net; 2.7 Ports/Shells; 2.8 Ports/WWW); 3 Notable games (3.1 Free games: A-I, J-R, S-Z; 3.2 Non-free games); 4 Getting the games (4.1 Games); 5 Former ways to play games; 6 What next?; Appendices: A Clones, models, and variants; Index.

1 Introduction. I use this document to help organize my thoughts, files, and links on how to play games on OpenBSD. It helps me to remember what I have gone through while finding new games. The biggest reason to read or at least skim this document is because how can you search for something you do not know exists? I will show you ways to play games, what free and non-free games are available, and give links to help you get started on downloading them. -

Console Games in the Age of Convergence

Console Games in the Age of Convergence. Mark Finn, Swinburne University of Technology, John Street, Melbourne, Victoria, 3122 Australia, +61 3 9214 5254, mfi [email protected] Proceedings of Computer Games and Digital Cultures Conference, ed. Frans Mäyrä. Tampere: Tampere University Press, 2002. Copyright: authors and Tampere University Press.

Abstract: In this paper, I discuss the development of the games console as a converged form, focusing on the industrial and technical dimensions of convergence. Starting with the decline of hybrid devices like the Commodore 64, the paper traces the way in which notions of convergence and divergence have influenced the console gaming market. Special attention is given to the convergence strategies employed by key players such as Sega, Nintendo, Sony and Microsoft, and the success or failure of these strategies is evaluated.

Keywords: Convergence, Games histories, Nintendo, Sega, Sony, Microsoft

INTRODUCTION. Although largely ignored by the academic community for most of their existence, recent years have seen video games attain at least some degree of legitimacy as an object of scholarly inquiry. Much of this work has focused on what could be called the textual dimension of the game form, with works such as Finn [17], Ryan [42], and Juul [23] investigating aspects such as narrative and character construction in game texts. Another large body of work focuses on the cultural dimension of games, with issues such as gender representation and the always-controversial theme of violence being of central importance here. Examples of this approach include Jenkins [22], Cassell and Jenkins [10] and Schleiner [43]. Little attention, however, has been given to the industrial dimension of the games phenomenon. -

The World's Biggest Supercomputer

onestopenglish.com news lesson (Solutions for English Teaching). The world’s biggest supercomputer. Level: Elementary

1 Warmer

Match these computer words with their meanings.

1. processor
2. data
3. download
4. file

a. move information from the internet to your computer
b. a set of information on a computer with its own name
c. the part of a computer that controls and does all its operations
d. information in a form that a computer can use

2 Key words

Fill the gaps in the sentences with these key words from the text. The paragraph numbers are given to help you.

Key words: calculation, server, process, research, billion, cool, trillion

1. If computers ___________________ information, they use a program to organize it. (para 3)
2. A ___________________ is 1,000,000,000,000. (para 3)
3. A ___________________ is the use of mathematics to discover a number or an amount. For example, 2 + 2 = 4. (para 3)
4. A ___________________ is a computer that controls all the computers in a network. (para 5)
5. If you ___________________ something, you make it colder. (para 5)
6. ___________________ is the detailed study of something in order to discover new facts. (para 6)
7. A ___________________ is 1,000,000,000. (para 6)

3 Find the information

Find the following information in the text as quickly as possible.

1. When did Summit start working?
2. Where is it?
3. What was the fastest supercomputer in the world before Summit?
4. How many calculations can Summit process each second?
5. When did Titan start working?
6. How much water does Summit need each minute?

© Springer Nature Limited 2018. -

Your Guide to the RBSelect Home Computer Benefit

Your guide to the RBSelect Home Computer Benefit

Get the latest technology – direct from RBSelect. No deposits or upfront payments.

• Get a brand new computer from just £10.66 (including VAT) per month!
• Convenient, fixed RBSelect charge over 36 months
• Windows 8 laptop or desktop computers from HP and Samsung
• iPad, iPad mini and Apple Macs including MacBook Air and MacBook Pro
• Tax efficient home computing for you

Let’s Connect are administering this benefit on behalf of RBS.

Available devices: Windows laptops and desktops; iPad and iPad mini; Apple MacBooks.

To order, log on to www.rbspeople.com/yourrewardonline. Elect by 11th September 2013. Once you log in, click on the 'Home Computer' benefit to complete your order. If you need advice on the ordering process or on choosing a computer, call the Home Computer helpline on 08444 821 860ˆ.

ˆCall costs 5p per minute from fixed lines. Different rates may apply from mobile phones.

What’s in this guide? Contents:

• How to order and key scheme dates
• What’s included with each package?
• Package Summary
• Tablet packages – iPad, iPad mini and Samsung ATIV Tab 3 options
• Laptop and desktop PC packages – Microsoft Windows 8 options
• Apple Mac packages – MacBook Air and MacBook Pro options
• Scheme accessories – Software, wireless printer, laptop carry cases, speakers, storage devices, tablet stand and Apple Mac accessories
• How the scheme works
• Frequently asked questions

Place your order online at www.rbspeople.com/yourrewardonline by 11th September 2013.

How to order and key scheme dates. Or, what do I need to do and when do I need to do it by.. -

ARCHOS Introduces 704-WiFi Portable Media Player With

ARCHOS INTRODUCES 704-WIFI PORTABLE MEDIA PLAYER WITH EXCLUSIVE 7-INCH TOUCH SCREEN AND WIRELESS INTERNET CAPABILITIES ARCHOS 704-WiFi Features the Highest Resolution Display of Any Portable Video Player, Support for New Online Video Download Stores, and Ability to Play Content from the PC onto the TV DENVER — March 6, 2007 — ARCHOS, Inc., introduced today the industry’s most advanced Portable Media Player with the ARCHOS 704-WiFi, the only PMP with a 7-inch touch-screen and full wireless capabilities. The new 704-WiFi presents the highest quality screen with 800x480 resolution and 5x7 dimensions—making it similar in size to a standard photograph. The ARCHOS 704-WiFi is the latest in the company’s Generation 4 line-up, priced at $549.99, and is available for pre-order today from www.archos.com. The ARCHOS 704-WiFi joins the company’s 604-WiFi in offering complete PMP features— video, music and photo playback—with full Internet access for surfing the Web and sending email. The new 704-WiFi features an 80GB hard drive with the capacity to store 100 hours of video, or about 70 DVD-quality movies, as well as two high-quality speakers for enhanced audio even without headphones. The 704-WiFi also supports video downloads from the new online stores of leading retailers, giving consumers the flexibility to enjoy their entertainment wherever they want, in their own place, on their own time. “Our new WiFi-enabled players will change the way consumers access and experience entertainment, by moving the digital media library from the home computer to the big screen TV,” said Henri Crohas, ARCHOS founder and CEO. -

Student and Parent Mobile Device Handbook

Student and Parent Mobile Device Handbook. East Valley School District. Updated: August 2017.

WHY DISTRICT ISSUED ONE-TO-ONE DEVICES: We are excited to offer your student the opportunity to use an East Valley School District issued laptop both in class and at home to enhance their academic experience. This handbook highlights key information about our program and the responsibilities of both students and parents/guardians for participation in this program. One-to-One computing offers many benefits to our modern day classroom and learner. East Valley’s expectation is that the student will have their computing device (laptop) with them for use in all their classes and for continued use at home. The laptop will help increase student engagement. Students can access learning materials and engage in real-time inquiry as their questions arise. Learning software has evolved to a point that students can track their own learning and have confidence in their progress. Laptops also support Problem-Based Learning, allowing students to research, collaborate, and produce a final product to share with peers, teachers and parents. Having the students take their laptops home provides several advantages. Once students leave the school campus, they are exposed to a different set of tools at home by the very nature of the differences in their computing environment. Some have equivalent technology, though not the same software; others have faster, more powerful computers and become frustrated with the school devices; others have no technology at home and are limited in what they can do after the school day. By issuing students the same laptops we hope to make technology access and learning opportunities equitable. -

The Shaping of the Personal Computer

10 THE SHAPING OF THE PERSONAL COMPUTER

NO HISTORIAN HAS yet written a full account of the personal computer. Personal computing was perhaps the most life-changing consumer phenomenon of the second half of the twentieth century, and it continues to surprise in its ever-evolving applications and forms. If we consider an earlier invention, domestic radio, it took about fifty years for historians to start writing really satisfactory accounts. We should not expect to fully understand the personal computer in a lesser time scale.

There has, of course, been no shortage of published accounts of the development of the personal computer by journalists. Much of this reportage is bad history, though some of it makes for good reading. Perhaps its most serious distortion is its focus on a handful of individuals, portrayed as visionaries who saw the future and made it happen: Apple Computer’s Steve Jobs and Microsoft’s Bill Gates figure prominently in this genre. By contrast, IBM and the established computer firms are usually portrayed as dinosaurs: slow-moving, dim-witted, deservedly extinct. When historians write this history, it will be more complex than these journalistic accounts. The real history lies in the rich interplay of cultural forces and commercial interests.

RADIO DAYS

If the idea that the personal computer was shaped by an interplay of cultural forces and commercial interests appears nebulous, it is helpful to compare the development of the personal computer with the development of radio in the opening decades of the twentieth century, whose history is well understood. -

A Study of the Growth and Evolution of Personal Computer Devices Throughout the PC Age

A STUDY OF THE GROWTH AND EVOLUTION OF PERSONAL COMPUTER DEVICES THROUGHOUT THE PC AGE. A dissertation submitted in partial fulfilment of the requirements for the degree of Bachelor of Science (Honours) in Software Engineering. Ryan Abbott, ST20074068. Supervisor: Paul Angel. Department of Computing & Information Systems, Cardiff School of Management, Cardiff Metropolitan University. April 2017.

Declaration: I hereby declare that this dissertation entitled A Study of the Growth and Evolution of Personal Computer Devices Throughout the PC Age is entirely my own work, and it has never been submitted nor is it currently being submitted for any other degree. Candidate: Ryan Abbott. Signature: Date: 14/04/2017. Supervisor: Paul Angel. Signature: Date:

Table of Contents: Declaration; List of Figures; 1. ABSTRACT; 2. INTRODUCTION; 3. METHODOLOGY; 4. LITERATURE REVIEW ...