An Investigation of Three-Point Shooting Through an Analysis of NBA Player Tracking Data

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


© Clark Creative Education Casino Royale

Ideal Unit: Probability & Expected Value
Time Range: 3-4 Days
Supplies: Dice, Playing Cards, Pencil & Paper

Topics of Focus:
- Expected Value
- Probability & Compound Probability

Driving Question: “How does expected value influence carnival and casino games?”

Culminating Experience: Design your own game

Common Core Alignment:
- S-CP.2: Understand that two events A and B are independent if the probability of A and B occurring together is the product of their probabilities, and use this characterization to determine if they are independent.
- S-CP.4: Construct and interpret two-way frequency tables of data when two categories are associated with each object being classified. Use the two-way table as a sample space to decide if events are independent and to approximate conditional probabilities.
- S-MD.2: Calculate the expected value of a random variable; interpret it as the mean of the probability distribution.
- S-MD.4: Develop a probability distribution for a random variable defined for a sample space in which probabilities are assigned empirically; find the expected value.
- S-MD.5: Weigh the possible outcomes of a decision by assigning probabilities to payoff values and finding expected values.
- S-MD.5a: Find the expected payoff for a game of chance.
- S-MD.5b: Evaluate and compare strategies on the basis of expected values.
- S-MD.6: Use probabilities to make fair decisions (e.g., drawing by lots, using a random number generator).
- S-MD.7: Analyze decisions and strategies using probability concepts (e.g., product testing, medical testing, pulling a hockey goalie at the end of a game).
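The expected-payoff and independence standards listed here (S-MD.5a and S-CP.2) can be illustrated with a short sketch. The dice game, its payoffs, and the function names `expected_value` and `are_independent` are hypothetical, chosen only for illustration; they do not come from the lesson plan itself.

```python
from fractions import Fraction


def expected_value(outcomes):
    """Return the sum of probability * payoff over (probability, payoff) pairs."""
    total_probability = sum(p for p, _ in outcomes)
    if total_probability != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(p * payoff for p, payoff in outcomes)


def are_independent(p_a, p_b, p_a_and_b):
    """Events A and B are independent exactly when P(A and B) = P(A) * P(B)."""
    return p_a_and_b == p_a * p_b


# Hypothetical carnival game (an assumption, not from the source):
# pay $1 to roll one fair die; a 6 returns $4 (net +3), anything else loses the $1.
dice_game = [(Fraction(1, 6), 3), (Fraction(5, 6), -1)]
ev = expected_value(dice_game)  # 3/6 - 5/6 = -1/3 dollars per play

# Two separate dice: "first die shows 6" and "second die shows 6".
two_dice = are_independent(Fraction(1, 6), Fraction(1, 6), Fraction(1, 36))

# Two cards drawn without replacement: "first is an ace" and "second is an ace".
two_aces = are_independent(
    Fraction(4, 52), Fraction(4, 52), Fraction(4, 52) * Fraction(3, 51)
)
```

Exact fractions avoid floating-point rounding, so the independence check can use plain equality. Under these assumed payoffs the player loses a third of a dollar per play on average, which is the kind of house edge the driving question asks about.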

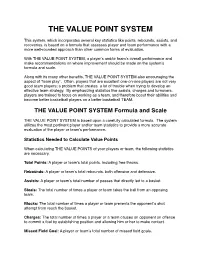

The Value Point System

THE VALUE POINT SYSTEM

This system, which incorporates several key statistics like points, rebounds, assists, and recoveries, is based on a formula that assesses player and team performance with a more well-rounded approach than other common forms of evaluation. With THE VALUE POINT SYSTEM, you can evaluate a player’s and/or team’s overall performance and make recommendations on where improvement should be made, based on the system’s formula and scale.

Along with its many other benefits, THE VALUE POINT SYSTEM also encourages “team play”. Often, players who are excellent one-on-one players are not very good team players, a problem that creates a lot of trouble when trying to develop an effective team strategy. By emphasizing statistics like assists, charges and turnovers, players are trained to focus on working as a team, and therefore boost their abilities and become better basketball players on a better basketball TEAM.

THE VALUE POINT SYSTEM Formula and Scale

THE VALUE POINT SYSTEM is based upon a carefully calculated formula. The system utilizes the most pertinent player and/or team statistics to provide a more accurate evaluation of the player’s or team’s performance.

Statistics Needed to Calculate Value Points

When calculating THE VALUE POINTS of your players or team, the following statistics are necessary.

- Total Points: A player’s or team’s total points, including free throws.
- Rebounds: A player’s or team’s total rebounds, both offensive and defensive.
- Assists: A player’s or team’s total number of passes that directly led to a basket.
- Steals: The total number of times a player or team takes the ball from an opposing team.

Hawks' Trio Headlines Reserves for 2015 NBA All

HAWKS’ TRIO HEADLINES RESERVES FOR 2015 NBA ALL-STAR GAME
-- Duncan Earns 15th Selection, Tied for Third Most in All-Star History --

NEW YORK, Jan. 29, 2015 – Three members of the Eastern Conference-leading Atlanta Hawks -- Al Horford, Paul Millsap and Jeff Teague -- headline the list of 14 players selected by the coaches as reserves for the 2015 NBA All-Star Game, the NBA announced today. Klay Thompson of the Golden State Warriors earned his first All-Star selection, joining teammate and starter Stephen Curry to give the Western Conference-leading Warriors two All-Stars for the first time since Chris Mullin and Tim Hardaway in 1993.

The 64th NBA All-Star Game will tip off Sunday, Feb. 15, at Madison Square Garden in New York City. The game will be seen by fans in 215 countries and territories and will be heard in 47 languages. TNT will televise the All-Star Game for the 13th consecutive year, marking Turner Sports' 30th year of NBA All-Star coverage.

The Hawks’ trio is joined in the East by Dwyane Wade and Chris Bosh of the Miami Heat, the Chicago Bulls’ Jimmy Butler and the Cleveland Cavaliers’ Kyrie Irving. This is the 11th consecutive All-Star selection for Wade and the 10th straight nod for Bosh, who becomes only the third player in NBA history to earn five trips to the All-Star Game with two different teams (Kareem Abdul-Jabbar, Kevin Garnett). Butler, who leads the NBA in minutes (39.5 per game) and has raised his scoring average from 13.1 points in 2013-14 to 20.1 points this season, makes his first All-Star appearance.

The Unseen Play the Game to Win 03/22/2017

The Unseen
Play the Game to Win
03/22/2017

Play the Game to Win: What Rick Barry and the Atlanta Falcons can teach us about risk management

“Something about the crowd transforms the way you think” – Malcolm Gladwell - Revisionist History

With 4:45 remaining in Super Bowl LI, Matt Ryan, the Atlanta Falcons quarterback, threw a pass to Julio Jones, who made an amazing catch. The play did not stand out because of the way the ball was thrown or the agility that Jones employed to make the catch, but due to the fact that the catch easily put the Falcons in field goal range very late in the game. That reception should have been the play of the game, but it was not. Instead, Tom Brady walked off the field with the MVP trophy and the Patriots celebrated yet another Super Bowl victory.

NBA basketball hall of famer Rick Barry shot close to 90% from the free throw line. What made him memorable was not just his free throw percentage or his hard-fought play, but the way he shot the ball underhanded, “granny-style”, when taking free throws. Every basketball player, coach and fan clearly understands that the goal of a basketball game is to score the most points and win. Rick Barry, however, was one of the very few who understood that it does not matter how you win but, most importantly, if you win.

The Atlanta Falcons’ crucial mistake and Rick Barry’s “granny” shooting style offer stark illustrations of how human beings guard their egos and at times do imprudent things in order to be viewed favorably by their peers and the public.

Camp Information Camp Features

CAMP INFORMATION

The 2019 Billy Wright Basketball Camp is structured to focus on strengthening the fundamentals of participants through the use of drills and competition. It also strives to educate participants about offensive and defensive concepts. Camp is an exciting, energetic and fun atmosphere for upcoming student athletes to learn.

Dates: June 10-13, 2019
Time: 9 a.m. - 2:00 p.m.
Location: Brophy Hall
Ages: Boys entering grades 4th-10th in 2019-20 school year
What to Bring:
- Proper attire is required for participation

CAMP FEATURES

• Lunch will be provided
• On-Site Athletic Trainer
• Awards
• Camp T-Shirt
• Instruction from Western Illinois Players and Coaches

Western Illinois University Basketball Camp Consent Form

PARENTAL RELEASE: The Undersigned understands that there are certain dangers, hazards, and risks inherent to this activity, which could include serious or even mortal injuries (death) and property damage, and that neither The Board of Trustees of Western Illinois University (the “Institution”) nor the WIU Foundation assume responsibility for any such personal injuries or property damage.

Knowing the dangers, hazards, and risks of this activity, and in consideration of being permitted to participate in the activity, the Undersigned agrees to assume all risks and responsibilities surrounding participation in the activity, and in advance release, and forever discharge, waive, and covenant not to sue the Institution, the Foundation, its governing board, officers, agents, employees, and/or any students acting as employees

DISCUSSION GUIDE Based on the Novel by E.B

DISCUSSION GUIDE
Based on the novel by E.B. Vickers

PRE-READING QUESTIONS:

1. Under what circumstances should a person reveal someone else’s secret? Under what circumstances should a person keep another’s secret?
2. There are times when we make assumptions about someone’s life. What assumptions might people make about you? What things might they get wrong? Are assumptions helpful? Why do we make them?
3. What comes to mind when you hear the word addiction? If you don’t know about addiction through people you know personally, where have you gathered ideas of what addiction looks like?

READING ACTIVITIES:

• Have students read Stephanie Ericsson’s “The Ways We Lie,” summarizing each of the ten kinds of lies she outlines. As students are reading Fadeaway, have them note an example of characters telling each kind of lie, and why they think the character told that kind of lie. (See chart at the end of this guide.)
• When Kolt starts telling his part of the story, he says he and Jake “were from the same part of town – the wrong part” (7). What might he have meant by this? Based on what you have read so far, what do you think the wrong part of this town is? What kinds of assumptions do people make upon hearing a statement like this?
• At the start of the book, there are several characters who give statements to the police. Re-read Kolt’s (5), Daphne’s (16), Luke’s (52), Sabrina’s (70) and then Kolt’s second statement (84).

Hawks Work 4Ots at 1-800-765-4011,Ext

C2 | MONDAY, MARCH 26, 2012 | THE COURIER-JOURNAL SPORTS | courier-journal.com/sports

To report sports scores: E-mail [email protected] or call the sports desk at 502-582-4361, or toll free at 1-800-765-4011, ext. 4361

IN NBA
Hawks work 4OTs
Suns making run in West
Associated Press
Joe Johnson scored 37 points, Josh Smith added

Scorecard

COLLEGE BASKETBALL
Men
NCAA Tournament
SOUTH REGIONAL
At The Georgia Dome
Atlanta
Regional Championship
Sunday
Kentucky 82, Baylor 70
MIDWEST REGIONAL

AUTO RACING
Sprint Cup
Auto Club 400
Sunday at Fontana, Calif.
Auto Club Speedway
Lap length: 2 miles
(Start position in parentheses)
1. (9) Tony Stewart, Chevrolet, 129 laps, $323,450.
2. (2) Kyle Busch, Toyota, 129, $259,698.
3.

$79,555.
38. (32) Michael McDowell, Ford, vibration, 6, $79,307.
39. (20) David Stremme, Toyota, rear gear, 5, $75,855.
40. (39) Mike Bliss, Toyota, transmission, 0, $75,675.
41. (35) Scott Riggs, Chevrolet, vibration, 3, $75,505.
42. (43) Reed Sorenson, Chevrolet, vibration, 0, $75,415.
43. (37) Brendan Gaughan, Chevro-

1:59:50.9863. Margin of Victory: 5.5292 seconds. Cautions: 3 for 15 laps. Lead Changes: 9 among 7 drivers.
Lap Leaders: Power 1-11, Briscoe 12-20, Dixon 21-36, Sato 37-46, Franchitti 47, Dixon 48-68, Castroneves 69-70, Sato 71, Hildebrand 72-74, Castroneves 75-100.
Points: Castroneves 50, Dixon 42, Hunter-Reay 35, Hinchcliffe 32, Briscoe 30, Pagenaud 28, Power 27, Viso

Player Set Card # Team Print Run Al Horford Top-Notch Autographs

2013-14 Innovation Basketball

| Player | Set | Card # | Team | Print Run |
| --- | --- | --- | --- | --- |
| Al Horford | Top-Notch Autographs | 60 | Atlanta Hawks | 10 |
| Al Horford | Top-Notch Autographs Gold | 60 | Atlanta Hawks | 5 |
| DeMarre Carroll | Top-Notch Autographs | 88 | Atlanta Hawks | 325 |
| DeMarre Carroll | Top-Notch Autographs Gold | 88 | Atlanta Hawks | 25 |
| Dennis Schroder | Main Exhibit Signatures Rookies | 23 | Atlanta Hawks | 199 |
| Dennis Schroder | Rookie Jumbo Jerseys | 25 | Atlanta Hawks | 199 |
| Dennis Schroder | Rookie Jumbo Jerseys Prime | 25 | Atlanta Hawks | 25 |
| Jeff Teague | Digs and Sigs | 4 | Atlanta Hawks | 15 |
| Jeff Teague | Digs and Sigs Prime | 4 | Atlanta Hawks | 10 |
| Jeff Teague | Foundations Ink | 56 | Atlanta Hawks | 10 |
| Jeff Teague | Foundations Ink Gold | 56 | Atlanta Hawks | 5 |
| Kevin Willis | Game Jerseys Autographs | 1 | Atlanta Hawks | 35 |
| Kevin Willis | Game Jerseys Autographs Prime | 1 | Atlanta Hawks | 10 |
| Kevin Willis | Top-Notch Autographs | 4 | Atlanta Hawks | 25 |
| Kevin Willis | Top-Notch Autographs Gold | 4 | Atlanta Hawks | 10 |
| Kyle Korver | Digs and Sigs | 10 | Atlanta Hawks | 15 |
| Kyle Korver | Digs and Sigs Prime | 10 | Atlanta Hawks | 10 |
| Kyle Korver | Foundations Ink | 23 | Atlanta Hawks | 10 |
| Kyle Korver | Foundations Ink Gold | 23 | Atlanta Hawks | 5 |
| Pero Antic | Main Exhibit Signatures Rookies | 43 | Atlanta Hawks | 299 |
| Spud Webb | Main Exhibit Signatures | 2 | Atlanta Hawks | 75 |
| Steve Smith | Game Jerseys Autographs | 3 | Atlanta Hawks | 199 |
| Steve Smith | Game Jerseys Autographs Prime | 3 | Atlanta Hawks | 25 |
| Steve Smith | Top-Notch Autographs | 31 | Atlanta Hawks | 325 |
| Steve Smith | Top-Notch Autographs Gold | 31 | Atlanta Hawks | 25 |

groupbreakchecklists.com

Bill Sharman Top-Notch Autographs

Rosters Set for 2014-15 NBA Regular Season

ROSTERS SET FOR 2014-15 NBA REGULAR SEASON

NEW YORK, Oct. 27, 2014 – Following are the opening day rosters for Kia NBA Tip-Off ‘14. The season begins Tuesday with three games:

| ATLANTA | BOSTON | BROOKLYN | CHARLOTTE | CHICAGO |
| --- | --- | --- | --- | --- |
| Pero Antic | Brandon Bass | Alan Anderson | Bismack Biyombo | Cameron Bairstow |
| Kent Bazemore | Avery Bradley | Bojan Bogdanovic | PJ Hairston | Aaron Brooks |
| DeMarre Carroll | Jeff Green | Kevin Garnett | Gerald Henderson | Mike Dunleavy |
| Al Horford | Kelly Olynyk | Jorge Gutierrez | Al Jefferson | Pau Gasol |
| John Jenkins | Phil Pressey | Jarrett Jack | Michael Kidd-Gilchrist | Taj Gibson |
| Shelvin Mack | Rajon Rondo | Joe Johnson | Jason Maxiell | Kirk Hinrich |
| Paul Millsap | Marcus Smart | Jerome Jordan | Gary Neal | Doug McDermott |
| Mike Muscala | Jared Sullinger | Sergey Karasev | Jannero Pargo | Nikola Mirotic |
| Adreian Payne | Marcus Thornton | Andrei Kirilenko | Brian Roberts | Nazr Mohammed |
| Dennis Schroder | Evan Turner | Brook Lopez | Lance Stephenson | E'Twaun Moore |
| Mike Scott | Gerald Wallace | Mason Plumlee | Kemba Walker | Joakim Noah |
| Thabo Sefolosha | James Young | Mirza Teletovic | Marvin Williams | Derrick Rose |
| Jeff Teague | Tyler Zeller | Deron Williams | Cody Zeller | Tony Snell |

INACTIVE LIST

| ATLANTA | BOSTON | BROOKLYN | CHARLOTTE | CHICAGO |
| --- | --- | --- | --- | --- |
| Elton Brand | Vitor Faverani | Markel Brown | Jeffery Taylor | Jimmy Butler |
| Kyle Korver | Dwight Powell | Cory Jefferson | Noah Vonleh | |

| CLEVELAND | DALLAS | DENVER | DETROIT | GOLDEN STATE |
| --- | --- | --- | --- | --- |
| Matthew Dellavedova | Al-Farouq Aminu | Arron Afflalo | Joel Anthony | Leandro Barbosa |
| Joe Harris | Tyson Chandler | Darrell Arthur | D.J. Augustin | Harrison Barnes |
| Brendan Haywood | Jae Crowder | Wilson Chandler | Caron Butler | Andrew Bogut |

Kentavious Caldwell- Kyrie Irving Monta Ellis

Official Basketball Statistics Rules Basic Interpretations

Official Basketball Statistics Rules
With Approved Rulings and Interpretations

(Throughout this manual, Team A players have last names starting with “A” and Team B players have last names starting with “B.”)

Basic Interpretations
(Indicated as “B.I.” references throughout manual.)

1. APPROVED RULING: Approved rulings (indicated as A.R.s) are designed to interpret the spirit of the application of the Official Basketball Rules. A thorough understanding of the rules is essential to understanding and applying the statistics rules in this manual.

2. STATISTICIAN’S JOB: The statistician’s responsibility is to judge only what has happened, not to speculate as to what would have happened. The statistician should not decide who would have gotten the rebound if it had not been for the foul.

the shooter tries to control and shoot the ball in the same motion with not enough time to get into a normal shooting position (squared up to the basket).

Article 2. A field goal made (FGM) is credited to a player any time a FGA by the player results in the goal being counted or results in an awarded score of two (or three) points except when the field goal is the result of a defensive player tipping the ball in the offensive basket.

Related rules in the NCAA Men’s and Women’s Basketball Rules and Interpretations:
(1) 4-33: Definition of “Goal”
(2) 4-49.2: Definition of “Penalty for Violation”
(3) 4-69: Definition of “Try for Field Goal” and definition of “Act of Shooting”
(4) 4-73: Definition of “Violation”
(5) 5-1: “Scoring”
(6) 9-16: “Basket Interference and Goaltending”

Basketball House Rules

Policy and Procedure

Department: Recreation + Wellness
Section:
Title: Kiewit Fitness Center Basketball House Rules
Effective Date:
Authored by: Lucia Zamecnik
Approval Date:
Approved by:
Revision Date:
Type: Departmental Policy

Purpose: This policy was created to ensure the general safety of all patrons who are planning on participating in basketball within the Kiewit Fitness Center and to provide a general outline of what is expected of those participating.

Scope: All students, faculty, staff and guests who are using the recreational facilities and are planning on participating in pick-up basketball.

Policy: Follow all guidelines associated with basketball games in the Kiewit Fitness Center in the procedure section below. Failure to follow guidelines will result in suspension or facility privileges being revoked.

Procedure/Guidelines:

Team Selection – First Game of the day on each court only:

1. Teams for the first game of the day on each court are determined by shooting free throws. Players may not select their own teams.
2. The first five people to score form one team. The next five people form the second team. Everyone must get an equal number of chances to shoot. If free throw shooting takes too long, players will move to the three-point line to shoot.
3. After teams are selected, a player from either team will take a three-point shot. If it goes in, that team takes the opening in-bound. Otherwise, the other team receives the in-bound to start the game.
4. Teams are formed on a first-come, first-served basis.
5. Whoever has called the next game will accept the next four people who arrive at that court and ask to play.
6.

YMCA Recreational Basketball Rules

YMCA Recreational Basketball Rules

All players must play at least half a game or receive equal playing time. Allowances may be made if practices are missed or for behavioral problems. Team rules should be in place by coaches and team members.

Grades 2-4: Both Head Coaches will meet at mid-court, socially distanced, prior to the game with the official(s) to discuss game procedure, special rules and odd/even number behind back for possession of ball (no center jump).

Grades 5-8: Both Head Coaches will meet at mid-court, socially distanced, prior to the game with the official(s) to discuss game procedures. Tip off at center court to begin game.

PLAYING RULES

In general, the league will be governed by the Nebraska High School Basketball rules.

1. Bench Area: Only the Head Coach can stand during game play (if bench/chairs present). Maximum of 2 coaches on bench. NO PARENTS IN BENCH AREA.
2. Time Limits: Two 20-minute halves. 3-minute break between halves. Grades 2 & 3: Score is not kept; clock will only stop on time-outs/injuries. Grades 4-8: Clock will only stop on time-outs/injuries and on all whistles in the final minute of the game, only if game is within 5 points.
3. Game Time: Game may be started and played with 4 players (5th player, upon arrival, can sub in at dead ball).
4. Time-outs: Each team is allowed one (1) full time-out and one (1) 30-second time-out per half. Time-outs DO NOT carry over to second half.
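The clock rule under Time Limits has enough conditions that a small sketch may help a scorekeeper check a given situation. This is one reading of the rule, not an official implementation. The function name `clock_stops`, the event labels, and the interpretations of "final minute of the game" as the last 60 seconds of the second half and "within 5 points" as a margin of 5 or fewer are all assumptions.

```python
def clock_stops(grade, event, half, seconds_left_in_half, score_margin=0):
    """Return True if the game clock should stop for this event.

    grade: 2 through 8
    event: "timeout", "injury", or "whistle" (hypothetical labels)
    half: 1 or 2
    seconds_left_in_half: seconds remaining in the current 20-minute half
    score_margin: point difference between the teams (ignored for grades 2-3,
                  where score is not kept)
    """
    # Time-outs and injuries stop the clock at every grade level.
    if event in ("timeout", "injury"):
        return True

    # Grades 2 and 3: nothing else stops the clock.
    if grade in (2, 3):
        return False

    # Grades 4-8: whistles stop the clock only in the final minute of the game
    # (assumed: last 60 seconds of the second half) and only in a close game
    # (assumed: margin of 5 points or fewer).
    in_final_minute = half == 2 and seconds_left_in_half <= 60
    close_game = abs(score_margin) <= 5
    return event == "whistle" and in_final_minute and close_game
```

For example, `clock_stops(6, "whistle", 2, 45, score_margin=3)` returns `True`, while the same whistle in a grade 3 game, or with a 10-point margin, returns `False`.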