Program Overview and Operations Plan 26 April, 2021

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Sheila Simmenes.Pdf (1.637Mb)

Distant Music An autoethnographic study of global songwriting in the digital age. SHEILA SIMMENES SUPERVISOR Michael Rauhut University of Agder, 2020 Faculty of Fine Arts Department of Popular music 2 Abstract This thesis aims to explore the path to music creation and shed a light on how we may maneuver songwriting and music production in the digital age. As a songwriter in 2020, my work varies from being in the same physical room using digital communication with collaborators from different cultures and sometimes even without ever meeting face to face with the person I'm making music with. In this thesis I explore some of the opportunities and challenges of this process and shine a light on tendencies and trends in the field. As an autoethnographic research project, this thesis offers an insight into the songwriting process and continuous development of techniques by myself as a songwriter in the digital age, working both commercially and artistically with international partners across the world. The thesis will aim to supplement knowledge on current working methods in songwriting and will hopefully shine a light on how one may improve as a songwriter and topliner in the international market. We will explore the tools and possibilities available to us, while also reflecting upon what challenges and complications one may encounter as a songwriter in the digital age. 3 4 Acknowledgements I wish to express my gratitude to my supervisor Michael Rauhut for his insight, expertise and continued support during this work; associate professor Per Elias Drabløs for his kind advice and feedback; and to my artistic supervisor Bjørn Ole Rasch for his enthusiasm and knowledge in the field of world music and international collaborations. -

The A-Z of Brent's Black Music History

THE A-Z OF BRENT’S BLACK MUSIC HISTORY BASED ON KWAKU’S ‘BRENT BLACK MUSIC HISTORY PROJECT’ 2007 (BTWSC) CONTENTS 4 # is for... 6 A is for... 10 B is for... 14 C is for... 22 D is for... 29 E is for... 31 F is for... 34 G is for... 37 H is for... 39 I is for... 41 J is for... 45 K is for... 48 L is for... 53 M is for... 59 N is for... 61 O is for... 64 P is for... 68 R is for... 72 S is for... 78 T is for... 83 U is for... 85 V is for... 87 W is for... 89 Z is for... BRENT2020.CO.UK 2 THE A-Z OF BRENT’S BLACK MUSIC HISTORY This A-Z is largely a republishing of Kwaku’s research for the ‘Brent Black Music History Project’ published by BTWSC in 2007. Kwaku’s work is a testament to Brent’s contribution to the evolution of British black music and the commercial infrastructure to support it. His research contained separate sections on labels, shops, artists, radio stations and sound systems. In this version we have amalgamated these into a single ‘encyclopedia’ and added entries that cover the period between 2007-2020. The process of gathering Brent’s musical heritage is an ongoing task - there are many incomplete entries and gaps. If you would like to add to, or alter, an entry please send an email to [email protected] 3 4 4 HERO An influential group made up of Dego and Mark Mac, who act as the creative force; Gus Lawrence and Ian Bardouille take care of business. -

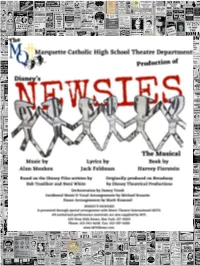

Newsies Digital Program

Director’s Note This show was neither for me, nor about me. It was for the kids. It was for every theatre person who has been denied putting on theatre for the last year. It was for artists, and technicians, and house managers, and designers, and everyone across the country who lost their jobs in the performing arts and have no idea when or if they will be able to return. This show was to let people know that theatre will go on, theatre is important, and whether you realize it or not, theatre is needed. This was a show that I thought we might never be able to pull off, but just to prove that people are capable of so much more than we give ourselves credit for - I decided to do it for these kids. Act One Scenes and Musical Numbers 1. Rooftop: Dawn Santa Fe (Prologue) Jack & Crutchie 2. Newsie’s Square Carrying the Banner Jack, Newsies, & Nuns Carrying the Banner (Tag) Newsies 3. Pulitzer’s Office The Bottom Line Pulitzer, Switzer, Bunsen & Hannah Carrying the Banner(Reprise) Newsies 4. A Street Corner 5. Medda’s Theatre That’s Rich Medda I Never Planned on You/ Don’t Come a-Knocking Jack & Bowery Beauties 6. Newsies Square The World Will Know Jack, Davey, Les, Crutchie & Newsies 7. Jacobi’s Deli & Street The World Will Know(Reprise) Jack, Davey, Les & Newsies 8. Katherine’s Office Watch What Happens Katherine 9. Newsies Square Seize the Day Davey, Jack, Les & Newsies 10. Rooftop Santa Fe Jack Act Two Scenes and Musical Numbers 1. -

Superm 1St Mini Album Download Prostudiomasters.Com

superm 1st mini album download ProStudioMasters.com. High-resolution audio offers the highest-fidelity available, far surpassing the sound quality of traditional CDs. When you listen to music on a CD or tracks purchased via consumer services such as iTunes, you are hearing a low-resolution version of what was actually recorded and mastered in the studio. ProStudioMasters offers the original studio masters — exactly as the artist, producers and sound engineers mastered them — for download, directly to you. What do I need for playback? You may need additional software / hardware to take full advantage of the higher 24-bit high-res audio formats, but any music lover that has heard 16-bit vs 24-bit will tell you it's worth it! Super One -The 1st Album. Purchase and download this album in a wide variety of formats depending on your needs. Buy the album Starting at £9.49. Super One -The 1st Album. Copy the following link to share it. You are currently listening to samples. Listen to over 70 million songs with an unlimited streaming plan. Listen to this album and more than 70 million songs with your unlimited streaming plans. 1 month free, then £14,99/ month. Lucas, Vocalist, AssociatedPerformer - Mark, Vocalist, AssociatedPerformer - Ten, Vocalist, AssociatedPerformer - Moonshine, Producer, Programming, AssociatedPerformer - Kai, Vocalist, AssociatedPerformer - Kenzie, Producer, Programming, AssociatedPerformer, ComposerLyricist - Jonatan Gusmark, ComposerLyricist - Bobii Lewis, ComposerLyricist - Adrian Mckinnon, ComposerLyricist - Taemin, -

Indiana University at Bloomington Official Lists of Graduates And

Indiana University at Bloomington Official Lists of Graduates and Honors Recipients 2017 - 2018 Dates Degrees Conferred June 30, 2017 July 28, 2017 August 19, 2017 August 31, 2017 September 30, 2017 October 31, 2017 November 11, 2017 November 30, 2017 December 16, 2017 January 31, 2018 February 17, 2018 February 28, 2018 March 31, 2018 April 30, 2018 May 4, 2018 May 5, 2018 May 19, 2018 1 2 ** DEGREE LISTINGS FOR STUDENTS WITH COMPLETE RESTRICTIONS ARE EXCLUDED FROM THE RELEASED OFFICIAL LIST OF GRADUATES ** 3 June Business June Business June Business B. S. in Business B. S. in Business B. S. in Business Allen, Daniel Reed Glavin, Timothy Patrick Menne, Justin Patrick Finance Finance BEPP: Economic Consulting Accounting Accounting Finance International Business Accounting Bratrud, Derek David Finance Graf, Krystal Ann Owens, Jackson Dawson Management Finance Civantos, Caroline Elizabeth Operations Management Technology Management Information Systems Gu, Xiaoxuan Perlmutter, Samantha Nicole Claycomb, Cameron Accounting Accounting Accounting Finance Technology Management With Highest Distinction Coen, Andrew Joseph Pfannes, Michael Edward Finance Hasanat, Yaman Marketing Technology Management Supply Chain Management Rayborn, Jessica Ann Connolly, Nathan Allan Hegeler, Wyatt Davis Finance Accounting Legal Studies Accounting Finance Heussner, Matthew Ryan Roberts, Brian William Dave, Neil Bhasker Real Estate Finance Finance Accounting Entrepr & Corp Innovation Donnelly, Lisa Mary Hines, Jennifer Ann Rou, Jeonghwa Marketing Marketing Accounting -

Mayor Joseph A. Curtatone and the Somerville Arts Council Present…

PRESS RELEASE contact: Rachel Strutt Somerville Arts Council 617-625-6600, x2985 [email protected] www.somervilleartscouncil.org Mayor Joseph A. Curtatone and the Somerville Arts Council present… ARTBEAT FESTIVAL DAVIS SQUARE, SOMERVILLE Sat., July 13th, 11am-10pm (rain date: 7/14, same times) Location: Throughout the square, including Seven Hills Park, Elm Street, and Holland Street (streets closed to traffic) Suggested Donation: $3 (Get a cool dog tag!) About ArtBeat: This year the Arts Council is teaming up with the City’s Office of Sustainability and Environment to investigate how artists and climate activists can collaborate in ways that encourage all of us to consume less, affect positive change, and have fun along the way. Also, expect the usual barrage of bands, art, dance, food, a parade—and much more! B.Y.O.W.B. This year we’re asking all festival goers: Bring your own water bottle! You can fill up your bottles at the Quench Buggy (details follow). Let’s make this a festival free of plastic water bottles! ARTBEAT SCHEDULE July 13th [Rain date: 7/14; same times]: Park Stage, Seven Hills Park (behind Somerville Theatre) 12:00 PM Danielle Miraglia & the Glory Junkies (blues/singer-songwriter) 1:00 PM Chris Kaz & the C.O.M.P. (soul/grunge/R&B) 2:00 PM Barrence Whitfield (rock/blues) 3:00 PM Swimming Bell (indie/loops) 4:00 PM The Northern Skulls (rock) 5:00 PM Ruta Beggars (bluegrass) 6:00 PM Klezwoods (klezmer) 7:00 PM Grupo Fantasia (merengue, salsa y mas!) 8:00 PM The Sun Parade (indie) 9:00 PM Cliff Notez (hip-hop) Elm Street Stage (corner of Elm St. -

Chocolate, Mustard and a Fox

Brian Christer Nebb Rånes. Chocolate, Mustard and a Fox: Norwegian K-Pop, Its Production and Performance. Master’s Thesis in Musicology, Trondheim, November 2014. NTNU Norwegian University of Science and Technology, Faculty of Humanities, Department of Music. CONTENTS Contents; Abstract; Acknowledgements; Introduction; Chapter One: A Deliberate Globalization Strategy (Cultural Technology, Hybridization and the Creation of a “World Culture”); Chapter Two: Catering to the Global Market (Globalization of Content in Crayon Pop’s “Bar Bar Bar”; “The Fox Say ‘Bar Bar Bar’”) -

Birmingham, Al

BIRMINGHAM, AL FRIDAY, FEBRUARY 26TH, 2021 MINI/JUNIOR/INTERMEDIATE SOLO COMPETITION BEGINS AT 12:30PM 1 MINI JAZZ SOLO I WILL SURVIVE Q MADDIE EL-AMIN 2 MINI JAZZ SOLO YES I CAN Q ANNIE CARDWELL 3 MINI MUSICAL THEATRE SOLO SPANISH ROSE Q CECE HANLEY 4 MINI LYRICAL SOLO DREAM Q LOUISE COLE 5 JUNIOR CONTEMP/MODERN SOLO FIRE Q ALLIE PLOTT 6 INTERMEDIATE TAP SOLO BOOGIE SHOES Q MACKENZIE MEISSNER 7 INTERMEDIATE JAZZ SOLO SOPHISTICATED Q CAYLER ANN RICH 8 INTERMEDIATE LYRICAL SOLO DAUGHTER Q JULIANNA ARENDALE 9 INTERMEDIATE LYRICAL SOLO MY HEART WITH YOU Q LYDIA SMITH 10 INTERMEDIATE LYRICAL SOLO RELEASE Q ISABELLA WILLIAMS 11 INTERMEDIATE LYRICAL SOLO WITHOUT YOU Q KATE FELLER 12 INTERMEDIATE LYRICAL SOLO NO MORE TEARS Q GRACIE ELDER 1 13 INTERMEDIATE LYRICAL SOLO UPHILL BATTLE Q SADIE BROOKE COLLINS 14 INTERMEDIATE CONTEMP/MODERN SOLO MIND Q MARY JORDAN CLODFELTER 15 INTERMEDIATE CONTEMP/MODERN SOLO BILLIE JEAN Q BROOKE WELTON 16 INTERMEDIATE CONTEMP/MODERN SOLO WHISPER Q LUCY CLAIRE REILLY 17 INTERMEDIATE CONTEMP/MODERN SOLO SMOKESTACKS Q GRACIE HAWKINS 18 INTERMEDIATE CONTEMP/MODERN SOLO THE ESCAPE Q OAKLEY MAY 19 MINI JAZZ SOLO DO YOUR OWN THING J VADA GARNER 20 MINI BALLET/POINTE SOLO RAINBOW J MICHELLE GRANT 1:30PM 21 JUNIOR JAZZ SOLO COVER GIRL J SYDNEY TAYLOR 22 JUNIOR JAZZ SOLO MAKE IT SUPER LOUD J PIPER EVANS 23 JUNIOR BALLET/POINTE SOLO THOUSAND YEARS J MARY LARK 24 INTERMEDIATE JAZZ SOLO DON'T SLACK J ABIGIAL GRIMSLEY 25 MINI JAZZ SOLO THE WAY YOU MAKE ME FEEL N KAYA PLEASANT 26 MINI JAZZ SOLO DO YOU BELIEVE IN MAGIC N ALAINA -

College of Business Commencement 2021

COLLEGE OF BUSINESS COMMENCEMENT 2021 Welcome to CALIFORNIA STATE UNIVERSITY LONG BEACH California State University, Long Beach is a member of the 23-campus California State University (CSU) system. The initial college, known then as Los Angeles-Orange County State College, was established on January 29, 1949, and has since grown to become one of the state’s largest universities. The first classes in 1949 were held in a converted apartment building on Anaheim Street and the cost to enroll was just $12.50. The 169 transfer students selected from the 25 courses offered in Teacher Education, Business Education, and Liberal Arts which were taught by 13 faculty members. Enrollment increased in 1953 when freshman and sophomore students were admitted. Expansion, in acreage, degrees, courses and enrollment continued in the 1960s, when the educational mission was modified to provide instruction for undergraduate and graduate students through the addition of master’s degrees. In 1972, the California Legislature changed the name to California State University, Long Beach. Today, more than 37,000 students are enrolled at Cal State Long Beach, and the campus annually receives high rankings in several national surveys. What students find when they come here is an academic excellence achieved through a distinguished faculty, hard-working staff and an effective and visionary administration. The faculty’s primary responsibility is to create, through effective teaching, research and creative activities, a learning environment where students grow and develop to their fullest potential. This year, because of the pandemic, we will celebrate the outstanding graduates of two classes – 2020 and 2021. -

Super Junior

Super Junior From Wikipedia, the free encyclopedia For the professional wrestling tournament, see Best of the Super Juniors. Super Junior Super Junior performing at SMTown Live '08 in Bangkok, Thailand Background information Origin Seoul, South Korea Genres Pop, R&B, dance, electropop, electronica, dance-pop, rock, electro, hip-hop, bubblegum pop Years active 2005–present Labels S.M. Entertainment (South Korea) Avex Group (Japan) Associated acts SM Town, Super Junior-K.R.Y., Super Junior-T, Super Junior-M, Super Junior-Happy, S.M. The Ballad, M&D Website superjunior.smtown.com, facebook.com/superjunior Members Leeteuk Heechul Han Geng Yesung Kangin Shindong Sungmin Eunhyuk Donghae Siwon Ryeowook Kibum Kyuhyun Korean name Hangul 슈퍼주니어 Revised Romanization Syupeojunieo McCune–Reischauer Syupŏjuniŏ This article contains Korean text. Without proper rendering support, you may see question marks, boxes, or other symbols instead of Hangul or Hanja. This article contains Chinese text. Without proper rendering support, you may see question marks, boxes, or other symbols instead of Chinese characters. This article contains Japanese text. Without proper rendering support, you may see question marks, boxes, or other symbols instead of kanji and kana. Super Junior (Korean: 슈퍼주니어; Japanese: スーパージュニア) is a South Korean boy band formed by S.M. Entertainment in 2005. The group debuted with 12 members: Leeteuk (leader), Heechul, Han Geng, Yesung, Kangin, Shindong, Sungmin, Eunhyuk, Donghae, Siwon, Ryeowook, Kibum and later added a 13th member named Kyuhyun; they are one of the largest boy bands in the world. As of September 2011, eight members are currently active,[1] due to Han Geng's lawsuit with S.M. -

2019-20 Officers JMHS Band Parents Organization

Color Guard Awards Inserted Here JMHS Band Parents Organization 2019-20 Officers JMHS Band Parents Organization Catherine Baker and Alex Keam CO- PRESIDENTS John Weeks and Roman Neumeister CO- VICE PRESIDENTS Sylvia Martin-Estes TREASURER Heather Ireland SECRETARY 2019-20 Committee Chairs AWARDS: Mary Kay Alegre SET CONSTRUCTION: TINY TOTS: Jean Torres, Karen Richard Skinner Cain BAND CAMP: Laura Shannon SPRING TRIP: Sylvia Martin- TRANSPORTATION & PIT Estes EQUIPMENT: Richard Skinner, CHAPERONES: Katherine Ken Church Thomas, Rita Monner, Anna THANK Neumeister SCRIP: Joan Walsh TURKEY TROT: Helena Klumpp, Bill Emery, Guy HOSPITALITY: Cheryl Cass SENIOR NIGHT: Amy Masters Kiyokawa PHOTOGRAPHY: Melissa SPIRITWEAR: Alicia Manfra UNIFORMS: Susan Petrovich, Maillet, Mercy Berbano Susan Toloczko YOU! TAG DAY: Greg Gurski, Katie PUBLICITY: Ellen James Gurski WHITE HOUSE ORNAMENTS: Becky Lewis 50/50 RAFFLE: Bill Mann 2020 AWARDS Virginia State Champion. •. BOA Mid-Atlantic Regional Champion •. BOA National Semi-Finalist The JMHS Band Parents Organization WISHES TO SAY THANK YOU THANK TO ALL OUR VOLUNTEERS! YOU! 2020 AWARDS Virginia State Champion. •. BOA Mid-Atlantic Regional Champion •. BOA National Semi-Finalist 2020-21 Officers JMHS Band Parents Organization John Weeks and Roman Neumeister CO- PRESIDENTS Guy Kiyokawa and Katie Geiser-Bush CO- VICE PRESIDENTS Rachel Paci and Kristin Rothrock CO- TREASURERS Heather Ireland SECRETARY Thank You Mr. Hackbarth THANK YOU! 2020 AWARDS Virginia State Champion. •. BOA Mid-Atlantic Regional Champion •. -

Commencement Program 4 Commencement Speaker 5 Honorary Degree Recipients 9 Student Speaker 10 Candidates for Degrees

Duke University Commencement One Hundred Sixty-Seventh Commencement Sunday, May 12, 2019 9:00 a.m. Wallace Wade Stadium Duke University Durham, North Carolina Table of Contents 2 Commencement Program 4 Commencement Speaker 5 Honorary Degree Recipients 9 Student Speaker 10 Candidates for Degrees 10 Graduate School 29 School of Nursing Doctor of Philosophy Doctor of Nursing Practice Master of Arts Master of Science in Nursing Master of Arts in Teaching Bachelor of Science in Nursing Master of Fine Arts Master of Science 31 Fuqua School of Business Master of Business Administration 21 School of Medicine Master of Management Studies Doctor of Medicine Master of Science in Quantitative Management Doctor of Physical Therapy Master of Biostatistics 35 Nicholas School of the Environment Master of Health Sciences International Master of Environmental Policy* Master of Health Sciences in Clinical Research Master of Environmental Management Master of Management in Clinical Informatics Master of Forestry Master of Science in Biomedical Sciences 36 Sanford School of Public Policy 24 School of Law Master of International Development Policy Doctor of Juridical Science Master of Public Policy Juris Doctor 37 Pratt School of Engineering Master of Laws Master of Engineering Master of Laws, International and Comparative Law Master of Engineering Management Master of Laws, Law and Entrepreneurship Bachelor of Science in Engineering 26 Divinity School 40 Trinity College of Arts and Sciences Doctor of Ministry Bachelor of Arts Doctor of Theology Bachelor of Science Master of Arts in Christian Practice Master of Arts In Christian Studies Master of Divinity Master of Theological Studies Master of Theology 45 Honors and Distinctions 52 Special Prizes and Awards 55 Scholarships and Fellowships 56 Military Service 56 Members of the Faculty Retiring 57 Marshals 59 Departmental Events 60 The Traditions of Commencement 61 Commencement Timeline * Joint degree with Sanford School of Public Policy TWO THOUSAND NINETEEN COMMENCEMENT 2 Commencement Program Presiding Vincent E.