Architecture and Design of Alphaserver GS320

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Alphaserver GS1280 Overview

QuickSpecs HP AlphaServer GS1280 Systems Overview HP AlphaServer GS1280 Models 8 and 16 1. Cable/DSL HUB 2. PCI/PCI-X I/O Expansion Drawer (optional) 3. PCI/PCI-X I/O Master Drawer (mandatory option) 4. System Building Block Drawer (Model 8 Includes 1 drawer, Model 16 includes 2 drawers) 5. 48-volt DC Power shelves, 3 power supplies per shelf (Model 8 includes 1 shelf, Model 16 includes 2 shelves) 6. AC input controller(s) (mandatory option) DA - 11921 Worldwide — Version 24 — July 23, 2007 Page 1 QuickSpecs HP AlphaServer GS1280 Systems Overview HP AlphaServer GS1280 Model 32 1. Cable/DSL HUB 2. I/O Expansion Drawer (optional) 3. I/O Master Drawer (mandatory option) 4. System Building Block Drawer (Model 32 includes four drawers) 5. Power supply, dual AC input is standard 6. AC input controllers (two mandatory options for Model 32) DA - 11921 Worldwide — Version 24 — July 23, 2007 Page 2 QuickSpecs HP AlphaServer GS1280 Systems Overview HP AlphaServer GS1280 Model 64 1. Cable/DSL HUB 2. I/O Expansion Drawer (optional) 3. I/O Master Drawer (one drawer is optional) 4. System Building Block Drawer (Model 64 includes eight drawers) 5. Power supply, dual AC input is standard (two included for Model 64) 6. AC input controllers (two mandatory options for Model 64) DA - 11921 Worldwide — Version 24 — July 23, 2007 Page 3 QuickSpecs HP AlphaServer GS1280 Systems Overview At A Glance AlphaServer GS1280 Systems Up to 64 Alpha 21364 EV7 processors at 1300 MHz and 1150 MHz with advanced on-chip memory controllers and switch logic capable of providing -

Computer Architectures an Overview

Computer Architectures An Overview PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC Contents Articles Microarchitecture 1 x86 7 PowerPC 23 IBM POWER 33 MIPS architecture 39 SPARC 57 ARM architecture 65 DEC Alpha 80 AlphaStation 92 AlphaServer 95 Very long instruction word 103 Instruction-level parallelism 107 Explicitly parallel instruction computing 108 References Article Sources and Contributors 111 Image Sources, Licenses and Contributors 113 Article Licenses License 114 Microarchitecture 1 Microarchitecture In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design. Relation to instruction set architecture The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, address and data formats among other things. The Intel Core microarchitecture microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic units (ALU)s and even larger elements. -

Piranha:Piranha

Piranha:Piranha: DesigningDesigning aa ScalableScalable CMP-basedCMP-based SystemSystem forfor CommercialCommercial WorkloadsWorkloads LuizLuiz AndréAndré BarrosoBarroso WesternWestern ResearchResearch LaboratoryLaboratory April 27, 2001 Asilomar Microcomputer Workshop WhatWhat isis Piranha?Piranha? l A scalable shared memory architecture based on chip multiprocessing (CMP) and targeted at commercial workloads l A research prototype under development by Compaq Research and Compaq NonStop Hardware Development Group l A departure from ever increasing processor complexity and system design/verification cycles ImportanceImportance ofof CommercialCommercial ApplicationsApplications Worldwide Server Customer Spending (IDC 1999) Scientific & Other engineering 3% 6% Infrastructure Collaborative 29% 12% Software development 14% Decision Business support processing 14% 22% l Total server market size in 1999: ~$55-60B – technical applications: less than $6B – commercial applications: ~$40B PricePrice StructureStructure ofof ServersServers Normalized breakdown of HW cost l IBM eServer 680 100% (220KtpmC; $43/tpmC) 90% § 24 CPUs 80% 70% I/O § 96GB DRAM, 18 TB Disk 60% DRAM 50% § $9M price tag CPU 40% Base 30% l Compaq ProLiant ML370 20% 10% (32KtpmC; $12/tpmC) 0% § 4 CPUs IBM eServer 680 Compaq ProLiant ML570 § 8GB DRAM, 2TB Disk Price per component System § $240K price tag $/CPU $/MB DRAM $/GB Disk IBM eServer 680 $65,417 $9 $359 Compaq ProLiant ML570 $6,048 $4 $64 - Storage prices dominate (50%-70% in customer installations) - Software maintenance/management costs even higher (up to $100M) - Price of expensive CPUs/memory system amortized OutlineOutline l Importance of Commercial Workloads l Commercial Workload Requirements l Trends in Processor Design l Piranha l Design Methodology l Summary StudiesStudies ofof CommercialCommercial WorkloadsWorkloads l Collaboration with Kourosh Gharachorloo (Compaq WRL) – ISCA’98: Memory System Characterization of Commercial Workloads (with E. -

Impact of Chip-Level Integration on Performance of OLTP Workloads

Appeared in the Sixth International Symposium on High-Performance Computer Architecture (HPCA), January 2000. Impact of Chip-Level Integration on Performance of OLTP Workloads Luiz Andre´ Barroso, Kourosh Gharachorloo, Andreas Nowatzyk and Ben Verghese Western Research Laboratory Compaq Computer Corporation g fbarroso,kourosh,verghese @pa.dec.com, [email protected] Abstract sistors on a single die. At the same time, the increasing density and speed of transistors make off-chip communication relatively With increasing chip densities, future microprocessor designs more expensive. These technology trends provide an incentive have the opportunity to integrate many of the traditional system- to integrate functional modules that traditionally appear at the level modules onto the same chip as the processor. Some cur- system-level onto the same die as the processor. The current HP rent designs already integrate extremely large on-chip caches, PA-8500 microprocessor already integrates a 1MB data cache and there are aggressive next-generation designs that attempt to and a 0.5MB instruction cache with the processor. Similarly, also integrate the memory controller, coherence hardware, and the next-generation Alpha 21364 plans to aggressively exploit network router all onto a single chip. The tight coupling of such integration by including a 1GHz 21264 core, two levels these modules will enable efficient memory systems with sub- of caches, memory controller, coherence hardware, and network stantially better latency and bandwidth characteristics relative router all on a single die. Figure 1 shows a block diagram of the to current designs. Among the important application areas for Alpha 21364 along with how the processors can be connected to high-performance servers, online transaction processing (OLTP) form a scalable multiprocessor. -

Alphaserver ES47 Overview

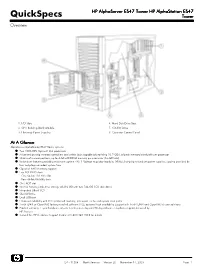

HP AlphaServer ES47 Tower HP AlphaStation ES47 QuickSpecs Tower Overview 1. I/O Slots 4. Hard Disk Drive Bays 2. CPU Building Block Module 5. CD-RW Drive 3. Hot-swap Power Supplies 6. Operator Control Panel At A Glance AlphaServer/AlphaStation ES47 Tower systems Two 1000-MHz Alpha 21364 processors Advanced on-chip memory controllers and switch logic capable of providing 10.7-GB/s of peak memory bandwidth per processor Choice of memory options; up to 4-GB of RDRAM memory per processor (16-GB total) Redundant features providing maximum uptime - N+1 Voltage regulator Modules (VRMs); hot-plug redundant power supplies; cooling provided by four hot-plug redundant system fans Optional RAID memory support Five PCI-X I/O slots - One 64-bit/133-MHz slot - Four 64-bit/66-MHz slots One AGP slot Internal hot-plug disk drive storage of 292 GB with two 146-GB SCSI disk drives Integrated Ultra3 SCSI CD-RW Drive Dual USB port Enhanced reliability with ECC-protected memory, processor cache and system data paths Tru64 UNIX or OpenVMS factory installed software (FIS); optional high availability support with Tru64 UNIX and OpenVMS cluster solutions Product warranty, 1-year hardware, on-site next business day and 90-day software, telephone support delivered by HP Services Consult the HP Customer Support Center at 1-800 345 1518 for details DA - 11508 North America — Version 20 — November 14, 2003 Page 1 HP AlphaServer ES47 Tower HP AlphaStation ES47 QuickSpecs Tower Standard Features Processor Two Alpha 21364 1000-MHz CPUs Cache Memory 1.75-MB ECC L2 on-chip -

Piranha: a Scalable Architecture Based on Single-Chip Multiprocessing

Piranha: A Scalable Architecture Based on Single-Chip Multiprocessing Luiz André Barroso, Kourosh Gharachorloo, Robert McNamara†, Andreas Nowatzyk, Shaz Qadeer†, Barton Sano, Scott Smith‡, Robert Stets, and Ben Verghese Western Research Laboratory †Systems Research Center ‡NonStop Hardware Development Compaq Computer Corporation Compaq Computer Corporation Compaq Computer Corporation Palo Alto, CA 94301 Palo Alto, CA 94301 Austin, TX 78728 Abstract pushing the limits of instruction-level parallelism and speculative out-of-order execution. While this trend has led to significant The microprocessor industry is currently struggling with higher performance gains on target applications such as the SPEC development costs and longer design times that arise from exceed- benchmark [40], continuing along this path is becoming less viable ingly complex processors that are pushing the limits of instruction- due to substantial increases in development team sizes and design level parallelism. Meanwhile, such designs are especially ill suited times [18]. Furthermore, more complex designs are yielding for important commercial applications, such as on-line transaction diminishing returns in performance even for applications such as processing (OLTP), which suffer from large memory stall times SPEC. and exhibit little instruction-level parallelism. Given that commer- Meanwhile, commercial workloads such as databases and Web cial applications constitute by far the most important market for applications have surpassed technical workloads to become the high-performance -

The Push Architecture: a Prefetching Framework for Linked Data Structures

THE PUSH ARCHITECTURE A PREFETCHING FRAMEWORK FOR LINKED DATASTRUCTURES by ChiaLin Yang Department of Computer Science DukeUniversity Date Approved Dr Alvin R Leb eck Sup ervisor Dr Jerey S Chase Dr Gershon Kedem Dr Nikos P Pitsianis Dr Xiaobai Sun Dissertation submitted in partial fulllmentofthe requirements for the degree of Do ctor of Philosophy in the Department of Computer Science in the Graduate Scho ol of DukeUniversity c Copyright by ChiaLin Yang All rights reserved ABSTRACT Computer Science THE PUSH ARCHITECTURE A PREFETCHING FRAMEWORK FOR LINKED DATASTRUCTURES by ChiaLin Yang Department of Computer Science DukeUniversity Date Approved Dr Alvin R Leb eck Sup ervisor Dr Jerey S Chase Dr Gershon Kedem Dr Nikos P Pitsianis Dr Xiaobai Sun An abstract of a dissertation submitted in partial fulllment of the requirements for the degree of Do ctor of Philosophy in the Departmentof Computer Science in the Graduate Scho ol of DukeUniversity Abstract The widening p erformance gap b etween pro cessors and memory makes techniques that alleviate this disparity essential for building highp erformance computer sys tems Caches are recognized as a costeective metho d to improve memory system p erformance However a caches eectiveness can b e limited if programs have p o or lo calityThus techniques that hide memory latency are essential to bridging the CPUmemory gap Prefetching is a commonly used technique to overlap memory accesses with computation Prefetching for arraybased numeric applications with regular ac cess patterns has b een well -

Mappingdatatobmcatriumcmdb 2.1.00

Mapping Your Data to BMC Atrium CMDB 2.1.00 Classes This table maps configuration items (CI) found in your environment to the classes available for BMC Atrium CMDB, whether in the common Data Model or an extension. It contains only the information necessary to map a given type of CI. In some cases, this includes attribute values you must set to distinguish the CI from other CI's that are stored in the same class. CI Class Class owner Attributes to set 68000 BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "68000" 68010 BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "68010" 68020 BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "68020" 68030 BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "68030" 68040 BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "68040" 68xxx family BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "68xxx family" 6x86 BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "6x86" Access server BMC.CORE:BMC_ComputerSystem Common Data Model PrimaryCapability = "Access Server" Account BMC.CORE:BMC_Account Common Data Model Activity BMC.CORE:BMC_Activity Common Data Model Admin domain BMC.CORE:BMC_AdminDomain Common Data Model Alpha 21064 BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "Alpha 21064" Alpha 21066 BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "Alpha 21066" Alpha 21164 BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "Alpha 21164" Alpha 21164a BMC.CORE:BMC_Processor Common Data Model ProcessorFamily = "Alpha -

Performance Analysis of the Alpha 21364-Based HP GS1280 Multiprocessor Zarka Cvetanovic Hewlett-Packard Corporation [email protected]

Performance Analysis of the Alpha 21364-based HP GS1280 Multiprocessor Zarka Cvetanovic Hewlett-Packard Corporation [email protected] Abstract This paper evaluates performance characteristics of the HP These improvements enhanced single-CPU performance GS1280 shared memory multiprocessor system. The and contributed to excellent multiprocessor scaling. We GS1280 system contains up to 64 Alpha 21364 CPUs describe and analyze these architectural advances and connected together via a torus-based interconnect. We present key results and profiling data to clarify the describe architectural features of the GS1280 system. We benefits of these design features. We contrast GS1280 to compare and contrast the GS1280 to the previous- two previous-generation Alpha systems, both based on a generation Alpha systems: AlphaServer GS320 and 21264 processor: GS320 – a 32-CPU SMP NUMA system ES45/SC45. We further quantitatively show the with switch-based interconnect [2], and SC45 – a 4-CPU performance effects of these features using application ES45 systems connected in a cluster configuration via a results and profiling data based on the built-in fast Quadrics switch. [4][5][6][7]. performance counters. We find that the HP GS1280 often SPECfp_rate2000 (Peak) provides 2 to 3 times the performance of the AlphaServer HP GS1280/1.15GHz (1-16P published, 32P estimated) HP SC45/1.25GHz (1-4P published, >4P estimated) GS320 at similar clock frequencies. We find the key 600 HP GS320/1.2GHz (published) IBM/p690/650 Turbo/1.3/1.45GHz (published) reasons for such performance gains are advances in 550 SGI Altix 3K/1GHz (published) memory, inter-processor, and I/O subsystem designs. -

Piranha: a Scalable Architecture Based on Single-Chip Multiprocessing

COMPAQ CONFIDENTIAL - DO NOT DISTRIBUTE Piranha: A Scalable Architecture Based on Single-Chip Multiprocessing Luiz André Barroso, Kourosh Gharachorloo, Robert McNamara‡, Andreas Nowatzyk, Shaz Qadeer‡, Barton Sano, Scott Smith†, Robert Stets, and Ben Verghese Western Research Laboratory, (‡)Systems Research Center, and (†)Tandem ASIC Design Center Compaq Computer Corporation Abstract The microprocessor industry is currently struggling with higher development costs and longer design times that arise from exceedingly complex processors that are pushing the limits of instruction-level parallelism. Meanwhile, such designs are especially ill-suited for important commercial applications, such as on-line transaction processing (OLTP), which suffer from large memory stall times and exhibit little instruction-level parallelism. Given that commercial appli- cations constitute by far the most important market for high-performance servers, the above trends emphasize the need to consider alternative processor designs that specifically target such workloads. The abundance of explicit thread-level parallelism in commercial workloads, along with advances in semiconductor integration density, identify chip multipro- cessing (CMP) as potentially the most promising approach for designing processors targeted at commercial servers. This paper describes the Piranha system, a research prototype being developed at Compaq (a joint effort by Corporate Research and Tandem Division ASIC Design Center). Piranha aggressively exploits chip multiprocessing by integrating eight simple Alpha processor cores along with a two-level cache hierarchy onto a single chip. Piranha also integrates further on-chip functionality to allow for scalable multiprocessor configurations to be built in a glueless and modular fashion. The Alpha processor cores in Piranha are based on a simple single-issue in-order pipelined design. -

HP Alphaserver ES47 / ES80 / GS1280 User Information

hp AlphaServer ES47/ES80/GS1280 User Information Version 3.0 This document is intended for those who manage, operate, or service hp AlphaServer ES47, ES80 and GS1280 systems. Legal notices and Regulatory notices i June 2004 © 2004 Hewlett-Packard Development Company, L.P. Linux is a registered trademark of Linus Torvalds in several countries. UNIX is a trademark of The Open Group in the United States and other countries. All other product names mentioned herein may be trademarks of their respective companies. HP shall not be liable for technical or editorial errors or omissions contained herein. The information in this document is provided “as is” without warranty of any kind and is subject to change without notice. The warranties for HP products are set forth in the express limited warranty statements accompanying such products. Nothing herein should be construed as constituting an additional warranty. Regulatory Notices for Series ET2003 FCC Notice Part 15 of the Federal Communications Commission (FCC) Rules and Regulations has established Radio Frequency (RF) emission limits to provide an interference-free radio frequency spectrum. Many electronic devices, including computers, generate RF energy incidental to their intended function and are, therefore, covered by these rules. These rules place computers and related peripheral devices into two classes, A and B, depending upon their intended installation. Class A devices are those that may reasonably be expected to be installed in a business or commercial environment. Class B devices are those that may reasonably be expected to be installed in a residential environment (i.e., personal computers). The FCC requires devices in both classes to bear a label indicating the interference potential of the device as well as additional operating instructions for the user. -

Detailed Design and Evaluation of Redundant Multithreading Alternatives* Shubhendu S

29th Annual International Symposium on Computer Architecture (ISCA), 2002 Detailed Design and Evaluation of Redundant Multithreading Alternatives* Shubhendu S. Mukherjee Michael Kontz Steven K. Reinhardt VSSAD Colorado VLSI Lab EECS Department Massachusetts Microprocessor Design Center Systems & VLSI Technology Operations University of Michigan, Ann Arbor Intel Corporation Hewlett-Packard Company 1301 Beal Avenue 334 South Street, SHR1-T25 3404 East Harmony Road, ms 55 Ann Arbor, MI 48109-2122 Shrewsbury, MA 01545 Fort Collins, CO 80525 [email protected] [email protected] [email protected] ABSTRACT techniques in forthcoming dual-processor single-chip devices. Exponential growth in the number of on-chip transistors, coupled Our implementation of the single-processor RMT device is based with reductions in voltage levels, makes each generation of on the previously published simultaneous and redundantly threaded microprocessors increasingly vulnerable to transient faults. In a (SRT) processor design [15] (Figure 1a). However, unlike previous multithreaded environment, we can detect these faults by running evaluations, we start with an extremely detailed performance model two copies of the same program as separate threads, feeding them of an aggressive, commercial-grade SMT microprocessor identical inputs, and comparing their outputs, a technique we call resembling the Compaq Alpha Araña (a.k.a. 21464 or EV8) [12]. Redundant Multithreading (RMT). We call this our base processor. We found several subtle issues This paper studies RMT techniques in the context of both single- involved in adding SRT features to such a base SMT design. For and dual-processor simultaneous multithreaded (SMT) single-chip example, adapting the SRT branch outcome queue, which uses devices.