The Evolution of Applied Harmonic Analysis Models of the Real World

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Name Street City ABABULUGU, BIFTU a 309 CAMPUS DR UNIT 3

Name Street City ABABULUGU, BIFTU A 309 CAMPUS DR UNIT 3 AMHERST NY 14226 ABALIGDI, AYESHA A 161 PRINCETON AVE UNIT 3 AMHERST NY 14226 ABAS, SELWA K 136 PRINCETON AVE UNIT 2 AMHERST NY 14226 ABAZAID, EMAN R 581 ALLENHURST RD UNIT A AMHERST NY 14226 ABBAS, AMMAR 288 ALLENHURST RD AMHERST NY 14226 ABBATE, JOSEPHINE A 166 THISTLE LEA WILLIAMSVILLE NY 14221 ABBATOY, DAVID M 535 BURROUGHS DR AMHERST NY 14226 ABBOTT, ELIZABETH F 293 SARATOGA RD AMHERST NY 14226 ABBOTT, GREGORY N 293 SARATOGA RD AMHERST NY 14226 ABBOTT, JACLYN K 216 BERRYMAN DR AMHERST NY 14226 ABBOTT, JAMES E 334 CALLODINE AVE AMHERST NY 14226 ABBOTT, MARY ROBERTA 519 GROVER CLEVELAND HWY AMHERST NY 14226 ABBOTT, MYLES M 293 SARATOGA RD AMHERST NY 14226 ABDALLAH, BAHI 591 ALLENHURST RD AMHERST NY 14226 ABDELLATIF, IHMAIDAN M 497 ALLENHURST RD AMHERST NY 14226 ABDEL-LATIF, RUBA 497 ALLENHURST RD UNIT A AMHERST NY 14226 ABDULJABBAR, ZAID 533 ALLENHURST RD UNIT B AMHERST NY 14226 ABED, JAWAD K 210 CAPEN BLVD AMHERST NY 14226 ABEDZADEAH, ANIS 72 MEADOW LEA DR AMHERST NY 14226 ABEDZADEH, YOUNES 72 MEADOW LEA DR AMHERST NY 14226 ABEEL, ASHLEY C 326 ROYCROFT BLVD AMHERST NY 14226 ABEEL, JOHN EDMUND 326 ROYCROFT BLVD AMHERST NY 14226 ABEL, BARBARA N 351 CAPEN BLVD AMHERST NY 14226 ABEL, JANE M 356 HUXLEY DR AMHERST NY 14226 ABEL, KEVIN S 356 HUXLEY DR AMHERST NY 14226 ABEL, NANCY J 356 HUXLEY DR AMHERST NY 14226 ABEL, RICHARD C 356 HUXLEY DR AMHERST NY 14226 ABEL-NEAL, SHERYL L 350 WASHINGTON HWY AMHERST NY 14226 ABELS, JOHN R 78 SARGENT DR AMHERST NY 14226 ABELS, LINDSAY E 78 SARGENT -

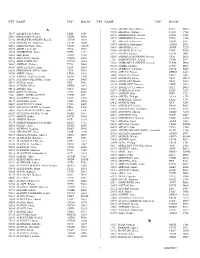

Ext Name Div Room Ext Name Div Room ______

EXT NAME DIV ROOM EXT NAME DIV ROOM __________________________________________________________________________________________________________________________ A 56407 ALUNG, Ashok CSAP D170 52673 ALVETRETI, Letizia CSPL D328 54157 ABABOUCH, Lahsen FIPX F405 54458 AMADO, Maria Blanca TCIB D503 55581 ABALSAMO, Stefania CIOO F713 53290 AMARAL, Cristina TCE C744 53264 ABBASSIAN, Abdolreza ESTD D804 55126 AMBROSIANO, Luciana AGDF C292 54511 ABBONDANZA, Carla CPA A143B 1709 AMEGEE, Emilienne CSSD SSC 52390 ABDELLA, Yesuf TCIA D557 56437 AMICI, Paolo ESSD C456 55396 ABDIRIZZAK, Tania FOED C474 53985 AMMATI, Mohammed AGPM B752 53485 ABE, Kaori TCE C751 54876 AMOROSO, Leslie ESN C214 54315 ABI NASSIF, Joseph CSDM B104 56891 AMROUK, El Mamoun ESTD D866 53963 ABI RACHED, Elie CPAM A281 53980 ANAMAN, Frederick CIOF B239 55087 ABITBOL, Nathalie TCIA D556 54213 ANAND, Sanjeev TCSD D611 56584 ABOU-RIZK, Margaret LEGP D371 53309 ANDARIAS DE PRADO, Rebeca CSAI D102 1716 ABRAHAM, Erika CSSD SSC 1725 ANDONOVSKA, Liljana CSSD SSC 56202 ABRAMO-GUARNA, Anna TCIA D554 53602 ANDRADE CIANFRINI, Graciela FOMD D468 55375 ABRAMOVA, Olga OSD D728 55742 ANELLO, Enrico OEKM C102 54178 ABRAMOVICI, Pierre CIOH C130 54441 ANGELINI, Chiara CPAP A487 56760 ABREU, Maria LEGD A442 54109 ANGELINI, Flavio CSAI D114 53296 ABRINA, Angelica Gavina OSPD B449 55313 ANGELONI, Marino CSAI D103 52709 ACCARDO-DELHOME, Jeanne FODP D435 53145 ANGELOZZI, Vanessa CSDU A042 53843 ACHOURI, Moujahed NRL B732 53720 ANGELUCCI, Federica ESTD D863 52294 ACOSTA, Natalia OEDD B452 52677 ANIBALDI, -

Screening to Prevent Osteoporotic Fractures: an Evidence Review for the U.S

Evidence Synthesis Number 162 Screening to Prevent Osteoporotic Fractures: An Evidence Review for the U.S. Preventive Services Task Force Prepared for: Agency for Healthcare Research and Quality U.S. Department of Health and Human Services 5600 Fishers Lane Rockville, MD 20857 www.ahrq.gov Contract No. HHSA-290-2012-00015-I, Task Order No. 6 Prepared by: RTI International–University of North Carolina Evidence-based Practice Center Research Triangle Park, NC Investigators: Meera Viswanathan, PhD Shivani Reddy, MD, MSc Nancy Berkman, PhD Katie Cullen, BA Jennifer Cook Middleton, PhD Wanda K. Nicholson, MD, MPH, MBA Leila C. Kahwati, MD, MPH AHRQ Publication No. 15-05226-EF-1 October 2017 This report is based on research conducted by the RTI International–University of North Carolina Evidence-based Practice Center (EPC) under contract to the Agency for Healthcare Research and Quality (AHRQ), Rockville, MD (Contract No. HHSA-290-2012-00015-I, Task Order No. 6). The findings and conclusions in this document are those of the authors, who are responsible for its contents, and do not necessarily represent the views of AHRQ. Therefore, no statement in this report should be construed as an official position of AHRQ or of the U.S. Department of Health and Human Services. The information in this report is intended to help health care decisionmakers—patients and clinicians, health system leaders, and policymakers, among others—make well-informed decisions and thereby improve the quality of health care services. This report is not intended to be a substitute for the application of clinical judgment. Anyone who makes decisions concerning the provision of clinical care should consider this report in the same way as any medical reference and in conjunction with all other pertinent information (i.e., in the context of available resources and circumstances presented by individual patients). -

Graduate Commencement

Commencement Exercises SUNDAY, MAY 22, 2011 ALMA MATER “The Netherlands” (first stanza) O Hofstra, to honor thy name we foregather, Rejoicing in voicing thy praises anew; By thee we are guided, with counsel provided; Sustain us with thy strength in the paths we pursue. Commencement Exercises SUNDAY, MAY 22, 2011 This is the unofficial program of the May 22, 2011, commencement. The official program will be archived on August 24, 2011. Table of Contents Alma Mater..................................................................................................Inside Front Cover History of Hofstra ...................................................................................................................3 Commencement Notes ............................................................................................................7 Academic Honors .................................................................................................................10 Honorary Degree Recipients ................................................................................................11 Presidential Medal Recipient ................................................................................................13 Graduate Commencement .....................................................................................................14 Latin Honors Recognition Convocation ...............................................................................18 Baccalaureate Commencement .............................................................................................21 -

Jan Fabre Romeo Castellucci

DAL 07.10 AL 30 .11 Romaeuropa Festival è realizzato con l’alto Patronato del Presidente della Repubblica il Patrocinio della Presidenza del Consiglio dei Ministri il Patrocinio del Ministero degli Affari Esteri sostenuto da con il supporto di Ministero per i Beni e le Attività Culturali Direzione Generale per lo Spettacolo dal Vivo Assessorato alle Politiche Culturali fondazione franco - italiana per la creazione contemporanea ROMA UNIVERSITÀTR DEGLI STUDIE reti Romaeuropa Festival 2011 in corealizzazione con media partner in collaborazione con in network con sponsor tecnici la campagna di comunicazione 2011 è ideata e curata da il concerto di Yuval Avital del 26 ottobre in onda su TRY THE IMPOSSIBLE MONIQUE VEAUTE | FABRIZIO GRIFASI L’edizione 2011 del Festival Romaeuropa sembrava lascia il testimone di Presidente, che gli artisti del Festival volto - ciò che vediamo subito dell’altro e che ci rappresenta Gordon e di frenesia, ma dei mercati finanziari, si occupa destinata a essere una “Mission Impossible”. Romaeuropa troveranno una accoglienza degna nella all’altro - viene negato e oscurato interrompendo il flusso di il Nasdaq match 0.2 di Fabio Cifariello Ciardi dove i titoli Non nascondiamocelo: abbiamo avuto tutti molta paura. capitale italiana. comunicazione generato dall’immagine. borsistici suonano grazie a un software per poi arrivare al I tagli ai finanziamenti pubblici, le conseguenze della crisi Ringrazio tutti loro a nome degli artisti e dell’intera équipe Lo smarrimento, la rabbia e la rivolta in una società che si pianoforte tra le sapienti mani di Uri Caine. economica anche sugli investitori privati, sembravano di Romaeuropa. trasforma e di cui perdiamo le coordinate sono anche al Sono ancora i suoni dei violoncelli rielaborati infrangere il sogno di un futuro, obbligandoci a dire addio a cuore della nuova produzione dei Muta Imago. -

Art and Politics at the Neapolitan Court of Ferrante I, 1458-1494

ABSTRACT Title of Dissertation: KING OF THE RENAISSANCE: ART AND POLITICS AT THE NEAPOLITAN COURT OF FERRANTE I, 1458-1494 Nicole Riesenberger, Doctor of Philosophy, 2016 Dissertation directed by: Professor Meredith J. Gill, Department of Art History and Archaeology In the second half of the fifteenth century, King Ferrante I of Naples (r. 1458-1494) dominated the political and cultural life of the Mediterranean world. His court was home to artists, writers, musicians, and ambassadors from England to Egypt and everywhere in between. Yet, despite its historical importance, Ferrante’s court has been neglected in the scholarship. This dissertation provides a long-overdue analysis of Ferrante’s artistic patronage and attempts to explicate the king’s specific role in the process of art production at the Neapolitan court, as well as the experiences of artists employed therein. By situating Ferrante and the material culture of his court within the broader discourse of Early Modern art history for the first time, my project broadens our understanding of the function of art in Early Modern Europe. I demonstrate that, contrary to traditional assumptions, King Ferrante was a sophisticated patron of the visual arts whose political circumstances and shifting alliances were the most influential factors contributing to his artistic patronage. Unlike his father, Alfonso the Magnanimous, whose court was dominated by artists and courtiers from Spain, France, and elsewhere, Ferrante differentiated himself as a truly Neapolitan king. Yet Ferrante’s court was by no means provincial. His residence, the Castel Nuovo in Naples, became the physical embodiment of his commercial and political network, revealing the accretion of local and foreign visual vocabularies that characterizes Neapolitan visual culture. -

Aes Corporation

THE AES CORPORATION THE AES CORPORATION The global power company A Passion to Serve A Passion A PASSION to SERVE 2000 ANNUAL REPORT ANNUAL REPORT THE AES CORPORATION 1001 North 19th Street 2000 Arlington, Virginia 22209 USA (703) 522-1315 CONTENTS OFFICES 1 AES at a Glance AES CORPORATION AES HORIZONS THINK AES (CORPORATE OFFICE) Richmond, United Kingdom Arlington, Virginia 2 Note from the Chairman 1001 North 19th Street AES OASIS AES TRANSPOWER Arlington, Virginia 22209 Suite 802, 8th Floor #16-05 Six Battery Road 5 Our Annual Letter USA City Tower 2 049909 Singapore Phone: (703) 522-1315 Sheikh Zayed Road Phone: 65-533-0515 17 AES Worldwide Overview Fax: (703) 528-4510 P.O. Box 62843 Fax: 65-535-7287 AES AMERICAS Dubai, United Arab Emirates 33 AES People Arlington, Virginia Phone: 97-14-332-9699 REGISTRAR AND Fax: 97-14-332-6787 TRANSFER AGENT: 83 2000 AES Financial Review AES ANDES FIRST CHICAGO TRUST AES ORIENT Avenida del Libertador COMPANY OF NEW YORK, 26/F. Entertainment Building 602 13th Floor A DIVISION OF EQUISERVE 30 Queen’s Road Central 1001 Capital Federal P.O. Box 2500 Hong Kong Buenos Aires, Argentina Jersey City, New Jersey 07303 Phone: 852-2842-5111 Phone: 54-11-4816-1502 USA Fax: 852-2530-1673 Fax: 54-11-4816-6605 Shareholder Relations AES AURORA AES PACIFIC Phone: (800) 519-3111 100 Pine Street Arlington, Virginia STOCK LISTING: Suite 3300 NYSE Symbol: AES AES ENTERPRISE San Francisco, California 94111 Investor Relations Contact: Arlington, Virginia USA $217 $31 Kenneth R. Woodcock 93% 92% AES ELECTRIC Phone: (415) 395-7899 $1.46* 91% Senior Vice President 89% Burleigh House Fax: (415) 395-7891 88% 1001 North 19th Street $.96* 18 Parkshot $.84* AES SÃO PAULO Arlington, Virginia 22209 Richmond TW9 2RG $21 Av. -

MARTHA STEWART Mary Martha Groves Stewart, 96, of St

MARTHA STEWART Mary Martha Groves Stewart, 96, of St. Clairsville, OH, died Tuesday, August 9, 2016, at Wheeling Hospital. She was born March 23, 1920, in Egypt Valley, OH, a daughter of the late Earl and Hazel Williamson Groves. Martha retired as co-owner of Stewart’s Mobile Home and R.V. in St. Clairsville and was a member of First Presbyterian Church. As the matriarch of the family, she enjoyed sewing and cooking and spending time with her grandchildren. She was generous, caring and loving and one of the greatest moms to many. In addition to her parents, she was preceded in death by her husband, Thomas Ray Stewart; her daughter, Jane Stewart (James) Sanati; a sister, Dorothy (Dale) Murphy; two brothers, Bernard and Charles Groves; and sister-in-law, Lois Groves. Surviving are her three children, Carole Stewart (Robert) Sabo of St. Clairsville, Thomas R. (Jackie) Stewart of Tallmadge, OH, and Robert E. (Sharon) Stewart of St. Clairsville; a brother, Russell Groves of St. Clairsville; two sisters-in-law, Carol Ann Groves of Barnesville and Martha Groves of Flushing; 11 grandchildren, Melissa, Kathy, Jamie, Mary Beth, Julie, Justin, Christopher, Stephanie, Ryan, Adam, and Lindsay; numerous great-grandchildren and great-great-grandchildren; and nieces and nephews. Family and friends will be received at Toothman Funeral Home in St. Clairsville on Thursday from 4-8 p.m., where services will be held on Friday at 10:30 a.m. with Rev. Kenneth Gifford officiating. Burial will follow in Union Cemetery, St. -

Clash Between the Municipalities and Alemanno

anno VIII | numero 64 | sabato 09 aprile 2011 Roma, Via Cristoforo Colombo 134 | Tel. 06.89763157 | [email protected] | Pubblicità: APR srl - Tel. 06.89763161 | Fax. 06.89763158 | [email protected] CAMPIDOGLIO I minisindaci di centrosinistra attaccano il sindaco p3 accadeinprovincia Ciampino Una casalinga e un agente Bilancio: è scontro tra immobiliare denunciati dalla guardia di finanza per riciclaggio di denaro sporco Municipi e Alemanno pagina 12 Genzano «Non ci sono risorse per gli investimenti» dicono i presidenti L’acqua del quartiere Landi è di nuovo potabile. Le ana- «E’ una posizione ideologica» è la replica del primo cittadino lisi effettute indicano valori di arsenico adeguati Non è andato bene ieri l’incontro della giunta capitolina, che «destina tenzione di strade e scuole nelle tra Campidoglio e Municipi per la zero risorse agli investimenti dei mani dell'amministrazione centrale». pagina 13 presentazione del Bilancio. I mini- Municipi, taglia del 5,5% le risorse Un attacco a viso aperto al sindaco sindaci di centrosinistra sono sul destinate a ogni Municipio e con- Alemanno, che replica accusando i Frascati piede di guerra contro la manovra centra tutta la gestione della manu- presidenti di posizione ideologica. 
Ancora guai in casa Pdl: il CHIUSI PER PROTESTA Montesacro p9 Monterotondo p17 partito resta diviso in tre fa- p6 zioni nonostante il patto si- Casapound Ispettore glato a inizio febbraio irrompe dell’Asl pagina 14 nell’aula del arrestato Fiumicino Municipio IV per tangenti Gli autonoleggiatori conte- stano l’ordinanza antiabu- sivi dell’Enac: «Così non possiamo lavorare» Nomadi al Cara di Castelnuovo p18 pagina 15 Prefettura irremovibile Pomezia E il sindaco si dimette Ok al progetto per 42 al- Il commissario straordinario del governo per il piano loggi popolari: presto i lavori nomadi Giuseppe Pecoraro non torna indietro, nonostante per il completamento della Ieri i commercianti di Grotte Celoni hanno tenuto le la richiesta di moratoria dei primi cittadini del territorio. -

2009 BIC Report

ANNUAL REPORT OF THE BEAN IMPROVEMENT COOPERATIVE A VOLUNTARY AND INFORMAL ORGANIZATION TO EFFECT THE EXCHANGE OF INFORMATION AND MATERIALS THE LII Report of The BEAN IMPROVEMENT COOPERATIVE No. 52 March 2009 [ ISSN 0084-7747 ] Coordinating Committee Jim Beaver Antonio de Ron Jim Kelly (President) Ken Kmiecik Phil Miklas Jim Myers Peter Pauls Ron Riley Howard F. Schwartz (Ex officio) Ron Shellenberger Bert Vandenberg Please address correspondence about BIC membership and BIC annual reports to: Dr. James D. Kelly, BIC President Department of Crop & Soil Sciences Michigan State University East Lansing, MI 48824 U. S. A. Tele: 517-355-0271 extension 1181 // FAX: 517-353-5174 Email: [email protected] http://www.css.msu.edu/bic SITE REGULARLY UPDATED Note: It will be assumed that interested individuals may freely cite (including author credit) any report or note in this BIC report, unless the author indicates to the contrary. As a professional courtesy, individuals citing BIC notes should notify the authors of his or her intentions. The BIC Coordinating Committee approved this statement on November 5, 1975. TABLE OF CONTENTS Page The 52nd Annual Report of the Bean Improvement Cooperative ......................................................................................... i BIC Committee Membership - 1957 to 2009 ....................................................................................................................... ii BIC Genetics Committee Minutes ...................................................................................................................................... -

January 2011 Pin Title Recognition Americas, Europe and Pacific

JANUARY 2011 PIN TITLE RECOGNITION AMERICAS, EUROPE AND PACIFIC NEW BLUE DIAMOND EXECUTIVES Name Hometown Sponsor Ricard Norell Luxembourg, Luxembourg OSTERBERG, NICLAS NEW DIAMOND EXECUTIVES Name Hometown Sponsor Emanuela Domack Krefeld, Germany DUEREN, SIMONE Marie Helene Taieb Neuilly Sur Seine, France SOFTWORKS AS Florentina Patrascu Bucuresti, Romania LOREDANA DIANA, CISMAS Into Shop Edsbyn AB, Lennart Persson Edsbyn, Sweden SOLOTEK SUNSET Lu Jun Sun, Tony Li Fremont, California, United States WEI, LIYA NEW EMERALD EXECUTIVES Name Hometown Sponsor Essence Of Beauty And Health Dulwich Hill, Australia PIKIT TREELERTSUPALAK Eva Silianoff Modling, Austria STYLIANOU, ANDREA Svetlana Ratnikova Toronto, Ontario, Canada 4AM AGENCY LLC Enza Testa Haegi Geneve, Switzerland CHANAL, ALESSANDRA Nieto Maria Teresa Collonge-Bellerive, Switzerland INVESTOR CAPITAL Ardeah Frans, France DUPONT MOREAU, MARYSE Chamarre, Anne-C Pierron Barbe Henonville, France ANNE TRAUTMANN Christophe Thamri Cannes La Bocca, France GREBERT, REGIS Zevik Farkash Meiron, Israel REUBENS,TOM Maeva M Liu Tahiti, New Zealand HEALTH WEALTH AND WISDOM LTD Cpy Company Beaverton, Oregon, United States GOK, WAI YEE Lee Kilburn Ashland, Oregon, United States ARUNA KI, LLC Mahnchul Lee Haymarket, Virginia, United States CHOI, HAE YOUNG Nulife, Petrza Sarel du Plessis Pretoria, South Africa GONYON ENTERPRISES INC NEW RUBY EXECUTIVES Name Hometown Sponsor Valley Lily Developing Richmond, British Columbia, Canada WARNER, AIESHA Nguyen Trung Truc Opatovice Nad Labem, Czech Republic PERSO INTERIORS&DESIGN ,SRO Patricie Lacinova Dolni Jircany, Czech Republic KOROTVICKOVA, SABINA Sabina Korotvickova Dolni Jircany, Czech Republic BURIANKOVA, MGR IVA Sisa Gbr Oberneisen, Germany MUELLER, HILDEGARD Arianne Hoffmann Magny Le Hongre, France OCCELLI, ORIANNE Cecile Musset Etaules, France BIBAUD, CATHERINE Gregoire Morel Saint-Etienne, France YOUSSEFANE, FLORENCE Louis Carlotta Echirolles, France VULLO, MICHELE Joresz BT. -

Ext Name Div Room Ext Name Div Room

____________________________________________________________________________________________________________________ EXT NAME DIV ROOM EXT NAME DIV ROOM A 54458 AMADO, Maria Blanca TCIO D503 53290 AMARAL, Cristina TCEO C744 54157 ABABOUCH, Lahsen FIPM F508 55126 AMBROSIANO, Luciana AGNS C292 55581 ABALSAMO, Stefania CSDB B354 55399 AMBROSINI, Francesca TCES C520 53162 ABARQUERO GROSSI, Rogelio AUDD A429 1709 AMEGEE, Emilienne CSSD SSC 53264 ABBASSIAN, Abdolreza ESTM D804 53985 AMMATI, Mohammed AGPM B752 54511 ABBONDANZA, Carla CSCD A143B 54876 AMOROSO, Leslie AGNP C224 52390 ABDELLA, Yesuf TCIA D557 53980 ANAMAN, Frederick CIOF B236 55396 ABDIRIZZAK, Tania FOEP C474 54213 ANAND, Sanjeev TCSD D661 53485 ABE, Kaori TCEO C764 53309 ANDARIAS DE PRADO, Rebeca CSAI D102 54315 ABI NASSIF, Joseph CSDM B104 1725 ANDONOVSKA, Liljana CSSD SSC 53963 ABI RACHED, Elie CSCM A284 53602 ANDRADE CIANFRINI, Graciela FOMR D468 55087 ABITBOL, Nathalie TCIA D546 52141 ANDRE, Mathias TCEO D615 56941 ABOU EL HAJA, Aziza CSAP D147 52167 ANDREEV, Alexander CSCM B249 56202 ABRAMO-GUARNA, Anna TCIA D547 55742 ANELLO, Enrico OEKM A204 56760 ABREU, Maria LEGD A442 54441 ANGELINI, Chiara CSCP A487 53296 ABRINA, Angelica Gavina OSPD C499 54109 ANGELINI, Flavio CSAI D019T 52709 ACCARDO-DELHOME, Jeanne FODP D435 55313 ANGELONI, Marino CSAI D103 56591 ACQUA, Ariane FIPI F401 53145 ANGELOZZI, Vanessa CSDU A042 52448 ACUNZO, Mario OEKR C615 53720 ANGELUCCI, Federica ESTT D863 54574 ADAMS, Tim CSFC D242 52677 ANIBALDI, Stefano OEKC A253 52591 ADDUCI, Giovanni TCIN D686 55297