Depth Estimation – an Introduction


Recommended publications

3D Computational Modeling and Perceptual Analysis of Kinetic Depth Effects

Computational Visual Media, DOI 10.1007/s41095-xxx-xxxx-x, Vol. x, No. x, month year, xx–xx. Research Article.

Meng-Yao Cui, Shao-Ping Lu, Miao Wang, Yong-Liang Yang, Yu-Kun Lai, and Paul L. Rosin. © The Author(s) 2020. This article is published with open access at Springerlink.com.

Abstract: Humans have the ability to perceive 3D shapes from 2D projections of rotating 3D objects, which is called Kinetic Depth Effects. This process is based on a variety of visual cues such as lighting and shading effects. However, when such cues are weakened or missing, perception can become faulty, as demonstrated by the famous silhouette illusion example – the Spinning Dancer. Inspired by this, we establish objective and subjective evaluation models of rotated 3D objects by taking their projected 2D images as input. We investigate five different cues: ambient luminance, shading, rotation speed, perspective, and color difference between the objects and background. In the objective evaluation model, we first apply 3D reconstruction algorithms to obtain an objective reconstruction quality metric, and then use a quadratic stepwise regression analysis method to determine the weights among depth cues to represent the reconstruction quality. In the subjective [...]

Fig. 1: The Spinning Dancer. Due to the lack of visual cues, it confuses humans as to whether rotation is clockwise or counterclockwise. Here we show 3 of 34 frames from the original animation [21]. Image courtesy of Nobuyuki Kayahara.

1 Introduction

The human perception mechanism of the 3D world has long been studied.

Interacting with Autostereograms

Conference Paper, October 2019. DOI: 10.1145/3338286.3340141. https://www.researchgate.net/publication/336204498

William Delamare (Kochi University of Technology, Kochi, Japan; University of Manitoba, Winnipeg, Canada), Junhyeok Kim (University of Waterloo, Ontario, Canada; University of Manitoba, Winnipeg, Canada), Daichi Harada (Kochi University of Technology, Kochi, Japan), Pourang Irani (University of Manitoba, Winnipeg, Canada), Xiangshi Ren (Kochi University of Technology, Kochi, Japan).

Figure 1: Illustrative examples using autostereograms. a) Password input. b) Wearable e-mail notification. c) Private space in collaborative conditions. d) 3D video game. e) Bar gamified special menu. Black elements represent the hidden 3D scene content.

ABSTRACT: Autostereograms are 2D images that can reveal 3D content when viewed with a specific eye convergence, without using extra-apparatus. [...] practice. This learning effect transfers across display devices (smartphone to desktop screen).

Stereopsis and Stereoblindness

Exp. Brain Res. 10, 380-388 (1970). Whitman Richards, Department of Psychology, Massachusetts Institute of Technology, Cambridge (USA). Received December 20, 1969.

Summary. Psychophysical tests reveal three classes of wide-field disparity detectors in man, responding respectively to crossed (near), uncrossed (far), and zero disparities. The probability of lacking one of these classes of detectors is about 30% which means that 2.7% of the population possess no wide-field stereopsis in one hemisphere. This small percentage corresponds to the probability of squint among adults, suggesting that fusional mechanisms might be disrupted when stereopsis is absent in one hemisphere.

Key Words: Stereopsis, Squint, Depth perception, Visual perception

Stereopsis was discovered in 1838, when Wheatstone invented the stereoscope. By presenting separate images to each eye, a three dimensional impression could be obtained from two pictures that differed only in the relative horizontal disparities of the components of the picture. Because the impression of depth from the two combined disparate images is unique and clearly not present when viewing each picture alone, the disparity cue to distance is presumably processed and interpreted by the visual system (Ogle, 1962). This conjecture by the psychophysicists has received recent support from microelectrode recordings, which show that a sizeable portion of the binocular units in the visual cortex of the cat are sensitive to horizontal disparities (Barlow et al., 1967; Pettigrew et al., 1968a). Thus, as expected, there in fact appears to be a physiological basis for stereopsis that involves the analysis of spatially disparate retinal signals from each eye. Without such an analysing mechanism, man would be unable to detect horizontal binocular disparities and would be "stereoblind".
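The 2.7% figure in the summary is consistent with one simple reading: each of the three detector classes is lacking with probability of about 0.3, and the three losses are independent. The independence assumption is ours and is not stated in the excerpt. Under that assumption, the chance of lacking all three classes is:

$$P(\text{all three classes lacking}) = 0.3 \times 0.3 \times 0.3 = 0.3^{3} = 0.027 = 2.7\%$$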

Relative Importance of Binocular Disparity and Motion Parallax for Depth Estimation: a Computer Vision Approach

Remote Sensing, Article.

Mostafa Mansour (1, 2), Pavel Davidson (1, corresponding author), Oleg Stepanov (2) and Robert Piché (1)
1 Faculty of Information Technology and Communication Sciences, Tampere University, 33720 Tampere, Finland
2 Department of Information and Navigation Systems, ITMO University, 197101 St. Petersburg, Russia
Correspondence: pavel.davidson@tuni.fi
Received: 4 July 2019; Accepted: 20 August 2019; Published: 23 August 2019

Abstract: Binocular disparity and motion parallax are the most important cues for depth estimation in human and computer vision. Here, we present an experimental study to evaluate the accuracy of these two cues in depth estimation to stationary objects in a static environment. Depth estimation via binocular disparity is most commonly implemented using stereo vision, which uses images from two or more cameras to triangulate and estimate distances. We use a commercial stereo camera mounted on a wheeled robot to create a depth map of the environment. The sequence of images obtained by one of these two cameras as well as the camera motion parameters serve as the input to our motion parallax-based depth estimation algorithm. The measured camera motion parameters include translational and angular velocities. Reference distance to the tracked features is provided by a LiDAR. Overall, our results show that at short distances stereo vision is more accurate, but at large distances the combination of parallax and camera motion provides better depth estimation. Therefore, by combining the two cues, one obtains depth estimation with greater range than is possible using either cue individually.
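The triangulation step mentioned in the abstract can be illustrated with the textbook relation for a rectified pinhole stereo pair, Z = f·B/d. The sketch below is a minimal illustration under that assumption and is not the authors' pipeline; the function names, the focal length, and the baseline are hypothetical values chosen for the example. The second function uses the first-order error estimate ΔZ ≈ Z²/(f·B)·Δd, which suggests why stereo accuracy falls off at large distances.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth (m) of a point from its disparity in a rectified stereo pair.

    Assumes the standard pinhole model: Z = f * B / d.
    All parameter names are illustrative, not taken from the paper.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m, focal_px, baseline_m, disparity_err_px=1.0):
    """First-order depth uncertainty for a given disparity error.

    Differentiating Z = f * B / d gives |dZ| = Z**2 / (f * B) * |dd|,
    so the error grows with the square of the distance.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    f_px, b_m = 700.0, 0.12  # hypothetical camera parameters
    print(depth_from_disparity(35.0, f_px, b_m))
    for z in (2.0, 10.0):
        print(z, depth_error(z, f_px, b_m))
```

With these example parameters, a one-pixel disparity error costs 25 times more depth accuracy at 10 m than at 2 m, in line with the abstract's observation that stereo is more accurate at short distances.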

Chromostereo.Pdf

ChromoStereoscopic Rendering for Trichromatic Displays

Leïla Schemali (1, 2), Elmar Eisemann (3)
1 Telecom ParisTech CNRS LTCI, 2 XtremViz, 3 Delft University of Technology

Figure 1: ChromaDepth® glasses act like a prism that disperses incoming light and induces a differing depth perception for different light wavelengths. As most displays are limited to mixing three primaries (RGB), the depth effect can be significantly reduced, when using the usual mapping of depth to hue. Our red to white to blue mapping and shading cues achieve a significant improvement.

Abstract: The chromostereopsis phenomenon leads to a differing depth perception of different color hues, e.g., red is perceived slightly in front of blue. In chromostereoscopic rendering 2D images are produced that encode depth in color. While the natural chromostereopsis of our human visual system is rather low, it can be enhanced via ChromaDepth® glasses, which induce chromatic aberrations in one eye by refracting light of different wavelengths differently, hereby offsetting the projected position slightly in one eye. Although it might seem natural to map depth linearly to hue, which was also the basis of previous solutions, we demonstrate that such a mapping reduces the stereoscopic effect when using standard trichromatic displays or printing systems. We propose an algorithm, which enables an improved stereoscopic experience with reduced artifacts.

Figure 2: Chromostereopsis can be due to: (a) longitudinal chromatic aberration, focus of blue shifts forward with respect to red, or (b) transverse chromatic aberration, blue shifts further toward the nasal part of the retina than red. (c) Shift in position leads to a depth impression.
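The red to white to blue mapping named in the Figure 1 caption can be sketched as a piecewise-linear color ramp. The excerpt does not give the authors' actual formula, so the linear interpolation, the normalized depth range, and the function name `depth_to_rgb` below are all assumptions made for illustration. Near points are mapped to red because the abstract notes that red is perceived slightly in front of blue.

```python
def depth_to_rgb(depth):
    """Map a normalized depth in [0, 1] to an (r, g, b) tuple in [0, 1].

    0.0 (nearest) -> red, 0.5 -> white, 1.0 (farthest) -> blue.
    Piecewise-linear interpolation is an illustrative assumption,
    not the mapping published by the authors.
    """
    t = min(max(depth, 0.0), 1.0)  # clamp out-of-range input
    if t <= 0.5:
        s = t / 0.5                # 0 at red, 1 at white
        return (1.0, s, s)
    s = (t - 0.5) / 0.5            # 0 at white, 1 at blue
    return (1.0 - s, 1.0 - s, 1.0)


if __name__ == "__main__":
    for d in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(d, depth_to_rgb(d))
```

Unlike a hue sweep, this ramp only ever mixes the red and blue primaries with white, which is one plausible reason it suits a three-primary display.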

Two Eyes See More Than One Human Beings Have Two Eyes Located About 6 Cm (About 2.4 In.) Apart

Activity 2: Two Eyes See More Than One

OBJECTIVES
Students discover how having two eyes helps us see in three dimensions and increases our field of vision.
The students
- discover that each eye sees objects from a slightly different viewpoint to give us depth perception
- observe that depth perception decreases with the use of just one eye
- measure their field of vision
- observe that their field of vision decreases with the use of just one eye

SCHEDULE
Session I: About 30 minutes
Session II: About 40 minutes

VOCABULARY
depth perception
field of vision
peripheral vision

MATERIALS
For each student
- 1 Activity Sheet 2, Parts A and B
[...]
- 1 straw
- 1 card, index (cut in half widthwise)
- 3 pennies*
- 1 ruler, metric*
For the class
- 1 roll string
- 1 roll tape, masking
- 1 pair scissors*
*provided by the teacher

PREPARATION
Session I
1 Make a copy of Activity Sheet 2, Part A, for each student.
2 Each team of two will need a metric ruler, three paper cups, and three pennies. Students will either close or cover their eyes, or you may use blindfolds. (Students should use their own blindfold: a bandanna or long strip of cloth brought from home and stored in their science journals for use in this and other activities.)
Session II
1 Make a copy of Activity Sheet 2, Part B, for each student.
2 For each team, cut a length of string 50 cm (about 20 in.) long. Cut enough index cards in half (widthwise) to give each team half a card. Snip the corners of the cards to eliminate sharp edges.

Motion-In-Depth Perception and Prey Capture in the Praying Mantis Sphodromantis Lineola

© 2019. Published by The Company of Biologists Ltd | Journal of Experimental Biology (2019) 222, jeb198614. doi:10.1242/jeb.198614. Research Article.

Vivek Nityananda, Coline Joubier, Jerry Tan, Ghaith Tarawneh and Jenny C. A. Read

ABSTRACT: Perceiving motion-in-depth is essential to detecting approaching or receding objects, predators and prey. This can be achieved using several cues, including binocular stereoscopic cues such as changing disparity and interocular velocity differences, and monocular cues such as looming. Although these have been studied in detail in humans, only looming responses have been well characterized in insects and we know nothing about the role of stereoscopic cues and how they might interact with looming cues. We used our 3D insect cinema in a series of experiments to investigate the role of the stereoscopic cues mentioned above, as well as [...]

[...] prey as they come near. Just as with depth perception, several cues could contribute to the perception of motion-in-depth.

Two of the motion-in-depth cues that have received the most attention in humans are binocular: changing disparity and interocular velocity differences (IOVDs) (Cormack et al., 2017). Stereoscopic disparity refers to the difference in the position of an object as seen by the two eyes. This disparity reflects the distance to an object. Thus as an object approaches, the disparity between the two views changes. This changing disparity cue suffices to create a perception of motion-in-depth for human observers, even in the absence of other cues (Cumming and Parker, 1994).

From Stereogram to Surface: How the Brain Sees the World in Depth

Spatial Vision, Vol. 22, No. 1, pp. 45–82 (2009). © Koninklijke Brill NV, Leiden, 2009. Also available online - www.brill.nl/sv

Liang Fang and Stephen Grossberg, Department of Cognitive and Neural Systems, Center for Adaptive Systems and Center of Excellence for Learning in Education, Science, and Technology, Boston University, 677 Beacon St., Boston, MA 02215, USA. Received 19 April 2007; accepted 31 August 2007.

Abstract: When we look at a scene, how do we consciously see surfaces infused with lightness and color at the correct depths? Random-Dot Stereograms (RDS) probe how binocular disparity between the two eyes can generate such conscious surface percepts. Dense RDS do so despite the fact that they include multiple false binocular matches. Sparse stereograms do so even across large contrast-free regions with no binocular matches. Stereograms that define occluding and occluded surfaces lead to surface percepts wherein partially occluded textured surfaces are completed behind occluding textured surfaces at a spatial scale much larger than that of the texture elements themselves. Earlier models suggest how the brain detects binocular disparity, but not how RDS generate conscious percepts of 3D surfaces. This article proposes a neural network model that predicts how the layered circuits of visual cortex generate these 3D surface percepts using interactions between visual boundary and surface representations that obey complementary computational rules. The model clarifies how interactions between layers 4, 3B and 2/3A in V1 and V2 contribute to stereopsis, and proposes how 3D perceptual grouping laws in V2 interact with 3D surface filling-in operations in V1, V2 and V4 to generate 3D surface percepts in which figures are separated from their backgrounds.

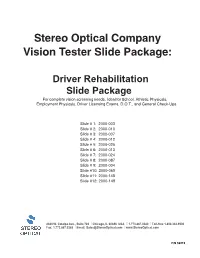

Stereo Depth Perception

Stereo Optical Company Vision Tester Slide Package: Driver Rehabilitation Slide Package

For complete vision screening needs. Ideal for School, Athletic Physicals, Employment Physicals, Driver Licensing Exams, D.O.T., and General Check-Ups.

Slide #1: 2000-003
Slide #2: 2000-010
Slide #3: 2000-007
Slide #4: 2000-012
Slide #5: 2000-025
Slide #6: 2000-013
Slide #7: 2000-024
Slide #8: 2000-087
Slide #9: 2000-004
Slide #10: 2000-065
Slide #11: 2000-148
Slide #12: 2000-149

8600 W. Catalpa Ave., Suite 703 | Chicago, IL 60656 USA | 1.773.867.0380 | Toll-free 1.800.344.9500 | Fax: 1.773.867.0388 | www.StereoOptical.com
P/N 56219

Slide 2000-003: "FAR" VISUAL ACUITY LETTERS

1. Dial at 1 (Yellow) indicator.
2. Far Point switch illuminated.
3. Right and Left eye switches illuminated.

QUESTION: How many columns of letters do you see?

SCORING: The answer is 3. Ask the subject to read line 5 completely. If this is correct, proceed to line 6; if that is correct, proceed to line 7; if that is also correct, the subject has 20/20 vision or better at FAR point for the right eye, left eye and both eyes together. The correct scoring key is printed on the record form. Two or more letters incorrectly identified on any line (3 to 7) is considered a FAIL for that acuity level IN THAT COLUMN. A different acuity reading for each column is possible. The center column is critical because this is the binocular acuity test, while the right and left columns are monocular acuity tests.

On the Importance of Stereo for Accurate Depth Estimation: an Efficient Semi-Supervised Deep Neural Network Approach

Nikolai Smolyanskiy, Alexey Kamenev, Stan Birchfield. NVIDIA. {nsmolyanskiy, akamenev, sbirchfield}@nvidia.com

Abstract: We revisit the problem of visual depth estimation in the context of autonomous vehicles. Despite the progress on monocular depth estimation in recent years, we show that the gap between monocular and stereo depth accuracy remains large, a particularly relevant result due to the prevalent reliance upon monocular cameras by vehicles that are expected to be self-driving. We argue that the challenges of removing this gap are significant, owing to fundamental limitations of monocular vision. As a result, we focus our efforts on depth estimation by stereo. We propose a novel semi-supervised learning approach to training a deep stereo neural network, along with a novel architecture containing a machine-learned argmax layer and a custom run- [...]

[...] stereo cameras); and 2) multiple images provide geometric constraints that can be leveraged to overcome the many ambiguities of photometric data.

The alternative is to use a single image to estimate depth. We argue that this alternative, due to its fundamental limitations, is not likely to be able to achieve high-accuracy depth estimation at large distances in unfamiliar environments. As a result, in the context of self-driving cars we believe monocular depth estimation is not likely to yield results with sufficient accuracy. In contrast, we offer a novel, efficient deep-learning stereo approach that achieves compelling results on the KITTI 2015 dataset by leveraging a semi-supervised loss function (using LIDAR and photometric consistency), concatenating cost volume, 3D convolutions, and a machine-learned argmax function.

Construction of Autostereograms Taking Into Account Object Colors and Its Applications for Steganography

Yusuke Tsuda, Yonghao Yue, Tomoyuki Nishita. The University of Tokyo.

Abstract: Information on appearances of three-dimensional objects is transmitted via the Internet, and displaying objects plays an important role in a lot of areas such as movies and video games. An autostereogram is one of the ways to represent three-dimensional objects taking advantage of binocular parallax, by which depths of objects are perceived. In previous methods, the colors of objects were ignored when constructing autostereogram images. In this paper, we propose a method to construct autostereogram images taking into account color variation of objects, such as shading.

We also propose a technique to embed color information in autostereogram images. Using our technique, autostereogram images shown on a display change, and viewers can enjoy perceiving a three-dimensional object and its colors in two stages. Color information is embedded in a monochrome autostereogram image, and colors appear when the correct color table is used.

Figure 1. Relations on an autostereogram: (a) SS-relation, (b) OS-relation. E_L and E_R indicate left and right eyes, respectively. (a): A pair of points on the screen and a single point on the object correspond with each other. (b): A pair of points on the object and a single point on the screen correspond with each other.

[...] we propose an approach to take into account color variation of objects, such as shading. Our approach assigns slightly [...]
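For readers unfamiliar with how an autostereogram encodes depth through binocular parallax, the following is a minimal sketch of a classic monochrome random-dot construction. It is a generic textbook approach and not the color-aware method proposed in this paper. The function name, the `period` and `max_shift` parameters, and the convention that nearer points (larger depth value) get a smaller repeat distance are all assumptions chosen for illustration.

```python
import random


def autostereogram(depth, period=40, max_shift=10, seed=0):
    """Build a binary random-dot autostereogram from a depth map.

    depth: list of rows, each a list of floats in [0, 1]
           (0 = background plane, 1 = nearest to the viewer).
    Each pixel copies the pixel `sep` columns to its left, where `sep`
    shrinks as the surface gets nearer. The two eyes fuse the repeated
    dots, and the local repeat distance is read as depth.
    """
    rng = random.Random(seed)
    image = []
    for depth_row in depth:
        row = []
        for x, z in enumerate(depth_row):
            sep = period - round(max_shift * z)
            if x < sep:
                row.append(rng.randint(0, 1))  # seed the strip with noise
            else:
                row.append(row[x - sep])       # enforce the repeat constraint
        image.append(row)
    return image


if __name__ == "__main__":
    # A raised square on a flat background (hypothetical test scene).
    scene = [[1.0 if 20 <= y < 60 and 60 <= x < 140 else 0.0
              for x in range(200)] for y in range(80)]
    for row in autostereogram(scene)[:5]:
        print("".join("#" if v else "." for v in row))
```

On a flat background the output is simply a noise strip repeated every `period` pixels; depth shows up only where the repeat distance changes.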

Stereoscopic Depth Perception at Far Viewing Distances

Perception & Psychophysics, 1984, 35 (5), 423-428

Robert H. Cormack, New Mexico Institute of Mining and Technology, Socorro, New Mexico

There has been some controversy as to whether stereoscopic depth constancy can operate at distances greater than a few meters. In the experiments reported here, observers judged the apparent depth in stereoscopic afterimages with crossed retinal disparity as fixation distance was changed. Apparent depth increased with increased fixation distance in accord with the geometry of retinal disparity. The results are discussed with reference to the perception of apparent distance.

Stereoscopic depth constancy refers to the veridical perception of depth mediated by retinal disparity as viewing distance is varied. Since a given depth interval produces less disparity at greater distances, stereoscopic depth constancy requires that retinal disparity be rescaled as absolute distance changes. With symmetrical convergence, and for objects within a few degrees of the line of sight, the depth (d) signaled by a given disparity can be calculated by the equation:

$$d = 0.5\,I \tan\!\left(\arctan\!\left(\frac{D}{0.5\,I}\right) + 0.5\,R\right) - D, \tag{1}$$

where I is the interpupillary distance, R is the retinal [...]

[...] vergence (Foley, 1967). Furthermore, O'Leary and Wallach (1980) provide evidence that perspective and familiar size can serve as cues for depth constancy.

Given these findings, it is interesting that studies of stereopsis at distances greater than 2 m have found a failure of depth constancy (Ono & Comerford, 1977). It is not at all clear why this should be. Naive, everyday viewing reveals no obvious departures from constancy. Stereophotographs are capable of representing large depths at great apparent distances. Cues known to affect depth constancy such as linear perspective and familiar size could in principle provide the necessary information.
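Equation (1) can be evaluated directly to see how the depth signaled by a fixed disparity grows with fixation distance. The sketch below is a minimal illustration. It assumes that D is the fixation distance (the truncated excerpt does not define it), that R is expressed in radians, and that the interpupillary distance is 0.065 m; the function name and these values are ours, not the author's.

```python
import math


def depth_from_retinal_disparity(fixation_m, disparity_rad,
                                 interpupillary_m=0.065):
    """Depth interval d signaled by a retinal disparity R, per Eq. (1).

    d = 0.5*I * tan(atan(D / (0.5*I)) + 0.5*R) - D
    Assumptions: D is fixation distance in meters, R is in radians,
    and the default interpupillary distance I is illustrative only.
    """
    half_ipd = 0.5 * interpupillary_m
    angle = math.atan(fixation_m / half_ipd) + 0.5 * disparity_rad
    return half_ipd * math.tan(angle) - fixation_m


if __name__ == "__main__":
    one_arcmin = math.pi / (180 * 60)  # 1 minute of arc in radians
    for distance in (0.5, 1.0, 2.0, 4.0):
        d = depth_from_retinal_disparity(distance, one_arcmin)
        print(f"fixation {distance} m -> depth {d * 1000:.1f} mm")
```

For small disparities the result is close to R·D²/I, so doubling the fixation distance roughly quadruples the depth signaled by the same disparity. This is the rescaling that stereoscopic depth constancy requires.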