An Investigation Into the Causes and Effects of Legacy Status in a System

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Legacy – the All Blacks

LEGACY WHAT THE ALL BLACKS CAN TEACH US ABOUT THE BUSINESS OF LIFE LEGACY 15 LESSONS IN LEADERSHIP JAMES KERR Constable • London Constable & Robinson Ltd 55-56 Russell Square London WC1B 4HP www.constablerobinson.com First published in the UK by Constable, an imprint of Constable & Robinson Ltd., 2013 Copyright © James Kerr, 2013 Every effort has been made to obtain the necessary permissions with reference to copyright material, both illustrative and quoted. We apologise for any omissions in this respect and will be pleased to make the appropriate acknowledgements in any future edition. The right of James Kerr to be identified as the author of this work has been asserted by him in accordance with the Copyright, Designs and Patents Act 1988 All rights reserved. This book is sold subject to the condition that it shall not, by way of trade or otherwise, be lent, re-sold, hired out or otherwise circulated in any form of binding or cover other than that in which it is published and without a similar condition including this condition being imposed on the subsequent purchaser. A copy of the British Library Cataloguing in Publication data is available from the British Library ISBN 978-1-47210-353-6 (paperback) ISBN 978-1-47210-490-8 (ebook) Printed and bound in the UK 1 3 5 7 9 10 8 6 4 2 Cover design: www.aesopagency.com The Challenge When the opposition line up against the New Zealand national rugby team – the All Blacks – they face the haka, the highly ritualized challenge thrown down by one group of warriors to another. -

Become a Pierce-Arrow Museum Legacy Partner and Leave a Lasting Legacy for Tomorrow

PIERCE-ARROW FOUNDATION Operating the Pierce-Arrow Museum on the Campus of the Gilmore Car Museum Driving the Future Become a Pierce-Arrow Museum Legacy Partner and leave a lasting legacy for tomorrow. Making a bequest to the Pierce-Arrow Museum is a simple way to preserve and protect the automobiles and history you value. You can name the Pierce-Arrow Museum as a beneficiary of your will, trust, retirement plan, life insurance policy or financial accounts. Anyone can make a bequest, and no amount is too small. How to Become a PIERCE-ARROW FOUNDATION Operating the Pierce-Arrow Museum on the Campus of the Gilmore Car Museum Legacy Partner The Pierce-Arrow Museum Legacy Partner Program provides long-term sustained funding for the Museum through its Foundation. January 2017 Donations to this Program are invested for perpetuity, and your bequest SPECIFIC will perpetuate your support for the Museum. TRUSTEES BEQUEST Dear Pierce-Arrow Friends, One of the easiest ways to make a gift to the Pierce-Arrow CHAIRMAN Foundation is through a Bequest in your will. Because the Pierce-Arrow Wills a specific dollar amount or a MERLIN SMITH Thank you for your interest in the Pierce-Arrow Museum at Hickory Corners, Michigan. Located on the 90-acre campus of the Gilmore Car Museum, a world class automotive destination, the Pierce-Arrow Foundation is a qualified 501(c)(3) tax exempt organization, this planned specific piece of property CHAIRMAN EMERITUS Museum is host to more than 100,000 visitors every year. giving can be an excellent way to support the Museum while reducing DAVID HARRIS “I give to the Pierce-Arrow Foundation, a not-for- the taxes on larger Estates. -

Dragon Con Progress Report 2021 | Published by Dragon Con All Material, Unless Otherwise Noted, Is © 2021 Dragon Con, Inc

SEPT. 2 - 6, 2021 • ATLANTA, GEORGIA • WWW.DRAGONCON.ORG

INSIDE
Announcements
Guests
Featured Guests
Attending Pros
Vendors
Special 35th Anniversary Insert
Fan Tracks
Special Events & Contests
Art Show
Blood Drive
Comic & Pop Artist Alley
Friday Night Costume Contest
Hallway Costume Contest
Puppet Slam
Masquerade Costume Contest

FEATURED GUESTS: Places to go, things to do, and people to see!
FAN TRACKS: Choose your own adventure with one (or all) of our fan-run tracks.
SPECIAL EVENTS: Moments you won’t want to miss

SEPTEMBER Sunday Monday Tuesday Wednesday Thursday Friday Saturday 1 2 3 4 5 6 7

FALL TELEVISION GUIDE SEPTEMBER Sunday Monday Tuesday Wednesday Thursday Friday Saturday 1 2 3 4 5 6 7 8 9 10 11 12 13 14 Season 4 10pm TV Movie 9pm The Legend of Korra 7pm Ghost Bait 10pm Season 2 - Nickelodeon New Series - Bio Wander Over Yonder 9pm New Series - Disney 15 16 17 18 19 20 21 Sleepy Hollow 9pm Dreamworks Dragons 7:30pm The Neighbors 8:30pm New Series - FOX Season 2 - Cartoon Network Season 2 - ABC 22 23 24 25 26 27 28 New Series 10pm Marvel's Agents of S.H.I.E.L.D. 8pm The Big Bang Theory 8pm New Series - ABC Season 7 - CBS Person of Interest 10pm Revolution 8pm Elementary 10pm Season 3 - CBS Season 2 - NBC Season 2 - CBS 29 30 Once Upon a Time 8pm Season 3 - ABC A Haunting 10pm Season 6 - Destination America FALL TELEVISION GUIDE OCTOBER Sunday Monday Tuesday Wednesday Thursday Friday Saturday 1 2 3 4 5 Movie 9pm The Vampire Diaries 8pm Season 5 - The CW Transformers Prime Beast 8pm Wolfblood 8pm Arrow: Year One 8pm The Originals 9pm Hunters Predacons Rising Scarecrow 9pm New Series - Disney Special Season 1 Review - The CW New Series Sneak Peek - The CW Movie - The Hub Movie - Syfy 6 7 8 9 10 11 12 Season 9B 9pm The Originals 8pm Timeslot Premiere - The CW Arrow 8pm Sabrina: Secrets of a 12pm Season 2 - The CW Supernatural 9pm Teenage Witch Drop Dead Diva 9pm Season 9 - The CW The Tomorrow People 9pm New Animated Series - The Hub Season 5B - Lifetime New Series - The CW R.L. -

Wake of the Flood

NEWS Local news and entertainment since 1969 Bottom Line Inside Refurbished FRIDAY, AUGUST 20, 2021 I Volume 54, Number 34 I lascrucesbulletin.com apartments combat homelessness page 4 A&E Cast of ‘Harvey’ a true family affair page 22 WELLBEING Wake of the flood Hospital dedicates BULLETIN PHOTO BY ELVA K. ÖSTERREICH chapel to longtime U.S. Highway 70 was shut down all day Saturday, Aug. 14, while repairs were done to mitigate rain and flood damage, including collapsed pavement and mud across the road. Opening late Saturday night, the road was still down a southbound lane when this photo was taken Sunday, Aug. 15. One of the main corridors for pastoral care chief northeast-bound New Mexico traffic and for White Sands Missile Range, this is the second time the highway through San Augustin Pass has been closed because page 34 of flood damage this monsoon season. For more on the impact that flooding has had in Doña Ana County, see the story on page 2. 2 | FRIDAY, AUGUST 20, 2021 NEWS LAS CRUCES BULLETIN La Union families escape homes ahead of floodwaters By ELVA K. ÖSTERREICH Union in southern Doña Moses said. “The last one Las Cruces Bulletin Ana County was deluged to climb was my brother. by rain and flooding in Then, the wall came The Aguirre home floor which several resident down. The water was like is a plane of slippery, families found themselves a little tsunami.” sticky mud. Everything is in trouble and fearing for He went on to say the covered with the stuff. -

Chapter 3: "Transferring Vinyl LPs (And Other Legacy Media) To

3 TRANSFERRING VINYL LPS (AND OTHER LEGACY MEDIA) TO CD A great way to preserve and enjoy old recordings is to transfer them from any legacy medium—vinyl LPs, cassette tapes, reel-to-reel tapes, vintage 78s, videocassettes, even eight-track tapes—to CD. Or you can transfer them to a hard drive, solid-state drive, or whatever digital storage medium you prefer. Transferring phonograph records to CD is in demand, and you might even be able to get a nice sideline going doing this. A lot of people are still hanging on to their record collections but are afraid to enjoy them because LPs are fragile. A lot of great albums have never been released on commercial CDs, or the modern CD remasters are not done well. Some people simply prefer the sound of their old records. Although you can copy any analog media and convert it to any digital audio format, in this chapter we’ll talk mostly about transferring vinyl record albums and singles to CDs. Once you have converted your old analog media to a digital format, Audacity has a number of tools for cleaning up the sound quality. You may not always be able to perform perfect restorations, but you can reduce hiss, clicks, pops, and other defects to quite tolerable levels. You can also customize dynamic range compression to suit your own needs, which is a nice thing because on modern popular CDs, dynamic range compression is abused to where it spoils the music. Even if they did it well, it might not be right for you, so Audacity lets you do it your way. -

2020 Catalog

2020 Product Catalog BLACK EAGLE ARROWS A house divided against itself will not stand • We are united around the world BLACKEAGLEARROWS.com Black Eagle Arrows is the only pro shop exclusive arrow company on earth. Our business model is simple: we provide our Black Eagle Arrows Authorized Retailers the opportunity to maximize value and customer service by eliminating big-box store competition. This gives every retailer in our network the best product pricing instead of pricing models which require pro shops to subsidize their competition. We are the only arrow company in the industry who sustains these values. We have worked to build and maintain a trusted network of only independently owned and operated pro shops. With no minimums and superior customer service we give every pro shop large and small the opportunity to thrive. We work hard at producing quality products and just as hard at building quality relationships with our partners. WHY PARTNER WITH BLACK EAGLE ARROWS? Lancaster Archery Supply, Inc. Leola, PA “We have been a Black Eagle Arrow distributor since 2012 as it was a natural fit with their commitment to innovating and building the best arrows for both 3D target and bow hunting. Lancaster Archery Supply offers the world’s largest selection of archery equipment and is dedicated to providing archery equipment to dealers, organizations and individual archers as a leading worldwide archery distributor. We enjoy working with Randy Kitts, Dan McCarthy and Jason Wilkins at Black Eagle because they are men of faith with the highest ethical standards and commitment to their customers. -

1. BrightAuthor User Guide

1. BrightAuthor User Guide
1.1 Requirements
1.2 Getting Started
1.3 Setting up BrightSign Players
1.3.1 BrightSign Network Setup
1.3.2 Local File Network Setup
1.3.3 Simple File Network Setup
1.3.4 Standalone Setup
1.4 Creating Presentations
1.4.1 Full-Screen Presentations
1.4.2 Multi-Zone Presentations
1.4.2.1 Customizing Zone Layouts
1.4.3 Interactive Presentations
1.4.3.1 Editing Interactive Events
1.4.3.2 Editing Media Properties
1.4.3.3 Copying, Importing, Exporting States
1.4.3.4 Commands
1.4.3.5 Conditional Targets
1.4.3.6 Dynamic User Variables
1.5 Editing Zones
1.5.1 Video or Images Zones
1.5.2 Video Only Zones
1.5.3 Images Zones
1.5.4 Audio Only Zones
1.5.5 Enhanced Audio Zones
1.5.6 Ticker Zones
1.5.7 Clock Zones
1.5.8 Background Image Zones
1.6 Creating Playlists and Feeds
1.6.1 Dynamic Playlists
1.6.2 Live Data Feeds
1.6.3 Tagged Playlists
1.7 Presentation States
1.7.1 Non-Interactive States
1.7.1.1 Media RSS Feed
1.7.1.2 Local Playlist
1.7.1.3 RSS Feed and Static Text
1.7.1.4 Twitter
1.7.1.5 User Variable

Collective of Heroes: Arrow's Move Toward a Posthuman Superhero Fantasy

St. Cloud State University theRepository at St. Cloud State Culminating Projects in English Department of English 12-2016 Collective of Heroes: Arrow’s Move Toward a Posthuman Superhero Fantasy Alyssa G. Kilbourn St. Cloud State University Follow this and additional works at: https://repository.stcloudstate.edu/engl_etds Recommended Citation Kilbourn, Alyssa G., "Collective of Heroes: Arrow’s Move Toward a Posthuman Superhero Fantasy" (2016). Culminating Projects in English. 73. https://repository.stcloudstate.edu/engl_etds/73 This Thesis is brought to you for free and open access by the Department of English at theRepository at St. Cloud State. It has been accepted for inclusion in Culminating Projects in English by an authorized administrator of theRepository at St. Cloud State. For more information, please contact [email protected]. Collective of Heroes: Arrow’s Move Toward a Posthuman Superhero Fantasy by Alyssa Grace Kilbourn A Thesis Submitted to the Graduate Faculty of St. Cloud State University in Partial Fulfillment of the Requirements for the degree of Master of Arts in Rhetoric and Writing December, 2016 Thesis Committee: James Heiman, Chairperson Matthew Barton Jennifer Tuder 2 Abstract Since 9/11, superheroes have become a popular medium for storytelling, so much so that popular culture is inundated with the narratives. More recently, the superhero narrative has moved from cinema to television, which allows for the narratives to address more pressing cultural concerns in a more immediate fashion. Furthermore, millions of viewers perpetuate the televised narratives because they resonate with the values and stories in the shows. Through Fantasy Theme Analysis, this project examines the audience values within the Arrow’s superhero fantasy and the influence of posthumanism on the show’s superhero fantasy. -

County Renews Consideration of Purchasing Old AU Building

SPORTS: LOCAL NEWS: Mustangs win Joe Fivas gives extra-inning thriller update on city’s with Bears: Page 9 projects: Page 4 DR. PERRY GILLUM ELECTED PRESIDENT OF GENERAL APPOINTEE EMERITI GROUP: PAGE 4 162nd YEar • No. 276 16 PaGEs • 50¢ CLEVELAND, TN 37311 THE CITY WITH SPIRIT TUESDAY, MARCH 21, 2017 County renews rawls wants apologies consideration from sheriff, newspaper By BRIAN GRAVES the Cleveland Daily Banner in my [email protected] rebuttal ... none of those which were printed or appeared in the paper,” to Bradley County Commissioner Dan his fellow commissioners. of purchasing Rawls publicly defended himself in the “I would also like to thank the com- face of accusations made against him missioners who took a small amount by Sheriff Eric Watson in a front-page of time to research and debunk this story published in the March 12 edi- fabricated attack, and then to call and tion of the Cleveland Daily Banner. offer their support,” he said. old AU building Rawls made his comments during He said Commission Assistant Monday afternoon’s voting session of Lorrie Moultrie “took 15 minutes of the County Commission. her own time to go on the internet The commissioner took his time and debunk the Florida narrative.” Availability of $4 million during reports to address the situa- “I want to make this clear, the tion between the two local officials. attempt to link me to this or any mur- “I would like to say to the people of der is a clear fabrication of anything grant offers motivation the 6th District and Bradley County in Florida,” Rawls said. -

PDF Download Green Arrow Pdf Free Download

GREEN ARROW PDF, EPUB, EBOOK Andrea Sorrentino,Jeff Lemire | 464 pages | 12 Jan 2016 | DC Comics | 9781401257613 | English | United States Green Arrow PDF Book Public Enemy 42m. So, I re-read it this year The Arrow has become a hero to the citizens of Starling City. Jayson, Jay January 8, While Oliver sets a trap to catch a group of crooked cops, Laurel takes Quentin to see Sara, who is feral and chained up in Laurel's basement. Ruin -- In Stores Now. Namespaces Article Talk. Jock's art is so gorgeous - not because of some photorealistic devotion to copying the real world see Deodato and Land , but instead because he captures in one sparse panel the feeling of pain of getting an arrow through the shoulder, of leaping for your life, of terror or dread or calm. His outlook on the world, how things should be, and his defense of the poor and working class made him a very unique character in the world of capes and tights. You must be a registered user to use the IMDb rating plugin. Meanwhile, Dinah's thirst for vengeance drives her to go rogue. Rating details. Disbanded 42m. Kondolojy, Amanda February 13, Related Characters: Black Canary. Later, Damien performs a blood ritual. Diggle and Lyla face issues in their marriage. Green Arrow: The Longbow Hunters. Also, the city needs an influx of people, which perhaps a new high speed rail line from Central City will provide. Retrieved October 9, The ongoing series Countdown showcased several of these. Later, Steve Aoki headlines the nightclub opening. -

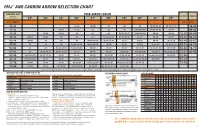

Fmj™ and Carbon Arrow Selection Chart

FMJ™ AND CARBON ARROW SELECTION CHART COMPOUND BOW YOUR ARROW LENGTH BOW RATING RECURVE LONGBOW TO 301-340 FPS 23" 24" 25" 26" 27" 28" 29" 30" 31" 32" BOW POUNDAGE BOW POUNDAGE 22-26 700, 600 700, 600 600 600 600 500, 480, 460, 470 500, 480, 470, 460 38-43 27-31 700, 600 700, 600 600 600 600 500, 480, 460, 470 500, 480, 470, 460 400, 390 44-49 32-36 700, 600 700, 600 600 600 600 500, 480, 460, 470 500, 480, 470, 460 400, 390 400, 390 32-36 50-55 37-41 700, 600 700, 600 600 600 600 500, 480, 460, 470 500, 480, 470, 460 400, 390 400, 390 400, 390 37-41 56-61 42-46 700, 600 600 600 600 500, 480, 460, 470 500, 480, 470, 460 400, 390 400, 390 400, 390 340, 330, 320 42-46 62-67 47-51 600 600 600 500, 480, 460, 470 500, 480, 470, 460 400, 390 400, 390 400, 390 340, 330, 320 340, 330, 320, 300 47-51 68-73 52-56 600 600 500, 480, 460, 470 500, 480, 470, 460 400, 390 400, 390 400, 390 340, 330, 320 340, 330, 320, 300 330, 320, 300 52-56 74-79 57-61 600 500, 480, 460, 470 500, 480, 470, 460 400, 390 400, 390 400, 390 340, 330, 320 340, 330, 320, 300 330, 320, 300 300, 280, 260, 250, 240 57-61 80-85 62-66 500, 480, 460, 470 500, 480, 470, 460 400, 390 400, 390 400, 390 340, 330, 320 340, 330, 320, 300 330, 320, 300 300, 280, 260, 250, 240 260, 250, 240 62-66 67-72 500, 480, 470, 460 400, 390 400, 390 400, 390 340, 330, 320 340, 330, 320, 300 330, 320, 300 300, 280, 260, 250, 240 260, 250, 240 67-72 73-78 400, 390 400, 390 400, 390 340, 330, 320 340, 330, 320, 300 330, 320, 300 300, 280, 260, 250, 240 260, 250, 240 73-78 79-84 400, 390 400, 390 340, 330, 320 340, 330, 320, 300 330, 320, 300 300, 280, 260, 250, 240 260, 250, 240 Note: For fractional arrow lengths, round up or down to the closest column.