Ilore: Discovering a Lineage of Microprocessors



Recommended publications

-

Wind River VxWorks Platforms 3.8

Wind River VxWorks Platforms 3.8

Table of Contents: Platforms Available in VxWorks Edition; New in VxWorks Platforms 3.8; VxWorks Platforms Features; VxWorks Real-Time Operating System; Compatibility; State-of-the-Art Memory Protection; VxBus Framework; Core Dump File Generation and Analysis; Build System; Command-Line Project and Build System; Workbench Debugger; VxWorks Simulator; Workbench VxWorks Source Build Configuration; VxWorks 6.x Kernel Configurator; Host Shell; Kernel Shell; Run-Time Analysis Tools; System Viewer

The market for secure, intelligent, connected devices is constantly expanding. Embedded devices are becoming more complex to meet market demands. Internet connectivity allows new levels of remote management but also calls for increased levels of security. More powerful processors are being considered to drive intelligence and higher functionality into devices. Because real-time and performance requirements are nonnegotiable, manufacturers are cautious about incorporating new technologies into proven systems. To -

Investigations on Hardware Compression of IBM Power9 Processors

Investigations on hardware compression of IBM Power9 processors. Jérome Kieffer, Pierre Paleo, Antoine Roux, Benoît Rousselle. HDF5 on Power9, 18/09/2019.

Outline
● The bandwidth issue at synchrotron sources
● Presentation of the evaluated systems:
– Intel Xeon vs IBM Power9
– Benchmarks on bandwidth
● The need for compression of scientific data
– Compression as part of HDF5
– The hardware compression engine NX-gzip within Power9
– Gzip performance benchmark
– Bitshuffle-LZ4 benchmark
– Filter optimizations
– Benchmark of parallel filtered gzip
● Conclusions
– on the hardware
– on the compression pipeline in HDF5

Bandwidth issue at synchrotron sources. [Figure: data analysis computer with the main interconnections and their associated bandwidth. Labels: data reduction → azimuthal integration; upgrade to 100 Gbit/s; data compression. Figures from former generation of servers. Kieffer et al. Volume 25 | Part 2 | March 2018 | Pages 612–617 | 10.1107/S1600577518000607]

Topologies of Intel Xeon servers in 2019. [Figure. Source: intel.com]

Architecture of the AC922 server from IBM featuring Power9. [Figure. Credit: Thibaud Besson, IBM France]

Bandwidth measurement: Xeon vs Power9

| Computer | Dell R840 | IBM AC922 |
|---|---|---|
| Processor | 4 Intel Xeon (12 cores), 2.6 GHz | 2 IBM Power9 (16 cores), 2.7 GHz |
| Cache (L3) | 19 MB | 8x 10 MB |
| Memory channels | 4x 6 DDR4 | 2x 8 DDR4 |
| Memory capacity | → 3 TB | → 2 TB |
| Memory speed (theory) | 512 GB/s | 340 GB/s |
| Measured memory speed | 160 GB/s | 270 GB/s |
| Interconnects | PCIe v3 | PCIe v4, NVlink2 & CAPI2 |
| GP-GPU co-processor | 2 Tesla V100, PCIe v3 | 2 Tesla V100, NVlink2 |
| Interconnect speed CPU ↔ GPU | 12 GB/s | 48 GB/s |

Strengths and weaknesses of the OpenPower architecture. While amd64 is today’s de facto standard in HPC, it has a few competitors: arm64, ppc64le and, to a lesser extent, riscv and mips64. -

Wind Rose Data Comes in the Form of >200,000 Wind Rose Images

Making Wind Speed and Direction Maps. Rich Stromberg, Alaska Energy Authority, [email protected]/907-771-3053. Wind Direction Maps, 6/30/2011.

Wind rose data comes in the form of >200,000 wind rose images across Alaska.

Wind rose data is quantified in very large Excel™ spreadsheets for each region of the state.
• Fields: X Y X_1 Y_1 FILE FREQ1 FREQ2 FREQ3 FREQ4 FREQ5 FREQ6 FREQ7 FREQ8 FREQ9 FREQ10 FREQ11 FREQ12 FREQ13 FREQ14 FREQ15 FREQ16 SPEED1 SPEED2 SPEED3 SPEED4 SPEED5 SPEED6 SPEED7 SPEED8 SPEED9 SPEED10 SPEED11 SPEED12 SPEED13 SPEED14 SPEED15 SPEED16 POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 WEIBC1 WEIBC2 WEIBC3 WEIBC4 WEIBC5 WEIBC6 WEIBC7 WEIBC8 WEIBC9 WEIBC10 WEIBC11 WEIBC12 WEIBC13 WEIBC14 WEIBC15 WEIBC16 WEIBK1 WEIBK2 WEIBK3 WEIBK4 WEIBK5 WEIBK6 WEIBK7 WEIBK8 WEIBK9 WEIBK10 WEIBK11 WEIBK12 WEIBK13 WEIBK14 WEIBK15 WEIBK16

Data set is thinned down to wind power density.
• Fields: X Y
• POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16
• POWER1 is the wind power density coming from the north (0 degrees). POWER2 is wind power from 22.5 deg., …, POWER9 is south (180 deg.), etc.

Spreadsheet calculations. Columns: X Y POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 Max Wind Dir Prim 2nd Wind Dir Sec
-132.7365 54.4833 0.643 0.767 1.911 4.083 -
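The sector-to-direction mapping described in the wind rose excerpt (POWER1 at 0 degrees, POWER2 at 22.5 degrees, POWER9 at 180 degrees) can be sketched as below. This is only an illustration, not the spreadsheet's actual formulas: the function names are hypothetical, the power values are made up, and reading "Wind Dir Prim" and "Wind Dir Sec" as the directions of the largest and second-largest power density is an assumption.

```python
SECTORS = 16
SECTOR_WIDTH_DEG = 360.0 / SECTORS  # 22.5 degrees per sector


def sector_to_degrees(n):
    """Map the 1-based field index in POWER<n> to degrees clockwise from north."""
    if not 1 <= n <= SECTORS:
        raise ValueError("sector index must be between 1 and 16")
    return (n - 1) * SECTOR_WIDTH_DEG


def primary_and_secondary(power):
    """Return the directions of the largest and second-largest power density.

    Assumption: 'primary' and 'secondary' mean the top two sectors by power.
    """
    if len(power) != SECTORS:
        raise ValueError("expected 16 power density values")
    ranked = sorted(range(1, SECTORS + 1), key=lambda n: power[n - 1], reverse=True)
    return sector_to_degrees(ranked[0]), sector_to_degrees(ranked[1])


# Hypothetical power densities for POWER1..POWER16 (not taken from the source).
example = [1.0, 2.0, 3.0, 4.0, 9.0, 4.0, 3.0, 2.0,
           1.0, 1.0, 1.0, 1.0, 7.0, 1.0, 1.0, 1.0]
print(primary_and_secondary(example))
```

With these made-up values the strongest sector is POWER5 (east) and the second strongest is POWER13 (west).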

Fog Gateways: The Cornerstone of IoT Security

WHITE PAPER. Fog Gateways: The Cornerstone of IoT Security. By Nicholas Cravotta.

The IoT offers tremendous value, but also introduces major security challenges. Connecting previously offline systems increases the attack surface for hackers, and the diversity of these systems makes it difficult to deploy and manage consistent security. The nature of internal networks, such as the automation networks that connect factory machinery, adds to the problem. Legacy networks provide minimal authorization, little authentication, and not enough encryption. There is often no protection against intrusion, unauthorized reconfiguration of edge equipment, or DDoS attacks. If an attacker gains access to these unsecure networks, the entire organization can be at risk. Fog computing can address these security issues today and help you prepare for new challenges on the horizon. Fog gateways provide secure connectivity to local equipment, intelligent data processing, and end-to-end

safety. Utilities can dynamically change rates and shift loads, thus preventing overinvestment in power generation. IoT-based medical devices can monitor patients continuously to identify trends and alert caregivers before an emergency arises. But all these benefits depend on secure data flow. If the network is compromised, the result can be disastrous. For industrial applications, the cost could be the shutdown of operations or equipment damage. In a utilities environment, invalid data could result in blackouts and lost revenue. For retail applications, security breaches could halt sales and expose customer data.

What Makes IoT Security Different. In some ways, security concerns are nothing new. Most organizations already have security precautions in place to protect sensitive IT systems and physical assets. But the IoT introduces new threats that can catch businesses off guard. -

Schedule 14A Employee Slides Supertex Sunnyvale

UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 SCHEDULE 14A Proxy Statement Pursuant to Section 14(a) of the Securities Exchange Act of 1934 Filed by the Registrant Filed by a Party other than the Registrant Check the appropriate box: Preliminary Proxy Statement Confidential, for Use of the Commission Only (as permitted by Rule 14a-6(e)(2)) Definitive Proxy Statement Definitive Additional Materials Soliciting Material Pursuant to §240.14a-12 Supertex, Inc. (Name of Registrant as Specified In Its Charter) Microchip Technology Incorporated (Name of Person(s) Filing Proxy Statement, if other than the Registrant) Payment of Filing Fee (Check the appropriate box): No fee required. Fee computed on table below per Exchange Act Rules 14a-6(i)(1) and 0-11. (1) Title of each class of securities to which transaction applies: (2) Aggregate number of securities to which transaction applies: (3) Per unit price or other underlying value of transaction computed pursuant to Exchange Act Rule 0-11 (set forth the amount on which the filing fee is calculated and state how it was determined): (4) Proposed maximum aggregate value of transaction: (5) Total fee paid: Fee paid previously with preliminary materials. Check box if any part of the fee is offset as provided by Exchange Act Rule 0-11(a)(2) and identify the filing for which the offsetting fee was paid previously. Identify the previous filing by registration statement number, or the Form or Schedule and the date of its filing. (1) Amount Previously Paid: (2) Form, Schedule or Registration Statement No.: (3) Filing Party: (4) Date Filed: Filed by Microchip Technology Incorporated Pursuant to Rule 14a-12 of the Securities Exchange Act of 1934 Subject Company: Supertex, Inc. -

IBM Power System POWER8 Facts and Features

IBM Power Systems IBM Power System POWER8 Facts and Features April 29, 2014 IBM Power Systems™ servers and IBM BladeCenter® blade servers using IBM POWER7® and POWER7+® processors are described in a separate Facts and Features report dated July 2013 (POB03022-USEN-28). IBM Power Systems™ servers and IBM BladeCenter® blade servers using IBM POWER6® and POWER6+™ processors are described in a separate Facts and Features report dated April 2010 (POB03004-USEN-14). 1 IBM Power Systems Table of Contents IBM Power System S812L 4 IBM Power System S822 and IBM Power System S822L 5 IBM Power System S814 and IBM Power System S824 6 System Unit Details 7 Server I/O Drawers & Attachment 8 Physical Planning Characteristics 9 Warranty / Installation 10 Power Systems Software Support 11 Performance Notes & More Information 12 These notes apply to the description tables for the pages which follow: Y Standard / Supported Optional Optionally Available / Supported N/A or - Not Available / Supported or Not Applicable SOD Statement of General Direction announced SLES SUSE Linux Enterprise Server RHEL Red Hat Enterprise Linux a One x8 PCIe slots must contain a 4-port 1Gb Ethernet LAN available for client use b Use of expanded function storage backplane uses one PCIe slot Backplane provides dual high performance SAS controllers with 1.8 GB write cache expanded up to 7.2 GB with c compression plus Easy Tier function plus two SAS ports for running an EXP24S drawer d Full benchmark results are located at ibm.com/systems/power/hardware/reports/system_perf.html e Option is supported on IBM i only through VIOS. -

Video Games: Changing the Way We Think of Home Entertainment

Rochester Institute of Technology RIT Scholar Works Theses 2005 Video games: Changing the way we think of home entertainment Eri Shulga Follow this and additional works at: https://scholarworks.rit.edu/theses Recommended Citation Shulga, Eri, "Video games: Changing the way we think of home entertainment" (2005). Thesis. Rochester Institute of Technology. Accessed from This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. Video Games: Changing The Way We Think Of Home Entertainment by Eri Shulga Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Information Technology Rochester Institute of Technology B. Thomas Golisano College of Computing and Information Sciences Copyright 2005 Thesis Approval Form Student Name: Eri Shulga Thesis Title: Video Games: Changing the Way We Think of Home Entertainment Thesis Committee: Evelyn Rozanski, Ph.D (Chair); Prof. Andy Phelps (Committee Member); Anne Haake, Ph.D (Committee Member) Thesis Reproduction Permission Form Rochester Institute of Technology B. Thomas Golisano College of Computing and Information Sciences Master of Science in Information Technology Video Games: Changing the Way We Think Of Home Entertainment I, Eri Shulga, hereby grant permission to the Wallace Library of the Rochester Institute of Technology to reproduce my thesis in whole or in part. -

INTEL CORPORATION (Exact Name of Registrant As Specified in Its Charter) Delaware 94-1672743 State Or Other Jurisdiction of (I.R.S

UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 FORM 10-K (Mark One) x ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the fiscal year ended December 31, 2016. or ¨ TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the transition period from to . Commission File Number 000-06217 INTEL CORPORATION (Exact name of registrant as specified in its charter) Delaware 94-1672743 State or other jurisdiction of (I.R.S. Employer incorporation or organization Identification No.) 2200 Mission College Boulevard, Santa Clara, California 95054-1549 (Address of principal executive offices) (Zip Code) Registrant’s telephone number, including area code (408) 765-8080 Securities registered pursuant to Section 12(b) of the Act: Title of each class Name of each exchange on which registered Common stock, $0.001 par value The NASDAQ Global Select Market* Securities registered pursuant to Section 12(g) of the Act: None Indicate by check mark if the registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. Yes x No ¨ Indicate by check mark if the registrant is not required to file reports pursuant to Section 13 or Section 15(d) of the Act. Yes ¨ No x Indicate by check mark whether the registrant (1) has filed all reports required to be filed by Section 13 or 15(d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days. -

Copyrighted Material

CHAPTER 1 MULTI- AND MANY-CORES, ARCHITECTURAL OVERVIEW FOR PROGRAMMERS Lasse Natvig, Alexandru Iordan, Mujahed Eleyat, Magnus Jahre and Jorn Amundsen 1.1 INTRODUCTION 1.1.1 Fundamental Techniques Parallelism has been used since the early days of computing to enhance performance. From the first computers to the most modern sequential processors (also called uniprocessors), the main concepts introduced by von Neumann [20] are still in use. However, the ever-increasing demand for computing performance has pushed computer architects toward implementing different techniques of parallelism. The von Neumann architecture was initially a sequential machine operating on scalar data with bit-serial operations [20]. Word-parallel operations were made possible by using more complex logic that could perform binary operations in parallel on all the bits in a computer word, and it was just the start of an adventure of innovations in parallel computer architectures. Programming Multicore and Many-core Computing Systems, First Edition. Edited by Sabri Pllana and Fatos Xhafa. © 2017 John Wiley & Sons, Inc. Published 2017 by John Wiley & Sons, Inc. Prefetching is a 'look-ahead technique' that was introduced quite early and is a form of parallelism that is used at several levels and in different components of a computer today. Both data and instructions are very often accessed sequentially. Therefore, when accessing an element (instruction or data) at address k, an automatic access to address k+1 will bring the element to where it is needed before it is accessed and thus eliminates or reduces waiting time. -
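The look-ahead idea in the prefetching excerpt (an access to address k triggers an automatic access to k+1) can be illustrated with a toy miss counter. This is a minimal sketch under strong assumptions that are not in the source: storage is unbounded, a prefetch always completes before the next access, and the function name `count_misses` is hypothetical.

```python
def count_misses(addresses, prefetch_next=False):
    """Count accesses that find their element absent from a toy, unbounded cache.

    Assumptions: nothing is ever evicted, and a prefetch of k+1 issued
    while accessing k has finished by the time of the next access.
    """
    present = set()
    misses = 0
    for k in addresses:
        if k not in present:
            misses += 1          # the element had to be fetched on demand
            present.add(k)
        if prefetch_next:
            present.add(k + 1)   # automatic look-ahead access to address k+1
    return misses


sequential = list(range(8))      # addresses 0..7 accessed in order
strided = [0, 4, 8]              # non-sequential pattern; k+1 is never used

print(count_misses(sequential))                      # every access misses
print(count_misses(sequential, prefetch_next=True))  # only the first access misses
print(count_misses(strided, prefetch_next=True))     # prefetching does not help here
```

The strided case shows why the excerpt ties the benefit to sequential access: when the next address is not k+1, the look-ahead fetch brings in nothing useful.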

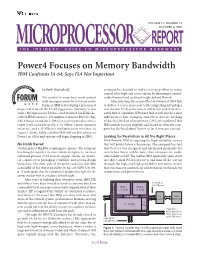

Power4 Focuses on Memory Bandwidth IBM Confronts IA-64, Says ISA Not Important

MICROPROCESSOR REPORT: THE INSIDERS’ GUIDE TO MICROPROCESSOR HARDWARE. VOLUME 13, NUMBER 13, OCTOBER 6, 1999. Power4 Focuses on Memory Bandwidth: IBM Confronts IA-64, Says ISA Not Important, by Keith Diefendorff.

Not content to wrap sheet metal around Intel microprocessors for its future server business, IBM is developing a processor it hopes will fend off the IA-64 juggernaut. Speaking at this week’s Microprocessor Forum, chief architect Jim Kahle described IBM’s monster 170-million-transistor Power4 chip, which boasts two 64-bit 1-GHz five-issue superscalar cores, a triple-level cache hierarchy, a 10-GByte/s main-memory interface, and a 45-GByte/s multiprocessor interface, as Figure 1 shows. Kahle said that IBM will see first silicon on Power4 in 1Q00, and systems will begin shipping in 2H01.

No Holds Barred

company has decided to make a last-gasp effort to retain control of its high-end server silicon by throwing its considerable financial and technical weight behind Power4. After investing this much effort in Power4, if IBM fails to deliver a server processor with compelling advantages over the best IA-64 processors, it will be left with little alternative but to capitulate. If Power4 fails, it will also be a clear indication to Sun, Compaq, and others that are bucking IA-64, that the days of proprietary CPUs are numbered. But IBM intends to resist mightily, and, based on what the company has disclosed about Power4 so far, it may just succeed.

Looking for Parallelism in All the Right Places

With Power4, IBM is targeting the high-reliability servers that will power future e-businesses. -

Average Memory Access Time: Reducing Misses

332 Advanced Computer Architecture, Chapter 2: Caches and Memory Systems. January 2007, Paul H J Kelly. These lecture notes are partly based on the course text, Hennessy and Patterson’s Computer Architecture, a quantitative approach (3rd and 4th eds), and on the lecture slides of David Patterson and John Kubiatowicz’s Berkeley course.

Review: Cache performance

Miss-oriented approach to memory access:

$$\text{CPUtime} = IC \times \left( CPI_{\text{Execution}} + \frac{\text{MemAccess}}{\text{Inst}} \times \text{MissRate} \times \text{MissPenalty} \right) \times \text{CycleTime}$$

$$\text{CPUtime} = IC \times \left( CPI_{\text{Execution}} + \frac{\text{MemMisses}}{\text{Inst}} \times \text{MissPenalty} \right) \times \text{CycleTime}$$

$CPI_{\text{Execution}}$ includes ALU and memory instructions.

Separating out the memory component entirely (AMAT = Average Memory Access Time; $CPI_{\text{AluOps}}$ does not include memory instructions):

$$\text{CPUtime} = IC \times \left( \frac{\text{AluOps}}{\text{Inst}} \times CPI_{\text{AluOps}} + \frac{\text{MemAccess}}{\text{Inst}} \times \text{AMAT} \right) \times \text{CycleTime}$$

$$\text{AMAT} = \text{HitTime} + \text{MissRate} \times \text{MissPenalty}$$

$$= \left( \text{HitTime}_{\text{Inst}} + \text{MissRate}_{\text{Inst}} \times \text{MissPenalty}_{\text{Inst}} \right) + \left( \text{HitTime}_{\text{Data}} + \text{MissRate}_{\text{Data}} \times \text{MissPenalty}_{\text{Data}} \right)$$

Average memory access time: Reducing Misses

$$\text{AMAT} = \text{HitTime} + \text{MissRate} \times \text{MissPenalty}$$

There are three ways to improve cache performance: 1. Reduce the miss rate, 2.

Classifying Misses: 3 Cs

Compulsory: The first access to a block is not in the cache, so the block must be brought into the cache. Also called cold start misses or first reference misses. (Misses in even an Infinite Cache)

Capacity: If the cache cannot contain all the blocks needed during execution of a program, capacity misses will occur due to blocks being discarded and later retrieved. (Misses in Fully Associative Size X Cache)

Conflict: If block-placement strategy is set associative or direct mapped, conflict misses (in addition to compulsory & capacity misses) will -
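The AMAT and miss-oriented CPU-time relations from the cache-performance excerpt can be checked numerically with a short sketch. The function and parameter names are hypothetical, and the input values are made up for illustration; none of them come from the lecture notes.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time: HitTime + MissRate * MissPenalty.

    hit_time and miss_penalty share one unit (for example cycles);
    miss_rate is a fraction between 0 and 1.
    """
    return hit_time + miss_rate * miss_penalty


def cpu_time(ic, cpi_execution, mem_access_per_inst, miss_rate, miss_penalty, cycle_time):
    """Miss-oriented CPU time:
    IC * (CPI_Execution + MemAccess/Inst * MissRate * MissPenalty) * CycleTime.

    miss_penalty is in cycles; cycle_time is in seconds per cycle.
    """
    stall_cycles_per_inst = mem_access_per_inst * miss_rate * miss_penalty
    return ic * (cpi_execution + stall_cycles_per_inst) * cycle_time


# Hypothetical inputs: 1-cycle hit, 5% miss rate, 100-cycle miss penalty.
print(amat(hit_time=1, miss_rate=0.05, miss_penalty=100))

# Hypothetical program: 1e6 instructions, base CPI 1.0, 1.5 memory accesses
# per instruction, 2% miss rate, 100-cycle penalty, 1 ns cycle time.
print(cpu_time(1_000_000, 1.0, 1.5, 0.02, 100, 1e-9))
```

In the second call the memory stalls add 3 cycles per instruction to a base CPI of 1, so under these assumed numbers the miss term dominates the execution time, which is the motivation the excerpt gives for reducing misses.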

POWER® Processor-Based Systems

IBM® Power® Systems RAS Introduction to IBM® Power® Reliability, Availability, and Serviceability for POWER9® processor-based systems using IBM PowerVM™ With Updates covering the latest 4+ Socket Power10 processor-based systems IBM Systems Group Daniel Henderson, Irving Baysah Trademarks, Copyrights, Notices and Acknowledgements Trademarks IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both: Active AIX® POWER® POWER Power Power Systems Memory™ Hypervisor™ Systems™ Software™ Power® POWER POWER7 POWER8™ POWER® PowerLinux™ 7® +™ POWER® PowerHA® POWER6 ® PowerVM System System PowerVC™ POWER Power Architecture™ ® x® z® Hypervisor™ Additional Trademarks may be identified in the body of this document. Other company, product, or service names may be trademarks or service marks of others. Notices The last page of this document contains copyright information, important notices, and other information. Acknowledgements While this whitepaper has two principal authors/editors it is the culmination of the work of a number of different subject matter experts within IBM who contributed ideas, detailed technical information, and the occasional photograph and section of description.