Empiricist Roots of Modern Psychology

Recommended publications

Stanislaw Piatkiewicz and the Beginnings of Mathematical Logic in Poland

HISTORIA MATHEMATICA 23 (1996), 68–73, ARTICLE NO. 0005

Stanisław Piątkiewicz and the Beginnings of Mathematical Logic in Poland

TADEUSZ BATÓG AND ROMAN MURAWSKI
Department of Mathematics and Computer Science, Adam Mickiewicz University, ul. Matejki 48/49, 60-769 Poznań, Poland

This paper presents information on the life and work of Stanisław Piątkiewicz (1849–?). His Algebra w logice (Algebra in Logic) of 1888 contains an exposition of the algebra of logic and its use in representing syllogisms. This was the first original Polish publication on symbolic logic. It appeared 20 years before analogous works by Łukasiewicz and Stamm. © 1996 Academic Press, Inc.

MSC 1991 subject classifications: 03-03, 01A55.

Religious Worldviews*

RELIGIOUS WORLDVIEWS* SECULAR POLITICAL CHRISTIANITY ISLAM MARXISM HUMANISM CORRECTNESS Nietzsche/Rorty Sources Koran, Hadith Humanist Manifestos Marx/Engels/Lenin/Mao/ The Bible Foucault/Derrida, Frankfurt Sunnah I/II/ III Frankfurt School Subjects School Theism Theism Atheism Atheism Atheism THEOLOGY (Trinitarian) (Unitarian) PHILOSOPHY Correspondence/ Pragmatism/ Pluralism/ Faith/Reason Dialectical Materialism (truth) Faith/Reason Scientism Anti-Rationalism Moral Relativism Moral Absolutes Moral Absolutes Moral Relativism Proletariat Morality (foster victimhood & ETHICS (Individualism) (Collectivism) (Collectivism) (Collectivism) /Collectivism) Creationism Creationism Naturalism Naturalism Naturalism (Intelligence, Time, Matter, (Intelligence, Time Matter, ORIGIN SCIENCE (Time/Matter/Energy) (Time/Matter/Energy) (Time/Matter/Energy) Energy) Energy) 1 Mind/Body Dualism Monism/self-actualization Socially constructed self Monism/behaviorism Mind/Body Dualism (fallen) PSYCHOLOGY (non fallen) (tabula rasa) Monism (tabula rasa) (tabula rasa) Traditional Family/Church/ Polygamy/Mosque & Nontraditional Family/statist Destroy Family, church and Classless Society/ SOCIOLOGY State State utopia Constitution Anti-patriarchy utopia Critical legal studies Proletariat law Divine/natural law Shari’a Positive law LAW (Positive law) (Positive law) Justice, freedom, order Global Islamic Theocracy Global Statism Atomization and/or Global & POLITICS Sovereign Spheres Ummah Progressivism Anarchy/social democracy Stateless Interventionism & Dirigisme Stewardship -

History of Philosophy Outlines from Wheaton College (IL)

Property of Wheaton College.

HISTORY OF PHILOSOPHY 311
Arthur F. Holmes, Fall 1992
Office: Blanchard E483, Ext. 5887

Texts
W. Kaufman, Philosophical Classics (Prentice-Hall, 2nd ed., 1968): Vol. I, Thales to Occam; Vol. II, Bacon to Kant
S. Stumpf, Socrates to Sartre (McGraw-Hill, 3rd ed., 1982, or 4th ed., 1988)

For further reading see:
F. Copleston, A History of Philosophy. A multi-volume set in the library, also in paperback in the bookstore.
W. K. C. Guthrie, A History of Greek Philosophy
Diogenes Allen, Philosophy for Understanding Theology
A. H. Armstrong & R. A. Markus, Christian Faith and Greek Philosophy
A. H. Armstrong (ed.), Cambridge History of Later Greek and Early Medieval Philosophy
Encyclopedia of Philosophy

Objectives
1. To survey the history of Western philosophy with emphasis on major men and problems, developing themes and traditions and the influence of Christianity.
2. To uncover historical connections between philosophy and science, the arts, and theology.
3. To make this heritage of great minds part of one’s own thinking.
4. To develop competence in reading philosophy, to lay a foundation for understanding contemporary thought, and to prepare for more critical and constructive work.

Procedure
1. The primary sources are of major importance, and you will learn to read and understand them for yourself. Outline them as you read: they provide depth of insight and involve you in dialogue with the philosophers themselves. Ask first, What does he say? Then, how does this relate to what else he says, and to what his predecessors said? Then, appraise his assumptions and arguments.

Introduction to Philosophy

UNIVERSITY OF CALICUT
SCHOOL OF DISTANCE EDUCATION
B.A. PHILOSOPHY CORE COURSE - (2019-Admn.)
PHL1 B01 - INTRODUCTION TO PHILOSOPHY

1. The total number of Vedas is ____.
a) One b) Two c) Three d) Four
2. Philosophy is originally a ____ word.
a) English b) Latin c) Greek d) Spanish
3. Philosophy deals with ____ of reality.
a) a part b) the whole c) the illusion d) none of these
4. ‘Esthetikos’ is a ____ word.
a) Greek b) Latin c) French d) Spanish
5. Taoism belongs to the ____ tradition.
a) Japanese b) Oriental c) Occidental d) None of these
6. ____ does not belong to Oriental tradition.
a) India b) China c) Japan d) None of these
7. Vedic philosophy evolved in the order ____.
a) Polytheism, Monism, Monotheism b) Monotheism, Polytheism, Monism c) Polytheism, Monotheism, Monism d) Polytheism, Monism, Monotheism
8. ____ is not a heterodox system.
a) Samkhya b) Buddhism c) Lokayata d) Jainism
9. ____ implies ‘accepting the authority of the Vedas’.
a) Heterodox b) Orthodox c) Oriental d) Occidental
10. According to the law of karma, every karma leads to ____.
a) Moksha b) Phala c) Dharma d) all these
11. The portion of Vedas that deals with rituals is known as ____.
a) Mantras b) Brahmanas c) Aranyakas d) Upanishads
12. Polytheism implies ____ as Monism refers to one.
a) Two b) three c) many d) all these
13. Belief in one God is referred as ____.
a) Henotheism b) Monotheism c) Monism d) Polytheism
14. Samkhya propounded ____.
a) Dualism b) Monism c) Monotheism d) Polytheism
15. ____ is an Oriental system.

An Introduction to Late British Associationism and Its Context

Chapter 1: An Introduction to Late British Associationism and Its Context

This is a story about philosophy. Or about science. Or about philosophy transforming into science. Or about science and philosophy, and how they are related. Or were, in a particular time and place. At least, that is the general area that the following narrative will explore, I hope with sufficient subtlety. The matter is rendered non-transparent by the fact that the conclusions one draws with regard to such questions are, in part, matters of discretionary perspective – as I will try to demonstrate. My specific historical focus will be on the propagation of a complex intellectual tradition concerned with human sensation, perception, and mental function in early nineteenth-century Britain. Not only philosophical opinion, but all aspects of British intellectual – and practical – life, were in the process of significant transformation during this time. This cultural flux further confuses recovery of the situated significance of the intellectual tradition I am investigating. The study of the mind not only was influenced by a set of broad social shifts, but it also participated in them fully as both stimulus to and recipient of changing conditions. One indication of this is simply the variety of terms used to identify the ‘philosophy of mind’ as an intellectual enterprise in the eighteenth and nineteenth centuries.1 But the issue goes far deeper into the fluid constitutive features of the enterprise, including associated conceptual systems, methods, intentions of practitioners, and institutional affiliations. In order to understand philosophy of mind in its time, we must put all these factors into play.

Malebranche's Augustinianism and the Mind's Perfection

University of Pennsylvania ScholarlyCommons
Publicly Accessible Penn Dissertations, Spring 2010

Malebranche's Augustinianism and the Mind's Perfection
Jason Skirry, University of Pennsylvania

Part of the History of Philosophy Commons

Recommended Citation
Skirry, Jason, "Malebranche's Augustinianism and the Mind's Perfection" (2010). Publicly Accessible Penn Dissertations. 179. https://repository.upenn.edu/edissertations/179

Abstract
This dissertation presents a unified interpretation of Malebranche’s philosophical system that is based on his Augustinian theory of the mind’s perfection, which consists in maximizing the mind’s ability to successfully access, comprehend, and follow God’s Order through practices that purify and cognitively enhance the mind’s attention. I argue that the mind’s perfection figures centrally in Malebranche’s philosophy and is the main hub that connects and reconciles the three fundamental principles of his system, namely, his occasionalism, divine illumination, and freedom. To demonstrate this, I first present, in chapter one, Malebranche’s philosophy within the historical and intellectual context of his membership in the French Oratory, arguing that the Oratory’s particular brand of Augustinianism, initiated by Cardinal Bérulle and propagated by Oratorians such as Andre Martin, is at the core of his philosophy and informs his theory of perfection. Next, in chapter two, I explicate Augustine’s own theory of perfection in order to provide an outline, and a basis of comparison, for Malebranche’s own theory of perfection.

Chapter One Transcendentalism and the Aesthetic Critique Of

Chapter One
Transcendentalism and the Aesthetic Critique of Modernity

Thinking of the aesthetic as both potentially ideological and potentially utopian allows us to understand the social implications of modern aesthetics. The aesthetic experience can serve to evade questions of justice and equality as much as it offers the opportunity for a vision of freedom. If the Transcendentalists stand at the end of a Romantic tradition which has always been aware of the utopian dimension of the aesthetic, why should they have cherished the consolation the aesthetic experience offers while at the same time forfeiting its critical implications, which have been so important for the British and the early German Romantics? Is the American variety of Romanticism really much more submissive than European Romanticism with all its revolutionary fervor? Is it really true that “the writing of the Transcendentalists, and all those we may wish to consider as Romantics, did not have this revolutionary social dimension,” as Tony Tanner has suggested (1987, 38)? The Transcendentalists were critical of established religious dogma and received social conventions – so why should their aesthetics be apolitical or even adapt to dominant ideologies? In fact, the Transcendentalists were well aware of the philosophical and social radicalism of the aesthetic – which, however, is not to say that all of them wholeheartedly embraced it.

While the Transcendentalists’ American background (New England’s Puritan legacy and the Unitarian church from which they emerged) is crucial for an understanding of their social, religious, and philosophical thought, it is worth casting an eye across the ocean to understand their concept of beauty since the Transcendentalists were also heir to a longstanding tradition of transatlantic Romanticism which addressed the relationship between aesthetic experience, utopia, and social criticism.

Theory of Knowledge in Britain from 1860 to 1950

Baltic International Yearbook of Cognition, Logic and Communication
Volume 4: 200 Years of Analytical Philosophy, Article 5, 2008

Theory of Knowledge in Britain from 1860 to 1950
Mathieu Marion, Université du Québec à Montréal, CA

This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License.

Recommended Citation
Marion, Mathieu (2008) "Theory Of Knowledge In Britain From 1860 To 1950," Baltic International Yearbook of Cognition, Logic and Communication: Vol. 4. https://doi.org/10.4148/biyclc.v4i0.129

The Baltic International Yearbook of Cognition, Logic and Communication, August 2009, Volume 4: 200 Years of Analytical Philosophy, pages 1-34, DOI: 10.4148/biyclc.v4i0.129

MATHIEU MARION

[...] better understood as an attempt at foisting on its readers a particular set of misconceptions. To see this, one needs only to consider the title, which is plainly misleading. The Oxford English Dictionary gives as one of the possible meanings of the word ‘revolution’:

The complete overthrow of an established government or social order by those previously subject to it; an instance of this; a forcible substitution of a new form of government.

Studia Culturologica Series

studia culturologica series, volume 22, autumn-winter 2005

GEORGE GALE, OSWALD SCHWEMMER, DIETER MERSCH, HELGE JORDHEIM, JAN RADLER, JEAN-MARC TÉTAZ, DIMITRI GINEV, ROLAND BENEDIKTER

Maison des Sciences de l'Homme et de la Société

George Gale
LEIBNIZ, PETER THE GREAT, AND THE MODERNIZATION OF RUSSIA
or Adventures of a Philosopher-King in the East

Leibniz was the modern world's first Universal Citizen. Despite being based in a backwater northern German principality, Leibniz' scholarly and diplomatic missions took him, sometimes for extended stays, everywhere important: to Paris and London, to Berlin, to Vienna and Rome. And this in a time when travel was anything but easy. Leibniz' intellectual scope was equally wide: his philosophic focus extended all the way from Locke's England to Confucius' China, even while his geopolitical interests encompassed all the vast territory from Cairo to Moscow. In short, Leibniz' activities, interests and attention were not in the least bounded by the narrow compass of his home base.

Our interests, however, must be bounded: to this end, our concern in what follows will limit itself to Leibniz' relations with the East, the lands beyond the Elbe, the Slavic lands. Not only are these relations topics of intrinsic interest, they have not received anywhere near the attention they deserve. Our focus will be the activities of Leibniz himself, the works of the living philosopher, diplomat, and statesman, as he carried them out in his own time. Although Leibniz' heritage continues unto today in the form of a philosophical tradition, examination of this aspect of Leibnizian influence must await another day.

Immanuel Kant and the Development of Modern Psychology David E

University of Richmond, UR Scholarship Repository
Psychology Faculty Publications, Psychology, 1982

Immanuel Kant and the Development of Modern Psychology
David E. Leary, University of Richmond

Part of the Theory and Philosophy Commons

Recommended Citation
Leary, David E. "Immanuel Kant and the Development of Modern Psychology." In The Problematic Science: Psychology in Nineteenth-Century Thought, edited by William Ray Woodward and Mitchell G. Ash, 17-42. New York, NY: Praeger, 1982.

1
Immanuel Kant and the Development of Modern Psychology
David E. Leary

Few thinkers in the history of Western civilization have had as broad and lasting an impact as Immanuel Kant (1724-1804). This "Sage of Konigsberg" spent his entire life within the confines of East Prussia, but his thoughts traveled freely across Europe and, in time, to America, where their effects are still apparent. An untold number of analyses and commentaries have established Kant as a preeminent epistemologist, philosopher of science, moral philosopher, aesthetician, and metaphysician. He is even recognized as a natural historian and cosmologist: the author of the so-called Kant-Laplace hypothesis regarding the origin of the universe. He is less often credited as a "psychologist," "anthropologist," or "philosopher of mind," to

Work on this essay was supported by the National Science Foundation (Grant No.

Intro to Perception

Intro to Perception
Dr. Jonathan Pillow
Sensation & Perception (PSY 345 / NEU 325)
Spring 2015, Princeton University

What is Perception?
stuff in the world → percepts
process for:
• extracting information via the senses
• forming internal representations of the world

Outline:
1. Philosophy:
• What philosophical perspectives inform our understanding and study of perception?
2. General Examples
• why is naive realism wrong?
• what makes perception worth studying?
3. Principles & Approaches
• modern tools for studying perception

Epistemology = theory of knowledge
• Q: where does knowledge come from?
Answer #1: Psychological Nativism
• the mind produces ideas that are not derived from external sources
Answer #2: Empiricism
• All knowledge comes from the senses
• Proponents: Hobbes, Locke, Hume
• newborn is a “blank slate” (“tabula rasa”)
Answer #1: Psychological Nativism vs. Answer #2: Empiricism
• resembles “nature” vs. “nurture” debate
• extreme positions at both ends are a bit absurd (See Steve Pinker’s “The Blank Slate” for a nice critique of the blank slate thesis)

Metaphysics = theory of reality
• Q: what kind of stuff is there in the world?
Answer #1: Dualism
• there are two kinds of stuff
• usually: “mind” and “matter”
Answer #2: Monism
• there is only one kind of stuff
• “materialism” (physical stuff)
• “idealism” (mental stuff)

Philosophy of Mind
Q: what is the relationship between “things in the world” and “representations in our heads”?
1.

Psyc104 Summaries

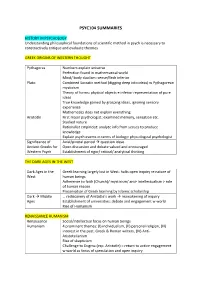

PSYC104 SUMMARIES

HISTORY IN PSYCHOLOGY
Understanding philosophical foundations of scientific method in psych is necessary to constructively critique and evaluate theories

GREEK ORIGINS OF WESTERN THOUGHT

Pythagoras
  Numbers explain universe
  Perfection found in mathematical world
  Mind/body dualism: sense/flesh inferior

Plato
  Combined Socratic method (digging deep into ideas) w Pythagorean mysticism
  Theory of forms: physical objects = inferior representation of pure ideas
  True knowledge gained by grasping ideas, ignoring sensory experience
  Mathematics does not explain everything

Aristotle
  First major psychologist: examined memory, sensation etc.
  Studied nature
  Rationalist empiricist: analyze info from senses to produce knowledge
  Explain psych events in terms of biology: physiological psychologist

Significance of Ancient Greeks for Western Psych
  Axial/pivotal period → question ideas
  Open discussion and debate valued and encouraged
  Establishment of rigor/critical/analytical thinking

THE DARK AGES IN THE WEST

Dark Ages in the West
  Greek learning largely lost in West: halts open inquiry re nature of human beings
  Adherence to faith (Church)/mysticism/anti-intellectualism > role of human reason
  Preservation of Greek learning by Islamic scholarship

Dark → Middle Ages
  … rediscovery of Aristotle’s work → reawakening of inquiry
  Establishment of universities: debate and engagement w world
  Rise of Humanism

RENAISSANCE HUMANISM

Renaissance Humanism
  Social/intellectual focus on human beings
  4 prominent themes: (I) individualism, (II) personal religion,