A Polynomial Testing Principle


Recommended publications

-

{PDF EPUB} Doctor Who and the Green Death by Malcolm Hulke Target Practice #3: Doctor Who and the Green Death by Malcolm Hulke

Read Ebook {PDF EPUB} Doctor Who and the Green Death by Malcolm Hulke Target Practice #3: Doctor Who and the Green Death by Malcolm Hulke. Of the three books that helped make me into a fan, this one is probably my favorite. And why not? It’s a story that dares to talk about pollution and the effects of the coal industry long before climate change became the hot-button political issue it is today. Even with the inherent conservatism of the Pertwee era in mind, (as writers like Paul Cornell and Elizabeth Sandifer have suggested) to see Doctor Who take a firm step for environmentalism this early on is wonderful. And while I wish I could say the situation has improved since the story originally aired on TV in 1973, (and then published in 1975) the truth is, I can’t. Things have only gotten worse in the last 40-odd years, thanks to rising levels of pollution everywhere, and the general warming of the planet leading to significantly stronger hurricanes, melting polar ice caps, and other such disasters. In 2016, the nations of the world signed the Paris Climate Accords, with the goal of keeping the global average temperature from breaching 2 degrees Celsius, but then former president Donald Trump withdrew the United States from the accords, and spent his entire term in office destroying the environment, rather than preserving it. True, Joe Biden returned the US to the agreement after he took office in January, but whatever else he may have planned from a legislative standpoint will run up against a reduced Democratic majority in the House of Representatives, a nominally Democratic Senate, a far right judiciary, and various recalcitrant Republican-controlled states who don’t believe his presidency to be legitimate in the first place, all ready and willing to block even modest reforms, whether through legislation or executive actions. -

OMC | Data Export

Richard Scully, "Entry on: Doctor Who (Series, Season 9): The Time Monster by Paul Bernard, Terrance Dicks, Barry Letts, Robert Sloman", peer-reviewed by Elizabeth Hale and Daniel Nkemleke. Our Mythical Childhood Survey (Warsaw: University of Warsaw, 2018). Link: http://omc.obta.al.uw.edu.pl/myth-survey/item/92. Entry version as of October 01, 2021. Paul Bernard , Terrance Dicks , Barry Letts , Robert Sloman Doctor Who (Series, Season 9): The Time Monster United Kingdom (1972 ) TAGS: Atlantis Classical myth We are still trying to obtain permission for posting the original cover. General information Title of the work Doctor Who (Series, Season 9): The Time Monster Studio/Production Company British Broadcasting Corporation (BBC) Country of the First Edition United Kingdom Original Language English First Edition Date 1972 First Edition Details Episode 1: May 20, 1972 - Episode 6: June 24, 1972 Running time 150 min (6 episodes – 25 mins each) 2001 (VHS release together with ‘Colony in Space’, 1971); March Date of the First DVD or VHS 29, 2010 (DVD [Region 2]; August 5, 2008 (iTunes) Genre Science fiction, Television series, Time-Slip Fantasy* Target Audience Crossover Author of the Entry Richard Scully, University of New England, [email protected] Elizabeth Hale, University of New England, [email protected] Peer-reviewer of the Entry Daniel Nkemleke, Universite de Yaounde 1, [email protected] 1 This Project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 Research and Innovation Programme under grant agreement No 681202, Our Mythical Childhood... The Reception of Classical Antiquity in Children’s and Young Adults’ Culture in Response to Regional and Global Challenges, ERC Consolidator Grant (2016–2021), led by Prof. -

'Went the Day of the Daleks Well?' an Investigation Into the Role Of

Tony Keen ‘Invasion narratives in British television Science Fiction’ ‘Went the Day of the Daleks well?’ An investigation into the role of invasion narratives in shaping 1950s and 1960s British television Science Fiction, as shown in Quater- mass, Doctor Who and UFO If the function of art is to hold a mirror up to society, then science fiction (sf), through the distorted reflection it offers, allows the examination of aspects of society that might otherwise be too uncomfortable to confront. This essay aims to look at how invasion narratives, stories concerned with the invasion of Britain from outside, shaped three British science fiction series, and how those series interro- gated the narratives. The series will primarily be examined through aesthetic and social ap- proaches. Particular areas to be explored include the embracing and subverting of common assumptions about Britain’s attitude to invasion, and the differing attitudes to the military displayed. Introduction As an island nation, the prospect of invasion has always occupied a prominent place in the British popular imagination. According to Sellar and Yeatman, the two memorable dates in history are 55 BC and 1066, the dates of the invasions of Julius Caesar and William the Con- queror.1 Subsequent events such as the Spanish Armada of 1588 are also well-known mo- ments in history. It is inevitable that the prospect of invasion should produce speculative literature. Some works appeared around the time of Napoleon’s threatened invasion of 1803,2 but ‘invasion literature’ as a literary genre emerged out of the growing market for novels and short-story magazines, and is generally considered to have begun with the appearance of George Tomp- kyns Chesney’s 1871 story ‘The Battle of Dorking’,3 a story of the German conquest of Eng- land, prompted by the German victory in the Franco-Prussian War of 1871. -

'BBC Handbook 1976 Incorporating the Annual Report and Accounts 1974-75

'BBC Handbook 1976 Incorporating the Annual Report and Accounts 1974-75 www.americanradiohistory.com www.americanradiohistory.com www.americanradiohistory.com 9L61 310oQPu-BH Dgg www.americanradiohistory.com BBC Handbook 1976 incorporating the Annual Report and Accounts 1974 -75 British Broadcasting Corporation www.americanradiohistory.com Published by the British Broadcasting Corporation 35 Marylebone High Street, London W 1 M 4AA ISBN 563 12891 7 First published 1975 © BBC 1975 Printed in England by The Whitefriars Press Ltd London & Tonbridge Illustrated section printed by Sir Joseph Causton & Sons Ltd, London www.americanradiohistory.com Contents Foreword Sir Michael Swann 7 Tables Part one World radio and television receivers 54 Annual Report and Accounts External broadcasting 65 1974 - 75 Annual Report of the Broadcasting Council for Scotland 105 Introductory 9 Annual Report of the Broadcasting Council for Wales 110 Programmes 21 Appendices 115 Television 21 I Hours of output: television 116 Radio 25 Hours of output: radio 117 Party political broadcasts and broadcasts II Programme analysis television networks by Members of Parliament 32 118 News 34 Programme analysis radio networks 119 III School broadcasting 120 Religious broadcasting 35 IV Hours of broadcasting in the External Educational broadcasting 37 Services 123 Northern Ireland 42 V Rebroadcasts of External Services 124 English regional broadcasting 43 _Network production centres 44 Part two The English television regions 47 Programme review Appeals for charity 48 Audience -

Doctor Who: the Time Monster

Outside the bounds of this world lives Kronos, the Chronivore – a mysterious creature that feeds on time itself. Posing as a Cambridge professor the Master intends to use Kronos in his evil quest for power. To stop him, the Doctor and Jo must journey back in time to Ancient Atlantis and to a terrifying confrontation within the Time Vortex itself. But can even the Doctor save himself from the awesome might of the Time Monster? DOCTOR WHO THE TIME MONSTER Based on the BBC television series by Robert Sloman by arrangement with the British Broadcasting Corporation TERRANCE DICKS No. 102 in the Doctor Who Library A TARGET BOOK published by the Paperback Division of W. H. ALLEN & Co. PLC A Target Book Published in 1986 By the Paperback Division of W.H. Allen & Co. PLC 44 Hill Street, London W1X 8LB First Published in Great Britain by W.H. Allen & Co. PLC 1986 Novelisation Copyright © Terrance Dicks, 1985 Original script Copyright © Robert Sloman 1972 ‘Doctor Who’ series copyright © 1980 by the British Broadcasting Corporation, 1972, 1986 Printed in Great Britain by Anchor Brendon, Tiptree, Essex The BBC Producer of The Time Monster was Barry Letts, the director was Paul Bernard ISBN 0 426 20213 9 This book is sold subject to the condition that it shall not, by way of trade or otherwise, be lent, re-sold, hired out or otherwise circulated without the publisher's prior consent in any form of binding or cover other than that in which it is published and without a similar condition including this condition being imposed on the subsequent purchaser. -

TTZ10 Master Document Pp9.1

Table of Contents EDITOR ’S NOTE Editor’s NoteNote.............................................................2 A Christmas Carol Review Jamie Beckwith ............3 Though I only knew Patrick Troughton from SFX Weekender Will Forbes & A. Vandenbussche.4 “TheThe Five Doctors ” by the time I rediscovered Out of Time Nick Mellish .....................................7 Doctor Who when I was in my teens, I knew A Scribbled Note Jamie Beckwith ...........................9 I loved the Second Doctor. He always seemed Donna Noble vs Amy Pond Aya Vandenbussche .10 like the only Doctor one could reasonably go to for a hug. My admiration continued to The Real Pancho Villa Leslie McMurtry ...............13 grow; my first story from the Second Doctor’s Tea with Nyder: TTZ Interviews Peter Miles ........14 era—“SeedsSeeds of Death ”—may have left a rather The War Games’ Civil War Leslie McMurtry & Evan lukewarm impression, but the Missing Adven- L. Keraminas ...........................................................21 tures novel The Dark Path made up for it. Season 6(b) Matthew Kresal ....................................25 In this issue, we have gone monochrome, in No! Not The Mind Probe - The Web Planet Tony honor of Troughton, his last story “ The War Games ,” and of the significant part the Cross & Steve Sautter ............................................28 Second Doctor’s era had to play in influencing The Blue Peter Guide to The Web Planet Robert all subsequent incarnations of Doctor Who— a Beckwith ..................................................................30 -

The British Environmental Movement: the Development of an Environmental Consciousness and Environmental Activism, 1945-1975

Northumbria Research Link Citation: Wilson, Mark (2014) The British environmental movement: The development of an environmental consciousness and environmental activism, 1945-1975. Doctoral thesis, University of Northumbria. This version was downloaded from Northumbria Research Link: http://nrl.northumbria.ac.uk/id/eprint/21603/ Northumbria University has developed Northumbria Research Link (NRL) to enable users to access the University’s research output. Copyright © and moral rights for items on NRL are retained by the individual author(s) and/or other copyright owners. Single copies of full items can be reproduced, displayed or performed, and given to third parties in any format or medium for personal research or study, educational, or not-for-profit purposes without prior permission or charge, provided the authors, title and full bibliographic details are given, as well as a hyperlink and/or URL to the original metadata page. The content must not be changed in any way. Full items must not be sold commercially in any format or medium without formal permission of the copyright holder. The full policy is available online: http://nrl.northumbria.ac.uk/policies.html THE BRITISH ENVIRONMENTAL MOVEMENT: THE DEVELOPMENT OF AN ENVIRONMENTAL CONSCIOUSNESS AND ENVIRONMENTAL ACTIVISM, 1945- 1975 M Wilson PhD 2014 THE BRITISH ENVIRONMENTAL MOVEMENT: THE DEVELOPMENT OF AN ENVIRONMENTAL CONSCIOUSNESS AND ENVIRONMENTAL ACTIVISM, 1945- 1975 Mark Wilson A thesis submitted in partial fulfilment of the requirements of the University of Northumbria at Newcastle for the award of Doctor of Philosophy Research undertaken in the Faculty of Arts, Design & Social Sciences April 2014 1 ABSTRACT This work investigates the development of an environmental consciousness and environmental activism in Britain, 1945-1975. -

"Star Trek" and "Doctor Who"

Louisiana State University LSU Digital Commons LSU Historical Dissertations and Theses Graduate School 1987 A Critical Examination of the Mythological and Symbolic Elements of Two Modern Science Fiction Series: "Star Trek" and "Doctor Who". Gwendolyn Marie Olivier Louisiana State University and Agricultural & Mechanical College Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_disstheses Recommended Citation Olivier, Gwendolyn Marie, "A Critical Examination of the Mythological and Symbolic Elements of Two Modern Science Fiction Series: "Star Trek" and "Doctor Who"." (1987). LSU Historical Dissertations and Theses. 4373. https://digitalcommons.lsu.edu/gradschool_disstheses/4373 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Historical Dissertations and Theses by an authorized administrator of LSU Digital Commons. For more information, please contact [email protected]. INFORMATION TO USERS While the most advanced technology has been used to photograph and reproduce this manuscript, the quality of the reproduction is heavily dependent upon the quality of the material submitted. For example: ® Manuscript pages may have indistinct print. In such cases, the best available copy has been filmed. • Manuscripts may not always be complete. In such cases, a note will indicate that it is not possible to obtain missing pages. • Copyrighted material may have been removed from the manuscript. In such cases, a note will indicate the deletion. Oversize materials (e.g., maps, drawings, and charts) are photographed by sectioning the original, beginning at the upper left-hand corner and continuing from left to right in equal sections with small overlaps. -

John Levene and Damaris Hayman

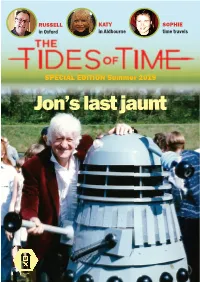

RUSSELL KATY SOPHIE in Oxford in Aldbourne time travels SPECIAL EDITION Summer 2019 Jon’s last jaunt [email protected] [email protected] oxforddoctorwho-tidesoftime.blog users.ox.ac.uk/~whosoc twitter.com/outidesoftime twitter.com/OxfordDoctorWho facebook.com/outidesoftime facebook.com/OxfordDoctorWho Special Edition Summer 2019 CONVENTIONS AND OTHER EVENTS “Ah Doctor!” “Am I early for something?” —The Doctor to the Valeyard, had The Trial of a Time Lord Part One been transmitted in September 1985 HIS EDITION OF THE TIDES OF TIME EXISTS OUTSIDE ITS USUAL TSPACE-TIME CONTINUUM. It arises as a consequence of a decision made during the preparation of number 43 in April. As often, while laying the issue out it became clear that we had overshot a practical page count for printing and were some distance short of the next benchmark. James had written a review of the Time Flight convention at Banbury, and this seemed the feature which it was most practical to exclude, but it would be a waste not to publish it, especially as the pages had already been designed. Cover detail: Jon Pertwee at The idea arose of combining the Time Flight Aldbourne, 1996. Image by reports with those from Bedford Charity Who Stephen Broome Con Five, not yet then written up, and having a convention and events supplement as a download. Perhaps it could also include a look back at a past Oxford Doctor Who Society speaker event, specifi cally a very remote one, Sophie Aldred’s visit in 1991 from which several pictures existed. Matthew Kilburn was due to be involved in DePaul University’s visit to Aldbourne again, this time on a day when an event featuring the cast was going to be held there; and then Mark Learey got in touch with a piece about the April 1996 Aldbourne reunion, the last fan event which Jon Pertwee attended. -

Mycelial Networker

THE OF Oxford Number 41 Doctor Who Trinity 2018 Society MYCELIAL NETWORKER Fungal reproduction and the Time Lords • Allegory in Ghost Light The Twelfth Doctor as storyteller • Twice Upon a Time Varsity Quiz 2018 • Jodie Whittaker • Colin Baker • Big Finish Number 41 Trinity Term 2018 Published by the OXFORD DOCTOR WHO SOCIETY [email protected] Contents 3 Editorial: ‘Change was possible. Change was here’ 4 Xenobiology Special: The Science of Whittaker J A 6 ‘Once Upon A Time, The End’: the Twelfth Doctor, Storyteller W S 11 Haiku for Smile W S 12 ‘Nobody here but us chickens’: The Empty Child B H 14 Running Towards the Future: new and old novelizations J W 20 The Doctor Falls No More: regeneration in Twice Upon a Time I B 23 Fiction: A Stone’s Throw, Part Three J S 29 2018 Varsity Quiz J A M K 36 The Space Museum W S 37 Looming Large S B R C 44 Paradise Lost: John Milton, unsuspected Doctor Who fan R B 46 The Bedford Boys: Bedford CharityCon 4-I I B 48 Mad Dogs and TARDIS Folk: the Doctor in Oxford J A 54 Mike Tucker: Bedford Charity Con 4-II I B 56 Big Who Listen: The Sirens of Time J S, R C, I B, A K, J A, M G, J P. M, P H 64 The Bedford Tales: Bedford CharityCon 4-III A K 67 Terror of the Zygons W S 68 Jodie Whittaker is the Doctor and the World is a Wonderful Place G H 69 The Mysterious Case of Gabriel Chase: Ghost Light A O’D Edited by James Ashworth and Matthew Kilburn Editorial address [email protected] Editorial assistance from Ian Bayley, Nancy Epton, Stephen Bell and William Shaw. -

Foreword: Thinking About Religion and Doctor Who When BBC

Foreword: Thinking About Religion and Doctor Who When BBC science fiction show Doctor Who first hit British television screens on 23rd November 1963, there was little expectation that it would transform from a teatime slot filler into a behemoth that would still be running (at the time of writing) some fifty two years later. Neither could its writers, actors and producers suspect that it would become a staple of British popular culture and identity, or that it would generate a worldwide fandom that continues to celebrate its love of the show in everything from DVD and magazine sales to fan fiction; cosplay to Doctor Who themed weddings. For those with an interest in British religious history, the date of the programme’s creation might appear to be suggestive. 1963 was, after all, the year pinpointed by Callum Brown as the “death of Christian Britain” (Brown 2009), and while many would claim that Brown’s obituary was somewhat premature (e.g. Clark 2012; Green 2010), it is impossible to argue that the nature of faith and belief in Britain hasn’t changed greatly since the TARDIS doors first swung open. This issue of Implicit Religion attempts to examine some of these changes in terms of the religious practices, implicit and explicit, that have developed around Doctor Who over the course of its long history. The authors of the articles included here have looked at the way in which explicit religious practices – such as reading the programme as a form of Buddhist koan, for example – might challenge the sacred/secular dichotomy which is still presumed in much scholarship.1 Others have examined the show more directly through the lens of implicit religion. -

January 2010 by the Oxford Doctor Who Discussions of the Type That Form This Magazine

The Tides of Time The Passage of Time Issue 33 Hilary Term 2010 It has been far too long since an edition of Tides has been published. It is, though, an interesting time for it. Issue 32 was published almost exactly four years Editor Adam Povey ago. We'd just met David Tennant's Tenth Doctor in [email protected] the first Christmas Special. We were all beginning to realise that Doctor Who was not just back but was once more a national institution. As I write this Contents: editorial, The End of Time Part 2 is mere hours away, with Tennant's imminent death opening a new world possibilities in the form of Matt Smith and new script The Prophet 3 editor Steven Moffat. A tribute to Barry Letts (1925 – 2009) from Matthew Kilnurn Also, this term is the 20th anniversary of the foundation of this fair society. We will likely celebrate Five Things Wrong with it by making Matthew stand up and recount his David Tennant's Doctor 9 memory of its great past rather more frequently than Thomas Keyton presents a brief argument against usual. Roll on 20 more! David Tennant's portrayal of the Tenth Doctor. Hopefully the next edition of Tides will be published Time and Relationships before Matt Smith regenerates, but there's only one Diverting in Space 11 way that will happen – if YOU contribute. This Adam Povey provides a brief ramble through the magazine is entirely built from voluntary common tropes of the work of Steven Moffat. contributions and the only reason you weren't reading this sooner is it took four years to get The Might 200 13 together enough contributions to even get close to Jonathan Nash presents his opinions on the hefty printing an edition.