Automatic Assessment of Singing Voice Pronunciation: a Case Study with Jingju Music

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Investigating Translation for Enhancing Artistic Dialogue

Li Pan. Journal of Universal Language 12-1, March 2011, 111-156. Foreignized or Domesticated: Investigating Translation for Enhancing Artistic Dialogue. Li Pan, University of Macau & Guangdong University of Foreign Studies (English Department, Faculty of Social Sciences and Humanities, University of Macau, Macao / Faculty of English Language and Culture, Guangdong University of Foreign Studies, Guangzhou, China; Phone: 853-62514585 / 86-13580300028). Received Nov. 2010; Reviewed Dec. 2010; Revised version received Dec. 2010. Abstract: Translation plays a significant role in intercultural communication for it not only enables two cultures to converse with each other but also helps to reveal the disparity and unbalance between cultures. This is especially true in the case of translation of texts for the performing arts like theatre, dance, opera, and music, etc., which are usually embedded with condensed culture specific expressions and concepts. Examining translation strategies used in rendering such texts in practice can be quite revealing about the unbalance and inequality of interaction among or between cultures in a given community since the strategies employed by translators reflect the social cultural context in which texts are produced (Bassnett & Trivedi 1999: 6). It is thus interesting to investigate the translation practice in an international community like Hong Kong, a city well-known as a melting pot which is susceptible to the influences of both the Eastern and the Western cultures, and to find out which culture is more influential on translation practice in such a community and what strategies are deployed in translating texts for the performing arts in this community.
However, up till now, not much research has been carried out addressing the impact of the cultural disparity or unbalance on the translation of texts for the arts in Hong Kong, nor the actual practice of translating promotional texts for the performing arts.

Top 200 Most Requested Songs

Top 200 Most Requested Songs Based on millions of requests made through the DJ Intelligence® music request system at weddings & parties in 2013 RANK ARTIST SONG 1 Journey Don't Stop Believin' 2 Cupid Cupid Shuffle 3 Black Eyed Peas I Gotta Feeling 4 Lmfao Sexy And I Know It 5 Bon Jovi Livin' On A Prayer 6 AC/DC You Shook Me All Night Long 7 Morrison, Van Brown Eyed Girl 8 Psy Gangnam Style 9 DJ Casper Cha Cha Slide 10 Diamond, Neil Sweet Caroline (Good Times Never Seemed So Good) 11 B-52's Love Shack 12 Beyonce Single Ladies (Put A Ring On It) 13 Maroon 5 Feat. Christina Aguilera Moves Like Jagger 14 Jepsen, Carly Rae Call Me Maybe 15 V.I.C. Wobble 16 Def Leppard Pour Some Sugar On Me 17 Beatles Twist And Shout 18 Usher Feat. Ludacris & Lil' Jon Yeah 19 Macklemore & Ryan Lewis Feat. Wanz Thrift Shop 20 Jackson, Michael Billie Jean 21 Rihanna Feat. Calvin Harris We Found Love 22 Lmfao Feat. Lauren Bennett And Goon Rock Party Rock Anthem 23 Pink Raise Your Glass 24 Outkast Hey Ya! 25 Isley Brothers Shout 26 Sir Mix-A-Lot Baby Got Back 27 Lynyrd Skynyrd Sweet Home Alabama 28 Mars, Bruno Marry You 29 Timberlake, Justin Sexyback 30 Brooks, Garth Friends In Low Places 31 Lumineers Ho Hey 32 Lady Gaga Feat. Colby O'donis Just Dance 33 Sinatra, Frank The Way You Look Tonight 34 Sister Sledge We Are Family 35 Clapton, Eric Wonderful Tonight 36 Temptations My Girl 37 Loggins, Kenny Footloose 38 Train Marry Me 39 Kool & The Gang Celebration 40 Daft Punk Feat. -

Bobby Karl Works the Room Chapter 323 There Was Joy in the Schermerhorn Associated with Inductee Chet Atkins, Symphony Center Monday Night (10/12)

page 1 Wednesday, October 14, 2009 Bobby Karl Works The Room Chapter 323 There was joy in the Schermerhorn associated with inductee Chet Atkins, Symphony Center Monday night (10/12). both in tandem with Paul Yandell and Performer after performer at the solo. third annual Musicians Hall of Fame Chet’s daughter, Merle Atkins ceremony conveyed just how much pure Russell accepted. “It’s a wonderful pleasure there is in making the music night,” she said. “It was all about music, you love. for Daddy. This is huge.” “I’ve been a very blessed person, Harold Bradley described inductee working in the business I love,” said Foster as “a nonconformist” and “a producer inductee Fred Foster. visionary” for having signed and “When you do that, you’re produced such talents as Roy not working, you’re Orbison, Dolly Parton and playing.” Kris Kristofferson, all of “For all the loyal fans, whom appeared in a video thank you for keeping the tribute. Fred-produced spirit alive,” said inductee Tony Joe White got a Billy Cox after performing a standing ovation for a super blistering rock set with his funky workout on “Polk Salad group, featuring guest drummer Annie.” Chris Layton from Stevie Ray “This is a great honor Vaughn’s band Double Trouble. that goes in my memory book for Gary Puckett gleefully turned many visits in the future,” said Fred. the mic over to the audience for a Al Jardine of The Beach Boys sing-along rendition of “Young Girl.” He enthusiastically sang “Help Me Rhonda” inducted percussion, keyboard and vibes before inducting Dick Dale, the King of “musician’s musician” Victor Feldman. -

Songs by Artist

Songs by Artist Title Title Title Title - Be 02 Johnny Cash 03-Martina McBride 05 LeAnn Rimes - Butto Folsom Prison Blues Anyway How Do I Live - Promiscuo 02 Josh Turner 04 Ariana Grande Ft Iggy Azalea 05 Lionel Richie & Diana Ross - TWIST & SHO Why Don't We Just Dance Problem Endless Love (Without Vocals) (Christmas) 02 Lorde 04 Brian McKnight 05 Night Ranger Rudolph The Red-Nosed Reindeer Royals Back At One Sister Christian Karaoke Mix (Kissed You) Good Night 02 Madonna 04 Charlie Rich 05 Odyssey Gloriana Material Girl Behind Closed Doors Native New Yorker (Karaoke) (Kissed You) Good Night Wvocal 02 Mark Ronson Feat.. Bruno Mars 04 Dan Hartman 05 Reba McIntire Gloriana Uptown Funk Relight My Fire (Karaoke) Consider Me Gone 01 98 Degress 02 Ricky Martin 04 Goo Goo Dolls 05 Taylor Swift Becouse Of You Livin' La Vida Loca (Dance Mix) Slide (Dance Mix) Blank Space 01 Bob Seger 02 Robin Thicke 04 Heart 05 The B-52's Hollywood Nights Blurred Lines Never Love Shack 01 Bob Wills And His Texas 02 Sam Smith 04 Imagine Dragons 05-Martina McBride Playboys Stay With Me Radioactive Happy Girl Faded Love 02 Taylor Swift 04 Jason Aldean 06 Alanis Morissette 01 Celine Dion You Belong With Me Big Green Tractor Uninvited (Dance Mix) My Heart Will Go On 02 The Police 04 Kellie Pickler 06 Avicci 01 Christina Aguilera Every Breath You Take Best Days Of Your Life Wake Me Up Genie In A Bottle (Dance Mix) 02 Village People, The 04 Kenny Rogers & First Edition 06 Bette Midler 01 Corey Hart YMCA (Karaoke) Lucille The Rose Karaoke Mix Sunglasses At Night Karaoke Mix 02 Whitney Houston 04 Kim Carnes 06 Black Sabbath 01 Deborah Cox How Will I Know Karaoke Mix Bette Davis Eyes Karaoke Mix Paranoid Nobody's Supposed To Be Here 02-Martina McBride 04 Mariah Carey 06 Chic 01 Gloria Gaynor A Broken Wing I Still Believe Dance Dance Dance (Karaoke) I Will Survive (Karaoke) 03 Billy Currington 04 Maroon 5 06 Conway Twitty 01 Hank Williams Jr People Are Crazy Animals I`d Love To Lay You Down Family 
Tradition 03 Britney Spears 04 No Doubt 06 Fall Out Boy 01 Iggy Azalea Feat. -

David Lee Murphy Bio with Picture 2018

Million-selling singer-songwriter David Lee Murphy had no plans to make a new record until a country superstar made them for him. “I’ve been friends and written songs with Kenny (Chesney) for years,” Murphy reflects. “I sent him some songs for one of his albums a couple of years ago, and he called me up. He goes, ‘Man, you need to be making a record. I could produce it with Buddy Cannon, and I think people would love it.’ It’s hard to say no to Kenny Chesney when he comes up with an idea like that.” Murphy, whose songs “Dust on the Bottle” and “Party Crowd” continue to be staples at country radio, could have easily filled the album with hits he’s written for Chesney (“’Til It’s Gone,” “Living in Fast Forward,” “Live a Little”), Jason Aldean (“Big Green Tractor,” “The Only Way I Know How”), Thompson Square (GRAMMY-nominated “Are You Gonna Kiss Me Or Not”), Jake Owen (“Anywhere With You”), or Blake Shelton (“The More I Drink”). But Chesney had other ideas. “Kenny was really influential in the songs that we picked,” says Murphy. “Over the course of the next year or so, we got together, talked about it and picked out songs. I wanted to record songs of mine that people haven’t heard before, that are new. We wanted to make the kind of album that you would listen to if you were camping or out on a lake, fishing. Or sitting anywhere, just having a good time.” Titled No Zip Code, the new David Lee Murphy collection has already yielded a hit single and duet with Chesney, “Everything’s Gonna Be Alright.” The collection also contains such good-time, party-hearty anthems as “Get Go,” “Haywire” and “That’s Alright.” The rocking “Way Gone” is a female-empowerment barn burner.

Request List 619 Songs 01012021

PAT OWENS 3 Doors Down Bill Withers Brothers Osbourne (cont.) Counting Crows Dierks Bentley (cont.) Elvis Presley (cont.) Garth Brooks (cont.) Hank Williams, Jr. Be Like That Ain't No Sunshine Stay A Little Longer Accidentally In Love I Hold On Can't Help Falling In Love Standing Outside the Fire A Country Boy Can Survive Here Without You Big Yellow Taxi Sideways Jailhouse Rock That Summer Family Tradition Kryptonite Billy Currington Bruce Springsteen Long December What Was I Thinking Little Sister To Make You Feel My Love Good Directions Glory Days Mr. Jones Suspicious Minds Two Of A Kind Harry Chapin 4 Non Blondes Must Be Doin' Somethin' Right Rain King Dion That's All Right (Mama) Two Pina Coladas Cat's In The Cradle What's Up (What’s Going On) People Are Crazy Bryan Adams Round Here Runaround Sue Unanswered Prayers Pretty Good At Drinking Beer Heaven Eric Church Hinder 7 Mary 3 Summer of '69 Craig Morgan Dishwalla Drink In My Hand Gary Allan Lips Of An Angel Cumbersome Billy Idol Redneck Yacht Club Counting Blue Cars Jack Daniels Best I Ever Had Rebel Yell Buckcherry That's What I Love About Sundays Round Here Buzz Right Where I Need To Be Hootie and the Blowfish Aaron Lewis Crazy Bitch This Ole Boy The Divinyls Springsteen Smoke Rings In The Dark Hold My Hand Country Boy Billy Joel I Touch Myself Talladega Watching Airplanes Let Her Cry Only The Good Die Young Buffalo Springfield Creed Only Wanna Be With You AC/DC Piano Man For What It's Worth My Sacrifice Dixie Chicks Eric Clapton George Jones Time Shook Me All Night Still Rock And -

10 Must-Attend Phoenix Concerts This Fall

10 Must-Attend Phoenix Concerts This Fall Written by Tamara Kraus From pop princess Katy Perry to an American favorite The Eagles, everyone has a chance to rock out to their favorite bands through December. Here’s our concert roundup that will have you singing through the end of the year. September Jason Aldean, Florida Georgia Line and Tyler Farr Dress in your best western wear for a country concert with third time’s the charm. Platinum recording artist Aldean will sing favorites like 2008’s “Big Green Tractor” and 2010’s “Dirt Road Anthem.” Country rock fans will get their fix with FGL’s feel good songs like “Cruise” and “This is How We Roll.” And the show wouldn’t be complete without Farr’s contemporary country from his 2013 album “Redneck Crazy.” The show will be at Ak-Chin Pavillion on Sept. 20 at 7:30 p.m. Click here for more info. Katy Perry Get ready to sing and dance to your favorite girl power anthems at “The Prismatic World Tour” with “Closer” musical guests Tegan and Sara. Join the girls at Jobing.com Arena on Sept. 25 at 7 p.m. Click here for more info. 1 / 4 10 Must-Attend Phoenix Concerts This Fall Written by Tamara Kraus Drake Vs. Lil Wayne Hear a unique spin on battle of the bands with the “Forever” and “Lollipop” rappers at Ak-Chin Pavillion on Sept. 25 at 7 p.m. Visit Live Nation for tickets. October The Eagles More than four decades ago, the rock band brought us timeless hits “Hotel California” and “Desperado.” The concert starts at 8 p.m. -

CONNECT SELECT WEEKEND EDITION a Wide Selection of Interesting and Trending Stories – Rip ‘Em, Read ‘Em and Post ‘Em! FRIDAY, FEBRUARY 26Th, 2021

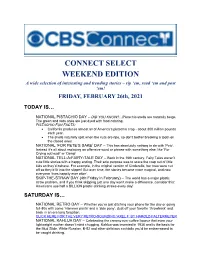

CONNECT SELECT WEEKEND EDITION A wide selection of interesting and trending stories – rip ‘em, read ‘em and post ‘em! FRIDAY, FEBRUARY 26th, 2021 TODAY IS… NATIONAL PISTACHIO DAY – DID YOU KNOW?…Pistachio shells are naturally beige. The green and reds ones are just dyed with food coloring. PISTACHIO FUN FACTS: California produces almost all of America’s pistachio crop - about 300 million pounds each year. The shells naturally split when the nuts are ripe, so don’t bother breaking a tooth on the closed ones. NATIONAL ‘FOR PETE’S SAKE’ DAY – This has absolutely nothing to do with ‘Pete’. Instead it’s all about replacing an offensive word or phrase with something else: like ‘For Crying out loud!’ or ‘Dang!’ NATIONAL TELL-A-FAIRY-TALE DAY – Back in the 16th century, Fairy Tales weren’t cute little stories with a happy ending. Their sole purpose was to scare the crap out of little kids so they’d behave. For example, in the original version of Cinderella, her toes were cut off so they’d fit into the slipper! But over time, the stories became more magical, and now everyone ‘lives happily ever after’. SKIP-THE-STRAW DAY (4th Friday in February) – The world has a major plastic straw problem, and if you think skipping just one day won’t make a difference, consider this: Americans use half a BILLION plastic drinking straws every day! SATURDAY IS… NATIONAL RETRO DAY – Whether you’re just ditching your phone for the day or going full-80s with some ‘Hammer pants’ and a ‘side pony’, dust off your favorite ‘throwback’ and bask in an era long forgotten. -

Garth Songs: Ainʼt Going Down Rodeo the Dance Papa Loves Mama the River Thunder Rolls That Summer Much Too Young Baton Rouge

Garth songs: Ainʼt going down Rodeo The dance Papa loves mama The river Thunder rolls That summer Much too young Baton Rouge Tim McGraw Live like you were dying Meanwhile back at mamas Donʼt take the girl The highway donʼt care Sleep on it Diamond rings and old barstools Red rag top Shot gun rider Green grass grows I like it I love it Something like that Miranda Lambert: Baggage claim Tin man Vice Mamas broken heart Pink sunglasses Gunpowder and lead Kerosene Little red wagon House that built me Famous in a small town Hell on heels Alan Jackson: Chattahootchie Here in the real world 5 oʼclock somewhere Remember when Mercury blues Donʼt rock the jukebox Chasing that neon rainbow Summertime blues Must be love Whoʼs cheating who Vince Gill: Liza Jane Cowgirls do Dont let our love start slipping away I still believe in you Trying to get over you One more last chance Whenever you come around Little Adriana Shania Twain: Still the one Whoʼs bed have your boots been under Any man of mine From this moment on No one needs to know Brooks and Dunn: Neon moon Rock my world My Maria Boot scootin boogie Brand new man Red dirt road Hillbilly delux Long goodbye Believe Getting better all the time George strait All my exes Fireman The chair Check yes or no Amarillo by morning Ocean front property Carrying your love with me Write this down Loves gonna make it Blake Shelton God gave me you Boys round here Home Neon light Some beach Old red Sangria Turning me on Who are you when Iʼm not looking Lonely tonight Toby Keith: Shoulda been a cowboy Red solo cup -

Atlantic Crossing

Artists like WARRENAI Tour Hard And Push Tap Could The Jam Band M Help Save The Music? ATLANTIC CROSSING 1/0000 ZOV£-10806 V3 Ii3V39 9N01 MIKA INVADES 3AV W13 OVa a STOO AlN33219 AINOW AMERICA IIII""1111"111111""111"1"11"11"1"Illii"P"II"1111 00/000 tOV loo OPIVW#6/23/00NE6TON 00 11910-E N3S,,*.***************...*. 3310NX0A .4 www.americanradiohistory.com "No, seriously, I'm at First Entertainment. Yeah, I know it's Saturday:" So there I was - 9:20 am, relaxing on the patio, enjoying the free gourmet coffee, being way too productive via the free WiFi, when it dawned on me ... I'm at my credit union. AND, it's Saturday morning! First Entertainment Credit Union is proud to announce the opening of our newest branch located at 4067 Laurel Canyon Blvd. near Ventura Blvd. in Studio City. Our members are all folks just like you - entertainment industry people with, you know... important stuff to do. Stop by soon - the java's on us, the patio is always open, and full - service banking convenience surrounds you. We now have 9 convenient locations in the Greater LA area to serve you. All branches are FIRSTENTERTAINMENT ¡_ CREDITUNION open Monday - Thursday 8:30 am to 5:00 pm and An Alternative Way to Bank k Friday 8:30 am to 6:00 pm and online 24/7. Plus, our Studio City Branch is also open on Saturday Everyone welcome! 9:00 am to 2:00 pm. We hope to see you soon. If you're reading this ad, you're eligible to join. -

JIAO, WEI, D.M.A. Chinese and Western Elements in Contemporary

JIAO, WEI, D.M.A. Chinese and Western Elements in Contemporary Chinese Composer Zhou Long’s Works for Solo Piano Mongolian Folk-Tune Variations, Wu Kui, and Pianogongs. (2014) Directed by Dr. Andrew Willis. 136 pp. Zhou Long is a Chinese American composer who strives to combine traditional Chinese musical techniques with modern Western compositional ideas. His three piano pieces, Mongolian Folk-Tune Variations, Wu Kui, and Pianogongs, each display his synthesis of Eastern and Western techniques. A brief cultural, social and political review of China throughout Zhou Long’s upbringing will provide readers with a historical perspective on the influence of Chinese culture on his works. Study of Mongolian Folk-Tune Variations will reveal the composer’s early attempts at Western structure and harmonic ideas. Wu Kui provides evidence of the composer’s desire to integrate Chinese cultural ideas with modern and dissonant harmony. Finally, the analysis of Pianogongs will provide historical context to the use of traditional Chinese percussion instruments and his integration of these instruments with the piano. Zhou Long comes from an important generation of Chinese composers, including Chen Yi and Tan Dun, who were able to leave China and achieve great success with the combination of Eastern and Western ideas. This study will deepen the readers’ understanding of the Chinese cultural influences in Zhou Long’s piano compositions.
CHINESE AND WESTERN ELEMENTS IN CONTEMPORARY CHINESE COMPOSER ZHOU LONG’S WORKS FOR SOLO PIANO MONGOLIAN FOLK-TUNE VARIATIONS, WU KUI, AND PIANOGONGS by Wei Jiao A Dissertation Submitted to the Faculty of the Graduate School at The University of North Carolina at Greensboro in Partial Fulfillment of the Requirements for the Degree Doctor of Musical Arts Greensboro 2014 Approved by _________________________________ Committee Chair © 2014 Wei Jiao APPROVAL PAGE This dissertation has been approved by the following committee of the Faculty of The Graduate School at The University of North Carolina at Greensboro. -

The Aesthetics of Chinese Classical Theatre

THE AESTHETICS OF CHINESE CLASSICAL THEATRE - A PERFORMER’S VIEW Yuen Ha TANG A thesis submitted for the degree of Doctor of Philosophy of The Australian National University. March, 2016 © Copyright by Yuen Ha TANG 2016 All Rights Reserved This thesis is based entirely upon my own research. Yuen Ha TANG ACKNOWLEDGMENT I owe a debt of gratitude to my supervisor Professor John Minford, for having encouraged me to undertake this study in the first place and for guiding my studies over several years. He gave me generously of his time and knowledge. Since I first became their student in Shanghai in the 1980s, my teachers Yu Zhenfei and Zhang Meijuan (and others) inspired me with their passionate love for the theatre and their unceasing quest for artistic refinement. I am deeply grateful to them all for having passed on to me their rich store of artistry and wisdom. My parents, my sister and her family, have supported me warmly over the years. My mother initiated me in the love of the theatre, and my father urged me to pursue my dream and showed me the power of perseverance. My thanks also go to Geng Tianyuan, fellow artist over many years. His inspired guidance as a director has taught me a great deal. Zhang Peicai and Sun Youhao, outstanding musicians, offered their help in producing the video materials of percussive music. Qi Guhua, my teacher of calligraphy, enlightened me on this perennial and fascinating art. The Hong Kong Arts Development Council has subsidized my company, Jingkun Theatre, since 1998. My assistant Lily Lau has provided much valuable help and my numerous friends and students have given me their constant loyalty and enthusiasm.