A True Lie About Reed College: U.S. News Ranking



Recommended publications


Lawrence University (1-1, 0-0 MWC North) at Beloit College (1-1, 0-0

Lawrence University (1-1, 0-0 MWC North) at Beloit College (1-1, 0-0 MWC North) Saturday, September 19, 2015, 1 p.m., Strong Stadium, Beloit, Wisconsin
Webcast: A free video webcast is available at: http://portal.stretchinternet.com/lawrence/.
The Series: Lawrence holds a 58-36-5 edge in a series that dates all the way back to 1899. This year marks the 100th game in the series, which is the second-longest rivalry for Lawrence. The Vikings have played 114 games against Ripon, and that series dates to 1893. Lawrence has won three of the last …
… making his first start, was 23-for-36 for 274 yards and three touchdowns. Mandich, a senior receiver from Green Bay, had a career-high eight catches for 130 yards and a touchdown for the Vikings. The Lawrence defense limited Beloit to 266 yards and made a key stop late in the game to preserve the victory. Linebacker Brandon Taylor paced the Lawrence defense with 14 tackles and two pass breakups. Beloit was down by eight but got an interception on a tipped ball and …
… ing possession and moved 75 yards in 12 plays for the game’s first touchdown. Byrd hit freshman receiver and Appleton native Cole Erickson with an eight-yard touchdown pass to complete the drive and give Lawrence a 7-3 lead. The Vikings then put together another long scoring drive early in the second quarter. Lawrence went 80 yards in eight plays and Byrd found Trevor Spina with a 24-yard touchdown pass for a 14-3 Lawrence lead with 11:53 left in the first half.

Below Is a Sampling of the Nearly 500 Colleges, Universities, and Service Academies to Which Our Students Have Been Accepted Over the Past Four Years

Below is a sampling of the nearly 500 colleges, universities, and service academies to which our students have been accepted over the past four years. Allegheny College Connecticut College King’s College London American University Cornell University Lafayette College American University of Paris Dartmouth College Lehigh University Amherst College Davidson College Loyola Marymount University Arizona State University Denison University Loyola University Maryland Auburn University DePaul University Macalester College Babson College Dickinson College Marist College Bard College Drew University Marquette University Barnard College Drexel University Maryland Institute College of Art Bates College Duke University McDaniel College Baylor University Eckerd College McGill University Bentley University Elon University Miami University, Oxford Binghamton University Emerson College Michigan State University Boston College Emory University Middlebury College Boston University Fairfield University Morehouse College Bowdoin College Florida State University Mount Holyoke College Brandeis University Fordham University Mount St. Mary’s University Brown University Franklin & Marshall College Muhlenberg College Bucknell University Furman University New School, The California Institute of Technology George Mason University New York University California Polytechnic State University George Washington University North Carolina State University Carleton College Georgetown University Northeastern University Carnegie Mellon University Georgia Institute of Technology -

COLLEGE VISITS College Visits

COLLEGE VISITS College Visits Why Visit Colleges? Visiting colleges and going on official campus tours can be a great way to get a sense of whether a particular school would be a good fit. If you’re not even sure where to begin or don’t know what you want in a college environment, campus visits are a great way to figure out what you really care about in a college. If you’re not sure whether you want a large research university or a small liberal arts college, or whether you want to be in an urban, suburban, or rural location, try visiting a handful of schools in the area. We are very fortunate in the Pacific Northwest because students don’t have to venture far to get a glimpse of college life at different types of post-secondary institutions. For example, a student might visit the University of Washington, Western Washington University, and Whitman; or perhaps Washington State University, Seattle University, and Lewis & Clark; or maybe the University of Puget Sound, Reed, Willamette, and Montana State. Visiting a small mix of different schools will give you an idea of the size, location, campus environment, and surrounding community that resonates with you. Scheduling the Visit Start by exploring the college’s admissions website to see what its visit policies are. Some schools might require advance notice, while others are happy to welcome anyone at any time. While it can be tempting to visit only the most prestigious schools on your list, the colleges you choose to visit should be in the realm of possibility for you.

2014 NW5C Annual Report

Northwest Five Consortium Stephen Thorsett, President Willamette University 900 State St., Salem, OR 97301 Annual Report to The Andrew W. Mellon Foundation January 1 - December 31, 2013 Grant: 41100697 * March 27, 2014 I. Introduction With the generous support of The Andrew W. Mellon Foundation, Willamette University, Whitman College, Reed College, University of Puget Sound, and Lewis & Clark College are engaged in collaborative efforts through the establishment of a new regional alliance, the Northwest Five Consortium (NW5C). Working toward the regular sharing of expertise and resources, the mission of the NW5C is to enhance the student academic experience at our five liberal arts colleges through enrichment and development of faculty as teacher-scholars. In service of this mission, the Consortium provides the infrastructure to support collaborative efforts among its member institutions, and strives to create a vibrant and sustainable intellectual community of scholars in the Pacific Northwest. The NW5C has been very active in the second year of a four-year implementation grant following a 2011 planning grant. This report highlights information regarding NW5C events such as the annual conference and faculty workshops, report-outs of completed projects from the 2013 Fund for Collaborative Inquiry (FCI) grant cycle, an overview of the grant cycle for the 2014 FCI faculty projects, confirmation of the NW5C governance structure, the developing connections between the NW5C and other regional initiatives and national organizations, consortium participation statistics, and the further development of NW5C assessment protocols and review. The progress realized in our initial work together demonstrates the value in our increasing inter-connectivity. II. Project Components To date, over 140 consortium faculty and staff members have attended NW5C events. -

Lawrence University

Lawrence University a college of liberal arts & sciences a conservatory of music 1425 undergraduates 165 faculty an engaged and engaging community internationally diverse student-centered changing lives a different kind of university 4 28 Typically atypical Lawrentians 12 College should not be a one-size-fits-all experience. Five stories of how Find the SLUG in this picture. individualized learning changes lives (Hint: It’s easy to find if you know at Lawrence. 10 what you’re looking for.) Go Do you speak Vikes! 19 Lawrentian? 26 Small City 20 Music at Lawrence Big Town 22 Freshman Studies 23 An Engaged Community 30 Life After Lawrence 32 Admission, Scholarship & Financial Aid Björklunden 18 29 33 Lawrence at a Glance Find this bench (and the serenity that comes with it) at Björklunden, Lawrence’s 425-acre A Global Perspective estate on Door County’s Lake Michigan shore. 2 | Lawrence University Lawrence University | 3 The Power of Individualized Learning College should not be a one-size-fits-all experience. Lawrence University believes students learn best when they’re educated as unique individuals — and we exert extraordinary energy making that happen. Nearly two- thirds of the courses we teach at Lawrence have the optimal (and rare) student-to-faculty ratio of 1 to 1. You read that correctly: that’s one student working under the direct guidance of one professor. Through independent study classes, honors projects, studio lessons, internships and Oxford-style tutorials — generally completed junior and senior year — students have abundant -

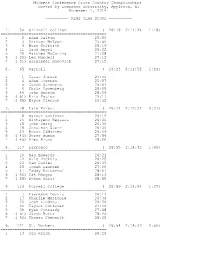

Midwest Conference Cross Country Championships Hosted by Lawrence University, Appleton, WI November 1, 2014

Midwest Conference Cross Country Championships
Hosted by Lawrence University, Appleton, WI
November 1, 2014
MENS TEAM SCORE
1. 54 Grinnell College (26:19 2:11:34 1:18)
   1  2     Adam Dalton         25:50
   2  3     Anthony McLean      25:55
   3  9     Evan Griffith       26:19
   4  11    Zach Angel          26:22
   5  29    Matthew McCarthy    27:08
   6  (30)  Lex Mundell         27:12
   7  (31)  Alexander Monovich  27:15
2. 65 Carroll (26:23 2:11:52 2:29)
   1  1     Isaac Jordan        25:40
   2  4     Adam Joerres        25:57
   3  5     Jacob Sundberg      26:01
   4  6     Chris Pynenberg     26:05
   5  49    Jake Hanson         28:09
   6  (61)  Eric Paulos         29:17
   7  (65)  Bryce Pierson       29:32
3. 78 Lake Forest (26:31 2:12:32 0:37)
   1  8     Mansur Soeleman     26:12
   2  14    Sintayehu Regassa   26:26
   3  15    John Derry          26:30
   4  18    Jonathan Stern      26:35
   5  23    Rocco DiMatteo      26:49
   6  (43)  Steve Auman         27:54
   7  (45)  Alec Bruns          28:00
4. 117 Lawrence (26:55 2:14:32 1:46)
   1  10    Max Edwards         26:21
   2  12    Kyle Dockery        26:25
   3  22    Cam Davies          26:39
   4  26    Jonah Laursen       27:00
   5  47    Teddy Kortenhof     28:07
   6  (50)  Pat Mangan          28:13
   7  (55)  Ethan Gniot         28:55
5. 119 Cornell College (26:59 2:14:54 1:37)
   1  7     Lawrence Dennis     26:11
   2  17    Charlie Mesimore    26:34
   3  20    Josh Lindsay        26:36
   4  36    Taylor Christen     27:45
   5  39    Ryan Conrardy       27:48
   6  (51)  Jacob Butts         28:24
   7  (52)  Thomas Chenault     28:25
6.

The Faculty, of Which He Was Then President

Carleton Moves Confidently Into Its Second Century BY MERRILL E. JARCHOW 1992 CARLETON COLLEGE NORTHFIELD, MINNESOTA © COPYRIGHT 1992 BY CARLETON COLLEGE, NORTHFIELD, MINNESOTA ALL RIGHTS RESERVED Library of Congress Catalog Card Number: 92-72408 PRINTED IN THE UNITED STATES OF AMERICA Cover: Old and New: Scoville (1895). Johnson Hall (admissions) / Campus Club (under construction) Contents: Foreword; Acknowledgements; 1: The Nason Years; 2: The Swearer Years; 3: The Edwards Years; 4: The Porter Year; 5: The Lewis Years; Epilogue; Appendix. Illustrations: President John W. Nason and his wife Elizabeth at the time of Carleton's centennial; Isabella Watson Dormitory; Student Peace March in 1970

Oregon Facilities Authority Reed College; Private Coll/Univ - General Obligation

Oregon Facilities Authority Reed College; Private Coll/Univ - General Obligation Primary Credit Analyst: Ying Huang, San Francisco (1) 415-371-5008; [email protected] Secondary Contact: Kevin Barry, New York (1) 212-438-7337; [email protected] Table Of Contents Rating Action Stable Outlook Credit Opinion Enterprise Profile Financial Profile Related Research WWW.STANDARDANDPOORS.COM/RATINGSDIRECT SEPTEMBER 14, 2020 1 Oregon Facilities Authority Reed College; Private Coll/Univ - General Obligation Credit Profile Oregon Facs Auth, Oregon Reed Coll, Oregon Oregon Facs Auth (Reed Coll) PCU_GO Long Term Rating AA-/Stable Affirmed Oregon Facs Auth (Reed Coll) PCU_GO Long Term Rating AA-/A-1/Stable Affirmed Rating Action S&P Global Ratings affirmed its 'AA-' long-term rating on the Oregon Facilities Authority's series 2017A revenue bonds, issued for Reed College. At the same time, we affirmed the 'AA-' long-term component and the 'A-1' short-term component of the rating on Reed's series 2008 bonds. The short-term rating on the series 2008 bonds reflects a standby bond purchase agreement provided by Wells Fargo Bank N.A. expiring in January 2023. The outlook on all long-term ratings is stable. Based on unaudited financial statements prepared by management as of June 30, 2020, Reed College's outstanding debt was about $99 million. Approximately 34% of the debt consists of the series 2008A variable-rate debt, a portion of which is synthetically fixed with an interest rate swap, and the remaining 66% consists of the series 2017A fixed-rate debt. All of the outstanding bonds are secured by a general obligation of the college. -

Oak Knoll School of the Holy Child

Oak Knoll School of the Holy Child Colleges attended by graduates in the Classes of 2014-2018: American University Princeton University (4) Amherst College (2) Providence College (6) Bard College Quinnipiac University Barnard College Reed College Boston College (13) Saint Joseph’s University, Pennsylvania (3) Boston University (2) Salve Regina University Bowdoin College (2) Santa Clara University Bucknell University (6) Skidmore College (4) Carnegie Mellon University Southern Methodist University (3) Clemson University (2) Stanford University (4) Colby College Stetson University Colgate University (9) Stevens Institute of Technology College of the Holy Cross (20) Syracuse University (2) Columbia University (2) Texas Christian University Connecticut College (2) Trinity College (2) Cornell University (3) Tulane University (4) Davidson College (3) United States Military Academy Denison University University of Alabama Dickinson College (3) University of California, Berkeley Drexel University (2) University of California, Los Angeles Duke University (5) University of Colorado, Boulder Elon University (3) University of Delaware (2) Emerson College (2) University of Edinburgh (2) Emory University (2) University of Georgia Fairfield University (2) University of Maryland, College Park Fordham University (11) University of Miami (2) George Washington University (4) University of Michigan (6) Georgetown University (12) University of Mississippi Gettysburg College University of North Carolina, Chapel Hill Hamilton College University of Notre Dame -

DAVID ISAAK Orcid.Org/0000-0002-9548-3855 | [email protected] | 503.517.4890

DAVID ISAAK orcid.org/0000-0002-9548-3855 | [email protected] | 503.517.4890 EDUCATION Certificate in Web Technology Solutions 2011 University of Washington, Seattle, WA Master of Science in Library and Information Science 2007 Pratt Institute, New York, NY Graduated with distinction Bachelor of Arts in History 2003 Swarthmore College, Swarthmore, PA Minor in Astronomy PROFESSIONAL EXPERIENCE Director of Collection Services 2019 – present Reed College Library, Portland, OR § Manage acquisitions, cataloging, electronic resources, serials, and systems department § Conduct data-informed collection assessment and development § Negotiate renewals and licenses with publishers and vendors § Coordinate Federal Depository Library collection § Collaborate with faculty to develop and deliver course-integrated information literacy instruction § Provide reference services § Supervise two librarians, three specialists, and multiple undergraduate student workers Data Services Librarian 2015 – 2019 Reed College Library, Portland, OR § Advised faculty and students on data curation best practices § Assisted faculty and students in locating and acquiring datasets § Wrote data management plans § Coordinated Federal Depository Library collection § Collaborated with faculty to develop and deliver course-integrated information literacy instruction § Provided reference services § Developed and maintained library collections § Supervised four undergraduate student workers Digital Projects Librarian 2011 – 2015 Center for Health Research, Kaiser Permanente, Portland, -

Wheaton College Catalog 2003-2005 (Pdf)

2003/2005 CATALOG WHEATON COLLEGE Norton, Massachusetts www.wheatoncollege.edu/Catalog College Calendar Fall Semester 2003–2004 Fall Semester 2004–2005 New Student Orientation Aug. 30–Sept. 2, 2003 New Student Orientation Aug. 28–Aug. 31, 2004 Labor Day September 1 Classes Begin September 1 Upperclasses Return September 1 Labor Day (no classes) September 6 Classes Begin September 3 October Break October 11–12 October Break October 13–14 Mid-Semester October 20 Mid-Semester October 22 Course Selection Nov. 18–13 Course Selection Nov. 10–15 Thanksgiving Recess Nov. 24–28 Thanksgiving Recess Nov. 26–30 Classes End December 13 Classes End December 12 Review Period Dec. 14–15 Review Period Dec. 13–14 Examination Period Dec. 16–20 Examination Period Dec. 15–20 Residence Halls Close Residence Halls Close (9:00 p.m.) December 20 (9:00 p.m.) December 20 Winter Break and Winter Break and Internship Period Dec. 20 – Jan. 25, 2005 Internship Period Dec. 20–Jan. 26, 2004 Spring Semester Spring Semester Residence Halls Open Residence Halls Open (9:00 a.m.) January 25 (9:00 a.m.) January 27, 2004 Classes Begin January 26 Classes Begin January 28 Mid–Semester March 11 Mid–Semester March 12 Spring Break March 14–18 Spring Break March 15–19 Course Selection April 11–15 Course Selection April 12–26 Classes End May 6 Classes End May 7 Review Period May 7–8 Review Period May 8–9 Examination Period May 9–14 Examination Period May 10–15 Commencement May 21 Commencement May 22 First Semester Deadlines, 2004–2005 First Semester Deadlines, 2003–2004 Course registration -

GRADUATE PROGRAM in the HISTORY of ART Williams College/Clark Art Institute

GRADUATE PROGRAM IN THE HISTORY OF ART Williams College/Clark Art Institute Summer 2003 NEWSLETTER The Class of 2003 at its Hooding Ceremony. Front row, from left to right: Pan Wendt, Elizabeth Winborne, Jane Simon, Esther Bell, Jordan Kim, Christa Carroll, Katie Hanson; back row: Mark Haxthausen, Ben Tilghman, Patricia Hickson, Don Meyer, Ellery Foutch, Kim Conary, Catherine Malone, Marc Simpson LETTER FROM THE DIRECTOR CHARLES W. (MARK) HAXTHAUSEN Faison-Pierson-Stoddard Professor of Art History, Director of the Graduate Program With the 2002-2003 academic year the Graduate Program began its fourth decade of operation. Its success during its first thirty years outstripped the modest mission that shaped the early planning for the program: to train for regional colleges art historians who were drawn to teaching careers yet not inclined to scholarship and hence having no need to acquire the Ph.D. (It was a different world then!) Initially, those who conceived of the program - members of the Clark's board of trustees and Williams College President Jack Sawyer - seem never to have imagined that it would attain the preeminence that it quickly achieved under the stewardship of its first directors, George Heard Hamilton, Frank Robinson, and Sam Edgerton. Today the Williams/Clark program enjoys an excellent reputation for preparing students for museum careers, yet this was never its declared mission; unlike some institutions, we have never offered a degree or even a specialization in "museum studies" or "museology." Since the time of George Hamilton, the program has endeavored simply to train art historians, and in doing so it has assumed that intimacy with objects is a sine qua non for the practice of art history.