Introduction to FAST Search Server 2010 for SharePoint

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

System Requirements and Installation

SCAD Office System Requirements and Installation. SCAD Soft. Contents: System Requirements; Recommendations on Optimization of an Operational Environment; Turn on (or off) the Indexing Service; Defragment Regularly; Start Word and Excel once before Starting SCAD Office; Memory Fragmentation by the Service Programs; Settings of the PDF-printing; SCAD Office Installation; Program Installation; English Versions of Windows; Privileges; Network Licensing -

Evaluation of an Ontology-Based Knowledge-Management-System

Information Services & Use 25 (2005) 181–195, IOS Press. Evaluation of an Ontology-based Knowledge-Management-System. A Case Study of Convera RetrievalWare 8.0. Oliver Bayer, Stefanie Höhfeld, Frauke Josbächer, Nico Kimm, Ina Kradepohl, Melanie Kwiatkowski, Cornelius Puschmann, Mathias Sabbagh, Nils Werner and Ulrike Vollmer. Heinrich-Heine-University Duesseldorf, Institute of Language and Information, Department of Information Science, Universitaetsstraße 1, D-40225 Duesseldorf, Germany. Abstract. With RetrievalWare 8.0™ the American company Convera offers an elaborated software in the range of Information Retrieval, Information Indexing and Knowledge Management. Convera promises the possibility of handling different file formats in many different languages. Regarding comparable products one innovation is to be stressed particularly: the possibility of the preparation as well as integration of an ontology. One tool of the software package is useful in order to produce ontologies manually, to process existing ontologies and to import the very. The processing of search results is also to be mentioned. By means of categorization strategies search results can be classified dynamically and presented in personalized representations. This study presents an evaluation of the functions and components of the system. Technological aspects and modes of operation under the surface of Convera RetrievalWare will be analysed, with a focus on the creation of libraries and thesauri, and the problems posed by the integration of an existing thesaurus. Broader aspects such as usability and system ergonomics are integrated in the examination as well. Keywords: Categorization, Convera RetrievalWare, disambiguation, dynamic classification, information indexing, information retrieval, ISO 5964, knowledge management, ontology, user interface, taxonomy, thesaurus 1. -

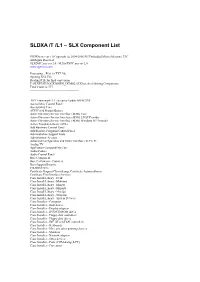

SLDXA /T /L1 – SLX Component List

SLDXA /T /L1 – SLX Component List SLDXA.exe ver 1.0 Copyright (c) 2004-2006 SJJ Embedded Micro Solutions, LLC All Rights Reserved SLXDiffC.exe ver 2.0 / SLXtoTXTC.exe ver 2.0 www.sjjmicro.com Processing... File1 to TXT file. Opening XSL File Reading RTF for final conversion F:\SLXTEST\LOCKDOWN_DEMO2.SLX has the following Components Total Count is: 577 -------------------------------------------------- .NET Framework 1.1 - Security Update KB887998 Accessibility Control Panel Accessibility Core ACPI Fixed Feature Button Active Directory Service Interface (ADSI) Core Active Directory Service Interface (ADSI) LDAP Provider Active Directory Service Interface (ADSI) Windows NT Provider Active Template Library (ATL) Add Hardware Control Panel Add/Remove Programs Control Panel Administration Support Tools Administrator Account Advanced Configuration and Power Interface (ACPI) PC Analog TV Application Compatibility Core Audio Codecs Audio Control Panel Base Component Base Performance Counters Base Support Binaries CD-ROM Drive Certificate Request Client & Certificate Autoenrollment Certificate User Interface Services Class Install Library - Desk Class Install Library - Mdminst Class Install Library - Mmsys Class Install Library - Msports Class Install Library - Netcfgx Class Install Library - Storprop Class Install Library - System Devices Class Installer - Computer Class Installer - Disk drives Class Installer - Display adapters Class Installer - DVD/CD-ROM drives Class Installer - Floppy disk controllers Class Installer - Floppy disk drives -

Microsoft Patches Were Evaluated up to and Including CVE-2020-1587

Honeywell Commercial Security 2700 Blankenbaker Pkwy, Suite 150 Louisville, KY 40299 Phone: 1-502-297-5700 Phone: 1-800-323-4576 Fax: 1-502-666-7021 https://www.security.honeywell.com The purpose of this document is to identify the patches that have been delivered by Microsoft® which have been tested against Pro-Watch. All the below listed patches have been tested against the current shipping version of Pro-Watch with no adverse effects being observed. Microsoft Patches were evaluated up to and including CVE-2020-1587. Patches not listed below are not applicable to a Pro-Watch system. 2020 – Microsoft® Patches Tested with Pro-Watch CVE-2020-1587 Windows Ancillary Function Driver for WinSock Elevation of Privilege Vulnerability CVE-2020-1584 Windows dnsrslvr.dll Elevation of Privilege Vulnerability CVE-2020-1579 Windows Function Discovery SSDP Provider Elevation of Privilege Vulnerability CVE-2020-1578 Windows Kernel Information Disclosure Vulnerability CVE-2020-1577 DirectWrite Information Disclosure Vulnerability CVE-2020-1570 Scripting Engine Memory Corruption Vulnerability CVE-2020-1569 Microsoft Edge Memory Corruption Vulnerability CVE-2020-1568 Microsoft Edge PDF Remote Code Execution Vulnerability CVE-2020-1567 MSHTML Engine Remote Code Execution Vulnerability CVE-2020-1566 Windows Kernel Elevation of Privilege Vulnerability CVE-2020-1565 Windows Elevation of Privilege Vulnerability CVE-2020-1564 Jet Database Engine Remote Code Execution Vulnerability CVE-2020-1562 Microsoft Graphics Components Remote Code Execution Vulnerability -

User's Guide O&O Defrag

Last changes: 19.09.2018. Table of contents: About O&O Defrag 22; Features at a glance; Differences between the various Editions; System requirements; Installation; Screen Saver; Online registration; Getting started; Analyze your drives; Defragmenting your Drives; Selecting a defragmentation method; Standard defragmentation methods; User Interface (GUI); Drive List; Cluster View; Defragmentation summary; Job View and Reports; Status Views; Tray icon (Notification area icon); O&O DiskCleaner; O&O DiskStat; Schedule defragmentation at regular intervals; Create job - General; Plan a schedule; Screen Saver Mode; Select drives; Exclude and include files; O&O ActivityMonitor for Jobs; Further job settings; Edit/Duplicate/Delete jobs; Status reports; Extras; TRIM Compatibility; Work within the network; Zone filing; Rules for individual drives; Notation for rules; Settings; General Settings; Boot time defragmentation; Automatic optimization; Select files for defragmentation; O&O ActivityMonitor; Technical information; Using the command line version; Status notices and program output; Data Security and Integrity; Supported hardware; Supported File Systems; Free space needed for defragmentation; Recommendations and FAQs; O&O DiskStat 3; System requirements; Installation; Getting started; End user license agreement. About O&O Defrag 22: Thank you for choosing O&O Defrag! O&O Defrag activates the hidden performance of your computer and packs file fragments efficiently and securely together. -

Technical Reference for Microsoft SharePoint Server 2010

Technical reference for Microsoft SharePoint Server 2010 Microsoft Corporation Published: May 2011 Author: Microsoft Office System and Servers Team ([email protected]) Abstract This book contains technical information about the Microsoft SharePoint Server 2010 provider for Windows PowerShell and other helpful reference information about general settings, security, and tools. The audiences for this book include application specialists, line-of-business application specialists, and IT administrators who work with SharePoint Server 2010. The content in this book is a copy of selected content in the SharePoint Server 2010 technical library (http://go.microsoft.com/fwlink/?LinkId=181463) as of the publication date. For the most current content, see the technical library on the Web. This document is provided “as-is”. Information and views expressed in this document, including URL and other Internet Web site references, may change without notice. You bear the risk of using it. Some examples depicted herein are provided for illustration only and are fictitious. No real association or connection is intended or should be inferred. This document does not provide you with any legal rights to any intellectual property in any Microsoft product. You may copy and use this document for your internal, reference purposes. © 2011 Microsoft Corporation. All rights reserved. Microsoft, Access, Active Directory, Backstage, Excel, Groove, Hotmail, InfoPath, Internet Explorer, Outlook, PerformancePoint, PowerPoint, SharePoint, Silverlight, Windows, Windows Live, Windows Mobile, Windows PowerShell, Windows Server, and Windows Vista are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. The information contained in this document represents the current view of Microsoft Corporation on the issues discussed as of the date of publication. -

Remote Indexing Feature Guide EventTracker Version 7.x

Remote Indexing Feature Guide EventTracker Version 7.x EventTracker 8815 Centre Park Drive Columbia MD 21045 Publication Date: Aug 10, 2015 www.eventtracker.com EventTracker Feature Guide - Remote Indexing Abstract The purpose of this document is to help users install, configure, and use EventTracker Indexer service on remote machines and index CAB files on the EventTracker Server machine. Intended Audience • Users of EventTracker v7.x who wish to deploy EventTracker Indexer service on remote machine to index CAB files on the EventTracker Server thus reducing the workload and improving the performance of the EventTracker Server. • Technical evaluator of EventTracker who seeks to understand how the feature is implemented and its limitations. The information contained in this document represents the current view of Prism Microsystems, Inc. on the issues discussed as of the date of publication. Because Prism Microsystems, Inc. must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Prism Microsystems, Inc. and Prism Microsystems, Inc. cannot guarantee the accuracy of any information presented after the date of publication. This document is for informational purposes only. Prism Microsystems, Inc. MAKES NO WARRANTIES, EXPRESS OR IMPLIED, AS TO THE INFORMATION IN THIS DOCUMENT. Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, this Guide may be freely distributed without permission from Prism, as long as its content is unaltered, nothing is added to the content and credit to Prism is provided. Prism Microsystems, Inc. may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this document. -

Line 6 GearBox®, TonePort®, POD®xt, POD® X3, and Audio Recording Software Windows® XP® & Vista® - Audio Tips & Optimizations

Windows® XP® & Vista® Audio Tips & Optimizations. Improve the performance of your computer with Line 6 GearBox®, TonePort®, POD®xt, POD® X3, and audio recording software. Table of Contents: Digital Audio and Your Computer; Digital Audio Demands; Line 6 Monkey Compatibility Check; Windows® XP® Optimizations; Making System Tweaks in Windows XP; Disable Your Onboard or Add-in Sound Card; Turn Off Windows System Sounds; Disable Error Reporting; Disable the Remote Assistance Option; Turn Automatic Updates Off; Processor Scheduling; Set Virtual Memory to a Fixed Size -

Veritas Enterprise Vault™ Administrator's Guide

Veritas Enterprise Vault™ Administrator's Guide 12.1 Veritas Enterprise Vault: Administrator's Guide Last updated: 2017-07-28. Legal Notice Copyright © 2017 Veritas Technologies LLC. All rights reserved. Veritas, the Veritas Logo, Enterprise Vault, Compliance Accelerator, and Discovery Accelerator are trademarks or registered trademarks of Veritas Technologies LLC or its affiliates in the U.S. and other countries. Other names may be trademarks of their respective owners. This product may contain third party software for which Veritas is required to provide attribution to the third party (“Third Party Programs”). Some of the Third Party Programs are available under open source or free software licenses. The License Agreement accompanying the Software does not alter any rights or obligations you may have under those open source or free software licenses. Refer to the third party legal notices document accompanying this Veritas product or available at: https://www.veritas.com/about/legal/license-agreements The product described in this document is distributed under licenses restricting its use, copying, distribution, and decompilation/reverse engineering. No part of this document may be reproduced in any form by any means without prior written authorization of Veritas Technologies LLC and its licensors, if any. THE DOCUMENTATION IS PROVIDED "AS IS" AND ALL EXPRESS OR IMPLIED CONDITIONS, REPRESENTATIONS AND WARRANTIES, INCLUDING ANY IMPLIED WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE OR NON-INFRINGEMENT, ARE DISCLAIMED, EXCEPT TO THE EXTENT THAT SUCH DISCLAIMERS ARE HELD TO BE LEGALLY INVALID. VERITAS TECHNOLOGIES LLC SHALL NOT BE LIABLE FOR INCIDENTAL OR CONSEQUENTIAL DAMAGES IN CONNECTION WITH THE FURNISHING, PERFORMANCE, OR USE OF THIS DOCUMENTATION. -

Evaluation of an Ontology-Based Knowledge-Management-System. A Case Study of Convera RetrievalWare 8.0

See discussions, stats, and author profiles for this publication at: http://www.researchgate.net/publication/228969390 Evaluation of an ontology-based knowledge-management-system. A case study of Convera RetrievalWare 8.0. Article in Information Services & Use, January 2005. 10 authors, including: Cornelius Puschmann (Zeppelin University), Mathias Sabbagh (Heinrich-Heine-Universität Düsseldorf). Available from: Mathias Sabbagh. Retrieved on: 22 June 2015. Information Services & Use 25 (2005) 181–195, IOS Press. Evaluation of an Ontology-based Knowledge-Management-System. A Case Study of Convera RetrievalWare 8.0. Oliver Bayer, Stefanie Höhfeld, Frauke Josbächer, Nico Kimm, Ina Kradepohl, Melanie Kwiatkowski, Cornelius Puschmann, Mathias Sabbagh, Nils Werner and Ulrike Vollmer. Heinrich-Heine-University Duesseldorf, Institute of Language and Information, Department of Information Science, Universitaetsstraße 1, D-40225 Duesseldorf, Germany. Abstract. With RetrievalWare 8.0™ the American company Convera offers an elaborated software in the range of Information Retrieval, Information Indexing and Knowledge Management. Convera promises the possibility of handling different file formats in many different languages. Regarding comparable products one innovation is to be stressed particularly: the possibility of the preparation as well as integration of an ontology. One tool of the software package is useful in order to produce ontologies manually, to process existing ontologies and to import the very. The processing of search results is also to be mentioned. By means of categorization strategies search results can be classified dynamically and presented in personalized representations. This study presents an evaluation of the functions and components of the system. -

The Response by the Security Community to Are All Retained

The magazine you're reading was put together during an extremely busy few months that saw us pile up frequent flier miles on the way to several conferences. You can read about some of them in the pages that follow, specifically RSA Conference 2009, Infosecurity Europe 2009 and Black Hat Europe 2009. This issue brings forward many hot topics from respected security professionals located all over the world. There's an in-depth review of IronKey, and to round it all up, there are three interviews that you'll surely find stimulating. This edition of (IN)SECURE should keep you busy during the summer, but keep in mind that we're coming back in September! Articles are already piling in so get in touch if you have something to share. Mirko Zorz Editor in Chief Visit the magazine website at www.insecuremag.com (IN)SECURE Magazine contacts Feedback and contributions: Mirko Zorz, Editor in Chief - [email protected] Marketing: Berislav Kucan, Director of Marketing - [email protected] Distribution (IN)SECURE Magazine can be freely distributed in the form of the original, non modified PDF document. Distribution of modified versions of (IN)SECURE Magazine content is prohibited without the explicit permission from the editor. Copyright HNS Consulting Ltd. 2009. www.insecuremag.com Qualys adds Web application scanning to QualysGuard Qualys added QualysGuard Web Application Scanning (WAS) 1.0 to the QualysGuard Security and Compliance Software-as-a-Service (SaaS) Suite, the company's flagship solution for IT security risk and compliance management. Delivered through a SaaS model, QualysGuard WAS delivers automated crawling and testing for custom Web applications to identify most common vulnerabilities such as those in the OWASP Top 10 and WASC Threat Classification, including SQL injection and cross-site scripting. -

Geethanjali College of Engineering and Technology Cheeryal(V), Keesara(M), R.R.Dist-501 301

Geethanjali College of Engineering and Technology Cheeryal(V), Keesara(M), R.R.Dist-501 301 DEPARTMENT OF CSE QC Format No.: Name of the Subject/Lab Course: Information Retrieval Systems Programme: UG Branch: CSE Version No: 1.0 Year: 4 Updated On: Semester: 1 No.of Pages: 100 Classification Status: (Unrestricted/Restricted) Distribution List: Prepared By: 1.Name: M. Raja Krishna Kumar 2.Sign: 3.Design: Associate Professor 4.Date: Verified By: * For Q.C Only: 1.Name: 1.Name: 2.Sign: 2.Sign: 3.Design: 3.Design: 4.Date 4.Date Approved By:(HOD) 1.Name: Prof. Dr. P. V. S. Srinivas 2.Sign: 3.Date: Department of CSE Information Retrieval Systems Subject File By M. Raja Krishna Kumar AMIETE(IT), M.TECH(IP), (Ph.D.(CSE)) Associate Professor Contents S.No. Name of the Topic Page No 1. Detailed Lecture Notes on all Units 2. 3. 4. 5. JNTU Syllabus UNIT-I Introduction: Definition, Objectives, Functional Overview, Relationship to DBMS, Digital libraries and Data Warehouses. Information Retrieval System