Automatically Indexing Millions of Databases in Microsoft Azure SQL Database

Sudipto Das, Miroslav Grbic, Igor Ilic, Isidora Jovandic, Andrija Jovanovic, Vivek R

Recommended publications

-

System Requirements and Installation

SCAD Office System Requirements and I nstallation SCAD Soft Contents System Requirements ...................................................................................................................... 3 Recommendations on Optimization of an Operational Environment ............................................. 4 Turn on (or off) the Indexing Service .......................................................................................... 4 Defragment Regularly ................................................................................................................. 4 Start Word and Excel once before Starting SCAD Office .......................................................... 4 Memory Fragmentation by the Service Programs ....................................................................... 4 Settings of the PDF-printing ........................................................................................................ 4 SCAD Office Installation ................................................................................................................ 5 Program Installation .................................................................................................................... 5 English Versions of Windows ..................................................................................................... 5 Privileges ..................................................................................................................................... 5 Network Licensing ..................................................................................................................... -

Differential Fuzzing the Webassembly

Master’s Programme in Security and Cloud Computing Differential Fuzzing the WebAssembly Master’s Thesis Gilang Mentari Hamidy MASTER’S THESIS Aalto University - EURECOM MASTER’STHESIS 2020 Differential Fuzzing the WebAssembly Fuzzing Différentiel le WebAssembly Gilang Mentari Hamidy This thesis is a public document and does not contain any confidential information. Cette thèse est un document public et ne contient aucun information confidentielle. Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Technology. Antibes, 27 July 2020 Supervisor: Prof. Davide Balzarotti, EURECOM Co-Supervisor: Prof. Jan-Erik Ekberg, Aalto University Copyright © 2020 Gilang Mentari Hamidy Aalto University - School of Science EURECOM Master’s Programme in Security and Cloud Computing Abstract Author Gilang Mentari Hamidy Title Differential Fuzzing the WebAssembly School School of Science Degree programme Master of Science Major Security and Cloud Computing (SECCLO) Code SCI3084 Supervisor Prof. Davide Balzarotti, EURECOM Prof. Jan-Erik Ekberg, Aalto University Level Master’s thesis Date 27 July 2020 Pages 133 Language English Abstract WebAssembly, colloquially known as Wasm, is a specification for an intermediate representation that is suitable for the web environment, particularly in the client-side. It provides a machine abstraction and hardware-agnostic instruction sets, where a high-level programming language can target the compilation to the Wasm instead of specific hardware architecture. The JavaScript engine implements the Wasm specification and recompiles the Wasm instruction to the target machine instruction where the program is executed. Technically, Wasm is similar to a popular virtual machine bytecode, such as Java Virtual Machine (JVM) or Microsoft Intermediate Language (MSIL). -

XML a New Web Site Architecture

XML A New Web Site Architecture Jim Costello Derek Werthmuller Darshana Apte Center for Technology in Government University at Albany, SUNY 1535 Western Avenue Albany, NY 12203 Phone: (518) 442-3892 Fax: (518) 442-3886 E-mail: [email protected] http://www.ctg.albany.edu September 2002 © 2002 Center for Technology in Government The Center grants permission to reprint this document provided this cover page is included. Table of Contents XML: A New Web Site Architecture .......................................................................................................................... 1 A Better Way? ......................................................................................................................................................... 1 Defining the Problem.............................................................................................................................................. 1 Partial Solutions ...................................................................................................................................................... 2 Addressing the Root Problems .............................................................................................................................. 2 Figure 1. Sample XML file (all code simplified for example) ...................................................................... 4 Figure 2. Sample XSL File (all code simplified for example) ....................................................................... 6 Figure 3. Formatted Page Produced -

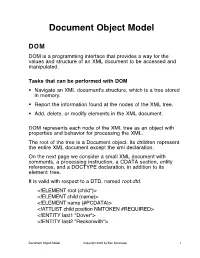

Document Object Model

Document Object Model DOM DOM is a programming interface that provides a way for the values and structure of an XML document to be accessed and manipulated. Tasks that can be performed with DOM . Navigate an XML document's structure, which is a tree stored in memory. Report the information found at the nodes of the XML tree. Add, delete, or modify elements in the XML document. DOM represents each node of the XML tree as an object with properties and behavior for processing the XML. The root of the tree is a Document object. Its children represent the entire XML document except the xml declaration. On the next page we consider a small XML document with comments, a processing instruction, a CDATA section, entity references, and a DOCTYPE declaration, in addition to its element tree. It is valid with respect to a DTD, named root.dtd. <!ELEMENT root (child*)> <!ELEMENT child (name)> <!ELEMENT name (#PCDATA)> <!ATTLIST child position NMTOKEN #REQUIRED> <!ENTITY last1 "Dover"> <!ENTITY last2 "Reckonwith"> Document Object Model Copyright 2005 by Ken Slonneger 1 Example: root.xml <?xml version="1.0" encoding="UTF-8"?> <!DOCTYPE root SYSTEM "root.dtd"> <!-- root.xml --> <?DomParse usage="java DomParse root.xml"?> <root> <child position="first"> <name>Eileen &last1;</name> </child> <child position="second"> <name><![CDATA[<<<Amanda>>>]]> &last2;</name> </child> <!-- Could be more children later. --> </root> DOM imagines that this XML information has a document root with four children: 1. A DOCTYPE declaration. 2. A comment. 3. A processing instruction, whose target is DomParse. 4. The root element of the document. The second comment is a child of the root element. -

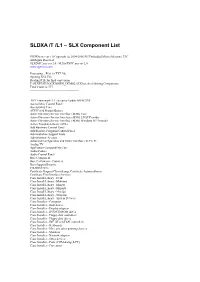

SLDXA /T /L1 – SLX Component List

SLDXA /T /L1 – SLX Component List SLDXA.exe ver 1.0 Copyright (c) 2004-2006 SJJ Embedded Micro Solutions, LLC All Rights Reserved SLXDiffC.exe ver 2.0 / SLXtoTXTC.exe ver 2.0 www.sjjmicro.com Processing... File1 to TXT file. Opening XSL File Reading RTF for final conversion F:\SLXTEST\LOCKDOWN_DEMO2.SLX has the following Components Total Count is: 577 -------------------------------------------------- .NET Framework 1.1 - Security Update KB887998 Accessibility Control Panel Accessibility Core ACPI Fixed Feature Button Active Directory Service Interface (ADSI) Core Active Directory Service Interface (ADSI) LDAP Provider Active Directory Service Interface (ADSI) Windows NT Provider Active Template Library (ATL) Add Hardware Control Panel Add/Remove Programs Control Panel Administration Support Tools Administrator Account Advanced Configuration and Power Interface (ACPI) PC Analog TV Application Compatibility Core Audio Codecs Audio Control Panel Base Component Base Performance Counters Base Support Binaries CD-ROM Drive Certificate Request Client & Certificate Autoenrollment Certificate User Interface Services Class Install Library - Desk Class Install Library - Mdminst Class Install Library - Mmsys Class Install Library - Msports Class Install Library - Netcfgx Class Install Library - Storprop Class Install Library - System Devices Class Installer - Computer Class Installer - Disk drives Class Installer - Display adapters Class Installer - DVD/CD-ROM drives Class Installer - Floppy disk controllers Class Installer - Floppy disk drives -

Extensible Markup Language (XML) and Its Role in Supporting the Global Justice XML Data Model

Extensible Markup Language (XML) and Its Role in Supporting the Global Justice XML Data Model Extensible Markup Language, or "XML," is a computer programming language designed to transmit both data and the meaning of the data. XML accomplishes this by being a markup language, a mechanism that identifies different structures within a document. Structured information contains both content (such as words, pictures, or video) and an indication of what role content plays, or its meaning. XML identifies different structures by assigning data "tags" to define both the name of a data element and the format of the data within that element. Elements are combined to form objects. An XML specification defines a standard way to add markup language to documents, identifying the embedded structures in a consistent way. By applying a consistent identification structure, data can be shared between different systems, up and down the levels of agencies, across the nation, and around the world, with the ease of using the Internet. In other words, XML lays the technological foundation that supports interoperability. XML also allows structured relationships to be defined. The ability to represent objects and their relationships is key to creating a fully beneficial justice information sharing tool. A simple example can be used to illustrate this point: A "person" object may contain elements like physical descriptors (e.g., eye and hair color, height, weight), biometric data (e.g., DNA, fingerprints), and social descriptors (e.g., marital status, occupation). A "vehicle" object would also contain many elements (such as description, registration, and/or lien-holder). The relationship between these two objects—person and vehicle— presents an interesting challenge that XML can address. -

An XML Model of CSS3 As an XƎL ATEX-TEXML-HTML5 Stylesheet

An XML model of CSS3 as an XƎLATEX-TEXML-HTML5 stylesheet language S. Sankar, S. Mahalakshmi and L. Ganesh TNQ Books and Journals Chennai Abstract HTML5[1] and CSS3[2] are popular languages of choice for Web development. However, HTML and CSS are prone to errors and difficult to port, so we propose an XML version of CSS that can be used as a standard for creating stylesheets and tem- plates across different platforms and pagination systems. XƎLATEX[3] and TEXML[4] are some examples of XML that are close in spirit to TEX that can benefit from such an approach. Modern TEX systems like XƎTEX and LuaTEX[5] use simplified fontspec macros to create stylesheets and templates. We use XSLT to create mappings from this XML-stylesheet language to fontspec-based TEX templates and also to CSS3. We also provide user-friendly interfaces for the creation of such an XML stylesheet. Style pattern comparison with Open Office and CSS Now a days, most of the modern applications have implemented an XML package format that includes an XML implementation of stylesheet: InDesign has its own IDML[6] (InDesign Markup Language) XML package format and MS Word has its own OOXML[7] format, which is another ISO standard format. As they say ironically, the nice thing about standards is that there are plenty of them to choose from. However, instead of creating one more non-standard format, we will be looking to see how we can operate closely with current standards. Below is a sample code derived from OpenOffice document format: <style:style style:name=”Heading_20_1” style:display-name=”Heading -

Markup Languages SGML, HTML, XML, XHTML

Markup Languages SGML, HTML, XML, XHTML CS 431 – February 13, 2006 Carl Lagoze – Cornell University Problem • Richness of text – Elements: letters, numbers, symbols, case – Structure: words, sentences, paragraphs, headings, tables – Appearance: fonts, design, layout – Multimedia integration: graphics, audio, math – Internationalization: characters, direction (up, down, right, left), diacritics • Its not all text Text vs. Data • Something for humans to read • Something for machines to process • There are different types of humans • Goal in information infrastructure should be as much automation as possible • Works vs. manifestations • Parts vs. wholes • Preservation: information or appearance? Who controls the appearance of text? • The author/creator of the document • Rendering software (e.g. browser) – Mapping from markup to appearance •The user –Window size –Fonts and size Important special cases • User has special requirements – Physical abilities –Age/education level – Preference/mood • Client has special capabilities – Form factor (mobile device) – Network connectivity Page Description Language • Postscript, PDF • Author/creator imprints rendering instructions in document – Where and how elements appear on the page in pixels Markup languages •SGML, XML • Represent structure of text • Must be combined with style instructions for rendering on screen, page, device Markup and style sheets document content Marked-up document & structure style sheet rendering rendering software instructions formatted document Multiple renderings from same -

Microsoft Patches Were Evaluated up to and Including CVE-2020-1587

Honeywell Commercial Security 2700 Blankenbaker Pkwy, Suite 150 Louisville, KY 40299 Phone: 1-502-297-5700 Phone: 1-800-323-4576 Fax: 1-502-666-7021 https://www.security.honeywell.com The purpose of this document is to identify the patches that have been delivered by Microsoft® which have been tested against Pro-Watch. All the below listed patches have been tested against the current shipping version of Pro-Watch with no adverse effects being observed. Microsoft Patches were evaluated up to and including CVE-2020-1587. Patches not listed below are not applicable to a Pro-Watch system. 2020 – Microsoft® Patches Tested with Pro-Watch CVE-2020-1587 Windows Ancillary Function Driver for WinSock Elevation of Privilege Vulnerability CVE-2020-1584 Windows dnsrslvr.dll Elevation of Privilege Vulnerability CVE-2020-1579 Windows Function Discovery SSDP Provider Elevation of Privilege Vulnerability CVE-2020-1578 Windows Kernel Information Disclosure Vulnerability CVE-2020-1577 DirectWrite Information Disclosure Vulnerability CVE-2020-1570 Scripting Engine Memory Corruption Vulnerability CVE-2020-1569 Microsoft Edge Memory Corruption Vulnerability CVE-2020-1568 Microsoft Edge PDF Remote Code Execution Vulnerability CVE-2020-1567 MSHTML Engine Remote Code Execution Vulnerability CVE-2020-1566 Windows Kernel Elevation of Privilege Vulnerability CVE-2020-1565 Windows Elevation of Privilege Vulnerability CVE-2020-1564 Jet Database Engine Remote Code Execution Vulnerability CVE-2020-1562 Microsoft Graphics Components Remote Code Execution Vulnerability -

User's Guide O&O Defrag

Last changes: 19.09.2018 Table of contents About O&O Defrag 22 4 Features at a glance 5 Differences between the various Editions 7 System requirements 8 Installation 10 Screen Saver 12 Online registration 14 Getting started 16 Analyze your drives 17 Defragmenting your Drives 19 Selecting a defragmentation method 22 Standard defragmentation methods 25 User Interface (GUI) 32 Drive List 33 Cluster View 34 Defragmentation summary 36 Job View and Reports 37 Status Views 38 Tray icon (Notification area icon) 40 O&O DiskCleaner 41 O&O DiskStat 44 Schedule defragmentation at regular intervals 45 Create job - General 46 Plan a schedule 48 Screen Saver Mode 50 Select drives 51 Exclude and include files 52 O&O ActivityMonitor for Jobs 54 Further job settings 56 Edit/Duplicate/Delete jobs 59 Status reports 60 Extras 62 TRIM Compatibility 68 Work within the network 79 Zone filing 82 Rules for individual drives 84 Notation for rules 86 Settings 87 General Settings 88 Boot time defragmentation 91 Automatic optimization 93 Select files for defragmentation 95 O&O ActivityMonitor 97 Technical information 99 Using the command line version 101 Status notices and program output 103 Data Security and Integrity 105 Supported hardware 106 Supported File Systems 107 Free space needed for defragmentation 108 Recommendations and FAQs 109 O&O DiskStat 3 113 System requirements 114 Installation 115 Getting started 116 End user license agreement 118 User's guide O&O Defrag About O&O Defrag 22 About O&O Defrag 22 Thank you for choosing O&O Defrag! O&O Defrag activates the hidden performance of your computer and packs file fragments efficiently and securely together. -

Remote Indexing Feature Guide Eventtracker Version 7.X

Remote Indexing Feature Guide EventTracker Version 7.x EventTracker 8815 Centre Park Drive Columbia MD 21045 Publication Date: Aug 10, 2015 www.eventtracker.com EventTracker Feature Guide - Remote Indexing Abstract The purpose of this document is to help users install, configure, and use EventTracker Indexer service on remote machines and index CAB files on the EventTracker Server machine. Intended Audience • Users of EventTracker v7.x who wish to deploy EventTracker Indexer service on remote machine to index CAB files on the EventTracker Server thus reducing the workload and improving the performance of the EventTracker Server. • Technical evaluator of EventTracker who seeks to understand how the feature is implemented and its limitations. The information contained in this document represents the current view of Prism Microsystems, Inc. on the issues discussed as of the date of publication. Because Prism Microsystems, Inc. must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Prism Microsystems, Inc. and Prism Microsystems, Inc. cannot guarantee the accuracy of any information presented after the date of publication. This document is for informational purposes only. Prism Microsystems, Inc. MAKES NO WARRANTIES, EXPRESS OR IMPLIED, AS TO THE INFORMATION IN THIS DOCUMENT. Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, this Guide may be freely distributed without permission from Prism, as long as its content is unaltered, nothing is added to the content and credit to Prism is provided. Prism Microsystems, Inc. may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this document. -

Swivel: Hardening Webassembly Against Spectre

Swivel: Hardening WebAssembly against Spectre Shravan Narayan† Craig Disselkoen† Daniel Moghimi¶† Sunjay Cauligi† Evan Johnson† Zhao Gang† Anjo Vahldiek-Oberwagner? Ravi Sahita∗ Hovav Shacham‡ Dean Tullsen† Deian Stefan† †UC San Diego ¶Worcester Polytechnic Institute ?Intel Labs ∗Intel ‡UT Austin Abstract in recent microarchitectures [41] (see Section 6.2). In con- We describe Swivel, a new compiler framework for hardening trast, Spectre can allow attackers to bypass Wasm’s isolation WebAssembly (Wasm) against Spectre attacks. Outside the boundary on almost all superscalar CPUs [3, 4, 35]—and, browser, Wasm has become a popular lightweight, in-process unfortunately, current mitigations for Spectre cannot be im- sandbox and is, for example, used in production to isolate plemented entirely in hardware [5, 13, 43, 51, 59, 76, 81, 93]. different clients on edge clouds and function-as-a-service On multi-tenant serverless, edge-cloud, and function as a platforms. Unfortunately, Spectre attacks can bypass Wasm’s service (FaaS) platforms, where Wasm is used as the way to isolation guarantees. Swivel hardens Wasm against this class isolate mutually distursting tenants, this is particulary con- 1 of attacks by ensuring that potentially malicious code can nei- cerning: A malicious tenant can use Spectre to break out of ther use Spectre attacks to break out of the Wasm sandbox nor the sandbox and read another tenant’s secrets in two steps coerce victim code—another Wasm client or the embedding (§5.4). First, they mistrain different components of the under- process—to leak secret data. lying control flow prediction—the conditional branch predic- We describe two Swivel designs, a software-only approach tor (CBP), branch target buffer (BTB), or return stack buffer that can be used on existing CPUs, and a hardware-assisted (RSB)—to speculatively execute code that accesses data out- approach that uses extension available in Intel® 11th genera- side the sandbox boundary.