Hardware Architectures for Secure, Reliable, and Energy-Efficient Real-Time Systems

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


HTTP Cookie - Wikipedia, the Free Encyclopedia 14/05/2014

HTTP cookie - Wikipedia, the free encyclopedia, 14/05/2014. A cookie, also known as an HTTP cookie, web cookie, or browser cookie, is a small piece of data sent from a website and stored in a user's web browser while the user is browsing that website. Every time the user loads the website, the browser sends the cookie back to the server to notify the website of the user's previous activity.[1] Cookies were designed to be a reliable mechanism for websites to remember stateful information (such as items in a shopping cart) or to record the user's browsing activity (including clicking particular buttons, logging in, or recording which pages were visited by the user as far back as months or years ago). Although cookies cannot carry viruses, and cannot install malware on the host computer,[2] tracking cookies and especially third-party tracking cookies are commonly used as ways to compile long-term records of individuals' browsing histories, a potential privacy concern that prompted European[3] and U.S.

Les Menus Indépendants

Standalone menus. Being a big fan of minimalism, and therefore not really drawn to full desktop environments (Gnome/KDE/E17), I often run into the menu problem. Keyboard shortcuts are perfect, of course, but what can I say, I like having a menu... Here are some of the standalone menus I use, bound to a keyboard shortcut, to a launcher in a panel/dock, or embedded in a notification area. Contents: standalone menus; compiz-deskmenu (installation: Debian package, from source; configuration); MyGTKMenu (installation; configuration).

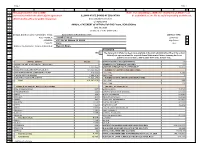

2020 Annual Statement of Affairs

ILLINOIS STATE BOARD OF EDUCATION, School Business Services, (217)785-8779. ANNUAL STATEMENT OF AFFAIRS FOR THE FISCAL YEAR ENDING June 30, 2020 (Section 10-17 of the School Code). This page must be sent to ISBE and retained within the district/joint agreement administrative office for public inspection. Note: For submitting to ISBE, the "Statement of Affairs" can be submitted as one file to avoid separating worksheets. SCHOOL DISTRICT/JOINT AGREEMENT NAME: Aurora East School District 131. RCDT NUMBER: 31-045-1310-22. ADDRESS: 417 5th St, Aurora, IL 60505. COUNTY: Kane. DISTRICT TYPE: Elementary; High School; Unit X. NAME OF NEWSPAPER WHERE PUBLISHED: Beacon News. ASSURANCE: YES x. The statement of affairs has been made available in the main administrative office of the school district/joint agreement and the required Annual Statement of Affairs Summary has been published in accordance with Section 10-17 of the School Code. CAPITAL ASSETS (VALUE): WORKS OF ART & HISTORICAL TREASURES (blank); LAND 2,771,855; BUILDING & BUILDING IMPROVEMENTS 191,099,506; SITE IMPROVEMENTS & INFRASTRUCTURE 813,544; CAPITALIZED EQUIPMENT 1,553,276; CONSTRUCTION IN PROGRESS 13,923,489; Total 210,161,670. SIZE OF DISTRICT IN SQUARE MILES: 13. NUMBER OF ATTENDANCE CENTERS: 22. 9 MONTH AVERAGE DAILY ATTENDANCE: 13,327. NUMBER OF CERTIFICATED EMPLOYEES: FULL-TIME 1,240; PART-TIME 1. NUMBER OF NON-CERTIFICATED EMPLOYEES: FULL-TIME 335; PART-TIME 113. NUMBER OF PUPILS ENROLLED PER GRADE; TAX RATE BY FUND

Giant List of Web Browsers

Giant List of Web Browsers The majority of the world uses a default or big tech browsers but there are many alternatives out there which may be a better choice. Take a look through our list & see if there is something you like the look of. All links open in new windows. Caveat emptor old friend & happy surfing. 1. 32bit https://www.electrasoft.com/32bw.htm 2. 360 Security https://browser.360.cn/se/en.html 3. Avant http://www.avantbrowser.com 4. Avast/SafeZone https://www.avast.com/en-us/secure-browser 5. Basilisk https://www.basilisk-browser.org 6. Bento https://bentobrowser.com 7. Bitty http://www.bitty.com 8. Blisk https://blisk.io 9. Brave https://brave.com 10. BriskBard https://www.briskbard.com 11. Chrome https://www.google.com/chrome 12. Chromium https://www.chromium.org/Home 13. Citrio http://citrio.com 14. Cliqz https://cliqz.com 15. Cốc Cốc https://coccoc.com 16. Comodo IceDragon https://www.comodo.com/home/browsers-toolbars/icedragon-browser.php 17. Comodo Dragon https://www.comodo.com/home/browsers-toolbars/browser.php 18. Coowon http://coowon.com 19. Crusta https://sourceforge.net/projects/crustabrowser 20. Dillo https://www.dillo.org 21. Dolphin http://dolphin.com 22. Dooble https://textbrowser.github.io/dooble 23. Edge https://www.microsoft.com/en-us/windows/microsoft-edge 24. ELinks http://elinks.or.cz 25. Epic https://www.epicbrowser.com 26. Epiphany https://projects-old.gnome.org/epiphany 27. Falkon https://www.falkon.org 28. Firefox https://www.mozilla.org/en-US/firefox/new 29.

VENDOR CO-OP 1St Choice Restaurant Equipment and Supply

VENDOR CO-OP 1st Choice Restaurant Equipment and Supply LLC BuyBoard 1-800 Radiator and A/C of El Paso ESC 19 1st Choice Restaurant Equipment & Supply, LLC ESC 19 1st Class Solutions TIPS 22nd Century Technologies Inc. Choice Partners 2717 Group, LLC. 1GPA 2NDGEAR LLC TIPS 2ove1, LLC ESC 19 2TAC Corporation TIPS 2Ten Coffee Roasters ESC 19 3 C Technology LLC TIPS 308 Construction LLC TIPS 323 Link LLC TIPS 360 Degree Customer, Inc. ESC 19 360 Solutions Group TIPS 360Civic 1GPA 3-C Technology BuyBoard 3N1 Office Products Inc TIPS 3P Learning Choice Partners 3P Learning ESC 19 3Rivers Telecom Consulting Plenteous Consulting LLC TIPS 3Sixty Integrated.com BuyBoard 3W Consulting Group LLC ESC 19 3W Consulting Group LLC TIPS 3W Consulting Group, LLC ESC 19 4 Rivers Equipment ESC 19 4 Rivers Equipment LLC BuyBoard 4imprint BuyBoard 4Imprint Choice Partners 4imprint, Inc. ESC 20 4Kboards TIPS 4T Partnership LLc TIPS 4TellX LLC ESC 19 5 L5E LLC TIPS 5-F Mechanical Group Inc. BuyBoard 5W Contracting LLC TIPS 5W Contracting LLC Assignee TIPS 806 Technologies, Inc. Choice Partners 9 to 5 Seating Choice Partners A & H Painting, Inc. 1GPA A & M Awards ESC 19 A 2 Z Educational Supplies BuyBoard A and E Seamless Raingutters Inc TIPS A and S Air Conditioning Assignee TIPS A Bargas and Associates LLC TIPS A King's Image ESC 18 A Lert Roof Systems TIPS A Lingua Franca LLC ESC 19 A Pass Educational Group LLC TIPS A Photo Identification ESC 18 A Photo Identification ESC 20 A Quality HVAC Air Conditioning & Heating 1GPA A to Z Books LLC TIPS A V Pro, Inc. -

Webkit and Blink: Open Development Powering the HTML5 Revolution

WebKit and Blink: Open Development Powering the HTML5 Revolution. Juan J. Sánchez, LinuxCon 2013, New Orleans. Myself, Igalia and WebKit: Co-founder, member of the WebKit/Blink/Browsers team; Igalia is an open source consultancy founded in 2001; Igalia is Top 5 contributor to upstream WebKit/Blink; Working with many industry actors: tablets, phones, smart tv, set-top boxes, IVI and home automation. Outline: The WebKit technology: goals, features, architecture, code structure, ports, webkit2, ongoing work; The WebKit community: contributors, committers, reviewers, tools, events; How to contribute to WebKit: bugfixing, features, new ports; Blink: history, motivations for the fork, differences, status and impact in the WebKit community. WebKit: The technology. The WebKit project: Web rendering engine (HTML, JavaScript, CSS...); The engine is the product; Started as a fork of KHTML and KJS in 2001; Open Source since 2005; Among other things, it’s useful for: Web browsers; Using web technologies for UI development. Goals of the project: Web Content Engine: HTML, CSS, JavaScript, DOM; Open Source: BSD-style and LGPL licenses; Compatibility: regression testing; Standards Compliance; Stability; Performance; Security; Portability: desktop, mobile, embedded...; Usability; Hackability. Goals of the project, NON-goals: “It’s an engine, not a browser”; “It’s an engineering project not a science project”; “It’s not a bundle of maximally general and reusable code”; “It’s not the solution to every problem”. http://www.webkit.org/projects/goals.html

Webkit and Blink: Bridging the Gap Between the Kernel and the HTML5 Revolution

WebKit and Blink: Bridging the Gap Between the Kernel and the HTML5 Revolution. Juan J. Sánchez, LinuxCon Japan 2014, Tokyo. Myself, Igalia and WebKit: Co-founder, member of the WebKit/Blink/Browsers team; Igalia is an open source consultancy founded in 2001; Igalia is Top 5 contributor to upstream WebKit/Blink; Working with many industry actors: tablets, phones, smart tv, set-top boxes, IVI and home automation. Outline: 1. Why this all matters; 2. 2004-2013: WebKit, a historical perspective; 2.1. The technology: goals, features, architecture, ports, webkit2, code, licenses; 2.2. The community: kinds of contributors and contributions, tools, events; 3. April 2013. The creation of Blink: history, motivations for the fork, differences and impact in the WebKit community; 4. 2013-2014: Current status of both projects, future perspectives and conclusions. PART 1: Why this all matters. Long time trying to use Web technologies to replace native totally or partially; Challenge enabled by new HTML5 features and improved performance; Open Source is key for innovation in the field; Mozilla focusing on the browser; WebKit and now Blink are key projects for those building platforms and/or browsers. PART 2: 2004-2013 WebKit, a historical perspective. PART 2.1 WebKit: the technology. The WebKit project: Web rendering engine (HTML, JavaScript, CSS...); The engine is the product; Started as a fork of KHTML and KJS in 2001; Open Source since 2005; Among other things, it’s useful for: Web browsers; Using web technologies for UI development.

Insight Manufacturers, Publishers and Suppliers by Product Category

Manufacturers, Publishers and Suppliers by Product Category 2/15/2021 10/100 Hubs & Switch ASANTE TECHNOLOGIES CHECKPOINT SYSTEMS, INC. DYNEX PRODUCTS HAWKING TECHNOLOGY MILESTONE SYSTEMS A/S ASUS CIENA EATON HEWLETT PACKARD ENTERPRISE 1VISION SOFTWARE ATEN TECHNOLOGY CISCO PRESS EDGECORE HIKVISION DIGITAL TECHNOLOGY CO. LT 3COM ATLAS SOUND CISCO SYSTEMS EDGEWATER NETWORKS INC Hirschmann 4XEM CORP. ATLONA CITRIX EDIMAX HITACHI AB DISTRIBUTING AUDIOCODES, INC. CLEAR CUBE EKTRON HITACHI DATA SYSTEMS ABLENET INC AUDIOVOX CNET TECHNOLOGY EMTEC HOWARD MEDICAL ACCELL AUTOMAP CODE GREEN NETWORKS ENDACE USA HP ACCELLION AUTOMATION INTEGRATED LLC CODI INC ENET COMPONENTS HP INC ACTI CORPORATION AVAGOTECH TECHNOLOGIES COMMAND COMMUNICATIONS ENET SOLUTIONS INC HYPERCOM ADAPTEC AVAYA COMMUNICATION DEVICES INC. ENGENIUS IBM ADC TELECOMMUNICATIONS AVOCENT‐EMERSON COMNET ENTERASYS NETWORKS IMC NETWORKS ADDERTECHNOLOGY AXIOM MEMORY COMPREHENSIVE CABLE EQUINOX SYSTEMS IMS‐DELL ADDON NETWORKS AXIS COMMUNICATIONS COMPU‐CALL, INC ETHERWAN INFOCUS ADDON STORE AZIO CORPORATION COMPUTER EXCHANGE LTD EVGA.COM INGRAM BOOKS ADESSO B & B ELECTRONICS COMPUTERLINKS EXABLAZE INGRAM MICRO ADTRAN B&H PHOTO‐VIDEO COMTROL EXACQ TECHNOLOGIES INC INNOVATIVE ELECTRONIC DESIGNS ADVANTECH AUTOMATION CORP. BASF CONNECTGEAR EXTREME NETWORKS INOGENI ADVANTECH CO LTD BELDEN CONNECTPRO EXTRON INSIGHT AEROHIVE NETWORKS BELKIN COMPONENTS COOLGEAR F5 NETWORKS INSIGNIA ALCATEL BEMATECH CP TECHNOLOGIES FIRESCOPE INTEL ALCATEL LUCENT BENFEI CRADLEPOINT, INC. FORCE10 NETWORKS, INC INTELIX -

Building a Browser for Automotive: Alternatives, Challenges and Recommendations

Building a Browser for Automotive: Alternatives, Challenges and Recommendations. Juan J. Sánchez, Automotive Linux Summit 2015, Tokyo. Myself, Igalia and WebKit/Chromium: Co-founder of Igalia; Open source consultancy founded in 2001; Igalia is Top 5 contributor to upstream WebKit/Chromium; Working with many industry actors: automotive, tablets, phones, smart tv, set-top boxes, IVI and home automation. Outline: 1. A browser for automotive: requirements and alternatives; 2. WebKit and Chromium, a historical perspective; 3. Selecting between WebKit and Chromium based alternatives. PART 1: A browser for automotive: requirements and alternatives. Requirements: Different User Experiences: UI modifications (flexibility), New ways of interacting: accessibility support; Support of specific standards (mostly communication and interfaces); Portability: support of specific hardware boards (performance optimization); Functionality and completeness can be less demanding in some cases (for now); Provide both browser as an application and as a runtime. Available alternatives: Option 1) Licensing a proprietary solution: might bring a reduced time-to-market but involves a cost per unit and lack of flexibility; Option 2) Deriving a new browser from the main open source browser technologies: Firefox (Gecko), Chromium, WebKit (Safari and others). Mozilla removed support in their engine for third

Introduction to the Web

Introduction to the Web. MPRI 2.26.2: Web Data Management. Antoine Amarilli. POLL: World Wide Web creation. The Web was invented... • A: At about the same time as the Internet • B: 2 years after the Internet • C: 5 years after the Internet • D: More than 5 years after the Internet. The old days: 1969 ARPANET (ancestor of the Internet); 1974 TCP; 1990 The World Wide Web, HTTP, HTML; 1994 Yahoo! is founded; 1995 Amazon.com, Ebay, AltaVista are founded; 1998 Google is founded; 2001 Wikipedia is created. POLL: Number of Internet domains. How many domain names exist on the Internet? • A: 3 million • B: 30 million • C: 300 million • D: 3 billion. Statistics: • Around 370 million domains, including 150 million in .com [1] • 54% of content in English and 4% in French [2] • Google knows over one trillion (10^12) unique URLs [3] • The same content can live in many different URLs • Parts of the Web are not indexable: the hidden Web or deep Web. [1] https://www.verisign.com/en_US/domain-names/dnib/index.xhtml [2] https://w3techs.com/technologies/overview/content_language/all [3] https://googleblog.blogspot.fr/2008/07/we-knew-web-was-big.html POLL: Internet users. Which proportion of the world population is using the Internet

Harmonic Flow Analysis in Power Distribution Networks

Harmonic Flow Analysis in Power Distribution Networks, by Nima Bayan. A Thesis Submitted to the College of Graduate Studies and Research through the Electrical Engineering Program in Partial Fulfillment of the Requirements for the Degree of Master of Applied Science at the University of Windsor, Windsor, Ontario, 1999. National Library of Canada, Acquisitions and Bibliographic Services, 395 Wellington Street, Ottawa ON K1A 0N4, Canada. The author has granted a non-exclusive licence allowing the National Library of Canada to reproduce, loan, distribute or sell copies of this thesis in microform, paper or electronic formats. The author retains ownership of the copyright in this thesis. Neither the thesis nor substantial extracts from it may be printed or otherwise reproduced without the author's permission. © Nima Bayan 1999. Conventional AC electric power systems are designed to operate with sinusoidal voltages and currents. However, nonlinear and electronically switched loads will distort steady state AC voltage and current waveforms. Periodically distorted waveforms can be studied by examining the harmonic components of the waveforms. Power system harmonic analysis investigates the generation and propagation of these components throughout the system.

Everything You Need to Know. Everyone You Need to Reach

Everything You Need to Know. Everyone You Need to Reach. • A look at what digital & mobile learning are • Digital learning resources & apps at the district, school, and classroom levels • Company profiles and resources • Essential components of a mobile classroom • Best practices and examples • Closing achievement gaps with digital learning • Marketplace update. Produced by eSchool Media | 2275 Research Blvd, Rockville, MD 20850 | 301.913.0115 | eSchoolNews.com. Ensure digital equity for your K-12 students with anytime, anywhere Internet access. Connectivity for School Buildings: Ensure every school building is connected to reliable Internet. School Bus Wi-Fi: Extend the classroom with school bus Wi-Fi. Off-Campus Access for Students: Provide access outside the classroom with Wi-Fi hotspots or LTE Chromebooks. Kajeet gives schools and districts the power to close the digital divide with: • CIPA-compliant, education-only Internet • Program control and visibility through the cloud portal, Sentinel® • Dedicated support from the Kajeet Account Management team. Contact Kajeet to learn more about ensuring digital equity for your students. (240) 482-3500 | [email protected] From school to home, Kajeet has K-12 connectivity covered. www.kajeet.net/esn K-12 Digital & Mobile Learning. About eSchool News Guides: We are excited to bring you the latest in the eSchool News K-12 Guides series. eSchool News K-12 Guides are full of resources, tips, trends, and insights from industry experts on a variety of topics that are essential to the classroom, school, and district. The November Guide, the eSchool News K-12 Digital & Mobile Learning Guide, offers expert insight on the reasons digital and mobile learning support students’ academic achievement and build the skills they’ll carry with them into the global economy.