Stan User's Guide 2.27

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Gordon Ramsay Uncharted

SPECIAL PROMOTION. SIX DESTINATIONS, ONE CHEF. “This stuff deserves to sit on the best tables of the world.” – GORDON RAMSAY; CHEF, STUDENT AND EXPLORER. THIS MAGAZINE WAS PRODUCED BY NATIONAL GEOGRAPHIC CHANNEL IN PROMOTION OF THE SERIES GORDON RAMSAY: UNCHARTED. PREMIERES SUNDAY JULY 21 10/9c. CONTENTS: FEATURE, EMBARK, EXPLORE. THE PATH TO UNCHARTED (page 4): A rare look at Gordon Ramsay as you’ve never seen him before. WHERE IN THE WORLD is Gordon Ramsay cooking tonight? (page 10). UNCHARTED TRAVEL BITES (page 18): We’ve collected travel stories and recipes inspired by Gordon’s culinary journey so that you can embark on your own. Bon appetit! SERIES: GORDON RAMSAY: UNCHARTED. In his new series, Gordon Ramsay travels to six global destinations to learn from the locals. In New Zealand, Peru, Morocco, Laos, Hawaii and Alaska, he explores the culture, traditions and cuisine the way only he can, with some heart-pumping adventure on the side. TRAVEL: Discover 10 Secrets of Machu Picchu; 10 Reasons to Visit New Zealand; Go Inside the Labyrinthine Medina of Fez; Road Trip: Maui; See the Rich Spiritual and Cultural Traditions of Laos; Discover the Best of Anchorage. Photo captions: LAOS: (L to R) Yuta, Gordon and Mr. Ten take a spin on Mr. Ten’s souped-up ride. ALASKA: Glacial ice harvester Michelle Costello mixes a Manhattan with Gordon using ice they’ve just harvested from Tracy Arm Fjord in Alaska. PHOTOS LEFT TO RIGHT: ERNESTO BENAVIDES, JON KROLL, MARK JOHNSON, MARK EDWARD HARRIS. DESIGN BY: MARY DUNNINGTON. BY JILL K. -

Humanistic Climate Philosophy: Erich Fromm Revisited

Humanistic Climate Philosophy: Erich Fromm Revisited, by Nicholas Dovellos. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Department of Philosophy, College of Arts and Sciences, University of South Florida. Co-Major Professor (Deceased): Martin Schönfeld, Ph.D. Co-Major Professor: Alex Levine, Ph.D. Co-Major Professor: Joshua Rayman, Ph.D. Govindan Parayil, Ph.D. Michael Morris, Ph.D. George Philippides, Ph.D. Date of Approval: July 7, 2020. Key Words: Climate Ethics, Actionability, Psychoanalysis, Econo-Philosophy, Productive Orientation, Biophilia, Necrophilia, Pathology, Narcissism, Alienation, Interiority. Copyright © 2020 Nicholas Dovellos. Table of Contents: Abstract; Introduction; Part I: The Current Climate Conversation: Work and Analysis of Contemporary Ethicists; Chapter 1: Preliminaries; Section I: Cape Town and Totalizing Systems; Section II: A Lack in Step Two: Actionability; Section III: Gardiner’s Analysis -

Celebration Schedule 2012 Provost's Office Gettysburg College

Celebration Celebration 2012 May 5th, 9:00 AM - 5:00 PM Celebration Schedule 2012 Provost's Office Gettysburg College Follow this and additional works at: https://cupola.gettysburg.edu/celebration Part of the Higher Education Commons Share feedback about the accessibility of this item. Provost's Office, "Celebration Schedule 2012" (2012). Celebration. 55. https://cupola.gettysburg.edu/celebration/2012/Panels/55 This open access event is brought to you by The Cupola: Scholarship at Gettysburg College. It has been accepted for inclusion by an authorized administrator of The Cupola. For more information, please contact [email protected]. Description Full presentation schedule for Celebration, May 5, 2012 Location Gettysburg College Disciplines Higher Education This event is available at The Cupola: Scholarship at Gettysburg College: https://cupola.gettysburg.edu/celebration/2012/Panels/55 From the Provost I am most pleased to welcome you to Gettysburg College’s Fourth Annual Colloquium on Undergraduate Research, Creative Activity, and Community Engagement. Today is truly a cause for celebration, as our students present the results of the great work they’ve been engaged in during the past year. Students representing all four class years and from across the disciplines are demonstrating today what’s best about the Gettysburg College experience: intentional collaborations between students and their mentors such that students acquire both knowledge and skills that can be applied to many facets of their future personal and professional lives. The benefits for those who mentor these young adults may, at first, be more difficult to discern. Most of those who engage in mentoring do it because they enjoy being around students who are eager to learn what they have to teach them. -

Murder-Suicide Ruled in Shooting a Homicide-Suicide Label Has Been Pinned on the Deaths Monday Morning of an Estranged St

112th Year, No. 17. ST. JOHNS, MICHIGAN - THURSDAY, AUGUST 17, 1967. 2 SECTIONS - 32 PAGES. 15 Cents. Murder-suicide ruled in shooting. A homicide-suicide label has been pinned on the deaths Monday morning of an estranged St. Johns couple whose divorce had become final less than an hour before the fatal shooting. The victims of the marital tragedy were: Mrs Alice Shivley, 25, who was shot through the heart with a 45-caliber pistol bullet; and Russell L. Shivley, 32, who shot himself with the same gun minutes after shooting his wife. He died at Clinton Memorial Hospital about 1 1/2 hours after the shooting incident. The scene of the tragedy was Mrs Shivley's home at 211 E. Lincoln Street, at the corner of Oakland Street and across Lincoln from the Federal-Mogul plant. It happened about 11:05 a.m. Monday. POLICE OFFICER Lyle French said Mr Shivley apparently shot himself just as he (French) arrived at the home in answer to a call about a shooting phoned in from the Federal-Mogul plant. He found Mr Shivley seriously wounded and lying on the floor of a garage adjacent to the house on the east side. [Photo captions: Victims; MRS ALICE SHIVLEY] ... en name, Alice Hackett. Police reconstructed the events this way. AFTER LEAVING court in the divorce hearing Monday morning, Mrs Shivley, now Alice Hackett again, was driven home by her mother, Mrs Ruth Patterson of 1013 1/2 S. Church Street. Police said Mrs Shivley wanted to pick up some papers at her Lincoln Street home. She got out of the car and went in the front door. Mrs Patterson got out of the car -

September 2010

THE ISBA BULLETIN Vol. 17 No. 3 September 2010. The official bulletin of the International Society for Bayesian Analysis. A MESSAGE FROM THE PRESIDENT. Peter Müller, ISBA President, 2010 [email protected] First some sad news. In August we lost two big Bayesians. Julian Besag passed away on August 6, and Arnold Zellner passed away on August 11. Arnold was one of the founding ISBA presidents and was instrumental to get Bayesian Analysis started. Obituaries on this issue of the Bulletin and on our homepage acknowledge Julian and Arnold’s pathbreaking contributions and their impact on the lives of many people in our research community. They will be missed dearly! ISBA Elections 2010. Please check out the election statements of the candidates for the upcoming ISBA elections. We have an amazing slate of candidates. Thanks to the nominating committee, Mike West (chair), Renato Martins Assunção, Jennifer Hill, Beatrix Jones, Jaeyong Lee, Yasuhiro Omori and Gareth Robert! Sections. ... membership renewal (there will be special provisions for members who hold multi-year memberships). The Bayesian Nonparametrics Section (ISBA/BNP) is already up and running, and planning the 2011 BNP workshop. Please see the News from the World section in this Bulletin and our homepage (select “business” and “meetings”). ISBA/SBSS Educational Initiative. Jointly with ASA/SBSS (American Statistical Association, Section on Bayesian Statistical Science) we launched a new joint educational initiative. The initiative formalized a long standing history of collaboration of ISBA and ASA/SBSS in related matters. Continued on page 2. In this issue: A MESSAGE FROM THE BA EDITOR (page 2); 2010 ISBA ELECTION (page 3); SAVAGE AWARD AND MITCHELL PRIZE 2010 (page 8). -

IMS Bulletin 39(4)

IMS Bulletin, Volume 39 • Issue 4, May 2010 (IMS 1935–2010). Meet the 2010 candidates. It’s time for the 2010 IMS elections, and we introduce this year’s nominees who are standing for IMS President-Elect and for IMS Council. You can read all the candidates’ statements, starting on page 4. This year there are also amendments to the Constitution and Bylaws to vote on: they are listed on page 9. Voting is open until June 26, so please visit https://secure.imstat.org/secure/vote2010/vote2010.asp to cast your vote! The candidate for IMS President-Elect is Ruth Williams. The ten Council candidates, clockwise from top left, are: Krzysztof Burdzy, Francisco Cribari-Neto, Arnoldo Frigessi, Peter Kim, Steve Lalley, Neal Madras, Gennady Samorodnitsky, Ingrid Van Keilegom, Yazhen Wang and Wing H Wong. Contents: IMS Elections (p. 1); Members’ News: new ISI members; Adrian Raftery; Richard Smith; IMS Collections vol 5 (pp. 2–3); IMS Election candidates (p. 4); Amendments to Constitution and Bylaws (p. 9); Letter to the Editor (p. 11); Medallion Preview: Laurens de Haan (p. 13); COPSS Fisher lecture: Bruce Lindsay (p. 14); Rick’s Ramblings: March Madness (p. 15); Terence’s Stuff: And ANOVA thing (p. 16); IMS meetings (p. 17); Other meetings (p. 27); Employment Opportunities (p. 30); International Calendar of Statistical Events (p. 31); Information for Advertisers (p. 35). Abstract submission deadline extended to April 30. ISSN 1544-1881. Contact information: IMS Bulletin Editor: Xuming He; Assistant Editor: Tati Howell. IMS members’ news: International Statistical Institute elects new members. Among the 54 new elected ISI members are several IMS members. We congratulate IMS Fellow Jon Wellner, and IMS members: Subhabrata Chakraborti, USA; Liliana Forzani, Argentina; Ronald D. -

Romantic Comedy-A Critical and Creative Enactment 15Oct19

Romantic comedy: a critical and creative enactment, by Toni Leigh Jordan, B.Sc., Dip. A. Submitted in fulfilment of the requirements for the degree of Doctor of Philosophy, Deakin University, June, 2019. Table of Contents: Abstract; Acknowledgements; Dedication; Exegesis; Introduction; The Denier; Trysts and Turbulence; You’ve Got Influences; Conclusion; Works cited; Creative Artefact; Addition; Fall Girl; Our Tiny, Useless Hearts. Abstract: For this thesis, I have written an exegetic complement to my published novels using innovative methodologies involving parody and fictocriticism to creatively engage with three iconic writers in order to explore issues of genre participation. Despite their different forms and eras, my subjects, the French playwright Molière (1622-1673), the English novelist Jane Austen (1775-1817), and the American screenwriter Nora Ephron (1941-2012), arguably participate in the romantic comedy genre, and my analysis of their work seeks to reveal both genre mechanics and the nature of sociocultural evaluation. I suggest that Molière did indeed participate in the romantic comedy genre, and it is both gender politics and the high cultural prestige of his work (and the low cultural prestige of romantic comedy) that prevents this classification. I also suggest that popular interpretations of Austen’s work are driven by romanticised analysis (inseparable from popular culture), rather than the values implicit in the work, and that Ephron’s clever and conscious use of postmodern techniques is frequently overlooked. Further, my exegesis shows how parody provides a productive methodology for critically exploring genre, and an appropriate one, given that genre involves repetition (or parody) of conventions and their relationship to form. -

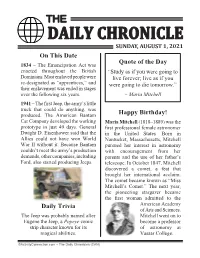

On This Date Daily Trivia Happy Birthday! Quote Of

SUNDAY, AUGUST 1, 2021. On This Date: 1834 – The Emancipation Act was enacted throughout the British Dominions. Most enslaved people were re-designated as “apprentices,” and their enslavement was ended in stages over the following six years. 1941 – The first Jeep, the army’s little truck that could do anything, was produced. The American Bantam Car Company developed the working prototype in just 49 days. General Dwight D. Eisenhower said that the Allies could not have won World War II without it. Because Bantam couldn’t meet the army’s production demands, other companies, including Ford, also started producing Jeeps. Quote of the Day: “Study as if you were going to live forever; live as if you were going to die tomorrow.” ~ Maria Mitchell. Happy Birthday! Maria Mitchell (1818–1889) was the first professional female astronomer in the United States. Born in Nantucket, Massachusetts, Mitchell pursued her interest in astronomy with encouragement from her parents and the use of her father’s telescope. In October 1847, Mitchell discovered a comet, a feat that brought her international acclaim. The comet became known as “Miss Mitchell’s Comet.” The next year, the pioneering stargazer became the first woman admitted to the American Academy of Arts and Sciences. Mitchell went on to become a professor of astronomy at Vassar College. Daily Trivia: The Jeep was probably named after Eugene the Jeep, a Popeye comic strip character known for its magical abilities. ©ActivityConnection.com – The Daily Chronicles (CAN). Today is Mahjong Day. While some folks think that this Chinese matching game was invented by Confucius, most historians believe that it was not created until the late 19th century, when a popular card game was converted to tiles. -

Tea: a High-Level Language and Runtime System for Automating

Tea: A High-level Language and Runtime System for Automating Statistical Analysis. Eunice Jun¹, Maureen Daum¹, Jared Roesch¹, Sarah Chasins², Emery Berger³,⁴, Rene Just¹, Katharina Reinecke¹. ¹University of Washington, Seattle, WA; ²University of California, Berkeley, CA; ³University of Massachusetts Amherst; ⁴Microsoft Research, Redmond, WA. emjun, mdaum, jroesch, [email protected]; rjust, [email protected]; [email protected] ABSTRACT: Though statistical analyses are centered on research questions and hypotheses, current statistical analysis tools are not. Users must first translate their hypotheses into specific statistical tests and then perform API calls with functions and parameters. To do so accurately requires that users have statistical expertise. To lower this barrier to valid, replicable statistical analysis, we introduce Tea, a high-level declarative language and runtime system. In Tea, users express their study design, any parametric assumptions, and their hypotheses. Tea compiles these high-level specifications into a constraint satisfaction problem that determines the set of valid statistical tests and then executes them to test the hypothesis. Since the development of modern statistical methods (e.g., Student’s t-test, ANOVA, etc.), statisticians have acknowledged the difficulty of identifying which statistical tests people should use to answer their specific research questions. Almost a century later, choosing appropriate statistical tests for evaluating a hypothesis remains a challenge. As a consequence, errors in statistical analyses are common [26], especially given that data analysis has become a common task for people with little to no statistical expertise. A wide variety of tools (such as SPSS [55], SAS [54], and JMP [52]), programming languages (e.g., R [53]), and libraries (including numpy [40], scipy [23], and statsmodels [45]), en- -

Saleyards Lifeline

Friday, 12 February, 2021. borderwatch.com.au | $3.00. WEATHER PAGE 18; TV GUIDE PAGES 21-22, 43-44; PUZZLES PAGES 12-13, 20; CLASSIFIEDS PAGES 47-49. City rail history turned to eyesore (STORY PAGE 3). Saleyards lifeline. RAQUEL MUSTILLO [email protected] LIVESTOCK agent John Chay has welcomed Wattle Range Council’s decision to keep the Millicent Saleyards operational, but warned “we have not won the war” due to the imposition of a number of conditions for its retention. After two years of uncertainty, the council-owned cattle selling facility was saved from permanent closure after elected members backed a motion to keep the gates open conditional on the yards maintaining a yearly throughput of 8500. At Tuesday night’s council meeting, elected members resumed discussion on a motion by cattle producer and Councillor Moira Neagle to continue the operations of the facility until 2025, develop a management plan for the facility and consider recurrent funding for capital upgrades. But Penola-based Rick Paltridge, who has been a vocal opponent of continuing the saleyards, told the chamber he had spoken to “numerous people around the region” including stock agents, truck drivers, meat buyers and farmers who he claimed believed the saleyards should be closed down. STORY PAGE 5. UNSIGHTLY: National Trust South Australia Mount Gambier branch chair Nathan Woodruff has urged maintenance to occur at the old Mount Gambier roundhouse site, which has become an eyesore adjacent to the popular shared use path. Picture: MOLLY TAYLOR. Arsenic alarm at Bay. RAQUEL MUSTILLO an onsite well. -

December 2000

THE ISBA BULLETIN Vol. 7 No. 4 December 2000. The official bulletin of the International Society for Bayesian Analysis. A WORD FROM THE PRESIDENT, by Philip Dawid, ISBA President [email protected] Radical Probabilism. Modern Bayesianism is doing a wonderful job in an enormous range of applied activities, supplying modelling, data analysis and inference procedures to nourish parts that other techniques cannot reach. But Bayesianism is far more than a bag of tricks for helping other specialists out with their tricky problems – it is a totally original way of thinking about the world we live in. I was forcibly struck by this when I had to deliver some brief ... already lays out all the elements of the philosophical position that he was to continue to develop and promote (to a largely uncomprehending audience) for the rest of his life. He is utterly uncompromising in his rejection of the realist conception that Probability is somehow “out there in the world”, and in his pragmatist emphasis on Subjective Probability as something that can be measured and regulated by suitable instruments (betting behaviour, or proper scoring rules). What de Finetti constructed was, essentially, a whole new theory of logic – in the broad sense of principles for thinking and learning about how the world behaves. ... mere statisticians might have anything to say to them that could possibly be worth listening to. I recently acted as an expert witness for the defence in a murder appeal, which revolved around a variant of the “Prosecutor’s Fallacy” (the confusion of P(innocence | evidence) with P(evidence | innocence)). Contents: ISBA Elections and Logo (page 2); Interview with Lindley (page 3); New prizes (page 5). -

A Study of Postmodern Narrative in Akbar Radi's Khanomche and Mahtabi

International Journal of Applied Linguistics & English Literature ISSN 2200-3592 (Print), ISSN 2200-3452 (Online) Vol. 1 No. 4; September 2012 A Study of Postmodern Narrative in Akbar Radi's Khanomche and Mahtabi Hajar Abbasi Narinabad Address: 43, Jafari Nasab Alley, Hafte-Tir, Karaj, Iran Tel: 00989370931548 E-mail: [email protected] Received: 30-06-2012 Accepted: 30-08-2012 Published: 01-09-2012 doi:10.7575/ijalel.v.1n.4p.245 URL: http://dx.doi.org/10.7575/ijalel.v.1n.4p.245 Abstract This project explores the representation of narratology in light of some recent postmodern theories, supported by the ideas of theorists including Roland Barthes, Jean-François Lyotard, Julia Kristeva and William James, with a special focus on the drama Khanomche and Mahtabi by the Persian playwright Akbar Radi. The article begins by providing a terminological view of narrative in general and postmodern narrative in particular. As postmodern narrative elements are too broad for the researcher to cover them all, she examines the most prominent ones: intertextuality, stream of consciousness style, fragmentation and representation, which are delicately utilized in Khanomche and Mahtabi. The review then critically applies the theories to the drama. The article concludes by recommending a few directions for further research. Keywords: Intertextuality, Stream of Consciousness, Fragmentation, Postmodern Reality 1. Introduction Narratologists have tried to define narrative in one way or another; one will define it without difficulty as "the representation of an event or sequence of events" (Genette, 1980, p. 127). In Genette's terminology it refers to the producing narrative action and, by extension, the whole of the real or fictional situation in which that action takes place (Ibid.