Application of Automatic Speech Recognition Technologies to Singing

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

DOCUMENT RESUME ED 262 129 UD 024 466 Hawaiian Studies

DOCUMENT RESUME ED 262 129 UD 024 466 TITLE Hawaiian Studies Curriculum Guide. Grades K-1. INSTITUTION Hawaii State Dept. of Education, Honolulu. Office of Instructional Services. PUB DATE Dec 83 NOTE 316p.; For the Curriculum Guides for Grades 2,3, and 4, see UD 024 467-468, and ED 255 597. "-PUB TYPE Guides Classroom Use - Guides (For Teachers) (052) EDRS PRICE MF01/PC13 Plus Postage. DESCRIPTORS Community Resources; *Cultural Awareness; *Cultural Education; *Early Childhood Education; Grade 1; Hawaiian; *Hawaiians; Instructional Materials; Kindergarten; *Learning Activities; Pacific Americans; *Teacher Aides; Vocabulary IDENTIFIERS *Hawaii ABSTRACT This curriculum guide suggests activities and educational experiences within a Hawaiian cultural context for kindergarten and Grade 1 students in Hawaiian schools. First, a introduction-discusses the contents of the guide, the relations Hip of - the classroom teacher and the kupuna (ljawaiian-speaking elder); the identification and scheduling of Kupunas; and how to use the ide. The remainder of the guide is divided into two major sections. Each is preceded by an overview which outlines the subject areas into which Hawaiian Studies instruction is integrated; the emphases or major lesson topics taken up within each subject, area; the learning objectives addressed by the instructional activities; and a key to the unit's appendices, which provide cultural information to supplement the activities. The activities in Unit I focus on the "self" and the immediate environment. They are said to give children ___Dppor-tumit-ies to investigate and experience feelings and ideas and then to determine whether they are acceptable within classroom and home situations. The activities of Unit II involve the children in experiences dealing with the "'ohana" (family) by having them identify roles, functions, dependencies, rights, responsibilities, occupations, and other cultural characteristics of the 'ohana. -

MUSIC NOTES: Exploring Music Listening Data As a Visual Representation of Self

MUSIC NOTES: Exploring Music Listening Data as a Visual Representation of Self Chad Philip Hall A thesis submitted in partial fulfillment of the requirements for the degree of: Master of Design University of Washington 2016 Committee: Kristine Matthews Karen Cheng Linda Norlen Program Authorized to Offer Degree: Art ©Copyright 2016 Chad Philip Hall University of Washington Abstract MUSIC NOTES: Exploring Music Listening Data as a Visual Representation of Self Chad Philip Hall Co-Chairs of the Supervisory Committee: Kristine Matthews, Associate Professor + Chair Division of Design, Visual Communication Design School of Art + Art History + Design Karen Cheng, Professor Division of Design, Visual Communication Design School of Art + Art History + Design Shelves of vinyl records and cassette tapes spark thoughts and mem ories at a quick glance. In the shift to digital formats, we lost physical artifacts but gained data as a rich, but often hidden artifact of our music listening. This project tracked and visualized the music listening habits of eight people over 30 days to explore how this data can serve as a visual representation of self and present new opportunities for reflection. 1 exploring music listening data as MUSIC NOTES a visual representation of self CHAD PHILIP HALL 2 A THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF: master of design university of washington 2016 COMMITTEE: kristine matthews karen cheng linda norlen PROGRAM AUTHORIZED TO OFFER DEGREE: school of art + art history + design, division -

D Onion River Reviewd

Completely Casey Lendway My mother always told me that there would come a day when the plot of my own life would not make sense to me anymore. She never told me about boys who are godless with their mouths, or the ones who sit like cicadas on your doorstep screaming about the ghosts who inhabit their brainstems. d Onion River Review 201 6 d D Onion River Review d 2016 dd Onion River Review d 2016 river run by Briana Brady Agi Chretien Lily Gardner Victoria Sullivan Cory Warren Cody Wasuta Editors’ Note As we put together this year’s Onion River Review, we tried our very best to produce another weird little book of which we could be proud. Every year, the Onion takes a new shape, filled with the imaginations of its submitters and pieced together by a changing group of editors whose only common denominator is that they’re as fantastic (read: bizarre) as it gets. This year, as we sifted through submissions, we found work that made us think stuff, feel stuff, move stuff, pick stuff up and put it back down. We loved stuff, fought for stuff, ate way too many Double Stufs, and, in the end, managed to stuff this book with some pretty great stuff. So what is all this stuff? In the process of compiling the pieces that make up this year’s issue, we dove into some of the Big Questions: art, politics, relatability, perspective, lighting, birds, vegetables, and, most usefully, how to correctly identify and eradicate the plague of our time, lizard people. -

January 1988

VOLUME 12, NUMBER 1, ISSUE 99 Cover Photo by Lissa Wales Wales PHIL GOULD Lissa In addition to drumming with Level 42, Phil Gould also is a by songwriter and lyricist for the group, which helps him fit his drums into the total picture. Photo by Simon Goodwin 16 RICHIE MORALES After paying years of dues with such artists as Herbie Mann, Ray Barretto, Gato Barbieri, and the Brecker Bros., Richie Morales is getting wide exposure with Spyro Gyra. by Jeff Potter 22 CHICK WEBB Although he died at the age of 33, Chick Webb had a lasting impact on jazz drumming, and was idolized by such notables as Gene Krupa and Buddy Rich. by Burt Korall 26 PERSONAL RELATIONSHIPS The many demands of a music career can interfere with a marriage or relationship. We spoke to several couples, including Steve and Susan Smith, Rod and Michele Morgenstein, and Tris and Celia Imboden, to find out what makes their relationships work. by Robyn Flans 30 MD TRIVIA CONTEST Win a Yamaha drumkit. 36 EDUCATION DRIVER'S SEAT by Rick Mattingly, Bob Saydlowski, Jr., and Rick Van Horn IN THE STUDIO Matching Drum Sounds To Big Band 122 Studio-Ready Drums Figures by Ed Shaughnessy 100 ELECTRONIC REVIEW by Craig Krampf 38 Dynacord P-20 Digital MIDI Drumkit TRACKING ROCK CHARTS by Bob Saydlowski, Jr. 126 Beware Of The Simple Drum Chart Steve Smith: "Lovin", Touchin', by Hank Jaramillo 42 Squeezin' " NEW AND NOTABLE 132 JAZZ DRUMMERS' WORKSHOP by Michael Lawson 102 PROFILES Meeting A Piece Of Music For The TIMP TALK First Time Dialogue For Timpani And Drumset FROM THE PAST by Peter Erskine 60 by Vic Firth 104 England's Phil Seamen THE MACHINE SHOP by Simon Goodwin 44 The Funk Machine SOUTH OF THE BORDER by Clive Brooks 66 The Merengue PORTRAITS 108 ROCK 'N' JAZZ CLINIC by John Santos Portinho A Little Can Go Long Way CONCEPTS by Carl Stormer 68 by Rod Morgenstein 80 Confidence 116 NEWS by Roy Burns LISTENER'S GUIDE UPDATE 6 Buddy Rich CLUB SCENE INDUSTRY HAPPENINGS 128 by Mark Gauthier 82 Periodic Checkups 118 MASTER CLASS by Rick Van Horn REVIEWS Portraits In Rhythm: Etude #10 ON TAPE 62 by Anthony J. -

June 2020 Volume 87 / Number 6

JUNE 2020 VOLUME 87 / NUMBER 6 President Kevin Maher Publisher Frank Alkyer Editor Bobby Reed Reviews Editor Dave Cantor Contributing Editor Ed Enright Creative Director ŽanetaÎuntová Design Assistant Will Dutton Assistant to the Publisher Sue Mahal Bookkeeper Evelyn Oakes ADVERTISING SALES Record Companies & Schools Jennifer Ruban-Gentile Vice President of Sales 630-359-9345 [email protected] Musical Instruments & East Coast Schools Ritche Deraney Vice President of Sales 201-445-6260 [email protected] Advertising Sales Associate Grace Blackford 630-359-9358 [email protected] OFFICES 102 N. Haven Road, Elmhurst, IL 60126–2970 630-941-2030 / Fax: 630-941-3210 http://downbeat.com [email protected] CUSTOMER SERVICE 877-904-5299 / [email protected] CONTRIBUTORS Senior Contributors: Michael Bourne, Aaron Cohen, Howard Mandel, John McDonough Atlanta: Jon Ross; Boston: Fred Bouchard, Frank-John Hadley; Chicago: Alain Drouot, Michael Jackson, Jeff Johnson, Peter Margasak, Bill Meyer, Paul Natkin, Howard Reich; Indiana: Mark Sheldon; Los Angeles: Earl Gibson, Andy Hermann, Sean J. O’Connell, Chris Walker, Josef Woodard, Scott Yanow; Michigan: John Ephland; Minneapolis: Andrea Canter; Nashville: Bob Doerschuk; New Orleans: Erika Goldring, Jennifer Odell; New York: Herb Boyd, Bill Douthart, Philip Freeman, Stephanie Jones, Matthew Kassel, Jimmy Katz, Suzanne Lorge, Phillip Lutz, Jim Macnie, Ken Micallef, Bill Milkowski, Allen Morrison, Dan Ouellette, Ted Panken, Tom Staudter, Jack Vartoogian; Philadelphia: Shaun Brady; Portland: Robert Ham; San Francisco: Yoshi Kato, Denise Sullivan; Seattle: Paul de Barros; Washington, D.C.: Willard Jenkins, John Murph, Michael Wilderman; Canada: J.D. Considine, James Hale; France: Jean Szlamowicz; Germany: Hyou Vielz; Great Britain: Andrew Jones; Portugal: José Duarte; Romania: Virgil Mihaiu; Russia: Cyril Moshkow. -

Call Me Maybe Deconstructed

Hit Songs Deconstructed Deconstructing Today's Hits for Songwriting Success http://reports.hitsongsdeconstructed.com Call Me Maybe Deconstructed Skip to: Audio/Video At a Glance Song Overview Structural Analysis Momentum/Tension/Intensity (MTI) Lyrics & Harmonic Progression The Music The Vocal Melody Primary Instrumentation, Tone & Mix Compositional Assessment Hit Factor Assessment Conclusion Why it’s a Hit Songwriter/Producer Take Aways Audio/Video Back to Top At a Glance Back to Top Artist: Carly Rae Jepsen Song/Album: Call Me Maybe / Curiosity Songwriters: Josh Ramsay, Tavish Crowe, Carly Rae Jepsen Genre: Pop Sub Genre: Dance/Club, Teen Pop Length: 3:13 Structure: A-B-A-B-C-B-C Tempo: Mid/Up (120 bpm) First Chorus Occurrence: 0:28 (15% into the song) Intro Length: 0:04 Outro Length: 0:09 1 / 36 Hit Songs Deconstructed Deconstructing Today's Hits for Songwriting Success http://reports.hitsongsdeconstructed.com Primary Instrumentation: Combo (Electric + “acoustic” sounding synth strings) Lyrical Theme: Love/Relationships Title Occurrences: “Call Me Maybe” occurs 12 times within the song Primary Lyrical P.O.V: 1st & 2nd Song Overview Back to Top If there was just one thing to be learned from Carly Rae Jepsen’s Call Me Maybe, it would be that EXCEPTIONAL CRAFT is paramount to opening the doors to success, especially in today’s mainstream music industry. If the song wasn’t as strong as it is, Justin Bieber and Selena Gomez would never have picked up on it, tweeted about it, and in the end help launch the song, and Carly Rae Jepsen into virtual overnight superstardom. -

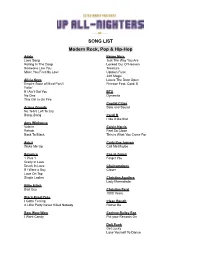

Band Song-List

SONG LIST Modern Rock, Pop & Hip-Hop Adele Bruno Mars Love Song Just The Way You Are Rolling In The Deep Locked Out Of Heaven Someone Like You Treasure Make You Feel My Love Uptown Funk 24K Magic Alicia Keys Leave The Door Open Empire State of Mind Part II Finesse Feat. Cardi B Fallin' If I Ain't Got You BTS No One Dynamite This Girl Is On Fire Capital Cities Ariana Grande Safe and Sound No Tears Left To Cry Bang, Bang Cardi B I like it like that Amy Winhouse Valerie Calvin Harris Rehab Feel So Close Back To Black This is What You Came For Avicii Carly Rae Jepsen Wake Me Up Call Me Maybe Beyonce Cee-lo Green 1 Plus 1 Forget You Crazy In Love Drunk In Love Chainsmokers If I Were a Boy Closer Love On Top Single Ladies Christina Aguilera Lady Marmalade Billie Eilish Bad Guy Christina Perri 1000 Years Black-Eyed Peas I Gotta Feeling Clean Bandit A Little Party Never Killed Nobody Rather Be Bow Wow Wow Corinne Bailey Rae I Want Candy Put your Records On Daft Punk Get Lucky Lose Yourself To Dance Justin Timberlake Darius Rucker Suit & Tie Wagon Wheel Can’t Stop The Feeling Cry Me A River David Guetta Love You Like I Love You Titanium Feat. Sia Sexy Back Drake Jay-Z and Alicia Keys Hotline Bling Empire State of Mind One Dance In My Feelings Jess Glynne Hold One We’re Going Home Hold My Hand Too Good Controlla Jessie J Bang, Bang DNCE Domino Cake By The Ocean Kygo Disclosure Higher Love Latch Katy Perry Dua Lipa Chained To the Rhythm Don’t Start Now California Gurls Levitating Firework Teenage Dream Duffy Mercy Lady Gaga Bad Romance Ed Sheeran Just Dance Shape Of You Poker Face Thinking Out loud Perfect Duet Feat. -

AWARDS 10X DIAMOND ALBUM September // 9/1/16 - 9/30/16 CHRIS STAPLETON//TRAVELLER 2X MULTI-PLATINUM ALBUM

RIAA GOLD & PLATINUM ADELE//25 AWARDS 10X DIAMOND ALBUM September // 9/1/16 - 9/30/16 CHRIS STAPLETON//TRAVELLER 2X MULTI-PLATINUM ALBUM In September 2016, RIAA certified 87 Digital Single Awards and FIFTH HARMONY//7/27 23 Album Awards. All RIAA Awards GOLD ALBUM dating back to 1958, plus top tallies for your favorite artists, are available THOMAS RHETT//TANGLED UP at riaa.com/gold-platinum! PLATINUM ALBUM FARRUKO//VISIONARY SONGS PLATINO ALBUM www.riaa.com //// //// GOLD & PLATINUM AWARDS SEPTEMBER // 9/1/16 - 9/31/16 MULTI PLATINUM SINGLE // 23 Cert Date// Title// Artist// Genre// Label// Plat Level// Rel. Date// 9/20/16 Hello Adele Pop Columbia/Xl Recordings 10/23/15 R&B/ 9/9/16 Exchange Bryson Tiller RCA 9/21/15 Hip Hop 9/28/16 Call Me Maybe Carly Rae Jepsen Pop Schoolboy/Interscope 3/1/12 R&B/ Waverecordings/Empire/ 9/23/16 Broccoli D.R.A.M. 4/6/16 Hip Hop Atlantic Hollywood Records & Island 9/23/16 Cool For The Summer Demi Lovato Pop 7/1/15 Records R&B/ Young Money/Cash Money/ 9/1/16 One Dance Drake Hip Hop 4/12/16 Republic Records Dance/Elec R&B/Hip Young Money/Cash Money/ 9/1/16 One Dance Drake Hop 4/12/16 Republic Records Dance/Elec R&B/ Young Money/Cash Money/ 9/1/16 One Dance Drake Hip Hop 4/12/16 Republic Records Dance/Elec Work From Home 9/6/16 Fifth Harmony Pop Syco Music/Epic 2/26/16 Feat. Ty Dolla $Ign Future Feat. -

Infusion (Faxable).Pub

Song List CURRENT/CLUB AIN’T IT FUN—Paramore HEY YA—Outkast AIN’T NO OTHER MAN - Christina Aguilera HOLD IT AGAINST ME—Britney Spears ALL THIS TIME—One Republic I FEEL LIKE BUSTIN’ LOOSE—Chuck Brown ALL TIED UP—Robin Thicke I GOTTA FEELIN’ - Black Eyed Peas APPLAUSE—Lady Gaga I KNEW YOU WERE TROUBLE—Taylor Swift ATMOSPHERE—Kaskade I KNOW YOU WANT ME—Pitbull BAD ROMANCE - Lady Gaga I LIKE IT LIKE THAT - Hot Chelle Rae BEAUTIFUL—Akon I NEED YOUR LOVE—Clavin Harris & Ellie Goulding BLOW ME ONE LAST KISS - Pink I WANNA DANCE WITH SOMEBODY—Whitney Houston BLURRED LINES—Robin Thicke & Pharrell I WANT YOU BACK—Cher Lloyd BORN THIS WAY—Lady Gaga INTERNATIONAL LOVE—Pitbull/Chris Brown CALIFORNIA GURLS - Katy Perry JUMP AROUND—House of Payne CALL ME MAYBE - Carly Rae Jepsen JUST DANCE - Lady Gaga CLARITY—Zedd JUST FINE—Mary J. Blige COMPASS—Lady Antebellum LADIES NIGHT - Kool & The Gang CRAZY IN LOVE - Beyonce’ LE FREAK—Chic DA BUTT—E.U. LET’S GET IT STARTED - Black Eye Peas DÉJÀ VU—Beyonce LET’S GO - Neyo DISCO INFERNO - The Trammps LOCKED OUT OF HEAVEN—Bruno Mars DJ GOT US FALLING IN LOVE—Usher/Pitbull LOVE ON TOP—Beyonce DOMINO—Jessie J LOVE SHACK—The B 52’s DRIVE BY - Train LOVE YOU LIKE A SONG—Selena Gomez DYNAMITE—Taio Cruz MO MONEY MO PROBLEMS—Notorious BIG EDGE OF GLORY - Lady Gaga MONSTER—Eminem & Rihanna EMPIRE STATE OF MIND—Alicia Keys & Jay Z MORE - Usher EVERY LITTLE STEP - Bobby Brown MOVES LIKE JAGGER - Maroon 5 FEELS SO CLOSE—Calvin Harris OMG - Usher FINALLY—CeCe Peniston ON THE FLOOR—Jennifer Lopez FIREWORK - Katy Perry ONE MORE -

Commission Meeting Agenda a Public Notice of the Federal Communications

Commission Meeting Agenda A Public Notice of the Federal Communications Federal Communications Commission Commission 445 12th Street, S.W. News Media Information (202) 418-0500 Washington, D.C. 20554 Fax-On-Demand (202) 418-2830 Internet: http://www.fcc.gov ftp.fcc.gov December 11, 2006 **Revised** FCC Announces Agenda for Public Hearing on Media Ownership in Nashville, Tennessee (NOTE: The information included in the previously released meeting agenda is supplemented in this notice.) The Federal Communications Commission today announced further details of its previously announced Nashville field hearing regarding media ownership (see announcements dated November 14, December 1 and December 5). As previously announced, the hearing date, time, and location are as follows: Date: Monday, December 11, 2006 Time: 1:00p.m. CST Location: Belmont University Massey Performing Arts Center Massey Concert Hall 1900 Belmont Blvd Nashville, TN Link to Massey Performing Arts Center: http://www.belmont.edu/music_about/facilities/concert_hall_massey.html Link to Belmont Campus Map and Directions: http://www.belmont.edu/campusmap/ The purpose of the hearing is to fully involve the public in the process of the 2006 Quadrennial Broadcast Media Ownership Review that the Commission is currently conducting. This hearing is the second in a series of media ownership hearings the Commission intends to hold across the country. *The summaries listed in this notice are intended for the use of the public attending open Commission meetings. Information not summarized may also be considered at such meetings. Consequently these summaries should not be interpreted to limit the Commission's authority to consider any relevant information. The hearing is open to the public, and seating will be available on a first-come, first- served basis. -

George P. Johnson Negro Film Collection LSC.1042

http://oac.cdlib.org/findaid/ark:/13030/tf5s2006kz No online items George P. Johnson Negro Film Collection LSC.1042 Finding aid prepared by Hilda Bohem; machine-readable finding aid created by Caroline Cubé UCLA Library Special Collections Online finding aid last updated on 2020 November 2. Room A1713, Charles E. Young Research Library Box 951575 Los Angeles, CA 90095-1575 [email protected] URL: https://www.library.ucla.edu/special-collections George P. Johnson Negro Film LSC.1042 1 Collection LSC.1042 Contributing Institution: UCLA Library Special Collections Title: George P. Johnson Negro Film collection Identifier/Call Number: LSC.1042 Physical Description: 35.5 Linear Feet(71 boxes) Date (inclusive): 1916-1977 Abstract: George Perry Johnson (1885-1977) was a writer, producer, and distributor for the Lincoln Motion Picture Company (1916-23). After the company closed, he established and ran the Pacific Coast News Bureau for the dissemination of Negro news of national importance (1923-27). He started the Negro in film collection about the time he started working for Lincoln. The collection consists of newspaper clippings, photographs, publicity material, posters, correspondence, and business records related to early Black film companies, Black films, films with Black casts, and Black musicians, sports figures and entertainers. Stored off-site. All requests to access special collections material must be made in advance using the request button located on this page. Language of Material: English . Conditions Governing Access Open for research. All requests to access special collections materials must be made in advance using the request button located on this page. Portions of this collection are available on microfilm (12 reels) in UCLA Library Special Collections. -

Hundreds of Country Artists Have Graced the New Faces Stage. Some

314 performers, new face book 39 years, 1 stage undreds of country artists have graced the New Faces stage. Some of them twice. An accounting of every one sounds like an enormous Requests Htask...until you actually do it and realize the word “enormous” doesn’t quite measure up. 3 friend requests Still, the Country Aircheck team dug in and tracked down as many as possible. We asked a few for their memories of the experience. For others, we were barely able to find biographical information. And we skipped the from his home in Nashville. for Nestea, Miller Beer, Pizza Hut and “The New Faces Show had Union 76, among others. details on artists who are still active. (If you need us to explain George Strait, all those radio people, and for instance, you’re probably reading the wrong publication.) Enjoy. I made a lot of friends. I do Jeanne Pruett: Alabama native Pruett remember they had me use enjoyed a solid string of hits from the early the staff band, and I was ’70s right into the ’80s including the No. resides in Nashville, still tours and will suffering some anxiety over not being able 1 smash, “Satin Sheets.” Pruett is based receive a star in the Hollywood Walk of to use my band.” outside of Nashville and is still active as a 1970 Fame in October, 2009. performer and as a member of the Grand Jack Barlow: He charted with Ole Opry. hits like “Baby, Ain’t That Love” Bobby Harden: Starting out with his two 1972 and “Birmingham Blues,” but by sisters as the pop-singing Harden Trio, Connie Eaton: The Nashville native started Mel Street: West Virginian Street racked the mid-’70s Barlow had become the nationally Harden cracked the country Top 50 back her country career as a teenager and hit the up a long string of hits throughout the ’70s, famous voice of Big Red chewing gum.