Decamds User's Guide

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

Validated Products List, 1995 No. 3: Programming Languages, Database

NISTIR 5693 (Supersedes NISTIR 5629) VALIDATED PRODUCTS LIST Volume 1 1995 No. 3 Programming Languages Database Language SQL Graphics POSIX Computer Security Product Data - IGES Judy B. Kailey, Editor U.S. DEPARTMENT OF COMMERCE Technology Administration National Institute of Standards and Technology Computer Systems Laboratory Software Standards Validation Group Gaithersburg, MD 20899 July 1995 (Supersedes April 1995 issue) U.S. DEPARTMENT OF COMMERCE Ronald H. Brown, Secretary TECHNOLOGY ADMINISTRATION Mary L. Good, Under Secretary for Technology NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY Arati Prabhakar, Director FOREWORD The Validated Products List (VPL) identifies information technology products that have been tested for conformance to Federal Information Processing Standards (FIPS) in accordance with Computer Systems Laboratory (CSL) conformance testing procedures, and have a current validation certificate or registered test report. The VPL also contains information about the organizations, test methods and procedures that support the validation programs for the FIPS identified in this document. The VPL includes computer language processors for programming languages COBOL, Fortran, Ada, Pascal, C, M[UMPS], and database language SQL; computer graphic implementations for GKS, COM, PHIGS, and Raster Graphics; operating system implementations for POSIX; Open Systems Interconnection implementations; and computer security implementations for DES, MAC and Key Management. -

The Design and Verification of the AlphaStation 600 5-Series Workstation by John H

The Design and Verification of the AlphaStation 600 5-series Workstation by John H. Zurawski, John E. Murray, and Paul J. Lemmon ABSTRACT The AlphaStation 600 5-series workstation is a high-performance, uniprocessor design based on the Alpha 21164 microprocessor and on the PCI bus. Six CMOS ASICs provide high-bandwidth, low-latency interconnects between the CPU, the main memory, and the I/O subsystem. The verification effort used directed, pseudorandom testing on a VERILOG software model. A hardware-based verification technique provided a test throughput that resulted in a significant improvement over software tests. This technique currently involves the use of graphics cards to emulate generic DMA devices. A PCI hardware demon is under development to further enhance the capability of the hardware-based verification. INTRODUCTION The high-performance AlphaStation 600 5-series workstation is based on the fastest Alpha microprocessor to date -- the Alpha 21164.[1] The I/O subsystem uses the 64-bit version of the Peripheral Component Interconnect (PCI) and the Extended Industry Standard Architecture (EISA) bus. The AlphaStation 600 supports three operating systems: Digital UNIX (formerly DEC OSF/1), OpenVMS, and Microsoft's Windows NT. This workstation series uses the DECchip 21171 chip set designed and built by Digital. These chips provide high-bandwidth, low-latency interconnects between the CPU, the main memory, and the PCI bus. This paper describes the architecture and features of the AlphaStation 600 5-series workstation and the DECchip 21171 chip set. The system overview is first presented, followed by a detailed discussion of the chip set. The paper then describes the cache and memory designs, detailing how the memory design evolved from the workstation's requirements. -

Alpha and VAX Comparison Based on Industry-Standard Benchmark

Alpha and VAX Comparison based on Industry-standard Benchmark Results Digital Equipment Corporation December 1994 EC-N3909-10 Version 3.0 December 1994 The information in this document is subject to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that may appear in this document. Digital conducts its business in a manner that conserves the environment and protects the safety and health of its employees, customers, and the community. Restricted Rights: Use, duplication, or disclosure by the U.S. Government is subject to restrictions as set forth in subparagraph (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013. Copyright © 1994 Digital Equipment Corporation All rights reserved. Printed in U.S.A. The following are trademarks of Digital Equipment Corporation: AlphaServer, AlphaStation, AlphaGeneration, DEC, OpenVMS, VMS, ULTRIX, and the DIGITAL logo. The following are third-party trademarks: MIPS is a trademark of MIPS Computer Systems, Inc. TPC-A is a trademark of the Transaction Processing Performance Council. INFORMIX is a registered trademark of Informix Software, Inc. OSF/1 is a registered trademark of the Open Software Foundation, Inc. ORACLE is a registered trademark of Oracle Corporation. SPEC, SPECfp92, and SPECratio are trademarks of Standard Performance Evaluation Corporation. All other trademarks and registered -

Computer Architectures an Overview

Computer Architectures An Overview PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC Contents Articles: Microarchitecture, x86, PowerPC, IBM POWER, MIPS architecture, SPARC, ARM architecture, DEC Alpha, AlphaStation, AlphaServer, Very long instruction word, Instruction-level parallelism, Explicitly parallel instruction computing. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License. Microarchitecture In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design. Relation to instruction set architecture The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, address and data formats among other things. [Figure: Intel Core microarchitecture] The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic units (ALUs) and even larger elements. -

Software Product Information

Software Product Information PRODUCT NAME: clearVISN Traffic Policy Manager Version 1.0a - Windows Suite 9033168 September, 1999 Description clearVISN Traffic Policy Manager is a network management application for balancing the traffic load across the multiple LANs on the MultiSwitch 900 backplane. This helps to ensure optimal network availability and response times for network users. clearVISN Traffic Policy Manager lets you use the bandwidth that you already purchased when buying DIGITAL’s MultiSwitch 900 switching platforms with Ethernet switching and port switching modules. Traffic Policy Manager provides a simple, automatic way to balance the load across multiple segments. Instead of manually moving ports from one segment to another as traffic load patterns change, you can run Traffic Policy Manager to continuously sense and react to ever changing conditions on the LAN segments. Traffic Policy Manager monitors the utilization of DECswitch or VNswitch ports attached to the backplane and the utilization on each port of a PORTswitch module, then moves PORTswitch ports from a heavily utilized LAN to a more lightly utilized LAN. Traffic Policy Manager can be run in any one of three modes: 1) Monitor-only mode: Traffic Policy Manager displays all of the Ethernet backplane LANs and to which LAN each of the PORTswitch ports is connected. A bar-graph to the right of the matrix displays the utilization on each of the LANs. A vertical bar associated with each PORTswitch port represents the port utilization. As you place the pointer over a port bar graph or over a LAN segment, a pop-up message identifies the name of the port or segment. -

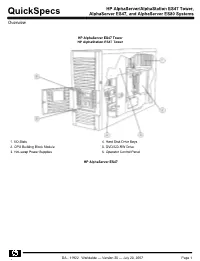

HP AlphaServer/AlphaStation ES47 Tower, AlphaServer ES47, And

QuickSpecs: HP AlphaServer/AlphaStation ES47 Tower, AlphaServer ES47, and AlphaServer ES80 Systems (DA - 11922, Worldwide, Version 25, July 23, 2007) Overview HP AlphaServer ES47 Tower, HP AlphaStation ES47 Tower: 1. I/O Slots 2. CPU Building Block Module 3. Hot-swap Power Supplies 4. Hard Disk Drive Bays 5. DVD/CD-RW Drive 6. Operator Control Panel HP AlphaServer ES47: 1. Cable/DSL HUB 2. StorageWorks Drawer (optional) 3. PCI/PCI-X I/O Expansion Drawer(s) (optional) 4. System Building Block Drawer (Model 2 includes 1 drawer; Model 4 includes 2 drawers) 5. AC input controller(s) (mandatory options) HP AlphaServer ES80: 1. Cable/DSL HUB 2. StorageWorks Drawer (optional) 3. PCI/PCI-X I/O Expansion Drawer(s) (optional) 4. System Building Block Drawer (Model 2 includes 1 drawer; Model 3 includes 2 drawers; Model 6 includes 3 drawers; Model 8 includes 4 drawers) 5. AC input controller(s) (mandatory options) At A Glance AlphaServer ES47/ES80 systems Up to 8 Alpha 21364 EV7 processors at 1150 MHz and 1000 MHz with advanced on-chip memory controllers and switch logic capable of providing 12.3 GB/s of memory bandwidth -

Personal Workstation (Alpha) User Information

Personal Workstation (Alpha) User Information Order Number: EK-ALMIA-UI. A01 October 1996 Digital Equipment Corporation Maynard, Massachusetts October 1996 The information in this document is subject to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that might appear in this document. The software, if any, described in this document is furnished under a license and may be used or copied only in accordance with the terms of such license. No responsibility is assumed for the use or reliability of software or equipment that is not supplied by Digital Equipment Corporation or its affiliated companies. Restricted Rights: Use, duplication, or disclosure by the U.S. Government is subject to restrictions as set forth in subparagraph (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013. Copyright 1996 Digital Equipment Corporation. All Rights Reserved. The following are trademarks of Digital Equipment Corporation: AlphaStation, DEC, Digital, Digital Semiconductor, OpenVMS, ThinWire, and the DIGITAL logo. The following are third-party trademarks: Microsoft and Windows NT are registered trademarks of Microsoft Corporation. UNIX is a registered trademark in the United States and other countries licensed exclusively through X/Open Company Ltd. All other trademarks or registered trademarks are the property of their respective holders. This document was produced with Microsoft Word for Windows, V6.0c. FCC Information - Class B This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC rules. -

VAX VMS at 20

1977–1997... and beyond Nothing Stops It! Of all the winning attributes of the OpenVMS operating system, perhaps its key success factor is its evolutionary spirit. Some would say OpenVMS was revolutionary. But I would prefer to call it evolutionary because its transition has been peaceful and constructive. Over a 20-year period, OpenVMS has experienced evolution in five arenas. First, it evolved from a system running on some 20 printed circuit boards to a single chip. Second, it evolved from being proprietary to open. Third, it evolved from running on CISC-based VAX to RISC-based Alpha systems. Fourth, VMS evolved from being primarily a technical operating system, to a commercial operating system, to a high availability mission-critical commercial operating system. And fifth, VMS evolved from time-sharing to a workstation environment, to a client/server computing style environment. The hardware has experienced a similar evolution. Just as the 16-bit PDP systems laid the groundwork for the VAX platform, VAX laid the groundwork for Alpha, the industry’s leading 64-bit systems. While the platforms have grown and changed, the success continues. Today, OpenVMS is the most flexible and adaptable operating system on the planet. What started out as the concept of ‘Starlet’ in 1975 is moving into ‘Galaxy’ for the 21st century. And like the universe, there is no end in sight. (Jesse Lipcon, Vice President of UNIX and OpenVMS Systems Business Unit) TABLE OF CONTENTS CHAPTER I Changing the Face of Computing 4 CHAPTER II Setting the Stage 6 CHAPTER -

Digital AlphaStation 250 Series User Information

Digital AlphaStation™ 250 Series User Information Order Number: EK-PCCTA-UI. A01 Digital Equipment Corporation Maynard, Massachusetts January 1995 The information in this document is subject to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that might appear in this document. The software, if any, described in this document is furnished under a license and may be used or copied only in accordance with the terms of such license. No responsibility is assumed for the use or reliability of software or equipment that is not supplied by Digital Equipment Corporation or its affiliated companies. Restricted Rights: Use, duplication, or disclosure by the U.S. Government is subject to restrictions as set forth in subparagraph (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013. Copyright 1995 Digital Equipment Corporation. All Rights Reserved. The following are trademarks of Digital Equipment Corporation: AlphaGeneration, AlphaStation, DEC, Digital, OpenVMS, ThinWire, and the DIGITAL logo. The following are third-party trademarks: Microsoft and Windows NT are registered trademarks of Microsoft Corporation. SIMM is a trademark of Molex Corporation. OSF/1 is a trademark of the Open Software Foundation, Inc. All other trademarks or registered trademarks are the property of their respective holders. This document was produced with Microsoft Word for Windows, V2.0c. FCC Information - Class B This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC rules. -

Digital Personal Workstation Service Maintenance Guide

Digital Personal Workstation Service Maintenance Manual Part Number: ER-B30WW-SM. A01 Digital Equipment Corporation September 1996 The information in this document is subject to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that might appear in this document. The software described in this document is furnished under a license and may be used or copied only in accordance with the terms of such license. No responsibility is assumed for the use or reliability of software or equipment that is not supplied by Digital Equipment Corporation or its affiliated companies. Restricted Rights: Use, duplication, or disclosure by the U.S. Government is subject to restrictions as set forth in subparagraph (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013. Copyright Digital Equipment Corporation. All Rights Reserved. DEC and the Digital logo are registered trademarks of Digital Equipment Corporation. Intel is a registered trademark of Intel Corporation. Pentium-Pro are registered trademarks of Intel Corporation. Microsoft and Windows NT are registered trademarks of Microsoft Corporation. SIMM is a registered trademark of Wang Laboratories. All other trademarks and registered trademarks are the property of their respective holders. The FCC wants you to know... This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC rules. -

The Computer History Simulation Project

The Computer History Simulation Project is a loose Internet-based collective of people interested in restoring historically significant computer hardware and software systems by simulation. The goal of the project is to create highly portable system simulators and to publish them as freeware on the Internet, with freely available copies of significant or representative software. Simulators SIMH is a highly portable, multi-system simulator. ● Download the latest sources for SIMH (V3.5-1 updated 15-Oct-2005 - see change log). ● Download a zip file containing Windows executables for all the SIMH simulators. The VAX and PDP-11 are compiled without Ethernet support. Versions with Ethernet support are available here. If you download the executables, you should download the source archive as well, as it contains the documentation and other supporting files. ● If your host system is Alpha/VMS, and you want Ethernet support, you need to download the VMS Pcap library and execlet here. SIMH implements simulators for: ● Data General Nova, Eclipse ● Digital Equipment Corporation PDP-1, PDP-4, PDP-7, PDP-8, PDP-9, PDP-10, PDP-11, PDP-15, VAX ● GRI Corporation GRI-909 ● IBM 1401, 1620, 1130, System 3 ● Interdata (Perkin-Elmer) 16b and 32b systems ● Hewlett-Packard 2116, 2100, 21MX ● Honeywell H316/H516 ● MITS Altair 8800, with both 8080 and Z80 ● Royal-McBee LGP-30, LGP-21 ● Scientific Data Systems SDS 940 Also available is a collection of tools for manipulating simulator file formats and for cross-assembling code for the PDP-1, PDP-7, PDP-8, and PDP-11. -

Digital AlphaStation 200 4/233 CPU Upgrade Information

Digital AlphaStation™ 200 4/233 CPU Upgrade Information Order Number: EK–PCDTA–CP. A01 Digital Equipment Corporation Maynard, Massachusetts December 1995 The information in this document is subject to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that might appear in this document. The software, if any, described in this document is furnished under a license and may be used or copied only in accordance with the terms of such license. No responsibility is assumed for the use or reliability of software or equipment that is not supplied by Digital Equipment Corporation or its affiliated companies. Restricted Rights: Use, duplication, or disclosure by the U.S. Government is subject to restrictions as set forth in subparagraph (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013. Copyright 1995 Digital Equipment Corporation. All Rights Reserved. The following are trademarks of Digital Equipment Corporation: AlphaGeneration, AlphaStation, DEC, Digital, and the DIGITAL logo. The following are third-party trademarks: Microsoft and Microsoft Windows NT are registered trademarks of Microsoft Corporation. All other trademarks or registered trademarks are the property of their respective holders. This document was produced with Microsoft Word for Windows, V2.0c. FCC Information - Class B This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation.