Alpha and VAX Comparison Based on Industry-Standard Benchmark

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

AlphaServer 4000/4100 Configuration and Installation Guide

AlphaServer 4000/4100 Configuration and Installation Guide Order Number: EK–4100A–CG. E01 This manual is for manufacturing and field personnel, and resellers who require AlphaServer 4000/4100 system drawer configuration information. Digital Equipment Corporation Maynard, Massachusetts First Printing, June 1997 Digital Equipment Corporation makes no representations that the use of its products in the manner described in this publication will not infringe on existing or future patent rights, nor do the descriptions contained in this publication imply the granting of licenses to make, use, or sell equipment or software in accordance with the description. The information in this document is subject to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that may appear in this document. The software, if any, described in this document is furnished under a license and may be used or copied only in accordance with the terms of such license. No responsibility is assumed for the use or reliability of software or equipment that is not supplied by Digital Equipment Corporation or its affiliated companies. Copyright 1997 by Digital Equipment Corporation. All rights reserved. The following are trademarks of Digital Equipment Corporation: AlphaServer, OpenVMS, StorageWorks, VAX, and the DIGITAL logo. The following are third-party trademarks: UNIX is a registered trademark in the U.S. and other countries, licensed exclusively through X/Open Company Ltd. Windows NT is a trademark of Microsoft, Inc. All other trademarks and registered trademarks are the property of their respective holders. FCC Notice: The equipment described in this manual generates, uses, and may emit radio frequency energy.

Validated Products List, 1995 No. 3: Programming Languages, Database

NISTIR 5693 (Supersedes NISTIR 5629) VALIDATED PRODUCTS LIST Volume 1 1995 No. 3 Programming Languages, Database Language SQL, Graphics, POSIX, Computer Security, Product Data - IGES. Judy B. Kailey, Editor U.S. DEPARTMENT OF COMMERCE Technology Administration National Institute of Standards and Technology Computer Systems Laboratory Software Standards Validation Group Gaithersburg, MD 20899 July 1995 (Supersedes April 1995 issue) U.S. DEPARTMENT OF COMMERCE Ronald H. Brown, Secretary TECHNOLOGY ADMINISTRATION Mary L. Good, Under Secretary for Technology NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY Arati Prabhakar, Director FOREWORD The Validated Products List (VPL) identifies information technology products that have been tested for conformance to Federal Information Processing Standards (FIPS) in accordance with Computer Systems Laboratory (CSL) conformance testing procedures, and have a current validation certificate or registered test report. The VPL also contains information about the organizations, test methods and procedures that support the validation programs for the FIPS identified in this document. The VPL includes computer language processors for programming languages COBOL, Fortran, Ada, Pascal, C, M[UMPS], and database language SQL; computer graphic implementations for GKS, COM, PHIGS, and Raster Graphics; operating system implementations for POSIX; Open Systems Interconnection implementations; and computer security implementations for DES, MAC and Key Management.

The Design and Verification of the AlphaStation 600 5-series Workstation

The Design and Verification of the AlphaStation 600 5-series Workstation by John H. Zurawski, John E. Murray, and Paul J. Lemmon ABSTRACT The AlphaStation 600 5-series workstation is a high-performance, uniprocessor design based on the Alpha 21164 microprocessor and on the PCI bus. Six CMOS ASICs provide high-bandwidth, low-latency interconnects between the CPU, the main memory, and the I/O subsystem. The verification effort used directed, pseudorandom testing on a VERILOG software model. A hardware-based verification technique provided a test throughput that resulted in a significant improvement over software tests. This technique currently involves the use of graphics cards to emulate generic DMA devices. A PCI hardware demon is under development to further enhance the capability of the hardware-based verification. INTRODUCTION The high-performance AlphaStation 600 5-series workstation is based on the fastest Alpha microprocessor to date -- the Alpha 21164.[1] The I/O subsystem uses the 64-bit version of the Peripheral Component Interconnect (PCI) and the Extended Industry Standard Architecture (EISA) bus. The AlphaStation 600 supports three operating systems: Digital UNIX (formerly DEC OSF/1), OpenVMS, and Microsoft's Windows NT. This workstation series uses the DECchip 21171 chip set designed and built by Digital. These chips provide high-bandwidth, low-latency interconnects between the CPU, the main memory, and the PCI bus. This paper describes the architecture and features of the AlphaStation 600 5-series workstation and the DECchip 21171 chip set. The system overview is first presented, followed by a detailed discussion of the chip set. The paper then describes the cache and memory designs, detailing how the memory design evolved from the workstation's requirements.

HS1CP Device Channel Processor Operating Software HSOF V3.1

HS1CP Device Channel Processor Operating Software HSOF Version 3.1 Release Notes Order Number: EK−HS1CP−RN. E01 This document summarizes features and characteristics of the HS1CP Device Channel Processor operating software Version 3.1 that are not covered elsewhere in the documentation. These release notes also contain instructions for installing the software. Note: These Release Notes contain instructions for C_SWAP and HSUTIL that supersede information in the product documentation. Keep these Release Notes near your StorageWorks subsystem. Software Version: HSOF V3.1 Digital Equipment Corporation Maynard, Massachusetts April 1997 While Digital Equipment Corporation believes the information included in this manual is correct as of the date of publication, it is subject to change without notice. DIGITAL makes no representations that the interconnection of its products in the manner described in this document will not infringe existing or future patent rights, nor do the descriptions contained in this document imply the granting of licenses to make, use, or sell equipment or software in accordance with the description. No responsibility is assumed for the use or reliability of firmware on equipment not supplied by DIGITAL or its affiliated companies. Possession, use, or copying of the software or firmware described in this documentation is authorized only pursuant to a valid written license from DIGITAL, an authorized sublicensor, or the identified licensor. Commercial Computer Software, Computer Software Documentation and Technical Data for Commercial Items are licensed to the U.S. Government with DIGITAL’s standard commercial license and, when applicable, the rights in DFAR 252.227-7015, “Technical Data–Commercial Items.” © Digital Equipment Corporation 1997.

XA9846175 INDC(NDS)

XA9846175 International Atomic Energy Agency INDC(NDS)-374 Distr. G, NC INTERNATIONAL NUCLEAR DATA COMMITTEE Report on the Consultants' Meeting on CO-ORDINATION OF THE NUCLEAR REACTION DATA CENTERS (Technical Aspects) IAEA Headquarters, Vienna, Austria 26-28 May 1997 Edited by O. Schwerer and H. Wienke October 1997 IAEA NUCLEAR DATA SECTION, WAGRAMERSTRASSE 5, A-1400 VIENNA Reproduced by the IAEA in Austria October 1997 Contents: Introduction; The Network of Nuclear Reaction Data Centers; List of Acronyms; Agenda; List of Participants; Conclusions and Actions; Appendix 1: Agreement on Charged-Particle Data Compilation Responsibility; Status Reports: R1 Nuclear Data Section, R2 RIKEN, R3 JCPRG, R4 CAJAD, R5 NDG-VNIIEF, R6 CNDC, R7 CJD, R8 NEA Data Bank, R9 ATOMKI, R10 NNDC; Selected Working Papers. Introduction The IAEA Nuclear Data Section convenes in annual intervals coordination meetings of the Network of the Nuclear Reaction Data Centers. The last meeting, with center heads and technical staff present, took place in Brookhaven, USA, 3-7 June 1996. See the report INDC(NDS)-360. The present meeting was attended by technical staff only to discuss technical matters of the nuclear data compilation and exchange by means of the jointly operated computerized systems CINDA, EXFOR, ENDF and others.

Software Product Description

Software Product Description PRODUCT NAME: Uniplex™ Business Software for ULTRIX on RISC, Version 7.00c SPD 32.83.01 DESCRIPTION Uniplex Business Software is a product of Uniplex Limited and distributed under Digital Equipment Corporation’s Terms and Conditions. Uniplex Business Software is a suite of UNIX™-based office applications designed to handle routine office activities. Uniplex II Plus, the base program for all Uniplex Business Software, provides word processing, spreadsheet, database and business graphics. In addition to Uniplex II Plus, several optional applications can be added to expand overall office functionality. Uniplex Advanced Office System (AOS) adds electronic mail, time manager, personal organizer, card index and report writer. Uniplex Advanced Graphics System (AGS) provides charting, graphing and freehand draw capabilities. Uniplex Additional Dictionary Pack (ADP) provides support for multiple dictionaries which enables users to spellcheck documents in various languages. Uniplex Business Software uses consistent commands, menus and softkeys throughout all applications. Users may "hot-key" between several applications or between … • On-screen print effects • Paragraph and page numbering • Decimal tab and column align • Headers, footers, footnotes and endnotes • Line and box drawing, sketch mode • Auto backup and auto save • Document boilerplate merge • Flexible search and replace • Automatic index and table of contents generation • Spelling dictionary and thesaurus • Flexible printer formatting on Digital printers Spreadsheet The Uniplex spreadsheet provides the user with a choice of using either the Uniplex interface or an industry standard interface (ISSI) similar to that used by other leading spreadsheet products. Data can be imported from Lotus® 1-2-3, DIF or ASCII files into a matrix as large as 1024 rows x 256 columns.

VAX 4000 V96-2.3—10 Feb 1997

VAX 4000 V96-2.3, 10 Feb 1997 DIGITAL Systems and Options Catalog Product Description VAX 4000 systems provide commercial systems performance, high availability, and a compact footprint. They support a wide range of applications and options, including FDDI networks and Q-bus peripherals. System enclosure supports internal storage and Q-bus expansion through a B400X expansion cabinet. VAX 4000 systems come in three packages: Desktop Model 106A, Desktop/Deskside Model 108, and Pedestal/Deskside Model 505A/705A. DSSI and Ethernet adapter chips, each driven by a 10-MIP on-chip RISC processor, are tightly integrated on the CPU module with direct access to memory. Digital's DSSI-to-SCSI HSD10 storage solutions replace DSSI RF36 disk technology in all VAX 4000 systems. Digital’s HSD10 DSSI-to-SCSI controller, mounted internally in the system cabinet, supports standard RZxx SCSI storage on VAX 4000 systems while still supporting DSSI clustering. External StorageWorks HSD10 controllers are supported. VAX 4000 Model 106A offers the performance of the 10-ns NVAX chip. Systems achieve 215 transactions per second (TPS). With internal support for the HSD10 DSSI-to-SCSI controller, VAX 4000 customers can take advantage of low-cost, more flexible and open StorageWorks solutions. VAX 4000 Model 108 offers identical performance, is compatible with Model 106A, but is housed in a new Desktop/Deskside minitower enclosure. In addition, these systems offer enhancements in the memory and storage capacity, supporting up to 512 MB of standard SIMM memory and six storage devices in the system enclosure. VAX 4000 Model 505A and 705A offer 12 ns and 9 ns performance, respectively, in a Q-bus Pedestal package.

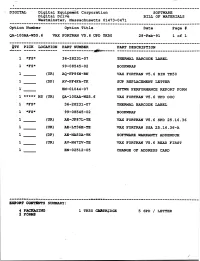

SPD / LETTER FOR

DIGITAL Digital Equipment Corporation, Digital Drive, Westminster, Massachusetts 01473-0471 SOFTWARE BILL OF MATERIALS Option Number, Option Title, Date, Page: QA-10 OAA-W55.6, VAX FORTRAN V5.6 UPD TK50, 28-Feb-91, 1 of 1. QTY, PICK LOCATION, PART NUMBER, PART DESCRIPTION: 1 *FS* 36-28231-07 THERMAL BARCODE LABEL; 1 *FS* 99-08545-02 BOOKWRAP; 1 (UR) AQ-FP 8 6N-BN VAX FORTRAN V5.6 BIN TK50; 1 DP AV-PF4FA-TK SUP REPLACEMENT LETTER; 1 EN-01044-07 SFTWR PERFORMANCE REPORT FORM; 1 ***** NS QA-10 OAA-WZ 5.6 VAX FORTRAN V5.6 UPD DOC; 1 *FS* 36-28231-0? THERMAL BARCODE LABEL; 1 *FS* 99-08545-02 BOOKWRAP; 1 (UR) AE-JF8?L-TE VAX FORTRAN V5.6 SPD 2 5.16.3 6; 1 AE-LT36H-TE VAX FORTRAN SSA 2 5.16.3 6 -A; 1 SDP AE -1~tA,5 OA- TK SOFTWARE WTY ADDENDUM; 1 (UR) AV-N672V-TE VAX FORTRAN V5.6 PAD FIRS T; 1 EN-02512-05 CHANGE OF ADDRESS CARD. SPD / LETTER FOR: AV-PF4FA-TK October, 1990 Dear Service Customer, Enclosed is a software product update/maintenance release supplied as part of your software maintenance agreement. As part of its planned License Management Tools program, Digital has initiated replacement of all Service Update PAKs (SUPs) for licensed software product with License Product Authorization Keys (PAKs).

VAX 4000 Model 300

VAX 4000 Model 300 Technical Information Order Number EK-337AA-TI-001 Digital Equipment Corporation Maynard, Massachusetts First Printing, March 1990 The information in this document is subject to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that may appear in this document. The software, if any, described in this document is furnished under a license and may be used or copied only in accordance with the terms of such license. No responsibility is assumed for the use or reliability of software or equipment that is not supplied by Digital Equipment Corporation or its affiliated companies. Restricted Rights: Use, duplication or disclosure by the U.S. Government is subject to restrictions as set forth in subparagraph (c)(1)(ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013. © Digital Equipment Corporation 1990. All rights reserved. Printed in U.S.A. The Reader's Comments form at the end of this document requests your critical evaluation to assist in preparing future documentation. The following are trademarks of Digital Equipment Corporation: COMPACTape, DDCMP, DEC, DECdirect, DECnet, DECserver, DECsystem 5400, DECUS, DECwriter, DELNI, DELQA, DEQNA, DESTA, DSSI, IVIS, Letterprinter, Letterwriter, MicroVAX, PDP, Professional, Q-bus, ReGIS, RQDX, ScriptPrinter, ThinWire, ULTRIX, UNIBUS, VAX, VAX 4000, VAXcluster, VAX DOCUMENT, VAXELN, VAXlab, VMS, VT, and the DIGITAL logo. Amphenol is a trademark of Amphenol Corporation. Bell is a trademark of Bell telephone companies.

HP OpenVMS Cluster Software SPD 29.78.27

Software Product Description PRODUCT NAME: HP OpenVMS Cluster Software SPD 29.78.27 This Software Product Description describes Versions 6.2–1H3, 7.1–1H1, 7.1–1H2, 7.1–2, 7.2, 7.2–1, 7.2–1H1, 7.2-2, 7.3, 7.3–1, 7.3–2, 8.2, 8.2–1 and 8.3 of the following products: • HP VMScluster Software for OpenVMS for Integrity Servers • HP VMScluster Software for OpenVMS AlphaServers (through V8.3) • HP VAXcluster Software for OpenVMS VAX (through V7.3) • HP OpenVMS Cluster Client Software for Integrity Servers • HP OpenVMS Cluster Client Software for AlphaServers (part of NAS150) • HP OpenVMS Cluster Client Software for VAX (through V7.3) (part of NAS150) Except where noted, the features described in this SPD apply equally to Integrity server, AlphaServers and … a mix of AlphaServer and VAX or AlphaServer and Integrity server systems. In this SPD, this environment is referred to as an OpenVMS Cluster system. Systems in an OpenVMS Cluster system can share processing, mass storage (including system disks), and other resources under a single OpenVMS security and management domain. Within this highly integrated environment, systems retain their independence because they use local, memory-resident copies of the OpenVMS operating system. Thus, OpenVMS Cluster systems can boot and shut down independently while benefiting from common resources. Applications running on one or more systems in an OpenVMS Cluster system can access shared resources in a coordinated manner. OpenVMS Cluster software components synchronize access to shared resources, allowing multiple processes on any system in the OpenVMS Cluster to perform coordinated, shared data updates. Because resources are shared, OpenVMS Cluster systems offer higher availability than standalone systems.

Validated Processor List

NISTIR 4557 Programming Languages and Database Language SQL VALIDATED PROCESSOR LIST Including GOSIP Conformance Testing Registers Judy B. Kailey, Editor U.S. DEPARTMENT OF COMMERCE National Institute of Standards and Technology National Computer Systems Laboratory Software Standards Validation Group Gaithersburg, MD 20899 April 1991 (Supersedes January 1991 Issue) U.S. DEPARTMENT OF COMMERCE Robert A. Mosbacher, Secretary NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY John W. Lyons, Director TABLE OF CONTENTS 1. INTRODUCTION 1.1 Purpose 1.2 Document Organization 1.2.1 Language Processors 1.2.2 Contributors to the VPL 1.2.3 Other FIPS Conformance Testing Products 1.2.4 GOSIP Registers 1.3 FIPS Programming and Database Language Standards 1.4 Validation of Processors 1.4.1 Validation Requirements 1.4.2 Placement in the List 1.4.3 Removal from the List 1.4.4 Validation Procedures 1.5 Certificate of Validation 1.6 Registered Report 1.7 Processor Validation Suites 2. COBOL PROCESSORS 3. FORTRAN PROCESSORS 4. Ada PROCESSORS 5. Pascal PROCESSORS 6. SQL PROCESSORS APPENDIX A CONTRIBUTORS TO THE LIST APPENDIX B OTHER FIPS CONFORMANCE TESTING APPENDIX C REGISTER OF GOSIP ABSTRACT TEST SUITES APPENDIX D REGISTER OF GOSIP MEANS OF TESTING APPENDIX E REGISTER OF GOSIP CONFORMANCE TESTING LABORATORIES

1 DS-DWZZH-05 UltraSCSI Hub 1.1 General Overview

TruCluster Software Products Hardware Configuration Technical Update for DS-DWZZH-05 UltraSCSI Hub December 1998 Product Version: TruCluster Production Server Software Software Version 1.4A and TruCluster Production Server Software Version 1.5 and TruCluster Available Server Software 1.4A and TruCluster Available Server Software Version 1.5 Operating System and Version: Compaq’s DIGITAL UNIX Version 4.0B and Compaq’s DIGITAL UNIX Version 4.0D This technical update describes how to configure the DS-DWZZH-05 UltraSCSI hub in a TruCluster Software Products environment. Compaq Computer Corporation Houston, Texas © Compaq Computer Corporation 1998 All rights reserved. The following are trademarks of Compaq Computer Corporation: ALL–IN–1, Alpha AXP, AlphaGeneration, AlphaServer, AltaVista, ATMworks, AXP, Bookreader, CDA, DDIS, DEC, DEC Ada, DEC Fortran, DEC FUSE, DECnet, DECstation, DECsystem, DECterm, DECUS, DECwindows, DTIF, Massbus, MicroVAX, OpenVMS, POLYCENTER, PrintServer, Q–bus, StorageWorks, TruCluster, ULTRIX, ULTRIX Mail Connection, ULTRIX Worksystem Software, UNIBUS, VAX, VAXstation, VMS, XUI, and the Compaq logo. Prestoserve is a trademark of Legato Systems, Inc.; the trademark and software are licensed to Compaq Computer Corporation by Legato Systems, Inc. NFS is a registered trademark of Sun Microsystems, Inc. Open Software Foundation, OSF, OSF/1, OSF/Motif, and Motif are trademarks of the Open Software Foundation, Inc. UNIX is a registered trademark in the United States and other countries, licensed exclusively through X/Open Company,