On TTEthernet for Integrated Fault-Tolerant Spacecraft Networks

Recommended publications

On TTEthernet for Integrated Fault-Tolerant Spacecraft Networks

On TTEthernet for Integrated Fault-Tolerant Spacecraft Networks
Andrew Loveless∗ NASA Johnson Space Center, Houston, TX, 77058

There has recently been a push for adopting integrated modular avionics (IMA) principles in designing spacecraft architectures. This consolidation of multiple vehicle functions to shared computing platforms can significantly reduce spacecraft cost, weight, and design complexity. Ethernet technology is attractive for inclusion in more integrated avionic systems due to its high speed, flexibility, and the availability of inexpensive commercial off-the-shelf (COTS) components. Furthermore, Ethernet can be augmented with a variety of quality of service (QoS) enhancements that enable its use for transmitting critical data. TTEthernet introduces a decentralized clock synchronization paradigm enabling the use of time-triggered Ethernet messaging appropriate for hard real-time applications. TTEthernet can also provide two forms of event-driven communication, therefore accommodating the full spectrum of traffic criticality levels required in IMA architectures. This paper explores the application of TTEthernet technology to future IMA spacecraft architectures as part of the Avionics and Software (A&S) project chartered by NASA's Advanced Exploration Systems (AES) program.

Nomenclature
A&S = Avionics and Software Project
AA2 = Ascent Abort 2
AES = Advanced Exploration Systems Program
ANTARES = Advanced NASA Technology Architecture for Exploration Studies
API = Application Program Interface
ARM = Asteroid Redirect Mission

EtherCAT – Ultra-Fast Communication Standard

EtherCAT – ultra-fast communication standard
EtherCAT: global standard for real-time Ethernet from the field to the I/Os

In 2003, Beckhoff introduces its EtherCAT technology into the market. The EtherCAT Technology Group (ETG) is formed, supported initially by 33 founder members. The ETG goes on to standardize and maintain the technology. The group is the largest fieldbus user organization in the world, with more than 5000 members (as of 2019) currently. In 2005, the Safety over EtherCAT protocol is rolled out, expanding the EtherCAT specification to enable safe transmission of safety-relevant control data. The low-footprint protocol uses a so-called Black Channel, making it completely independent of the communication system used.

In 2007, EtherCAT is adopted as an IEC standard, underscoring how open the system is. To this day, the specification remains unchanged; it has only been extended and compatibility has been retained. As a result, devices from the early years, even from as far back as 2003, are still interoperable with today’s devices in the same networks. Another milestone is achieved in 2016 with EtherCAT P, which introduces the ability to carry power (2 x 24 V) on a standard Cat.5 cable alongside EtherCAT data. This paves the way for machines without control cabinets. The launch of EtherCAT G/G10 in 2018 in-

How it works
The key functional principle of EtherCAT lies in how its nodes process Ethernet frames: each node reads the data addressed to it and writes its data back to the frame all while the frame is moving downstream.

Flexible topology
An EtherCAT network can sup-
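The "How it works" principle in the excerpt above can be illustrated with a small simulation. This is only a sketch under simplifying assumptions: the `Node` class, its `offset`/`length` slice layout, and the `pass_frame` helper are hypothetical names invented for illustration, and each node's slice is assumed to fit inside the payload. Real EtherCAT datagrams carry addressing, commands, and a working counter that are not modelled here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical node that owns one fixed slice of the frame payload."""

    offset: int            # start of this node's slice in the payload
    length: int            # size of the slice in bytes
    to_send: bytes = b""   # data the node writes back into the frame
    received: bytes = b""  # data the node last read from the frame

    def process(self, frame: bytearray) -> None:
        end = self.offset + self.length
        # Read the bytes addressed to this node.
        self.received = bytes(frame[self.offset:end])
        # Write this node's data into the same slice, zero-padded or
        # truncated so the frame length never changes.
        frame[self.offset:end] = self.to_send.ljust(self.length, b"\x00")[: self.length]


def pass_frame(payload: bytes, nodes: list[Node]) -> bytes:
    """Send one frame through every node in order and return what comes back."""
    frame = bytearray(payload)
    for node in nodes:
        node.process(frame)
    return bytes(frame)


if __name__ == "__main__":
    chain = [Node(0, 2, to_send=b"\xaa\xbb"), Node(2, 2, to_send=b"\xcc")]
    returned = pass_frame(b"\x01\x02\x03\x04", chain)
    print(returned.hex(), [n.received.hex() for n in chain])
```

The point of the sketch is that a single frame serves every node in one pass: no node needs its own request/response exchange with the controller.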

The Role of CAN in the Age of Ethernet and IOT

iCC 2017, CAN in Automation
The role of CAN in the age of Ethernet and IOT
Christian Schlegel, HMS Industrial Networks

CAN technology was developed in the 1980s and became available in 1987, just as other industrial fieldbus systems like PROFIBUS or INTERBUS entered the stage of industrial communication. Beside the fact that CAN is a success in the automotive industry and used in all types of cars today, it has also made its way in many other industrial areas. About 15 years ago, new technologies based on Ethernet started to emerge, with appealing and sometimes outstanding features. Some six years ago Ethernet also started to find its way into automobiles. Today, other new communication technologies are showing up on the horizon driven by the omnipresent Industrial Internet of Things. But even now, 30 years after their introduction, these “classic” fieldbus technologies are still alive – with varying success. Since CAN was initially developed with a focus for use in automobiles, CAN has certain features that still make it the best choice for many applications in automobiles and industrial areas – even when compared to the newer technologies.

This paper discusses why CAN is still a valid or even better choice for certain application areas than Ethernet-based technologies, not just focusing on the advanced features provided by the enhanced capabilities of CAN FD but also highlighting how these applications benefit from the features of “classic” CAN.

Looking back into history … when CAN was born
… the requirement to transmit data between these ECUs but also to connect sensors and actuators to them.

An Extension of the OMNeT++ INET Framework for Simulating Real-Time Ethernet with High Accuracy

An Extension of the OMNeT++ INET Framework for Simulating Real-time Ethernet with High Accuracy
Till Steinbach, Hermand Dieumo Kenfack, Franz Korf, Thomas C. Schmidt
HAW-Hamburg, Department Informatik, Berliner Tor 7, D-20099 Hamburg, Germany
{till.steinbach, hermand.dieumo, korf, schmidt}@informatik.haw-hamburg.de

ABSTRACT
Real-time extensions to standard switched Ethernet widen the realm of computer networking into the time-critical domain. These technologies have started to establish in process automation, while Ethernet-based communication infrastructures in vehicles are novel and challenged by particularly hard real-time constraints. Simulation tools are of vital importance to explore the technical feasibility and facilitate the distributed process of vehicle infrastructure design. This paper introduces an extension of the OMNeT++ INET framework for simulating real-time Ethernet with high temporal accuracy. Our module implements the TTEthernet protocol, a real-time extension to standard Ethernet that is proposed for standardisation.

... those fields; real-time Ethernet is a promising candidate for the upcoming tasks. Ethernet has proven to be a flexible, widely deployed, and highly scalable protocol. Current Ethernet is a technology based on switching that also allows to increase the amount of traffic simultaneously transferred, by using segregated communication in groups. However, due to its randomised media access and best effort approach, it does not provide reliable temporal performance bounds. Real-time extensions to Ethernet promise to overcome those obstacles. In process automation, several Ethernet-based products already provide real-time functionality for tasks with strict temporal constraints. The use of real-time Ethernet in an

The Future of CAN / CANopen and the Industrial Ethernet Challenge by Wilfried Voss, President esd electronics, Inc USA

The Future of CAN / CANopen and the Industrial Ethernet Challenge
by Wilfried Voss, President esd electronics, Inc USA

Industrial Ethernet technologies are a formidable challenge to CANopen as the low-cost industrial networking technology of choice. Ethernet technologies will eventually replace the majority of CANopen applications, at least in regards to new developments.

For many years, Controller Area Network (CAN) and CANopen, a higher-layer protocol based on CAN, represented the best choice for low-cost industrial embedded networking. However, since the official introduction of CAN in 1986, there has been a quest to replace CAN and CANopen to overcome the most obvious shortcomings such as limited baud rate and limited network length. Industrial Ethernet technologies are currently the most formidable challenge to CANopen as the low-cost industrial networking technology of choice. Ethernet technologies will eventually replace the majority of CANopen applications, at least in regards to new developments, starting at this very moment in certain areas such as industrial control including motion control and, especially, robotics. Ironically, CAN - the underlying hardware layer of CANopen - has a far greater lifetime expectancy in the North American market than CANopen as a higher layer protocol.

However, there can be too much of a good thing, and that is definitely the case when it comes to Ethernet-based fieldbus technologies. There are currently more than 20 different industrial Ethernet solutions available, all with their distinctive advantages and disadvantages, making a pro/contra decision difficult. The major question, besides the technical aspect, is which of these technologies will survive in the market, and how do they support the current need for control components.

Profinet vs Profibus

Profinet vs Profibus
Pouya Aminaie (1) and Poorya Aminaie (2)
(1) Department of ECE, Shiraz University, Shiraz, Iran
(2) Department of ECE, Shahid Beheshti University, Tehran, Iran
E-mail: [email protected]

Abstract
We present a step-by-step definition of Profinet and Profibus. We introduce the different types of each of the two communication protocols. We then describe the topology and performance of each one individually. Finally, their properties are compared to show which one performs better in industry.

Keywords: Profinet, Profibus, Industrial Ethernet and Communication Networks

1. Introduction

1.1. Profinet
Profinet is the abbreviation for Process Field Net, which refers to technical standards for data communication through Ethernet in the industry. These types of standards are used for gathering data and controlling industrial equipment. As can be seen from Fig.1, Profinet satisfies all the needs of industrial technologies.

Fig.1 Requirement of automation technology [1]

The need for Profinet is felt in production automation and processing automation sections, where its use can resolve many of these needs. Profinet can be divided into two main categories, as follows:
• Profinet IO
• Profinet CBA

1.2. Profibus
The word Profibus is taken from the phrase Process Field Bus. The scope of this protocol covers from the field level to the control level. The advantages of Profibus are as follows:
1. Low noise acceptance due to twisted pair cable being the transmission interface.
2. Appropriate bandwidth due to the use of an appropriate transmission method such as RS485.
3. Secure and non-interfering data exchange due to the use of the token pass access method.

ETHERNET Powerlink Real-Time Industrial ETHERNET Servo Drives & Controllers

aerospace, climate control, electromechanical, filtration, fluid & gas handling, hydraulics, pneumatics, process control, sealing & shielding

ETHERNET Powerlink Real-Time Industrial ETHERNET Servo Drives & Controllers
Parker Hannifin Corporation • Electromechanical Automation Division • 800-358-9070 • www.parkermotion.com

ETHERNET Powerlink MotionBus Systems from the Global Leader in Motion Control

Parker understands the challenges facing OEMs in high-tech industries. To help meet their challenges, Parker’s team of highly experienced motion system designers use a systematic project management process to deliver the most advanced linear motion technologies available. For all industrial automation solutions, Parker Automation combines speed, accuracy and high-load capability to give machine builders and OEMs a competitive edge.

Medical device manufacturers utilize Parker’s integrated automation solutions specifically designed to reduce time-to-market and engineering costs while improving compliance with today’s stringent government regulations.

For semiconductor manufacturers, our extensive expertise in vacuum preparation, cleanroom facilities and large-format systems enable us to design and

Parker is the only supplier that can provide complete technical and engineered solutions to OEMs for any packaging requirement. Parker’s innovative engineering, breadth of line, worldwide distribution, and outstanding customer service set the standard for the industrial motion market in all these areas:
• Application analysis
• Engineering assistance

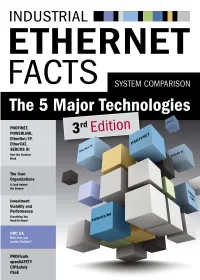

Industrial Ethernet Facts Compares PROFINET (RT, IRT), POWERLINK, Ethernet/IP, EtherCAT, and SERCOS III, I.E

Luca Lachello Wratil Peter Anton Meindl Stefan Schönegger Singh Karunakaran Bhagath Huazhen Song Stéphane Potier

Preface
Outsiders are not alone in finding the world of Industrial Ethernet somewhat confusing. Experts who examine the matter are similarly puzzled by a broad and intransparent line-up of competing systems. Most manufacturers provide very little information of that rare sort that captures technical characteristics and specific functionalities of a certain standard in a way that is both comprehensive and easy to comprehend. Users will find themselves even more out of luck if they are seeking material that clearly compares major systems to facilitate an objective assessment.

We too have seen repeated inquiries asking for a general overview of the major systems and wondering “where the differences actually lie”. We have therefore decided to dedicate an issue of the Industrial Ethernet Facts to this very topic. In creating this, we have tried to remain as objective as a player in this market can be. Our roundup focuses on technical and economic as well as on strategic criteria, all of which are relevant for a consideration of the long-term viability of investments in Industrial Ethernet equipment. The arguments made in this publication were advanced and substantiated in numerous conversations and discussions with developers and decision-makers in this field. We have made every attempt to verify claims whenever practically possible.

Despite all our efforts, though, we were unable to ascertain exact, verifiable information on some aspects, which prompts us to ask for your help: if you would like to propose any amendments or corrections, please send us an e-mail or simply give us a call.

This document must not be modified without prior consent of its publisher. Passing on the document in its entirety

OPC Unified Architecture Interoperability for Industrie 4.0 and the Internet of Things

OPC Unified Architecture
Interoperability for Industrie 4.0 and the Internet of Things

Welcome to the OPC Foundation!

As the international standard for vertical and horizontal communication, OPC-UA provides semantic interoperability for the smart world of connected systems.
Thomas J. Burke, President and Executive Director, OPC Foundation

OPC Unified Architecture (OPC-UA) is the data exchange standard for safe, reliable, manufacturer- and platform-independent industrial communication. It enables data exchange between products from different manufacturers and across operating systems. The OPC-UA standard is based on specifications that were developed in close cooperation between manufacturers, users, research institutes and consortia, in order to enable safe information exchange in heterogeneous systems.

OPC has been very popular in the industry and also becoming more popular in other markets like the Internet of Things (IoT). With the introduction of Service-Oriented-Architecture (SOA) in industrial automation systems in 2007, OPC-UA started to offer a scalable, platform-independent solution which combines the benefits of web services and integrated security with a consistent data model.

OPC-UA is an IEC standard and therefore ideally suited for cooperation with other organizations. As a global non-profit organization, the OPC Foundation coordinates the further development of the OPC standard in collaboration with users, manufacturers and researchers. Activities include:
➞ Development and maintenance of specifications
➞ Certification and compliance tests of implementations
➞ Cooperation with other standards organizations

This brochure provides an overview of IoT, M2M (Machine to Machine) and Industrie 4.0 requirements and illustrates solutions, technical details and implementations based on OPC-UA. The broad approval among representatives from research, industry and associations indicates OPC-UA

Developing Deterministic Networking Technology for Railway Applications Using TTEthernet Software-Based End Systems

INDustrial EXploitation of the genesYS cross-domain architecture

Developing deterministic networking technology for railway applications using TTEthernet software-based end systems
Project n° 100021
Astrit Ademaj, TTTech Computertechnik AG

Outline
• GENESYS requirements - railway
• Time-triggered communication
• TTEthernet
• SW based implementation of the TTEthernet
• Conclusion

ARTEMISIA Association

GENESYS – GENeric Embedded SYStems
Instruction how to build your embedded systems architecture

GENESYS:
• is a reference architecture template providing specifications and requirements to design a cross domain embedded systems architecture.
• architecture style supports a composable, robust and comprehensible, component based framework with strict separation of computation from message based communication
• distinguishes between 3 integration levels:
  - Chip Level (IP cores communicate via a deterministic Network-on-a-Chip)
  - Device Level (Chips communicate within a device)
  - System Level (Devices communicate in an open or closed environment)

GENESYS and the railway domain
Safety-critical applications in the railway domain require
• deterministic communication networks
• robustness and
• composability
are key issues.

GENESYS
• architecture style supports a composable, robust and comprehensible,

Protocol Selection Table

Protocol Selection Table

Bender offers signal line protection devices for many different communication and signal protocols. Use the table below to identify which protectors to use for effective surge protection.

Selection table

| Protocol | Signal | Bender Surge protector |
|---|---|---|
| I/O | ±5 VDC, <250 kHz | NSL7v5-G NSLT1-7v5 |
| I/O | ±12 VDC, <250 kHz | NSL18-G NSLT1-18 |
| I/O | ±24 VDC, <250 kHz | NSL36-G NSLT1-36 |
| I/O | 0-20 mA / 4-20 mA | NSL420-G NSLT1-36 |
| I/O | RS-232 | NSL-DH |
| I/O | RS-422 | NSL485-EC90 (x2) |
| I/O | RS-452 | NSL485-EC90 (x2) |
| I/O | RS-485 | NSL485-EC90 |
| I/O | 1-Wire | NSL485-EC90 |
| 10/100/1000BaseT | Ethernet | NTP-RJ45-xCAT6 |
| AS-i | 32 VDC 1-pair | NSL36-G NSLT1-36 |
| BACnet | ARCNET / Ethernet / BACnet/IP | NTP-RJ45-xCAT6 |
| BACnet | RS-232 | NSL-DH |
| BACnet | RS-485 | NSL485-EC90 |
| BitBus | RS-485 | NSL485-EC90 |
| CAN Bus | (Signal) 5 VDC 1-Pair | NSL485-EC90 |
| C-Bus | 36 VDC 1-pair | NSSP6A-38 |
| CC-Link/LT/Safety | RS-485 | NSL485-EC90 |
| CC-Link IE Field | Ethernet | NTP-RJ45-xCAT6 |
| CCTV | Power over Ethernet | NTP-RJ45-xPoE |
| DALI | Digital Serial Interface | NSL36-G NSLT1-36 |
| Data Highway/Plus | RS-485 | NSL485-EC90 |
| DeviceNet | (Signal) 5 VDC 1-Pair | NSL7v5-G NSLT1-7v5 |
| DF1 | RS-232 | NSL-DH |
| DirectNET | RS-232 | NSL-DH |
| DirectNET | RS-485 | NSL485-EC90 |
| Dupline | (Signal) 5 VDC 1-Pair | NSL7v5-G NSLT1-7v5 |
| Dynalite | DyNet | NTP-RJ45-xCAT6 |
| EtherCAT | Ethernet | NTP-RJ45-xCAT6 |
| Ethernet Global Data | Ethernet | NTP-RJ45-xCAT6 |
| Ethernet Powerlink | Ethernet | NTP-RJ45-xCAT6 |
| FIP Bus | RS-485 | NSL485-EC90 |
| FINS | Ethernet | NTP-RJ45-xCAT6 |
| FINS | RS-232 | NSL-DH |
| FINS | DeviceNet (Signal) | NSL7v5-G NSLT1-7v5 |

FOUNDATION Fieldbus H1

The Field Guide for Industrial Ethernet FIELD GUIDE

The Field Guide for Industrial Ethernet
FIELD GUIDE
Published 8/28/2013

HISTORY OF ETHERNET
Robert Metcalfe and David Boggs invented Ethernet while working for Xerox PARC after being tasked to share a laser printer with a network of personal computers. By 1979 Robert Metcalfe convinced several companies that in order for Ethernet to be accepted worldwide they would need to create a standard. The group “DIX” was formed by Digital Equipment Corporation, Intel, and Xerox and the Ethernet from that period is often referred to as DIX. The first version of the standard was officially published on September 30, 1980 as “The Ethernet, A Local Area Network. Data Link Layer and Physical Layer Specifications.” [1] The next version was published a few years later as Version 2 and defines what is known today as Ethernet 2. Today, the group (known as the IEEE 802 LAN/MAN Standards Committee) maintains the 802 family of standards which continues to expand with the advancements

• Environment: Wiring, connectors and other Ethernet devices must be designed to tolerate harsh applications including exposure to oils, moisture, ozone, UV, welding, extreme temperatures and flexing.
• Noise: Unlike office environments, which normally run on 110 VAC, factories operate with a variety of AC and DC circuits including both high and low voltage. Industrial Ethernet is exposed to equipment running on all of these variations as well as equipment like motor drives, welders and robots. This results in increased RFI and EMI, which can decrease system performance.
• Vibration: Industrial Ethernet cable, wiring and devices are often mounted directly onto equipment. Machine vibration or movement can accelerate the rate of component wear causing decreased performance or permanent damage.