ROAM: a Rich Object Appearance Model with Application to Rotoscoping

Total Pages: 16

File Type: PDF, Size: 1020Kb


Recommended publications


Gestaltungspotenzial Von Digitalen Compositingsystemen

Gestaltungspotenzial von digitalen Compositingsystemen (Creative Potential of Digital Compositing Systems). Thorsten Wolf. Diploma thesis, winter semester 03/04. First supervisor: Prof. Martin Aichele. Second supervisor: Prof. Christian Fries. Fachhochschule Furtwangen, Fachbereich Digitale Medien (Department of Digital Media).

"Perhaps the greatest misunderstanding about photography is expressed in the words 'the camera does not lie'. Exactly the opposite is true. The vast majority of photos are 'lies' in the sense that they do not fully correspond to reality: they are two-dimensional depictions of three-dimensional objects, black-and-white images of a colorful reality, 'rigid' photos of moving objects. …" [Kan78], p. 54f

For Mama and Papa, of course.

Statutory declaration: I, Thorsten Wolf, hereby declare in lieu of oath that I prepared this diploma thesis independently and without impermissible outside help. All sources and aids used are cited. Furtwangen, 24 February 2004, Thorsten Wolf.

Preface: In my diploma thesis "Gestaltungspotenzial von digitalen Compositingsystemen" I examine the varied visual field of media informatics. This work aims to explore the creative potential of digital compositing. To that end I examine, in theory and in practice, the digital image-processing methods and possibilities for analog and digital image sources. My particular focus is on moving-image design with digital compositing systems. This work was produced in close cooperation with the company on line Video 46 AG, Zürich. I would especially like to thank Mr. Richard Rüegg, General Manager, for his support, and all the staff who assisted me with technical questions. I also thank Patrischa Freuler, Marian Kaiser, Marianne Klein, Jörg Volkmar and Tanja Wolf for their support during the thesis period.
For their good supervision I would like to thank my two tutors, Prof. Martin Aichele (first supervisor) and Prof.

Premier Trial Version Download Sign up for Adobe Premiere Free Trial with Zero Risk

Sign Up for Adobe Premiere Free Trial With Zero Risk

Adobe is a multinational computer software company based in San Jose, California. The firm is best known for its creativity and design software, although it has also moved into digital marketing software. Adobe owes its household-name status to widely known, industry-standard products such as Premiere Pro, Photoshop, Lightroom, Adobe Flash, and Adobe Acrobat Reader. There are now over 18 million subscribers to Adobe's Creative Cloud services around the world.

Adobe Premiere Pro, often simply referred to as Premiere, is Adobe's professional video editing software. The program is equipped with state-of-the-art creative tools and integrates easily with other apps and services. As a result, amateur and professional filmmakers and videographers are able to turn raw footage into seamless films and videos.

Main Things You Should Know About the Free Trial of Adobe Premiere

You can get a seven-day Adobe Premiere Pro free trial in two different ways. You can sign up for it as:

- An individual app: $20.99/month after the trial
- A part of the Creative Cloud suite: available from $52.99/month after the trial

Credit card information is required to start your Premiere free trial. As a side note, you can cancel your Adobe Creative Cloud membership or free trial in a few minutes with the help of DoNotPay.

How to Download the Adobe Premiere Free Trial

To start your seven-day free trial of Adobe Premiere Pro sold as an individual app, follow these steps:

1. Visit the Adobe catalog
2. Hit Start Free Trial under Adobe Premiere
3. Click the Start Free Trial button in the left column for Premiere only in the pop-up window
4. Enter your email address in the open field
5. Pick a plan from the drop-down menu on the right (the annual plan paid monthly is the cheapest and therefore the best bet)
6. Click on Continue to Payment
7. Sign in with your Adobe credentials or continue with your Apple, Google, or Facebook account
8. Enter your credit card details
9. Click on Start Free Trial to confirm

Product Note: VFX Film Making 2018-19 Course Code: OV-3108 Course Category: Career INDUSTRY

Product Note: VFX Film Making 2018-19. Course Code: OV-3108. Course Category: Career.

INDUSTRY

The Indian VFX industry grew from INR 2,320 crore in 2016 to INR 3,130 crore in 2017. The industry is expected to nearly double to INR 6,350 crore by 2020. From VFX design and pre-viz to the creation of a digital photo-realistic fantasy world as per the vision of a film director, visual effects have become an essential part of today's filmmaking process. The study of visual effects is a balance of both art and technology. You learn the art of VFX design as well as the latest VFX techniques using the state-of-the-art 3D and VFX software used in the industry. Source: FICCI-EY Media & Entertainment Report 2018

INDUSTRY TRENDS

India Becoming a Powerhouse in Global VFX Share market: India has evolved in terms of skillset, which has helped local and domestic studios work on global VFX projects. The VFX industry is expected to hire about 2,500-3,000 personnel in the coming year.

Visual Effects in Hollywood Films: Top international studios like MPC, Double Negative and Framestore, which have studios and film project partners in India, have worked on the VFX of top Hollywood blockbuster films: Blade Runner 2049, The Fast & the Furious 8, Pirates of the Caribbean: Dead Men Tell No Tales, The Jungle Book and many more.

Top Studios working on Global VFX: Technicolor India, MPC, TIPS VFX, Trace VFX, Prime Focus World, Double Negative, Legend 3D.

Visual Effects in Bollywood Films: BAHUBALI 2, The Game Changer for Bollywood VFX. Following the box office success of the blockbuster Bahubali 2, there is a spike in VFX budgets in Bollywood as well as regional films.

VFX Prime 2018-19 Course Code: OV-3103 Course Category : Career VFX INDUSTRY

Product Note: VFX Prime 2018-19. Course Code: OV-3103. Course Category: Career.

VFX INDUSTRY

The Indian VFX industry grew from INR 2,320 crore in 2016 to INR 3,130 crore in 2017. The industry is expected to nearly double to INR 6,350 crore by 2020. Where reality meets and blends with the imaginary, it is there that VFX begins. The demand for VFX has been rising relentlessly with the production of movies and television shows set in fantasy worlds with imaginary creatures like dragons, magical realms, extra-terrestrial planets and galaxies, and more. VFX can transform the ordinary into something extraordinary. Have you ever been fascinated by films like Transformers, Deadpool, Captain America, Spiderman, etc.? Then you must know that a number of visual effects are used in these films. Now the VFX industry is on the verge of changing with the introduction of new tools, new concepts, and ideas. Source: FICCI-EY Media & Entertainment Report 2018

INDUSTRY TRENDS

VFX for Television Episodic Series: SONY Television's show PORUS showcases state-of-the-art visual effects on television. Based on the tale of King Porus, who fought against Alexander the Great to stop him from invading India, the show is said to have been made on a budget of Rs 500 crore.

VFX-based Content for Digital Platforms like Amazon & Netflix: Popular web series like House of Cards, Game of Thrones, Suits, etc. on streaming platforms such as Netflix, Amazon Prime, Hotstar and many more are unlike any conventional television series. They are edgy and fresh, with high production values and state-of-the-art visual effects matched only by films, and are now a rage all over the world.

Avid DS - Your Future Is Now

DSWiki Table Of Contents

- 1998 DS SALES BROCHURE
- 2005 DS Wish List
- 2007 Unfiltered DS Wish List
- 2007 Wish Lists
- 2007DSWishListFinalistsRound2
- 2010 Wish List
- A
- About
- AchieveMoreWithThe3DDVE
- AmazonStore
- antler
- Arri Alexa
- Avid DS - Your Future Is Now
- Avid DS for Colorists
- B
- BetweenBlue&Green
- Blu-ray Copy
- C
- ColorItCorrected
- Commercial Specifications
- Custom MC Color Surface Layouts

Curriculum Vitae Biography Main Software

CURRICULUM VITAE

BIOGRAPHY: I’m an energetic and diligent Compositor with an eye for pixel perfection. During my last years, I have been working with high-end visual effects houses in London combining the experience of working in films, tv-shows and commercials. Working on these subfields gave me the ability to solve different types of shots proposing distinct solutions. I reshuffled my approaches and merged all the knowledge improving my workflow and technical proficiency in digital compositing. As an information-seeker, I am always eager to push my boundaries facing each project as a new challenge, opportunity to learn and self-development. Believing that quality is of utmost importance, my major goal is to

MAIN SOFTWARE: Nuke Studio, Nuke X

SECONDARY SOFTWARE: PF Track, After Effects, Photoshop, Silhouette FX, Mocha Pro, DaVinci Resolve

PROJECT MANAGEMENT SOFTWARE:

NANCE PATERNOSTER Digital Artist - Compositor - Instructor 503 • 621 • 1073

NANCE PATERNOSTER
Digital Artist - Compositor - Instructor
503 • 621 • 1073

OBJECTIVE:

- Working in a Creative Capacity for Apple Computer or Adobe Products
- Digital Compositing, Motion Graphics & Special Effects Animation
- Creating Digital Art & Animated Sequences
- Teaching in an Animation Department at an Art School

EDUCATION:

- MA 1993 - San Francisco State University. Masters in Computer & Film Animation/Video Disk Technology
- BFA 1984 - Syracuse University: Syracuse, New York. Art Media Program: Computer Graphics Major/College of Visual & Performing Arts (Film, Video, Photo. & Computer Graphics/Programming - Fine Art Emphasis)
- Additional Computer Graphics Training: Art Institute of Portland - Maya Courses; Academy of Art College 1994; Pratt Institute, N.Y., NY 1985; The School of Visual Art, NY 1984; N.Y. Institute of Technology 1984-1985; Pratt Brooklyn, 1985; Center for Electronics Arts, S.F., CA 1988-1989

COMPUTER GRAPHIC EQUIPMENT

- Platforms - Mac/SGI/PC
- Software - Flame, After Effects, Photoshop, Illustrator, Painter, Quark
- Some - Maya, Max, Lightwave, Soft Image
- Xaos Tools - Pandemonium, NTITLE
- Some - Avid, Final Cut, Premiere
- Amazon Paint 2D/3D, PIRANHA
- Unix, Dos, + Some C++, Pascal, Fortran, Javascript, HTML
- Additional - Photography, Stereo Photography. Analog and Digital Video editing, Film editing.

RELATED WORK:

- Art Institute of Portland - Adjunct Faculty VEMG/DFV Depts. - Present
- Pacific Northwest College of Art - Adjunct Faculty - Present
- Art Institutes Online - Adjunct Faculty - Present
- Art Institutes Online - Full Time Faculty - Animation Department - 2006-2007
- Art Institute of Portland - Full Time Faculty - Animation Department. Teaching Compositing, Motion Graphics, Special FX Animation, Advanced Image Manipulation, Digital Paint, Independent Study for Senior Animation Students, & Digital Portfolio. 2000 - 2005
- Art Institutes Online - Adjunct Faculty - Game Art & Design Department. Teaching Digital Ink & Paint Online, 2D Animation, Image Manipulation.

Toon Boom Harmony 12.1 Essentials

TOON BOOM HARMONY 12.1 - Essentials Edition - Compositing and Effects Guide

Legal Notices

Toon Boom Animation Inc., 4200 Saint-Laurent, Suite 1020, Montreal, Quebec, Canada H2W 2R2. Tel: +1 514 278 8666. Fax: +1 514 278 2666. toonboom.com

Disclaimer: The content of this guide is covered by a specific limited warranty and exclusions and limit of liability under the applicable License Agreement as supplemented by the special terms and conditions for Adobe® Flash® File Format (SWF). For details, refer to the License Agreement and to those special terms and conditions. The content of this guide is the property of Toon Boom Animation Inc. and is copyrighted. Any reproduction in whole or in part is strictly prohibited.

Trademarks: Harmony is a trademark of Toon Boom Animation Inc.

Publication Date: 2015-08-07

Copyright © 2015 Toon Boom Animation Inc. All rights reserved.

Contents

- Toon Boom Harmony 12.1 - Essentials Edition - Compositing and Effects Guide
- Contents
- Chapter 1: Compositing - An Introduction
- Chapter 2: Import
- Importing Bitmap Images
- Importing a Bitmap Image Without Vectorization
- Bitmap Image Quality
- Importing and Vectorizing Bitmap Images
- Importing PSD Files as Separate Layers
- Exporting a Layout
- Scanning Images
- Importing AI and PDF Files
- Importing SWF Files
- Redistributing Elements
- Importing QuickTime Movies
- Custom Vectorization Parameters
- Options Tab
- Help Tab
- File IO Options
- Registration Options
- Filtering Options
- Options for bitmap

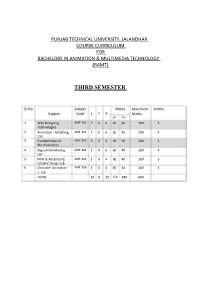

Third Semester

PUNJAB TECHNICAL UNIVERSITY, JALANDHAR
COURSE CURRICULUM FOR BACHELORS IN ANIMATION & MULTIMEDIA TECHNOLOGY (BAMT)
THIRD SEMESTER

| Sr. No | Subject | Subject Code | L | T | P | Int. Marks | Ext. Marks | Maximum Marks | Credits |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Web Designing Technologies | AMT-301 | 3 | 0 | 0 | 40 | 60 | 100 | 3 |
| 2 | Animation - Modeling Lab | AMT-302 | 1 | 0 | 6 | 60 | 40 | 100 | 4 |
| 3 | Fundamentals of Pre-Production | AMT-303 | 3 | 0 | 0 | 40 | 60 | 100 | 3 |
| 4 | Digital Film Making Lab | AMT-304 | 1 | 0 | 6 | 60 | 40 | 100 | 4 |
| 5 | Print & Advertising Graphic Design Lab | AMT-305 | 1 | 0 | 4 | 60 | 40 | 100 | 3 |
| 6 | Character Animation - 1 Lab | AMT-306 | 1 | 0 | 4 | 60 | 40 | 100 | 3 |
| | TOTAL | | 10 | 0 | 20 | 320 | 280 | 600 | |

SEMESTER - III

AMT-301 Web Designing Technologies. Internal: 40 Marks. External: 60 Marks. L-3 T-0 P-0

OBJECTIVE - The main objective of the subject is to impart a basic understanding of the methods and techniques of developing a web site.

1) Introduction to the Internet (5%): Modes of connecting to the internet, search optimization on the internet, applications of the internet, introduction to the World Wide Web (WWW).
2) Introduction to HTML (5%): HTML tags, HTML editor.
3) Tags, Attributes, Lists and Tables (15%): Structure tags (HTML, HEAD, TITLE, BODY), paired and unpaired tags, ordered list, unordered list, formatting lists, tables in HTML, formatting tables, cell spacing, cell padding.
4) Links and Images (15%): Making hyperlinks (anchor tag), images as links, adding images, aligning images, using images as links, using background images.
5) Cascading Style Sheets (5%): Style sheet design, using internal and external style sheets.
6) Creating a Basic Web Page (20%): Creating web pages using basic HTML tags.

Linux Mint - Part 2

Linux Mint - Part 2 - Updated 10.03.2017

Contents

1. In case you missed the previous episode…
2. Using Linux Mint day to day
   a) Overview of the default software suite
   b) How about a little testing?
   c) Windows and Linux: from one software practice to another
   d) Installing software on Linux
3. Still not convinced by Linux?
   a) One more argument: its price!
   b) Installing it on an old or a new machine, small or large
   c) Out of philosophy and/or curiosity
   d) To learn computing
4. Key takeaways

Sources

1. In case you missed the previous episode…

- What is Linux? > An operating system > The main operating systems > Distributions
- First steps with Linux Mint > Directory, folder or file? > The desktop > Window management > File management
- Installation > The "I'll have a taste!" method: the LiveUSB > The "I'll give it a try!" method: dual-boot > The "I'm going for it!" method: full installation
- Installation: The abbreviation LTS stands for Long Term Support.
- http://www.linuxliveusb.com

2. Using Linux Mint day to day

a) Overview of the default software suite: The Linux Mint ISO file is compressed and contains about 1.6 GB of data.

Open Source Yearbook 2016

OPEN SOURCE YEARBOOK 2016

OPENSOURCE.COM

Opensource.com publishes stories about creating, adopting, and sharing open source solutions. Visit Opensource.com to learn more about how the open source way is improving technologies, education, business, government, health, law, entertainment, humanitarian efforts, and more.

Submit a story idea: https://opensource.com/story
Email us: [email protected]
Chat with us in Freenode IRC: #opensource.com

AUTOGRAPHS

WRITE FOR US

7 big reasons to contribute to Opensource.com:

1. Career benefits: “I probably would not have gotten my most recent job if it had not been for my articles on Opensource.com.”
2. Raise awareness: “The platform and publicity that is available through Opensource.com is extremely valuable.”
3. Grow your network: “I met a lot of interesting people after that, boosted my blog stats immediately, and even got some business offers!”
4. Contribute back to open source communities: “Writing for Opensource.com has allowed me to give back to a community of users and developers from whom I have truly benefited for many years.”
5. Receive free, professional editing services: “The team helps me, through feedback, on improving my writing skills.”
6. We’re loveable: “I love the Opensource.com team. I have known some of them for years and they are good people.”
7. Writing for us is easy: “I couldn't have been more pleased with my writing experience.”

Email us to learn more or to share your feedback about writing for us: https://opensource.com/story

Visit our Participate page to learn more about joining in the Opensource.com community: https://opensource.com/participate

Find our editorial team, moderators, authors, and readers on Freenode IRC at #opensource.com: https://opensource.com/irc

Software Guide (PDF)

Software guide

Free software to help you develop your skills and create a portfolio for the film, TV, animation, VFX (visual effects) and games industries.

Animation

- Maya and 3ds Max: autodesk.com. freesoftware/
  Operating system: All
  Educational institutions can access a range of software for 3D modelling, animation and rendering. Free trial available.
- Synfig: synfig.org/
  Operating system: All
  Vector-based 2D animation suite.
- Three.Js: threejs.org/
  Operating system: Web-based
  Create animated 3D computer graphics on a web browser using HTML.
- Blender: blender.org/
  Operating system: All
  Easy-to-use software to create 3D models, environments and animated films. Can be used for VFX and games.
- Stop Motion Studio: cateater.com/
  Operating system: Windows, macOS, Android and iOS
  Stop-motion animation app with in-app purchases.

Games

- Twine: twinery.org/
  Operating system: All
  Easy-to-create interactive, story-based game engine. Add slides and embed media. Coding knowledge is not required.
- GameMaker: yoyogames.com/gamemaker
  Operating system: Windows and macOS
  Simple-to-use 2D game development engine. Coding knowledge is not required. Free trial available.
- Unreal Engine: unrealengine.com/en-US/what-is-unreal-engine-4
  Operating system: Web-based
  Advanced game engine to create 2D, 3D, mobile and VR games. Knowledge of programming is not required.
- Playcanvas: playcanvas.com
  Operating system: Web-based
  Simple-to-use 3D game engine using HTML5. Create apps faster using Google Docs-style realtime collaboration.
- Unity: unity3d.com/
  Operating system: Windows and macOS
  Easy-to-use game engine for importing 3D models, creating textures and building environments.
- Chatmapper: chatmapper.com/
  Operating system: Windows
  Software for writing non-linear dialogue, ideal for games.

Learn how to use your work to build a portfolio and get a job: screenskills.com/building-your-portfolio

Find a job profile that uses your skills: screenskills.com/job-profiles