User Interface Aspects of a Human-Hand Simulation System

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Microsoft Publisher Version 2007

Microsoft Publisher version 2007 Getting Started: Select a publication type from the left-hand panel. Your design choices will appear in the center panel. Page 1 of 8 © D. Rich, 2010 Single-click a design choice from the center panel. In the right-hand panel, you can edit the default color and font scheme if desired. Options will differ, depending on the chosen publication type. Then click the Create button in the lower right-hand corner. Publication Options: NOTE: available options depend on publication type. To close the Task Pane, click X. To reopen it at any time, choose View > Task Pane. Zoom: Use the drop-down menu or the + and - icons. Multi-page publications contain navigation buttons at the bottom of the screen. Working With Text. Editing Text: All text in Publisher is contained within text boxes. These text boxes, by default, contain “filler text.” You will need to delete the filler text and enter your own text. Formatting Text: All text in Publisher is contained within text boxes. You will need to click inside the text box to select the text you wish to edit. Edit and format as you would in Word, by using the Formatting toolbar. You can also choose Format > Font for more formatting options. Text Boxes: If text is too large for its text box, the text box overflow (A…) symbol appears. To enlarge a text box, grab and drag one of the white circles. To move a text box, hover your mouse on the edge of the box and the crosshairs icon will appear.

Using the Tools Palette in Collaborate (The Whiteboard)

Using the Tools Palette in Collaborate (The Whiteboard) The Tools Palette contains drawing and text tools that can be used to create and manipulate objects on the Whiteboard. It also has options to place a screenshot or clip art. The Tools Palette: Tools in the palette with a tabbed lower right corner have additional optional tools that can be swapped into their spot. Click and hold the tool to expand the options. Participants have access to the Whiteboard tools if they have been granted the Whiteboard tools permission. The Properties Editor: When you click on any of the text or drawing tools in the Tools Palette, the Properties Editor palette will appear at the bottom of the Whiteboard. From here you can format your text or graphic by specifying various properties. The contents of the Properties Editor palette change depending on which tool is being used or which objects are selected. Updated November 29, 2012 Inserting Text: The Whiteboard has two text tools. The Simple Text Tool creates text as an object directly on the canvas. The text can be manipulated just like a shape or image in a graphic design program. The Text Editor creates a bounding box for text, and the text inside can be edited as in a word processor or publishing program. To insert text onto the Whiteboard: 1. Click on the Text Editor tool. If the Text Editor tool is not in the Tools Palette, click and hold the Simple Text tool until it expands to reveal the Text Editor tool icon, then click on the Text Editor tool.

Using Microsoft Visual Studio to Create a Graphical User Interface ECE 480: Design Team 11

Using Microsoft Visual Studio to Create a Graphical User Interface ECE 480: Design Team 11 Application Note Joshua Folks April 3, 2015 Abstract: Software Application programming involves the concept of human-computer interaction and in this area of the program, a graphical user interface is very important. Visual widgets such as checkboxes and buttons are used to manipulate information to simulate interactions with the program. A well-designed GUI gives a flexible structure where the interface is independent from, but directly connected to the application functionality. This quality is directly proportional to the user friendliness of the application. This note will briefly explain how to properly create a Graphical User Interface (GUI) while ensuring that the user friendliness and the functionality of the application are maintained at a high standard. Table of Contents: Abstract; Introduction; Operation; Visual Studio Methods; Interface Types; Understanding Variables; Final Forms; Conclusion. Key Words: Interface, GUI, IDE Introduction: Establishing a connection between

MATLAB Creating Graphical User Interfaces COPYRIGHT 2000 - 2004 by the Mathworks, Inc

MATLAB® The Language of Technical Computing Creating Graphical User Interfaces Version 7 How to Contact The MathWorks: www.mathworks.com Web comp.soft-sys.matlab Newsgroup [email protected] Technical support [email protected] Product enhancement suggestions [email protected] Bug reports [email protected] Documentation error reports [email protected] Order status, license renewals, passcodes [email protected] Sales, pricing, and general information 508-647-7000 Phone 508-647-7001 Fax The MathWorks, Inc. Mail 3 Apple Hill Drive Natick, MA 01760-2098 For contact information about worldwide offices, see the MathWorks Web site. MATLAB Creating Graphical User Interfaces COPYRIGHT 2000 - 2004 by The MathWorks, Inc. The software described in this document is furnished under a license agreement. The software may be used or copied only under the terms of the license agreement. No part of this manual may be photocopied or reproduced in any form without prior written consent from The MathWorks, Inc. FEDERAL ACQUISITION: This provision applies to all acquisitions of the Program and Documentation by, for, or through the federal government of the United States. By accepting delivery of the Program or Documentation, the government hereby agrees that this software or documentation qualifies as commercial computer software or commercial computer software documentation as such terms are used or defined in FAR 12.212, DFARS Part 227.72, and DFARS 252.227-7014. Accordingly, the terms and conditions of this Agreement and only those rights specified in this Agreement, shall pertain to and govern the use, modification, reproduction, release, performance, display, and disclosure of the Program and Documentation by the federal government (or other entity acquiring for or through the federal government) and shall supersede any conflicting contractual terms or conditions.

Google Slides

Google Slides Google Slides is a web-based presentation program offered through Google Suites which allows users to create slideshow presentations remotely on most devices. Google Slides also allows groups of people to edit presentations simultaneously and automatically saves changes. In today’s class, you are going to learn how to use the basic functions in Google Slides. ***Only users with Gmail accounts can access Google Slides*** By the end of today’s lesson, you will be able to: access and create a Google Slides presentation; edit a presentation; present a Google Slides presentation; share a presentation; download a Google Slides presentation to a computer as a PowerPoint presentation and/or PDF; upload a PowerPoint presentation to Google Slides. Access and create a Google Slides presentation: 1. Log in to your Gmail account and click on the overflow icon in the top right-hand corner. A drop-down menu will appear. 2. Click on the Google Slides icon. 3. Once in Google Slides, click on the Blank presentation icon and the presentation page will appear. 4. Title the document by clicking “Untitled presentation” in the top left corner and typing in a new title. 5. Edit the document as you usually would a Microsoft PowerPoint presentation. 6. Slides automatically saves changes once you stop typing. When you are done, simply close the window or click on the Google Slides icon next to the document title. Edit a presentation: 1. Add New Slide / Delete a Slide. Add a new slide: click on the plus sign icon below the Google Slides icon. Delete a slide: in the preview panel on the left, choose the slide you would like to delete.

Graphical User Interface Design Document

EUROPEAN COMMISSION EUROPEAN MARITIME SAFETY AGENCY Cais Do Sodré 1249-206 Lisbon, Portugal SafeSeaNet Graphical User Interface Design Document NSW Prototype Document version: 1.78 Document release date: July 2015
Document Approval (name, date): Prepared by: E. Thanasopoulos, M. Ntirogianni, C. Trigonis, 03.07.2015. Checked by: A. Argyropoulos, 07.07.2015. Quality control by: N. Karioti, 07.07.2015. Approved by: G. Carayannis, 07.07.2015.
Distribution List (company, name): EMSA, Duchesne Philippe; EMSA, Abela Carmelo; Member States; SSN central system contractor.
Change control history (version, date, author, description):
0.10, 5 Aug 2013, Intrasoft International: First Draft submitted to internal QA for Review.
0.90, 12 Aug 2013, Intrasoft International: Submitted to EMSA for Review.
1.00, 05 Sep 2013, Intrasoft International: Incorporated EMSA review comments.
1.10, 18 Oct 2013, Intrasoft International: Defined the Consult Acknowledgement web pages and updated the Authority Information Exchange web pages.
1.20, 29 Nov 2013, Intrasoft International: Incorporated EMSA review comments.
1.30, 16 Dec 2013, Intrasoft International: Updated according to the SDD design review teleconference on 09/12/2013.
1.40, 18 Jan 2014, Intrasoft International: Updated to incorporate the additional functionalities for the NSW prototype based on the SC#07 evolutive maintenance task.
1.45, 17 Mar 2014, Intrasoft International: Updated to incorporate EMSA review comments.
1.50, 23 May 2014, Intrasoft International: Updated to incorporate design changes part of SC#09. Submitted to EMSA for review.
1.55, 05 Jun 2014, Intrasoft International: Updated to incorporate EMSA review comments.

Microsoft Word 2010

Microsoft Word 2010 Prepared by Computing Services at the Eastman School of Music – July 2010 Contents: Microsoft Office Interface; File Ribbon Tab; Microsoft Office Quick Access Toolbar; Appearance of Microsoft Word; Creating a New Document; Opening a Document; Saving a Document; Home Tab - Styling your Document; Font Formatting

Unit 2 Lesson 2.1-3

Unit 2: Designing User Interface-1. Introduction: In the previous lesson, we learned how to write simple Visual Basic code. In this lesson, we will learn how to work with some common controls and write code for them. Some of the commonly used controls are the label, text box, button, list box and combo box. However, in this lesson we shall deal only with the text box, the label, and buttons; we shall deal with other controls later. Lesson 2.1-3: Adding Basic Controls. Outcomes: Upon completion of this unit you will be able to: place a textbox control on the Form; place a label control on the Form; place a command button on the Form. TextBox Controls: The TextBox is the standard control for accepting input from the user as well as for displaying output. For this reason, text boxes tend to be the most frequently used controls in the majority of Windows applications. A TextBox can handle string (text) and numeric data but not images or pictures. A string in a TextBox can be converted to numeric data by using the function Val(text). In this section, we will discuss the most useful properties of TextBox controls. After you place a TextBox control on a form, you must set a few basic properties. The first thing I do as soon as I create a new TextBox control is clear its Text property. If this is a multiline field, I also set the MultiLine property to True. You can set the Alignment property of TextBox controls to left align, right align, or center the contents of the control.

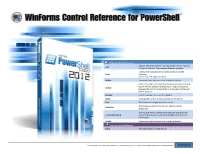

Text Sets the Text of the Control. This May Be the Title of a Form Or The

Important Shared Properties:
Text: Sets the text of the control. This may be the title of a form or the text of a button. Most controls share this property.
Dock: Defines which borders of the control are bound to the container. Values: Top, Left, Right, Fill, None
Visible: Determines whether the control is visible or hidden.
Anchor: Defines the edges of the container to which a certain control is bound. When a control is anchored to an edge, the distance between the control's closest edge and the specified edge will remain constant.
Enabled: Indicates whether the control is enabled.
Name: Indicates the name used in the script to identify the control.
Font: The font used to display text in the control.
ForeColor: The foreground color of the control, which is used to display text.
ContextMenuStrip: The shortcut menu to display when the user right-clicks the control. Requires the user to add a ContextMenuStrip control in the designer.
Important Shared Methods:
Focus: Sets the input focus to the control.
PowerShell Studio: Form Controls Quick Reference v1.0, SAPIEN Technologies, Inc. | Copyright © 2012 SAPIEN Technologies, Inc. All Rights Reserved
Button: Represents a Windows button control.
Important Properties:
Text: Sets the Button's Text (i.e. $textbox1.Text = "Name")
DialogResult: The dialog-box result produced in a modal form by clicking the button. Values: None, OK, Cancel, Abort, Retry, Ignore, Yes, No
Image: The image that will be displayed on the button.
Enabled: Indicates whether the button is enabled.
Important Events:
Click: Occurs when the button is 'clicked'
CheckBox: Represents a Windows CheckBox.

Quick Guide for Accessible Powerpoint Presentations

Quick Guide for Accessible PowerPoint Presentations
Accessible PowerPoint Presentations. Source: http://office.microsoft.com/en-001/powerpoint-help/creating-accessible-powerpoint-presentations-HA102013555.aspx?CTT=1
Use Built-In Layout and Styling Features: Using pre-defined layouts and templates will help ensure that your presentations have properly structured headings, lists, and proper reading order. To apply “true layout” to a slide or an existing slide: 1. Go to menu item: Home 2. In the Slides section, select the Layout button 3.
1. On the Home tab, in the Drawing group, click the Arrange menu. 2. Click Selection Pane. 3. Click the eye icon next to the text box to toggle its visibility.
Review Outline View: The outline pane shows the reading order and structure of the text in your presentation. • From Normal View, click on the outline tab to display the text outline for your presentation. Go through the outline to see if any information is missing.
Set a Logical Tab Order: To check the order in which your slide content
Add Alternative Text for Images and Graphics: Note: Alt text should be added for pictures, clip art, charts, tables, shapes, and SmartArt graphics. Add alt text by doing the following: 1. Right click the image or object, and then click Format. 2. Click Alt Text. 3. Type a description of the image or object into the Description text box. The image description should focus on the purpose and/or content of the image in the document. TIP: Use clear, but concise descriptions. For example, “a red Ferrari” tells the reader more about the image than “a

HIPE Owner's Guide 1

Build 15.0.3262 HIPE Owner's Guide 1. Welcome Welcome to HIPE, the Herschel Interactive Processing Environment! This guide contains everything you need to know about the main software tool for the analysis of Herschel data. Here is what the next pages have in store for you: • Section 2 – Installing HIPE and performing basic configuration. • Section 3 – Basic concepts about HIPE. • Section 4 – Fine-tuning every aspect of HIPE with the Preferences dialogue window. • Section 5 – The Help System and its browsing and search features. • Section 6 – Extending HIPE with plug-ins. • Section 7 – Managing memory. • Section 8 – Managing running jobs. • Section 9 – Opening and running scripts. • Section 10 – Running scripts outside HIPE. • Section 11 – Editing scripts and configuring the editor. • Section 12 – Managing log messages. • Section 13 – Saving and restoring variables and sessions. • Section 14 – Managing calibration sources. • Section 15 – Viewing data products and observations. • Section 16 – Giving feedback about HIPE. • Section 17 – HIPE views. • Section 18 – HIPE perspectives. • Section 19 – Customising views and perspectives. • Section 20 – Mouse click behaviours and keyboard shortcuts. • Section 21 – Managing monospaced fonts. • Section 22 – Comparison tables to translate the most used IDL commands into HIPE. There is much more to HIPE than is contained in this manual: • If you want to learn more about graphical tools to download, visualise and analyse your data, check the Data Analysis Guide. • If you want to learn the scripting language used in HIPE and master the command line interface, have a look at the Scripting Guide. • See the Welcome page of the HIPE Help System for links to our YouTube and Twitter channels.

Sci-Fi Button Worksheet a Taste of User Interface

Sci-Fi button worksheet: A taste of user interface (UI) design - creating a sci-fi button. Workshop developed by Aiden Le Santo
Objectives: • To understand the role of a UI designer • To design a prototype button that could be used for a sci-fi game • To see related areas of employment
Materials required: Adobe Photoshop and Illustrator. A free trial, if required, can be downloaded here
Introduction: UX/UI is the design of user interfaces (or menu systems) for machines and software, such as computers, home appliances, mobile devices, and other electronic devices, with the focus on making user friendly and contextually appropriate products. Sprung Studios are one of the world’s leading UI design studios who specialise in Video Games and Aiden has worked on projects such as Injustice 2, Call of Duty (War Zone and Modern Warfare), Fast and Furious Take Down and Trials Rising.
This task will begin in Adobe Illustrator to create a basic shape, before copying it into Adobe Photoshop to apply colour and effects.
1. Setting up the canvas • Open Adobe Illustrator, or an alternative Vector Graphics program like Inkscape or Affinity • Create a new canvas. Start with 1000 x 1000 pixels (px)
2. Basic tools in illustrator: These are a few of the key tools we will use throughout this first section: • The Selection Tool (V) for selecting objects on the canvas • Rectangle or Shape Tool (M) for drawing objects • Right click on this tool to choose different
Top tip: Instead of dragging to create the rectangle, you can also just click once anywhere on the canvas to set the size manually using this dialogue box.