Quality Performance Assessment: A Guide for Schools and Districts


Recommended publications


Issue Hero Villain Place Result Avengers Spotlight #26 Iron Man

| Issue | Hero | Villain | Place | Result |
| --- | --- | --- | --- | --- |
| Avengers Spotlight #26 | Iron Man, Hawkeye | Wizard, other villains | Vault | Breakout stopped, but some escape |
| New Mutants #86 | Rusty, Skids | Vulture, Tinkerer, Nitro | Albany | Everyone Arrested |
| Damage Control #1 | John, Gene, Bart, (Cap) | Wrecking Crew | Vault | Thunderball and Wrecker escape |
| Avengers #311 | Quasar, Peggy Carter, other Avengers employees | Doombots | Avengers Hydrobase | Hydrobase destroyed |
| Captain America #365 | Captain America | Namor (controlled by Controller) | Statue of Liberty | Namor defeated |
| Fantastic Four #334 | Fantastic Four | Constrictor, Beetle, Shocker | Baxter Building | FF victorious |
| Amazing Spider-Man #326 | Spiderman | Graviton | Daily Bugle | Graviton wins |
| Spectacular Spiderman #159 | Spiderman | Trapster | New York | Trapster defeated, Spidey gets cosmic powers |
| Wolverine #19 & 20 | Wolverine, La Bandera | Tiger Shark | Tierra Verde | Tiger Shark eaten by sharks |
| Cloak & Dagger #9 | Cloak, Dagger, Avengers | Jester, Fenris, Rock, Hydro-man | New York | Villains defeated |
| Web of Spiderman #59 | Spiderman, Puma | Titania | Daily Bugle | Titania defeated |
| Power Pack #53 | Power Pack | Typhoid Mary | NY apartment | Typhoid kills PP's dad, but they save him. |
| Incredible Hulk #363 | Hulk | Grey Gargoyle | Las Vegas | Grey Gargoyle defeated, but escapes |
| Moon Knight #8-9 | Moon Knight, Midnight, Punisher | Flag Smasher, Ultimatum | Brooklyn | Ultimatum defeated, Flag Smasher killed |
| Doctor Strange #11 | Doctor Strange | Hobgoblin | NY TV studio | Hobgoblin defeated |
| Doctor Strange #12 | Doctor Strange, Clea | Enchantress, Skurge | Empire State Building | Enchantress defeated |

Fantastic Four #335-336 Fantastic -

(“Spider-Man”) Cr

PRIVILEGED ATTORNEY-CLIENT COMMUNICATION EXECUTIVE SUMMARY SECOND AMENDED AND RESTATED LICENSE AGREEMENT (“SPIDER-MAN”) CREATIVE ISSUES This memo summarizes certain terms of the Second Amended and Restated License Agreement (“Spider-Man”) between SPE and Marvel, effective September 15, 2011 (the “Agreement”). 1. CHARACTERS AND OTHER CREATIVE ELEMENTS: a. Exclusive to SPE: The “Spider-Man” character, “Peter Parker” and essentially all existing and future alternate versions, iterations, and alter egos of the “Spider-Man” character. All fictional characters, places, structures, businesses, groups, or other entities or elements (collectively, “Creative Elements”) that are listed on the attached Schedule 6. All existing (as of 9/15/11) characters and other Creative Elements that are “Primarily Associated With” Spider-Man but were “Inadvertently Omitted” from Schedule 6. The Agreement contains detailed definitions of these terms, but they basically conform to common-sense meanings. If SPE and Marvel cannot agree as to whether a character or other creative element is Primarily Associated With Spider-Man and/or was Inadvertently Omitted, the matter will be determined by expedited arbitration. All newly created (after 9/15/11) characters and other Creative Elements that first appear in a work that is titled or branded with “Spider-Man” or in which “Spider-Man” is the main protagonist (but not including any team-up work featuring both Spider-Man and another major Marvel character that isn’t part of the Spider-Man Property). The origin story, secret identities, alter egos, powers, costumes, equipment, and other elements of, or associated with, Spider-Man and the other Creative Elements covered above. The story lines of individual Marvel comic books and other works in which Spider-Man or other characters granted to SPE appear, subject to Marvel confirming ownership. -

From “Destroying Angel” to “The Most Dangerous Woman in America”: A

University of Washington Tacoma UW Tacoma Digital Commons History Undergraduate Theses History Spring 6-2016 From “Destroying Angel” to “The Most Dangerous Woman in America”: A Study of Mary Mallon’s Depiction in Popular Culture Claire Sandoval-Peck [email protected] Follow this and additional works at: https://digitalcommons.tacoma.uw.edu/history_theses Part of the American Popular Culture Commons, Diseases Commons, Public Health Commons, Social History Commons, and the Women's History Commons Recommended Citation Sandoval-Peck, Claire, "From “Destroying Angel” to “The Most Dangerous Woman in America”: A Study of Mary Mallon’s Depiction in Popular Culture" (2016). History Undergraduate Theses. 25. https://digitalcommons.tacoma.uw.edu/history_theses/25 This Undergraduate Thesis is brought to you for free and open access by the History at UW Tacoma Digital Commons. It has been accepted for inclusion in History Undergraduate Theses by an authorized administrator of UW Tacoma Digital Commons. From “Destroying Angel” to “The Most Dangerous Woman in America”: A Study of Mary Mallon’s Depiction in Popular Culture A Senior Paper Presented in Partial Fulfillment of the Requirements for Graduation Undergraduate History Program of the University of Washington Tacoma by Claire Sandoval-Peck University of Washington Tacoma June 2016 Advisor: Dr. Libi Sundermann Acknowledgments This paper would not have been possible without the support of Diane, my parents and siblings, James in the TLC, Ari, and last but not least, Dr. Libi Sundermann. Thank you for all the encouragement, advice, proofreading, and tea. “Typhoid” Mary Mallon: “A special guest of the City of New York”1 Introduction: If you say the name Mary Mallon to someone today, there is a good chance they will not know who you are talking about. -

PDF Download Civil War: Wolverine (New Printing)

CIVIL WAR: WOLVERINE (NEW PRINTING) PDF, EPUB, EBOOK Marc Guggenheim | 168 pages | 31 Mar 2016 | Marvel Comics | 9780785195702 | English | New York, United States Civil War: Wolverine (new Printing) PDF Book Customer Reviews. Theme: What the Duck?! Sell one like this. However, Dr. Theme: Decomixado Number of Covers: Date:. The Fantastic Four return from a jaunt across time and space in a futile bid to save their homeworld! The cult of Carnage comes to New York City! Theme: Deadpool Number of Covers: Date:. Its people, never happier. Theme: Remastered Number of Covers: Date:. This amount is subject to change until you make payment. Typhoid Mary! In order to promote the release of the new Champions comic series, these variants paired members of the new team with those of the original Champions of Los Angeles. In anticipation of the movie X-Men: First Class, these wraparound covers showcase the evolution of the design of some of the most popular X-Men characters. Tags: Hulk, Punisher, Wolverine. This listing has ended. Despite this symbolic claim to Confederate space, the First Minnesota page quickly found itself surrounded by three pages of Confederate news as the Union printers in their haste also printed the already-set third page of the Conservator next to their own Union sheet. Tiger Shark! New: A brand-new, unused, unopened and undamaged item. Uncovering it will involve new friends Doctor Strange and the Wasp, and lethal new enemies like the Taskmaster! The most popular of these mash-ups occurred in the variant for Deadpool's Secret Secret Wars 2, merging Gwen with Deadpool into Gwenpool. -

Typhoid Mary and Social Distancing

Typhoid Mary and Social Distancing Typhoid Fever—a life-threatening disease caused by bacteria, not a virus—still impacts about 21.5 million people every year. It spreads, quickly, when living conditions are unclean. It is possible for a healthy person, who is not ill with typhoid, to be a carrier of the disease. Mary Mallon—an Irish-immigrant cook—was such a person. She became known as "Typhoid Mary." Quarantined even though she was not ill herself, Mary Mallon went to the sickhouse on March 27, 1915 (where she lived for most of her remaining life). The UK’s Science Museum, in London, tells us more about the woman who became infamous for spreading a disease which she had personally never experienced (although she was, without doubt, a carrier of it): Typhoid Mary was Mary Mallon, a healthy carrier of the disease who infected a number of people - at least three of whom died - in New York City. Once identified as the source of these outbreaks she eventually became demonized in the eyes of the public. Her nickname remains an all-purpose pejorative term for individuals - real or imagined - who wilfully spread disease. Born in County Tyrone, Ireland [one of the six counties of today's Northern Ireland], Mary emigrated to New York in her teens where, working as a household cook, she left a trail of illness wherever she was employed. After being first identified as a carrier in 1907, she was quarantined to the Riverside Hospital on New York’s North Brother Island [an island in New York's East River]. -

X-Men, Dragon Age, and Religion: Representations of Religion and the Religious in Comic Books, Video Games, and Their Related Media Lyndsey E

Georgia Southern University Digital Commons@Georgia Southern University Honors Program Theses 2015 X-Men, Dragon Age, and Religion: Representations of Religion and the Religious in Comic Books, Video Games, and Their Related Media Lyndsey E. Shelton Georgia Southern University Follow this and additional works at: https://digitalcommons.georgiasouthern.edu/honors-theses Part of the American Popular Culture Commons, International and Area Studies Commons, and the Religion Commons Recommended Citation Shelton, Lyndsey E., "X-Men, Dragon Age, and Religion: Representations of Religion and the Religious in Comic Books, Video Games, and Their Related Media" (2015). University Honors Program Theses. 146. https://digitalcommons.georgiasouthern.edu/honors-theses/146 This thesis (open access) is brought to you for free and open access by Digital Commons@Georgia Southern. It has been accepted for inclusion in University Honors Program Theses by an authorized administrator of Digital Commons@Georgia Southern. For more information, please contact [email protected]. X-Men, Dragon Age, and Religion: Representations of Religion and the Religious in Comic Books, Video Games, and Their Related Media An Honors Thesis submitted in partial fulfillment of the requirements for Honors in International Studies. By Lyndsey Erin Shelton Under the mentorship of Dr. Darin H. Van Tassell ABSTRACT It is a widely accepted notion that a child can only be called stupid for so long before they believe it, can only be treated in a particular way for so long before that is the only way that they know. Why is that notion never applied to how we treat, address, and present religion and the religious to children and young adults? In recent years, questions have been continuously brought up about how we portray violence, sexuality, gender, race, and many other issues in popular media directed towards young people, particularly video games. -

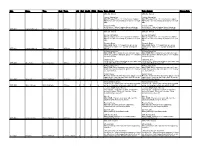

Current Change Date AFF-001 Captain Marvel Main Char

blac Name Type Cost Team Atk Def Health Flight Range Text - Original Text - Current Change Date AKA Ms. Marvel AKA Ms. Marvel Energy Absorption Energy Absorption Main [Energy]: Put a +1/+1 counter on Captain Main [Energy]: Put a +1/+1 counter on Captain Marvel for each other [ranged] character on your Marvel for each other [ranged] character on your side. side. Woman of War Woman of War Level Up (4) - When Captain Marvel stuns an Level Up (4) - When Captain Marvel stuns an AFF-001 Captain Marvel Main Character A-Force 2 4 6 x x enemy character in combat, she gains an XP. enemy character in combat, she gains an XP. AKA Ms. Marvel AKA Ms. Marvel Energy Absorption Energy Absorption Main [Energy]: Put a +1/+1 counter on Captain Main [Energy]: Put a +1/+1 counter on Captain Marvel for each other [ranged] character on your Marvel for each other [ranged] character on your side. side. Photonic Blast Photonic Blast Main [Skill]: Put a -1/-1 counter on an enemy Main [Skill]: Put a -1/-1 counter on an enemy character for each +1/+1 counter on Captain character for each +1/+1 counter on Captain AFF-002 Captain Marvel Main Character A-Force 4 6 6 x x Marvel. Marvel. A-Force Assemble! A-Force Assemble! Main [Skill]: When characters on your side team Main [Skill]: When characters on your side team attack the next time this turn, put a +1/+1 counter attack the next time this turn, put a +1/+1 counter on each of them. -

Marvel: Universe of Super Heroes

222 NORTH 20TH STREET, PHILADELPHIA, PA 19103 P 215.448.1200 www.fi.edu PUBLIC RELATIONS FOR IMMEDIATE RELEASE For more information, contact: Stefanie Santo [email protected] or 215.448.1152 THE FRANKLIN INSTITUTE ANNOUNCES EXCLUSIVE PREVIEW PARTY Marvel: Universe of Super Heroes RED CARPET EXPERIENCE ▪ TALKS WITH MARVEL COMICS CREATIVE TALENT MARVEL COSTUMED CHARACTERS ▪ LIVE AERIAL ARTIST PERFORMANCES EARLY EXHIBIT ACCESS PHILADELPHIA March 20, 2019—The Franklin Institute has announced plans for an exclusive preview party event to launch Marvel: Universe of Super Heroes, Friday, April 12 at 6 pm. The adults (21+) event features panel talks with creative talent from Marvel Comics including Editor-in-Chief C.B. Cebulski, a red-carpet photo opportunity with official Marvel costumed characters Spider-Man and Black Panther, live aerial dance performances, music by DJ Reddz, cash bar, and early access to the exhibition—all in a vibrant party atmosphere. The exclusive exhibit experience is designed for fans from all corners of the Marvel Universe, from comics to films, TV to games, in celebration of this year’s 80th Anniversary of Marvel and the East Coast premiere of Marvel: Universe of Super Heroes at The Franklin Institute. The highly-anticipated exhibit features more than 300 original artifacts, including some of Marvel’s most iconic costumes, props, and original art, and debuts at The Franklin Institute on Saturday, April 13. The Philadelphia stop brings a thoroughly reimagined layout, ambitious new installations, and never-before-seen content. Preview Party Event Highlight! Marvel Panel: Celebrating 80 Years: The Evolution of the Marvel Universe 7 PM and 8:30 PM Marvel’s Editor-in-Chief C.B. -

Uncarded Figure Keyword List

Uncarded Figure Keyword List
Infinity Challenge
Hypertime
Clobberin' Time
Xplosion
Indy
Cosmic Justice
Critical Mass
Universe
Unleashed
Ultimates
Mutant Mayhem
City of Heroes

Marvel Women: Femininity, Representation and Postfeminism in Films Based on Marvel Comics

Marvel Women: Femininity, Representation and Postfeminism in Films Based on Marvel Comics Miriam Kent Thesis submitted for the degree of Doctor of Philosophy in Film Studies (Research) School of Art, Media and American Studies University of East Anglia September 2016 This copy of the thesis has been supplied on condition that anyone who consults it is understood to recognise that its copyright rests with the author and that use of any information derived there from must be in accordance with current UK Copyright Law. In addition, any quotation or extract must include full attribution.
Contents
List of Images
Acknowledgements
Abstract
Introducing... The Mighty Women of Marvel!
Why Comics, Why Film? Adaptation and Beyond
The Role of Feminist Film Theory
We’re in This Together Now: Mediating “Womanhood” Through Postfeminist Culture
The Structure of the Thesis
Final Remarks

Checklist Card

COMMON
001. Anger Issues - Basic Action Card
002. Besmirch - Basic Action Card
003. Big Entrance - Basic Action Card
004. Counterstrike - Basic Action Card
005. Focus - Basic Action Card
006. Inspiring - Basic Action Card
007. Investigation - Basic Action Card
008. Nefarious Broadcast - Basic Action Card
009. Pizza - Basic Action Card
010. Poker Night - Basic Action Card
011. Power Surge - Basic Action Card
012. Rally! - Basic Action Card
013. Retribution - Basic Action Card
014. Surprise Attack - Basic Action Card
015. True Believer - Basic Action Card
016. Villainous Pact - Basic Action Card
017. Adam Warlock - Golden Gladiator
018. Angela - Demon Hunter
019. Black Dwarf - Powerhouse
020. Black Panther - Stealthy Adversary
021. Black Swan - The Cabal
022. Black Widow - Space Gem
023. Bullseye - Imposter Hawkeye
024. Captain Marvel - Captain Whiz Bang
025. Corvus Glaive - Madness
026.
029. Gamora - Genius Trainer
030. Ghost Rider - Hellfire
031. Groot - Highly Intelligent
032. Hela - Asgardian
033. Hulk - Immortal
034. Iron Lad - Fractured Time Stream
035. Iron Man - Ordinary PhDs
036. Kang - Council of Kangs
037. Loki - Powerful Magic
038. Moon Knight - Stronger than 10 Men
039. Ms. Marvel - NJ Native
040. Nebula - Sister to Gamora
041. Pip the Troll - In Search of Warlock
042. Proxima Midnight - The Black Order
043. Rocket Raccoon - I’m the Baddest!
044. Sandman - Frightful
045. She-Hulk - Greeny
046. Spider-Man - Back to Basics
047. Star-Lord - Legendary
048. Supergiant - Intangibility
049. Thanos - Courting Death
050. The Collector - Stolen Cosmic Cube
051. The Spot - Dr. Jonathan Ohnn
052. Thor - Scion of All Asgard
053. Tombstone - Eliminating the Competition
054. Turk Barrett - Small-Time Crook

Daredevil Epic Collection: a Touch of Typhoid Pdf, Epub, Ebook

DAREDEVIL EPIC COLLECTION: A TOUCH OF TYPHOID PDF, EPUB, EBOOK Ron Lim,John Romita,Ann Nocenti | 472 pages | 02 Feb 2016 | Marvel Comics | 9780785196884 | English | New York, United States Daredevil Epic Collection: A Touch of Typhoid PDF Book Powered by Wordpress. Under attack from both sides, the champion of Hell's Kitchen may be powerless to resist! Marvel rebooted many of its solo superhero titles in and , but none with as big a splash as Daredevil — who relaunched with filmmaker Kevin Smith at its helm for the first arc. You know the saying: There's no time like the present The last two, featuring Freedom Force and a devil, are less interesting because they're big fights, but they're still decently good. See Marvel Universe Events: Siege. Preview — Daredevil Epic Collection Vol. Great 80's vibe, buy it while it's out on the shelves! Email Gravatar. Doug Moench and Paul Gulacy's blend of kung fu action and globetrotting espionage reached beyond the already high standard for the title and pushed Contest of Champions II But that means Doctor Doom is alive too! Appreciate your reading orders soooooo much, as have over 35 years of Marvels to catch on. Whilce Portacio Illustrations. Juhani rated it it was amazing Oct 28, Omnibus editions are also listed below in their chronological placement, as they are frequently the only coverage of a specific run. She's absolutely completely really crazy. If it is related to the current page but both sides are persistent, it is useless to let it continue. Also, see Wolverine.