Global Journal of Computer Science and Technology

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Science & Technology Trends 2020-2040

Science & Technology Trends 2020-2040 Exploring the S&T Edge NATO Science & Technology Organization DISCLAIMER The research and analysis underlying this report and its conclusions were conducted by the NATO S&T Organization (STO) drawing upon the support of the Alliance’s defence S&T community, NATO Allied Command Transformation (ACT) and the NATO Communications and Information Agency (NCIA). This report does not represent the official opinion or position of NATO or individual governments, but provides considered advice to NATO and Nations’ leadership on significant S&T issues. D.F. Reding J. Eaton NATO Science & Technology Organization Office of the Chief Scientist NATO Headquarters B-1110 Brussels Belgium http:\www.sto.nato.int Distributed free of charge for informational purposes; hard copies may be obtained on request, subject to availability from the NATO Office of the Chief Scientist. The sale and reproduction of this report for commercial purposes is prohibited. Extracts may be used for bona fide educational and informational purposes subject to attribution to the NATO S&T Organization. Unless otherwise credited all non-original graphics are used under Creative Commons licensing (for original sources see https://commons.wikimedia.org and https://www.pxfuel.com/). All icon-based graphics are derived from Microsoft® Office and are used royalty-free. Copyright © NATO Science & Technology Organization, 2020 First published, March 2020 Foreword As the world changes, so does our Alliance. NATO adapts. We continue to work together as a community of like-minded nations, seeking to … Science & Technology Trends: 2020-2040 provides an assessment of the impact of S&T advances over the next 20 years on the Alliance. -

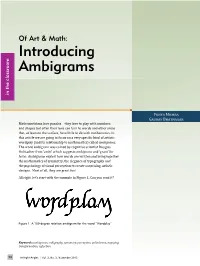

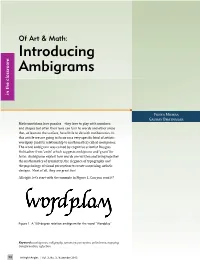

Introducing Ambigrams in the Classroom

Of Art & Math: Introducing Ambigrams in the classroom Punya Mishra, Gaurav Bhatnagar Mathematicians love puzzles; they love to play with numbers and shapes, but often their love can turn to words and other areas that, at least on the surface, have little to do with mathematics. In this article we are going to focus on a very specific kind of artistic wordplay (and its relationship to mathematics) called ambigrams. The word ambigram was coined by cognitive scientist Douglas Hofstadter from ‘ambi’ which suggests ambiguous and ‘gram’ for letter. Ambigrams exploit how words are written and bring together the mathematics of symmetry, the elegance of typography and the psychology of visual perception to create surprising, artistic designs. Most of all, they are great fun! All right, let’s start with the example in Figure 1. Can you read it? Figure 1. A 180-degree rotation ambigram for the word “Wordplay” Keywords: ambigrams, calligraphy, symmetry, perception, palindrome, mapping, transformation, reflection At Right Angles | Vol. 2, No. 3, November 2013 Rotating the page you are holding will reveal something interesting. The word stays the same! In other words, it has rotational symmetry. Thus ambigrams are a way of writing words such that they can be read or interpreted in more than one way. Figure 2 is another one, an ambigram for the word “ambigram.” Figure 3. The first of two ambigrams, for “ambigrams.” This design reads the same when rotated 180-degrees. … the natural “g-b” transformation that made so much sense in the design for “ambigram” has now shifted to a “b-a” transformation while “g” now maps onto itself. -

The Dream of Zobrist to Avoid the Growth Population

THE DREAM OF ZOBRIST TO AVOID THE GROWTH POPULATION A PAPER BY BULAN HANDAYANI REG. NO. 132202061 DIPLOMA III ENGLISH STUDY PROGRAM FACULTY OF CULTURE STUDY UNIVERSITY OF NORTH SUMATERA MEDAN 2017 UNIVERSITAS SUMATERA UTARA It has been approved by Supervisor, Drs. Siamir Marulafau, M.Hum NIP. 19580517198503 003 Submitted to Faculty of Cultural Study, University of Sumatera Utara in partial fulfillment of the requirements for Diploma-III in English Study Program Approved by Head of English Diploma Study Program, Dr. Matius C.A Sembiring, M.A NIP. 19521126198112 1 001 Approved by the Diploma-III of English Study Program Faculty of Cultural Study, University of Sumatera Utara as a Paper for the Diploma-III Examination Accepted by Board of Examiners in partial fulfillment of the requirements for the D-III Examination of the Diploma Study Program, Faculty of Cultural Study, University of Sumatera Utara The examination is held on January 2017 Faculty of Cultural Study, University of Sumatera Utara Dean, Dr. Budi Agustono, M.S Board of Examiners Signature 1. Dr. Matius C.A. Sembiring, M.A (Head of ESP) 2. Drs. Siamir Marulafau, M.Hum (Supervisor) 3. Dr. Matius C.A. Sembiring, M.A (Reader) AUTHOR’S DECLARATION I am BULAN HANDAYANI, declare that I am the sole author of this paper. Except where the reference is made in the text of this paper, this paper contains no material published elsewhere or extracted in whole or in part from a paper by which I have qualified for or awarded another degree. No other person’s work has been used without due acknowledgement in the main text of this paper. -

Challenge Your Students to Create and 3D Print an Intersecting Silhouette

TETe – INTERSECTING SILHOUETTES CHALLENGE YOUR STUDENTS TO CREATE AND 3D PRINT AN INTERSECTING SILHOUETTE BY JIM FLOWERS Technology and engineering students are often given challenges to design and create a prototype for a product that meets a particular need. However, in process engineering, there may be instances where students could instead be asked to refine a particular process and find applications for it. This article shares a basic procedure for creating a product that is the intersection of silhouettes, and it is especially appropriate for technology labs where students can use 3D design software and 3D printers. BACKGROUND In two-dimensional graphics, the term “ambigram” refers to “a word, art form, or other symbolic representation whose elements retain meaning when viewed or interpreted from a different direction, perspective, or orientation” (Wikipedia, n.d., Para. … meaning to a person who sees the object from a particular vantage point using the silhouette of the object. Silhouettes are filled outlines of an object without any internal distinguishing features. They have been used for millennia (Jackson, 1911), with applications in historiography, art, design, and signage. A silhouette can be cut out of paper, steel, or other flat sheets of material. As you walk around it to view it edge-on, the image disappears into a line. Now imagine a physical object where you see a silhouette by looking at the front of the object. But with this object, as you walk around it or rotate the object in your hand, a completely different silhouette appears, a 3D ambigram. Jonathan Borofsky’s 2004 sculpture at Penn Station in Baltimore, MD, Male/Female, … Intersecting silhouettes are sometimes greeted with -

Banksy. Urban Art in a Material World

Ulrich Blanché BANKSY Ulrich Blanché Banksy Urban Art in a Material World Translated from German by Rebekah Jonas and Ulrich Blanché Tectum Ulrich Blanché Banksy. Urban Art in a Material World Translated by Rebekah Jonas and Ulrich Blanché Proofread by Rebekah Jonas Tectum Verlag Marburg, 2016 ISBN 978-3-8288-6357-6 (Dieser Titel ist zugleich als gedrucktes Buch unter der ISBN 978-3-8288-3541-2 im Tectum Verlag erschienen.) Umschlagabbildung: Food Art made in 2008 by Prudence Emma Staite. Reprinted by kind permission of Nestlé and Prudence Emma Staite. Besuchen Sie uns im Internet www.tectum-verlag.de www.facebook.com/tectum.verlag Bibliografische Informationen der Deutschen Nationalbibliothek Die Deutsche Nationalbibliothek verzeichnet diese Publikation in der Deutschen Nationalbibliografie; detaillierte bibliografische Angaben sind im Internet über http://dnb.ddb.de abrufbar. Table of Content 1) Introduction 11 a) How Does Banksy Depict Consumerism? 11 b) How is the Term Consumer Culture Used in this Study? 15 c) Sources 17 2) Terms and Definitions 19 a) Consumerism and Consumption 19 i) The Term Consumption 19 ii) The Concept of Consumerism 20 b) Cultural Critique, Critique of Authority and Environmental Criticism 23 c) Consumer Society 23 i) Narrowing Down »Consumer Society« 24 ii) Emergence of Consumer Societies 25 d) Consumption and Religion 28 e) Consumption in Art History 31 i) Marcel Duchamp 32 ii) Andy Warhol 35 iii) Jeff Koons 39 f) Graffiti, Street Art, and Urban Art 43 i) Graffiti 43 ii) The Term Street Art 44 iii) Definition -

Devnagari-Latin Ambigrams

Typography in Publication Design Devnagari- Latin Ambigrams Pallavi Apte, Free-lance Graphic Designer, Mumbai, India, [email protected] Abstract: The core content of this paper is bi-lingual-bi-scriptural ambigrams in Devnagari and Latin scripts. Through these ambigram artworks and detailed demonstration of their creation process, the paper intends to establish ambigrams in the Indian scenario and thereby create overall awareness about the concept. Key words: Ambigram, Devnagari-Latin combination, bi-lingual, bi-scriptural, inversion, flip script, vertical palindrome. 1. Introduction The earliest known published reference to the term ‘Ambigram’ was by Douglas Hofstadter who describes an ambigram as "a calligraphic design that manages to squeeze two different readings into the selfsame set of curves." Thus an ambigram is a graphical figure that is rendered in a known script by modifying its letterforms in such a manner that it spells out one or more words not only as presented but also in another direction or orientation. Ambigrams are also referred to by names such as ‘Designatures’, ‘Inversions’, ‘Flipscript’, ‘Vertical Palindromes’ etc. The concept of ambigrams has been known for over a century now. But most of the popular ambigrams seen today are in the Latin script. Published instances of ambigrams in other scripts are minimal. This paper explores the area of ambigram creation in the Devnagari script as well as combination ambigrams of Devnagari and Latin. In these bi-scriptural combination ambigrams, the typographic unit is designed to spell out word/s in Devnagari from one orientation/point of view and in Latin from the other. It is essential to note here that these combination ambigrams are termed as ‘bi-scriptural' and not ‘bi-lingual’. -

Mathemagical Ambigrams

Mathemagical Ambigrams Burkard Polster* Abstract Ambigrams are calligraphic designs that have several interpretations as written words. In this concise introduction to ambigrams we focus on the mathematical aspects of ambigrams and ambigram design. 1. Introduction This essay has been written with a special audience in mind. You belong to this audience if you are, just like me and many other mathematicians, fascinated by Escher’s drawings, puzzles, wordplay, and illusions. If you belong to this category, then it is almost certain that you will also like ambigrams. Furthermore, you also will not need to be told why it is that ambigrams are fun, you almost certainly would appreciate to see your name and those of other persons and things close to your heart ambigrammed, and may even want to know how to construct ambigrams yourself. What we will concentrate on in the following is to summarise some basic information about ambigrams that will appeal especially to minds that are wired in a mathematical way. We do this by playing some typical games that mathematicians like to play. For example, for many of us mathematics is the study of symmetry in one form or another. Therefore, a mathematically minded ambigrammist will want to see everybody’s favourite mathematical terms turned into beautiful calligraphic designs displaying unusual geometric symmetries. Or what about constructing magic squares and other mathematical puzzles that incorporate an additional ambigrammatic dimension? Or, more generally, what about fusing ambigrams with other worlds of ambiguity and wordplay, by constructing appropriate ambigrammatic captions to Escher’s drawings, creating an ambigram of the word ambigram, or an ambigram of a palindrome? 2. -

PUNYA MISHRA, Ph.D. Associate Dean for Scholarship & Innovation

PUNYA MISHRA, Ph.D. Associate Dean for Scholarship & Innovation Professor, Educational Leadership & Innovation Affiliate Faculty, Mary Lou Fulton Teachers College Herberger Institute for Design and the Arts Arizona State University Contact Information: Farmer Education Building, Suite 402, 1050 S. Forest Mall, PO Box 871811, Tempe, AZ 85287-1811. Email: [email protected] Phone: 517.303.9567 Web: punyamishra.com Twitter: @punyamishra BIO Dr. Punya Mishra is Associate Dean of Scholarship & Innovation and Professor in the Division of Educational Leadership & Innovation in the Mary Lou Fulton Teachers College at Arizona State University. He also has an affiliate faculty position in the Herberger Institute for Design and the Arts. Prior to coming to ASU, Dr. Mishra was at Michigan State University where he directed the award-winning Master of Arts in Educational Technology program. In 2016 he received the William J. Beal Outstanding Faculty Award for his comprehensive and sustained record of scholarly excellence in research and creative activities, instruction and outreach. He has served on the Executive Council of the Society for Information Technology in Teacher Education. He started and served as chair of the Creativity Special Interest Group at the Society for Information Technology in Teacher Education for two years. He (with Dr. Matthew J. Koehler) co-chaired the SITE2011 conference and is former chair of the Innovation & Technology Committee of the American Association of Colleges of Teacher Education (AACTE). He was a member of the School Board for the Okemos Public School District. He has an undergraduate degree in Electrical & Electronics Engineering (from Birla Institute of Technology & Science, Pilani), Master's degrees in Visual Communication (from Industrial Design Center, IIT Mumbai), and Mass Communications (from Miami University, Oxford Ohio), and a Ph.D. -

Amazing Words, Lederer Puts on Yet Another Dazzling Display, Elucidating the Wondrous Stories and Secrets That Lie Hidden in the Language

“Is there anyone alive who has more fun with words than Richard Lederer? In dozens of books, from Crazy English to The Word Circus, he has continually entertained and amazed us with his boundless knowledge of and enthusiasm for all things linguistic. Now, in Amazing Words, Lederer puts on yet another dazzling display, elucidating the wondrous stories and secrets that lie hidden in the language. There’s an ooh! and an ah! and a hearty ha-ha-ha! on every page.” —Charles Harrington Elster, author of The Accidents of Style “Richard Lederer has outdone Richard Lederer. Amazing Words is clever, compelling, and totally captivating. This book is for anyone and everyone who loves the English language. It is one of a dozen books I would select to take along if I were exiled to a desert island.” —Paul Dickson, author of Words “This is not simply a book about Amazing Words, it is also an amazing book about words—and one that could have only been written by the inimitable Richard Lederer. Enjoy, word lovers, enjoy!” —Dr. Mardy Grothe, author of I Never Metaphor I Didn’t Like “Amazing Words is chock full of constant surprise. Each entry is a font of delight and knowledge. I had to keep reading because I unfailingly wanted to see how the next word would bedazzle, beguile, and bewitch me.” 2 —Caroline McCullagh, author of American Trivia “Every page instructs, delights, and entrances readers—often all at once.” —Rod L. Evans, author of Tyrannosaurus Lex “Words are wonderfully interesting, yes, but only Richard Lederer makes them amazing.” —Robert Hartwell Fiske, editor of The Vocabula Review and author of The Best Words “Richard Lederer’s Amazing Words is amazing indeed. -

Global Journal on Humanites & Social Sciences

Global Journal on Humanites & Social Sciences Vol 3 (2016) 397-409 Selected Paper of 4th World Conference on Design and Arts, (DAE-2015) 26-28 June 2015, St.Petersburg Christan University, St. Petersburg, Russia. Visual perception management in advertisements Seda Nur Atasoy*, Tortum Vocational High School, Design Department, Graphic Design Program, Atatürk University, Tortum, Erzurum 25430, Turkey. Suggested Citation: Atasoy, S., N. (2015). Visual perception management in advertisements, Global Journal on Humanites & Social Sciences. [Online]. 03, pp 397-404. Available from: http://sproc.org/ojs/index.php/pntsbs Received January 03, 2015; revised March 11, 2015; accepted May 06, 2015. Selection and peer review under responsibility of Prof. Dr. Milan Matijevic. ©2016 SciencePark Research, Organization & Counseling. All rights reserved. Abstract The subconscious includes all mental events that stay out of conscious perception and have no potential to access to the level of conscious. In our present day, the conscious is one of the objects where the advertisers often use it to make hidden inducements in order to influence the behaviors of the consumers and where the desire to buy a product is created, placed in it to best market and publicize their own trademarks and products. When the consumer wants to buy a product, it is necessary that the product for which advertisement is made in the mind emerge. And, this makes us question the necessity of the visual perception management in the human brain. In daily life, thousands of information, pictures and sounds go to the memory on a daily basis, and the human brain recalls them if only they are necessary. -

A Description of the Main Characters' Conflicts in Dan Brown's Novel Inferno

A DESCRIPTION OF THE MAIN CHARACTERS’ CONFLICTS IN DAN BROWN’S NOVEL INFERNO A PAPER BY: KHALIDA SABRINA BATUBARA REG.NO 152202031 DIPLOMA-III ENGLISH STUDY PROGRAM FACULTY OF CULTURAL STUDIES UNIVERSITY OF SUMATERA UTARA MEDAN 2018 UNIVERSITAS SUMATERA UTARA AUTHOR’S DECLARATION I am KHALIDA SABRINA BATUBARA, declare that I am the sole author of this paper. Except where reference is made in the text of this paper, this paper contains no material published elsewhere or extracted in whole or in part from a paper by which I have qualified for or been awarded another degree. No other person’s work has been used without due acknowledgement in the main text of this paper. This paper has not been submitted for the award of another degree in any tertiary education. Signed : Date : COPYRIGHT DECLARATION Name : KHALIDA SABRINA BATUBARA Title of Paper : A Description of The Main Characters’ Conflicts in Dan Brown’s Novel Inferno Qualification : D-III / Ahli Madya Study Program : English I am willing that my paper should be available for reproduction at the discretion of the Librarian of Diploma-III English Study Program Faculty of Cultural Studies USU on the understanding that users are made aware of their obligation under law of the Republic of Indonesia. Signed : Date : ABSTRACT The title of this paper is "A Description of the Main Characters' Conflicts in Dan Brown's Novel Inferno". This study aims to analyze the conflicts in the novel Inferno. The purpose of this study is to identify the conflicts experienced by the main character.