DV Technology for Video Computer Applications

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Sony Recorder

www.ctlny.com, page 24. All prices subject to change.

DVCAM, J-Series, Portable & Betacam Recorders

| Category | Model # | List | Sales price | Description |
| --- | --- | --- | --- | --- |
| DVCAM | DSR1500A | $7,245.00 | $5,680.08 | Editing recorder, also plays DVCPRO, SDI-YUV optional |
| DVCAM | DSR1600 | $6,975.00 | $5,468.40 | Edit Player w/ DVCPRO playback, RS-422 & DV Output |
| DVCAM | DSR1800 | $9,970.00 | $7,816.48 | Edit Recorder w/ DVCPRO playback, RS-422 & DV Output |
| DVCAM | DSR2000 | $15,750.00 | $13,229.44 | DVCAM/DVCPRO Recorder w/ Motion Control, SDI/RS-422 |
| DVCAM | DSR2000P | $1,770.00 | $14,868.0 | PAL DVCAM/DVCPRO |
| J-Series Betacam | J1/901 | $6,025.00 | $5,735.80 | Beta/SP/SX Player w/ Component Output |
| J-Series Betacam | J1/902 | $7,050.00 | $6,711.60 | Beta/SP/SX Editing Player w/ SDI Output |
| J-Series Betacam | J2/901 | $10,175.00 | $9,686.60 | IMX/SP/SX Editing Player w/ Component Output |
| J-Series Betacam | J2/902 | $11,400.00 | $10,852.80 | IMX/SP/SX Editing Player w/ SDI Output |
| J-Series Betacam | J3/901 | $12,400.00 | $11,804.8 | |
| SP Betacam | PVW2600 | $15,540.00 | $12,183.36 | Betacam SP Video Editing Player with TBC & TC |
| SP Betacam | PVW2650 | $22,089.00 | $17,317.78 | Betacam SP Editing Player w/ Dynamic Tracking, TBC & TC |
| SP Betacam | PVW2800 | $23,199.00 | $18,188.02 | Betacam SP Video Editing Recorder with TBC & TC |
| SP Betacam | UVW1200 | $6,634.00 | $5,572.56 | Betacam Player w/ RGB & Auto Repeat Function |
| SP Betacam | UVW1400A | $8,988.00 | $7,549.92 | |

Dimensions listed: 8-3/8 x 5-1/8 x 16-5/8; 16-7/8 x 7-5/8 x 19-3/8

DV-983H 1080P Up-Converting Universal DVD Player with VRS by Anchor Bay Video Processing and 7.1CH Audio

DV-983H 1080p Up-Converting Universal DVD Player with VRS by Anchor Bay Video Processing and 7.1CH Audio DV-983H is the new flagship model in OPPO's line of award-winning up-converting DVD players. Featuring Anchor Bay's leading video processing technologies, 7.1-channel audio, and 1080p HDMI up-conversion, the DV-983H Universal DVD Player delivers the breath-taking audio and video performance needed to make standard DVDs look their best on today's large screen, high resolution displays. The DV-983H provides a rich array of features for serious home theater enthusiasts. By applying source-adaptive, motion-adaptive, and edge-adaptive techniques, the DV-983H produces an outstanding image for any DVD, whether it’s mastered from an original theatrical release film or from a TV series. Aspect ratio conversion and multi-level zooming enable users to take full control of the viewing experience – maintain the original aspect ratio, stretch to full screen, or crop the unsightly black borders. Special stretch modes make it possible to utilize the full resolution of ultra high-end projectors with anamorphic lens. For users with an international taste, the frame rate conversion feature converts PAL movies for NTSC output without any loss of resolution or tearing. Custom home theater installers will find the DV-983H easy to integrate into whole-house control systems, thanks to its RS-232 and IR IN/OUT control ports. To complete the home theatre experience, the DV-983H produces stunning sound quality. Its 7.1 channel audio with Dolby Digital Surround EX decoding offers more depth, spacious ambience, and sound localization. -

Understanding Digital Video

Chapter 1: Understanding Digital Video

Are you ready to learn more about how digital video works? This chapter introduces you to the concept of digital video, the benefits of going digital, the different types of digital video cameras, the digital video workflow, and essential digital video terms.

What Is Digital Video?
Understanding the Benefits of Going Digital
Discover Digital Video Cameras
The Digital Video Workflow
Essential Digital Video Terms

What Is Digital Video?

Digital video is a relatively inexpensive, high-quality video format that utilizes a digital video signal rather than an analog video signal. Consumers and professionals use digital video to create video for the Web and mobile devices, and even to create feature-length movies.

Analog versus Digital Video

Analog video is variable data represented as electronic pulses. In digital video, the data is broken down into a binary format as a series of ones and zeros. A major weakness of analog recordings is that every time analog video is copied from tape to tape, some of the data is lost and the image is degraded, which is referred to as generation loss. Digital video is less susceptible to deterioration when copied.

Recording Media versus Format

The recording medium is essentially the physical device on which the digital video is recorded, like a tape or solid-state medium (a medium without moving parts, such as flash memory). The format refers to the way in which video and audio data is coded and organized on the media. Three popular examples of digital video formats are DV (Digital

Blu-Ray Disc™ HDD Recorder

Blu-ray Disc™ HDD Recorder: SR-HD1500 (500GB HDD), SR-HD1250 (250GB HDD)

Glossary

1080i: In a single high-definition image, 1080 (1125) alternating scan lines pass every 1/60th (NTSC) or 1/50th (PAL) of a second to create an interlace image. And because 1080i (1125i) more than doubles the current scan lines of 480i (525i) found on television broadcasts, it helps to ensure that details are much clearer, enabling the creation of more realistic and richer images.

HDMI (High-definition Multimedia Interface): Established in Dec. 2002, HDMI is an interface for digital electronic equipment that acts as the connection standard between PCs and displays. It transmits uncompressed HD digital audio and video signals on a single cable without distortion. The DVI interface was its predecessor, and HDMI has been enhanced for AV equipment by adding functions such as audio transmission capability, copy protection of digital content and other intellectual properties, as well as the ability to transfer color-variation information.

AVCHD (Advanced Video Codec High Definition): AVCHD is an acronym for Advanced Video Codec High Definition, and it is the format for HD camcorders used to record and playback high-definition video images. AVCHD uses the H.264/MPEG-4 AVC compression format for video to enable highly efficient encoding, the Dolby Digital (AC-3) format with LPCM option for audio, and MPEG-2-TS for multiplexing.

MPEG-2 (Moving Picture Experts Group 2): MPEG-2 is a standard for efficient data compression and color video expansion that is widely used for media such as DVDs and satellite-based digital broadcastings.

Digital Video Quality Handbook (May 2013

Digital Video Quality Handbook May 2013 This page intentionally left blank. Executive Summary Under the direction of the Department of Homeland Security (DHS) Science and Technology Directorate (S&T), First Responders Group (FRG), Office for Interoperability and Compatibility (OIC), the Johns Hopkins University Applied Physics Laboratory (JHU/APL), worked with the Security Industry Association (including Steve Surfaro) and members of the Video Quality in Public Safety (VQiPS) Working Group to develop the May 2013 Video Quality Handbook. This document provides voluntary guidance for providing levels of video quality in public safety applications for network video surveillance. Several video surveillance use cases are presented to help illustrate how to relate video component and system performance to the intended application of video surveillance, while meeting the basic requirements of federal, state, tribal and local government authorities. Characteristics of video surveillance equipment are described in terms of how they may influence the design of video surveillance systems. In order for the video surveillance system to meet the needs of the user, the technology provider must consider the following factors that impact video quality: 1) Device categories; 2) Component and system performance level; 3) Verification of intended use; 4) Component and system performance specification; and 5) Best fit and link to use case(s). An appendix is also provided that presents content related to topics not covered in the original document (especially information related to video standards) and to update the material as needed to reflect innovation and changes in the video environment. The emphasis is on the implications of digital video data being exchanged across networks with large numbers of components or participants. -

Digital Video Recording

Seven general principles for better video:

Always use a tripod, always.
Use good microphones and check your audio levels.
Be aware of lighting and seek more light.
Use the “rule of thirds” to frame your subject.
Make pan/tilt movements slowly and deliberately.
Don’t shoot directly against a wall.
Label your recordings.

Tripods

An inexpensive tripod is markedly better than shooting by hand. Use a tripod that is easy to level (look for ones with a leveling bubble) and use it for every shot.

Audio

Remember that this is an audiovisual medium! Use an external microphone when you are recording, not the built-in video recorder microphone. Studies have shown that viewers who watch video with poor audio quality will perceive the image as worse than it actually is, so good sound is crucial. The camera will automatically disable built-in microphones when you plug in an external one.

Microphones

There are several types of microphones to choose from:

Omnidirectional
Unidirectional
Lavalier
Shotgun
Handheld

In choosing microphones you will need to decide whether to use a wired or a wireless system. Hardwired systems are inexpensive and dependable. Just plug one end of the cord into the microphone and the other into the recording device.

Limitations of wired systems:

Dragging the cord around on the floor causes it to accumulate dirt, which could result in equipment damage or signal distortion.
Hiding the wiring when recording can be problematic.
You may pick up a low hum if you inadvertently run audio wiring in a parallel path with electrical wiring.

Model DV6600 User Guide Super Audio CD/DVD Player

Model DV6600 User Guide Super Audio CD/DVD Player

CLASS 1 LASER PRODUCT

PRECAUTIONS

ENGLISH

WARRANTY
For warranty information, contact your local Marantz distributor.

RETAIN YOUR PURCHASE RECEIPT
Your purchase receipt is your permanent record of a valuable purchase. It should be kept in a safe place to be referred to as necessary for insurance purposes or when corresponding with Marantz.

IMPORTANT
When seeking warranty service, it is the responsibility of the consumer to establish proof and date of purchase. Your purchase receipt or invoice is adequate for such proof.

FOR U.K. ONLY
This undertaking is in addition to a consumer's statutory rights and does not affect those rights in any way.

ESPAÑOL

GARANTIA
Para obtener información acerca de la garantia póngase en contacto con su distribuidor Marantz.

GUARDE SU RECIBO DE COMPRA
Su recibo de compra es su prueba permanente de haber adquirido un aparato de valor, Este recibo deberá guardarlo en un lugar seguro y utilizarlo como referencia cuando tenga que hacer uso del seguro o se ponga en contacto con Marantz.

IMPORTANTE
Cuando solicite el servicio otorgado por la garantia el usuario tiene la responsabilidad de demonstrar cuándo efectuó la compra. En este caso, su recibo de compra será la prueba apropiada.

ITALIANO

GARANZIA
L’apparecchio è coperto da una garanzia di buon funzionamento della durata di un anno, o del periodo previsto dalla legge, a partire dalla data di acquisto comprovata da un

FRANÇAIS

GARANTIE
Pour des informations sur la garantie, contacter le distributeur local Marantz.

Hd-A3ku Hd-A3kc

HD DVD player HD-A3KU HD-A3KC Owner’s manual

In the spaces provided below, record the Model and Serial No. located on the rear panel of your player. Model No. Serial No. Retain this information for future reference.

SAFETY PRECAUTIONS

CAUTION: RISK OF ELECTRIC SHOCK. DO NOT OPEN.

ATTENTION: RISQUE DE CHOC ELECTRIQUE. NE PAS OUVRIR.

WARNING: TO REDUCE THE RISK OF ELECTRIC SHOCK, DO NOT REMOVE COVER (OR BACK). NO USER-SERVICEABLE PARTS INSIDE. REFER SERVICING TO QUALIFIED SERVICE PERSONNEL.

The lightning flash with arrowhead symbol, within an equilateral triangle, is intended to alert the user to the presence of uninsulated dangerous voltage within the product's enclosure that may be of sufficient magnitude to constitute a risk of electric shock to persons.

The exclamation point within an equilateral triangle is intended to alert the user to the presence of important operating and maintenance (servicing) instructions in the literature accompanying the appliance.

WARNING: TO REDUCE THE RISK OF FIRE OR ELECTRIC SHOCK, DO NOT EXPOSE THIS APPLIANCE TO RAIN OR MOISTURE. DANGEROUS HIGH VOLTAGES ARE PRESENT INSIDE THE ENCLOSURE. DO NOT OPEN THE CABINET. REFER SERVICING TO QUALIFIED PERSONNEL ONLY.

CAUTION: TO PREVENT ELECTRIC SHOCK, MATCH WIDE BLADE OF PLUG TO WIDE SLOT, FULLY INSERT.

ATTENTION: POUR ÉVITER LES CHOCS ÉLECTRIQUES, INTRODUIRE LA LAME LA PLUS LARGE DE LA FICHE DANS LA BORNE CORRESPONDANTE DE LA PRISE ET POUSSER JUSQU’AU FOND.

CAUTION: This HD DVD player employs a Laser System. To ensure proper use of this product, please read this owner’s manual carefully and retain for future reference.

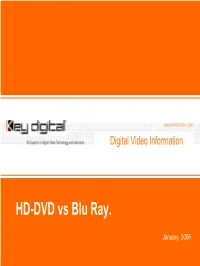

CEDIA 2005 Key Digital Press Conference & Training Presentation

Blu Ray vs HD-DVD. Digital Video Information, January 2006.

For the last three years, major consumer electronics companies, together with Hollywood movie content providers, software developers, and game platform developers, have been competing to develop a new movie/game/software disk format that will replace the current DVD. Unfortunately, the two competing camps, Blu Ray and HD-DVD, do not have any plans to merge yet and are going full steam to market with players and content. We at Key Digital® would like to inform our customers so that they can prepare for this major new disk format.

Blu Ray vs HD-DVD Comparison

| Parameters | Blu Ray | HD-DVD | Comments |
| --- | --- | --- | --- |
| Disk capacity | Single layer disc – 25 GB | Single layer disc – 20 GB | Both may support up to 54GB |
| Video compression | MPEG2 | H264, VC-1, MPEG2 | H264 and VC-1 are next generation compression and use a higher compression ratio than MPEG2 |
| Audio formats | All Digital Audio formats | All Digital Audio formats | |
| Play time | Two hours+ HDTV | Two hours+ HDTV | |
| AV quality | Very high | Very high | |
| Price suggested at CES2006 | $1,800 | $499 | Price suggestions at this stage are very preliminary |
| Output video interface for 1080i/720p HDTV | HDMI only | HDMI only | Hard commitment on HDMI only output is identical from both camps |
| 1080p output capability | Yes | Yes | Both formats committed to allow 1080p output format for HDMI interface |
| Output video interface for 480i/480p | Component | Component | Component output may not be available altogether |
| Game platform support | Play Station | XBOX | |
| Rollout schedule | Similar to HD-DVD, full production 3Q/2006 | Starting March 2006 x10,000/month, full production 3Q/2006 | Quantities to manufacture are very preliminary |
| Content availability | Major Hollywood committed | Major Hollywood and Microsoft committed | Content providers' alliances will shift as the format winner becomes apparent. |

DVE) Competency Requirements

Certified Digital Video Editor (DVE) Competency Requirements

This Competency listing serves to identify the major knowledge, skills, and standards areas which a certified Digital Video Editor needs in order to perform the professional tasks associated with the development of electronic digital videos for digital technology. Digital Video Editors must be knowledgeable in the following technical areas:

1.0 Screen Format
1.1 Explain Aspect Ratios in detail including:
1.1.1 the differences between the following:
1.1.1.1 Widescreen Aspect ratio, 16:9
1.1.1.2 Standard Aspect ratio, 4:3
1.2 Explain the use of letterbox technique
1.3 Describe the ‘Scan and Span’ technique use
1.4 Explain the screen formatting’s ‘safe zone’
1.5 Describe the ‘split screen’ and its usage
1.6 Identify how Picture in Picture (PIP) is used in video editing
1.7 Explain ‘Overscan’ and associated terms:
1.7.1 Title Safe
1.7.2 Action Safe
1.7.3 Underscan

2.0 Video Fundamentals
2.1 Explain the following color details:
2.1.1 Luminance
2.1.2 Chrominance
2.1.3 RGB
2.1.4 YUV
2.2 Describe the significance of Frame and Frame Rates per second in digital video editing
2.3 Explain in detail progressive scanning or noninterlaced scanning
2.4 Explain the following video terminology:
2.4.1 Master
2.4.2 Talking Head
2.4.3 Freeze Frame
2.4.4 Dub
2.4.5 Explain video techniques such as:
2.4.5.1 Pan
2.4.5.2 Tilt
2.4.5.3 Roll
2.4.6 Identify the differences between the following video transitions:
2.4.6.1 Fades
2.4.6.2 Wipes
2.4.7 Explain the differences between “open caption” and “closed

From Hard Drive to Optimized Video File

March 10 2003. Strategies and Techniques for Designers, Developers, and Managers of eLearning

THIS WEEK: DEVELOPMENT TECHNIQUES

Repurposing Taped Video for e-Learning, Part 2: From Hard Drive to Optimized Video File

BY STEPHEN HASKIN

Video is an increasingly important part of e-Learning and there are many ways to deliver it to the learner’s desktop. With the right tools, making use of these delivery options is easy. This week, learn how to move digitized video to the Web, to CD-ROM,

In Part 1 of this two-part series, we learned how to move video content from digital or analog tape onto a hard drive. This is half the job of repurposing taped video. This week, we’ll learn how to use Adobe Premiere to render digitized video to other media: the Web, CD-ROM, and DVD.

You’ve got the tape. You’ve got the computer. You’ve grabbed the video. Now it’s in the computer and you have to do something with it. A file in your computer is nice, but it’s not going to get any training done. So what do you do?

Setting up projects in Premiere

Let’s start with the video you just grabbed.

as the video if you want, or give it another name. Remember, the schema for naming files is yours and I can’t know what kinds of file names your organization uses, so whatever you call the Premiere project is OK. Last week, I didn’t explain what happens

HDV Workflow

Whitepaper. Author: Jon Thorn, Field Systems Engineer, AJA Video Systems, Inc.

HDV Workflow: JVC HDV and Sony HDV Workflow Using the AJA KonaLH or KonaLHe with Final Cut Pro 5

Introduction

With the introduction of the HDV format, a broader era of HD acquisition has grown from the portable camcorder sized devices that could record compressed HD on inexpensive MiniDV tapes. However, akin to the beginning of the DV era in the late 1990s, this format is still early in its development and deployment. Though support for the format has grown quickly by comparison to its digital forerunner, DV, the format’s very structure has proven to be somewhat problematic in a conventional post-production workflow.

The Format

What makes HDV such an amazing format is that it manages to capture HD images via MPEG2 compression and allow for recording the signal to a MiniDV tape (thankfully a tape format already ubiquitous in the marketplace among all major camera manufacturers and tape stock providers). This MPEG2 compression is similar to a DVD (although DVD is a program stream vs. HDV’s transport stream and HDV uses a constant bit rate whereas DVDs use variable bit rates). The issue for post production is that the HDV transport stream is based around a long-GOP structure (group of pictures) which produces images based on information over a section of time, via I, P and B frames: intraframes, predicted frames and bi-directional frames. Formats that do not use this scheme treat frames as individual units, as in the progressive formats where a frame truly is a frame, or as interlaced frames where two fields create the image.
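
The long-GOP dependency described in this excerpt can be made concrete with a small sketch. It is only an illustration under simplifying assumptions, not how any real decoder or editing application is implemented: the GOP is written in display order as a string of `I`, `P` and `B` letters, each P frame is assumed to depend on the previous I or P frame, each B frame on the nearest I or P frame on either side, and references into a neighbouring GOP are ignored. The function name `frames_needed` and the 15-frame pattern are hypothetical choices made for the example.

```python
from typing import List, Set


def frames_needed(gop: str, target: int) -> Set[int]:
    """Return the display-order indices that must be decoded to show gop[target].

    Simplified model (an assumption of this sketch): P frames reference the
    previous I/P frame, B frames reference the nearest I/P frame on each side,
    and nothing references frames outside this GOP.
    """
    if not gop or gop[0] != "I":
        raise ValueError("this sketch assumes the GOP starts with an I frame")

    # Anchor frames are the ones other frames may reference.
    anchors: List[int] = [i for i, kind in enumerate(gop) if kind in "IP"]
    needed: Set[int] = {target}

    def add_anchor_chain(index: int) -> None:
        # Walk back from an anchor through earlier anchors until an I frame.
        pos = anchors.index(index)
        while True:
            needed.add(anchors[pos])
            if gop[anchors[pos]] == "I":
                break
            pos -= 1

    if gop[target] in "IP":
        add_anchor_chain(target)
    else:
        # B frame: needs the anchor before it and, if present, the one after.
        add_anchor_chain(max(a for a in anchors if a < target))
        later = [a for a in anchors if a > target]
        if later:
            add_anchor_chain(later[0])
    return needed


# A B frame in the middle of a long GOP pulls in several other frames.
print(sorted(frames_needed("IBBPBBPBBPBBPBB", 7)))  # [0, 3, 6, 7, 9]
# In an intra-only stream every frame stands alone.
print(sorted(frames_needed("IIII", 2)))  # [2]
```

Under this model, cutting at frame 7 of the long-GOP pattern requires decoding five frames, while the intra-only stream needs just the one, which is the contrast the excerpt draws between long-GOP formats and formats that treat frames as individual units.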