Digital Video Quality Handbook (May 2013)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

881/882 Video Test Instrument User Guide

881/882 Video Test Instrument, User Guide, Revision A.35 (9/23/10). Copyright 2010 Quantum Data. All rights reserved. The information in this document is provided for use by our customers and may not be incorporated into other products or publications without the expressed written consent of Quantum Data. Quantum Data reserves the right to make changes to its products to improve performance, reliability, producibility, and (or) marketability. Information furnished by Quantum Data is believed to be accurate and reliable. However, no responsibility is assumed by Quantum Data for its use. Updates to this manual are available at http://www.quantumdata.com/support/downloads/.

Table of Contents

Chapter 1 Getting Started: Introduction; 882D features; Video interfaces; Computer interfaces; Front panel interface; Status indicators; Menu selection keys; 882 file system and media; 882 file system; 882 media; 882 operational modes; Booting up the 882; Basic mode; Browse mode; Web interface; Working with the Virtual Front Panel; Working with the CMD (Command) Terminal; Working with the 882 FTP Browser; Copying files between 882s; Command line interface; Working with the serial interface; Working with the network interface; Sending commands interactively; Sending command files (serial interface only); Working with user profiles

Chapter 2 Testing Video Displays: General video display testing procedures; Making physical connection; Selecting interface type; Selecting video format

VC-1 Compressed Video Bitstream Format and Decoding Process

SMPTE 421M-2006, SMPTE STANDARD: VC-1 Compressed Video Bitstream Format and Decoding Process

Intellectual property notice

Copyright 2003-2006 THE SOCIETY OF MOTION PICTURE AND TELEVISION ENGINEERS, 3 Barker Ave., White Plains, NY 10601. +1 914 761 1100, Fax +1 914 761-3115. Web http://www.smpte.org

The user’s attention is called to the possibility that compliance with this document may require use of inventions covered by patent rights. By publication of this document, no position is taken with respect to the validity of these claims or of any patent rights in connection therewith. The patent holders have, however, filed statements of willingness to grant a license under these rights on fair, reasonable and nondiscriminatory terms and conditions to applicants desiring to obtain such a license. Contact information may be obtained from the SMPTE. No representation or warranty is made or implied that these are the only licenses that may be required to avoid infringement in the use of this document.

© SMPTE 2003-2006 – All rights reserved. Approved 24-February-2006.

Foreword

SMPTE (the Society of Motion Picture and Television Engineers) is an internationally-recognized standards developing organization. Headquartered and incorporated in the United States of America, SMPTE has members in over 80 countries on six continents. SMPTE’s Engineering Documents, including Standards, Recommended Practices and Engineering Guidelines, are prepared by SMPTE’s Technology Committees. Participation in these Committees is open to all with a bona fide interest in their work. SMPTE cooperates closely with other standards-developing organizations, including ISO, IEC and ITU.

Understanding Digital Video

Chapter 1: Understanding Digital Video

Are you ready to learn more about how digital video works? This chapter introduces you to the concept of digital video, the benefits of going digital, the different types of digital video cameras, the digital video workflow, and essential digital video terms.

Contents: What Is Digital Video?; Understanding the Benefits of Going Digital; Discover Digital Video Cameras; The Digital Video Workflow; Essential Digital Video Terms

What Is Digital Video?

Digital video is a relatively inexpensive, high-quality video format that utilizes a digital video signal rather than an analog video signal. Consumers and professionals use digital video to create video for the Web and mobile devices, and even to create feature-length movies.

Analog versus Digital Video

Analog video is variable data represented as electronic pulses. In digital video, the data is broken down into a binary format as a series of ones and zeros. A major weakness of analog recordings is that every time analog video is copied from tape to tape, some of the data is lost and the image is degraded, which is referred to as generation loss. Digital video is less susceptible to deterioration when copied.

Recording Media versus Format

The recording medium is essentially the physical device on which the digital video is recorded, like a tape or solid-state medium (a medium without moving parts, such as flash memory). The format refers to the way in which video and audio data is coded and organized on the media. Three popular examples of digital video formats are DV (Digital

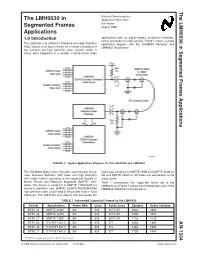

Application Note 1334: The LMH0030 in Segmented Frames Applications

The LMH0030 in Segmented Frames Applications. National Semiconductor Application Note 1334 (AN-1334), Kai Peters, August 2006.

1.0 Introduction

The LMH0030 and LMH0031 Standard and High Definition Video chipset is an ideal solution for a variety of products in the standard and high definition video systems realm. It allows easy integration in a number of professional video applications such as digital routers, production switchers, format converters or video servers. Figure 1 shows a typical application diagram with the LMH0030 Serializer and LMH0031 Deserializer.

FIGURE 1. Typical Application Diagram for the LMH0030 and LMH0031

The LMH0030 Digital Video Serializer automatically recognizes Standard Definition (SD) video and High Definition (HD) video formats according to the respective Society of Motion Picture and Television Engineers (SMPTE) standards. The device is compliant to SMPTE 125M/267M for standard definition and SMPTE 260M/274M/295M/296M high definition video as provided to the parallel 10bit or 20bit interfaces. The LMH0030 auto-detects and processes the video data compliant to SMPTE 259M and SMPTE 344M for SD and SMPTE 292M for HD Video and serialization to the output ports.

Table 1 summarizes the supported frame set of the LMH0030 and Figure 2 shows the simplified data path of the LMH0030 SD/HD Encoder/Serializer.

TABLE 1. Automated Supported Frames by the LMH0030

| Format | Specification | Frame Rate | Lines | Active Lines | Samples | Active Samples |
|---|---|---|---|---|---|---|
| SDTV, 54 | SMPTE 344M | 60I | 525 | 507/1487 | 3432 | 2880 |
| SDTV, 36 | SMPTE 267M | 60I | 525 | 507/1487 | 2288 | 1920 |
| SDTV, 27 | SMPTE 125M | 60I | 525 | 507/1487 | 1716 | 1440 |
| SDTV, 54 | ITU-R BT 601.5 | 50I | 625 | 577 | 3456 | 2880 |
| SDTV, 36 | ITU-R BT 601.5 | 50I | 625 | 577 | 2304 | 1920 |
| SDTV, 27 | ITU-R BT 601.5 | 50I | 625 | 577 | 1728 | 1440 |

PHYTER® is a registered trademark of National Semiconductor.
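The numeric suffix in the Format column of Table 1 (54, 36, 27) appears to match the parallel word rate in MHz implied by the Samples and Lines columns. The sketch below checks that reading. It assumes, beyond what the excerpt states, that "60I" means 30000/1001 frames per second and "50I" means 25 frames per second; the function and variable names are illustrative only.

```python
from fractions import Fraction

# Assumed frame rates (not given in the excerpt):
# "60I" -> 30000/1001 frames/s, "50I" -> 25 frames/s.
FRAME_RATE = {"60I": Fraction(30000, 1001), "50I": Fraction(25)}

# (format label, frame-rate code, total lines, total samples per line),
# copied from Table 1.
TABLE_1 = [
    ("SDTV, 54", "60I", 525, 3432),
    ("SDTV, 36", "60I", 525, 2288),
    ("SDTV, 27", "60I", 525, 1716),
    ("SDTV, 54", "50I", 625, 3456),
    ("SDTV, 36", "50I", 625, 2304),
    ("SDTV, 27", "50I", 625, 1728),
]


def word_rate_mhz(samples_per_line, total_lines, frame_rate):
    """Total samples per second, in MHz, kept exact with Fraction."""
    return Fraction(samples_per_line * total_lines) * frame_rate / 1_000_000


for label, rate_code, lines, samples in TABLE_1:
    mhz = word_rate_mhz(samples, lines, FRAME_RATE[rate_code])
    print(f"{label} ({rate_code}): {float(mhz):g} MHz")
```

Under these assumptions every row lands exactly on its labeled rate, which suggests the 36 and 54 entries are the same raster sampled more densely rather than different line structures.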

Model 5280 Digital to Analog Composite Video Converter Data Pack

Model 5280 Digital to Analog Composite Video Converter Data Pack. ENSEMBLE DESIGNS, Revision 2.1, SW v2.0.

This data pack provides detailed installation, configuration and operation information for the 5280 Digital to Analog Composite Video Converter and the 5210 Genlock option submodule as part of the Avenue Signal Integration System. The module information in this data pack is organized into the following sections:

• Module Overview
• Applications
• Installation
• Cabling
• Module Configuration and Control
  ° Front Panel Controls and Indicators
  ° Avenue PC Remote Control
  ° Avenue Touch Screen Remote Control
• Troubleshooting
• Software Updating
• Warranty and Factory Service
• Specifications

MODULE OVERVIEW

The 5280 module converts serial digital component video into composite analog video. Six separate composite or two Y/C (S-video) analog video outputs are available. The following analog formats are supported:

• NTSC Composite with or without setup
• PAL Composite

A serial output BNC is provided for applications requiring the serial digital input signal to loop-through to another device. Output timing can be adjusted relative to a reference input signal by installing the 5210 Genlock Option, a submodule that plugs onto the 5280 circuit board. Incorporating a full-frame synchronizer, the 5210 also allows the 5280 to accept serial inputs that are asynchronous to the reference.

As shown in the block diagram on the following page, the serial digital input signal first passes through serial receiver circuitry then on to EDH processing and deserializing. The serial output signal goes to a cable driver and is then AC coupled to a loop-through output BNC on the backplane.

(A/V Codecs) REDCODE RAW (.R3D) ARRIRAW

What is a Codec? Codec is a portmanteau of either "Compressor-Decompressor" or "Coder-Decoder," which describes a device or program capable of performing transformations on a data stream or signal. Codecs encode a stream or signal for transmission, storage or encryption and decode it for viewing or editing. Codecs are often used in videoconferencing and streaming media solutions. A video codec converts analog video signals from a video camera into digital signals for transmission. It then converts the digital signals back to analog for display. An audio codec converts analog audio signals from a microphone into digital signals for transmission. It then converts the digital signals back to analog for playing. The raw encoded form of audio and video data is often called essence, to distinguish it from the metadata information that together make up the information content of the stream and any "wrapper" data that is then added to aid access to or improve the robustness of the stream. Most codecs are lossy, in order to get a reasonably small file size. There are lossless codecs as well, but for most purposes the almost imperceptible increase in quality is not worth the considerable increase in data size. The main exception is if the data will undergo more processing in the future, in which case the repeated lossy encoding would damage the eventual quality too much. Many multimedia data streams need to contain both audio and video data, and often some form of metadata that permits synchronization of the audio and video. Each of these three streams may be handled by different programs, processes, or hardware; but for the multimedia data stream to be useful in stored or transmitted form, they must be encapsulated together in a container format. -

Bit-Serial Digital Interface for High-Definition Television Systems

ANSI/SMPTE 292M-1996, SMPTE STANDARD for Television - Bit-Serial Digital Interface for High-Definition Television Systems

1 Scope

This standard defines a bit-serial digital coaxial and fiber-optic interface for HDTV component signals operating at data rates in the range of 1.3 Gb/s to 1.5 Gb/s. Bit-parallel data derived from a specified source format are multiplexed and serialized to form the serial data stream. A common data format and channel coding are used based on modifications, if necessary, to the source format parallel data for a given high-definition television system. Coaxial cable interfaces are suitable for application where the signal loss does not exceed an amount specified by the receiver manufacturer. Typical loss amounts would be in the range of up to 20 dB at one-half the clock frequency. Fiber optic interfaces are suitable for application at up to 2 km of distance using single-mode fiber.

Several source formats are referenced and others operating within the covered data rate range may be serialized based on the techniques defined by this standard.

ANSI/SMPTE 274M-1995, Television - 1920 × 1080 Scanning and Interface
ANSI/SMPTE 291M-1996, Television - Ancillary Data Packet and Space Formatting
SMPTE RP 184-1995, Measurement of Jitter in Bit-Serial Digital Interfaces
IEC 169-8 (1978), Part 8: R.F. Coaxial Connectors with Inner Diameter of Outer Conductor 6.5 mm (0.256 in) with Bayonet Lock - Characteristic Impedance 50 Ohms (Type BNC), and Appendix A (1993)
IEC 793-2 (1992), Optical Fibres, Part 2: Product Specifications
IEC 874-7 (1990), Part 7: Fibre Optic Connector Type FC

3 Definition of terms
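To make the quoted 1.3 Gb/s to 1.5 Gb/s range and the "one-half the clock frequency" loss point concrete, here is a rough sketch for a single source format. The raster figures are assumptions not stated in this excerpt: 2200 total samples per line, 1125 total lines, 30 frames per second, and 20 bits per sample period (10-bit luma plus 10-bit multiplexed chroma). It also assumes the serial clock frequency is numerically equal to the bit rate. The function names are illustrative.

```python
# Scope limits quoted in the excerpt.
SCOPE_MIN_BPS = 1_300_000_000  # 1.3 Gb/s
SCOPE_MAX_BPS = 1_500_000_000  # 1.5 Gb/s


def serial_bit_rate(samples_per_line, total_lines, frames_per_second,
                    bits_per_sample_period=20):
    """Serialized bit rate in bits per second for one raster (assumed values)."""
    return (samples_per_line * total_lines * frames_per_second
            * bits_per_sample_period)


def half_clock_hz(bit_rate_bps):
    """Frequency at which cable loss would be evaluated.

    Assumes the serial clock frequency equals the bit rate.
    """
    return bit_rate_bps / 2


# Assumed raster: 2200 samples/line, 1125 lines, 30 frames/s.
rate = serial_bit_rate(2200, 1125, 30)
print(f"bit rate: {rate / 1e9:.3f} Gb/s")
print(f"within scope: {SCOPE_MIN_BPS <= rate <= SCOPE_MAX_BPS}")
print(f"half clock: {half_clock_hz(rate) / 1e6:.1f} MHz")
```

With these assumed inputs the rate falls inside the range given in the scope, and the 20 dB typical loss figure would apply at roughly three quarters of a gigahertz.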

On the Accuracy of Video Quality Measurement Techniques

On the accuracy of video quality measurement techniques

Deepthi Nandakumar (Amazon Video, Bangalore, India), Yongjun Wu (Amazon Video, Seattle, USA), Hai Wei (Amazon Video, Seattle, USA), Avisar Ten-Ami (Amazon Video, Seattle, USA)

Abstract: With the massive growth of Internet video streaming, it is critical to accurately measure video quality subjectively and objectively, especially HD and UHD video which is bandwidth intensive. We summarize the creation of a database of 200 clips, with 20 unique sources tested across a variety of devices. By classifying the test videos into 2 distinct quality regions SD and HD, we show that the high correlation claimed by objective video quality metrics is led mostly by videos in the SD quality region. We perform detailed correlation analysis and statistical hypothesis testing of the HD subjective quality scores, and establish that the commonly used ACR methodology of subjective testing is unable to capture significant quality differences, leading to poor measurement accuracy for both subjective and objective

and therefore measuring and optimizing for video quality in these high quality ranges is an imperative for streaming service providers. In this study, we lay specific emphasis on the measurement accuracy of subjective and objective video quality scores in this high quality range.

Globally, a healthy mix of devices with different screen sizes and form factors is projected to contribute to IP traffic in 2021 [1], ranging from smartphones (44%), to tablets (6%), PCs (19%) and TVs (24%). It is therefore necessary for streaming service providers to quantify the viewing experience based on the device, and possibly optimize the encoding and delivery process accordingly.

Digital Video Recording

Digital Video Recording

Five general principles for better video:

• Always use a tripod, always.
• Use good microphones and check your audio levels.
• Be aware of lighting and seek more light.
• Use the “rule of thirds” to frame your subject.
• Make pan/tilt movements slowly and deliberately.
• Don’t shoot directly against a wall.
• Label your recordings.

Tripods

An inexpensive tripod is markedly better than shooting by hand. Use a tripod that is easy to level (look for ones with a leveling bubble) and use it for every shot.

Audio

Remember that this is an audiovisual medium! Use an external microphone when you are recording, not the built-in video recorder microphone. Studies have shown that viewers who watch video with poor audio quality will actually perceive the image as more inferior than it actually is, so good sound is crucial. The camera will automatically disable built-in microphones when you plug in an external. There are several choices for microphones.

Microphones

There are several types of microphones to choose from:

• Omnidirectional
• Unidirectional
• Lavalier
• Shotgun
• Handheld

In choosing microphones you will need to make a decision to use a wired or a wireless system. Hardwired systems are inexpensive and dependable. Just plug one end of the cord into the microphone and the other into the recording device.

Limitations of wired systems:

• Dragging the cord around on the floor causes it to accumulate dirt, which could result in equipment damage or signal distortion.
• Hiding the wiring when recording can be problematic.
• You may pick up a low hum if you inadvertently run audio wiring in a parallel path with electrical wiring.

Model DV6600 User Guide Super Audio CD/DVD Player

Model DV6600 User Guide. Super Audio CD/DVD Player. CLASS 1 LASER PRODUCT.

PRECAUTIONS

ENGLISH

WARRANTY: For warranty information, contact your local Marantz distributor.

RETAIN YOUR PURCHASE RECEIPT: Your purchase receipt is your permanent record of a valuable purchase. It should be kept in a safe place to be referred to as necessary for insurance purposes or when corresponding with Marantz.

IMPORTANT: When seeking warranty service, it is the responsibility of the consumer to establish proof and date of purchase. Your purchase receipt or invoice is adequate for such proof.

FOR U.K. ONLY: This undertaking is in addition to a consumer's statutory rights and does not affect those rights in any way.

ESPAÑOL

GARANTIA: Para obtener información acerca de la garantia póngase en contacto con su distribuidor Marantz.

GUARDE SU RECIBO DE COMPRA: Su recibo de compra es su prueba permanente de haber adquirido un aparato de valor. Este recibo deberá guardarlo en un lugar seguro y utilizarlo como referencia cuando tenga que hacer uso del seguro o se ponga en contacto con Marantz.

IMPORTANTE: Cuando solicite el servicio otorgado por la garantia el usuario tiene la responsabilidad de demonstrar cuándo efectuó la compra. En este caso, su recibo de compra será la prueba apropiada.

ITALIANO

GARANZIA: L’apparecchio è coperto da una garanzia di buon funzionamento della durata di un anno, o del periodo previsto dalla legge, a partire dalla data di acquisto comprovata da un

FRANÇAIS

GARANTIE: Pour des informations sur la garantie, contacter le distributeur local Marantz.

The H.264 Advanced Video Coding (AVC) Standard

Whitepaper: The H.264 Advanced Video Coding (AVC) Standard. What It Means to Web Camera Performance

Introduction

A new generation of webcams is hitting the market that makes video conferencing a more lifelike experience for users, thanks to adoption of the breakthrough H.264 standard. This white paper explains some of the key benefits of H.264 encoding and why cameras with this technology should be on the shopping list of every business.

The Need for Compression

Today, Internet connection rates average in the range of a few megabits per second. While VGA video requires 147 megabits per second (Mbps) of data, full high definition (HD) 1080p video requires almost one gigabit per second of data, as illustrated in Table 1.

Table 1. Display Resolution Format Comparison

| Format | Horizontal Pixels | Vertical Lines | Pixels | Megabits per second (Mbps) |
|---|---|---|---|---|
| QVGA | 320 | 240 | 76,800 | 37 |
| VGA | 640 | 480 | 307,200 | 147 |
| 720p | 1280 | 720 | 921,600 | 442 |
| 1080p | 1920 | 1080 | 2,073,600 | 995 |

Video Compression Techniques

Digital video streams, especially at high definition (HD) resolution, represent huge amounts of data. In order to achieve real-time HD resolution over typical Internet connection bandwidths, video compression is required. The amount of compression required to transmit 1080p video over a three megabits per second link is 332:1! Video compression techniques use mathematical algorithms to reduce the amount of data needed to transmit or store video.

Lossless Compression

Lossless compression changes how data is stored without resulting in any loss of information. Zip files are losslessly compressed so that when they are unzipped, the original files are recovered.
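The Mbps column of Table 1 and the 332:1 figure can be reproduced with a few lines of arithmetic. The excerpt does not say which pixel depth or frame rate it used; the sketch below assumes 16 bits per pixel at 30 frames per second, a combination that happens to match every row of the table. Other combinations with the same product (480 bits per pixel per second) would fit equally well. All names are illustrative.

```python
# Assumed encoding parameters (not stated in the excerpt); their product,
# 480 bits per pixel per second, reproduces the table's Mbps column.
BITS_PER_PIXEL = 16
FRAMES_PER_SECOND = 30

# Resolutions copied from Table 1: name -> (horizontal pixels, vertical lines).
FORMATS = {
    "QVGA": (320, 240),
    "VGA": (640, 480),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}


def raw_mbps(width, height):
    """Uncompressed bitrate in megabits per second (1 Mb = 10**6 bits)."""
    return width * height * BITS_PER_PIXEL * FRAMES_PER_SECOND / 1e6


def compression_ratio(width, height, link_mbps):
    """How many times the raw stream must shrink to fit the link."""
    return raw_mbps(width, height) / link_mbps


for name, (w, h) in FORMATS.items():
    print(f"{name}: {w * h:,} pixels, {raw_mbps(w, h):.0f} Mbps raw")

ratio = compression_ratio(1920, 1080, link_mbps=3)
print(f"1080p over a 3 Mbps link needs about {ratio:.0f}:1 compression")
```

The ratio scales linearly with resolution, so under the same assumptions 720p over the same link would need roughly 147:1.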

HD-A3KU HD-A3KC

HD DVD player HD-A3KU HD-A3KC Owner’s manual

In the spaces provided below, record the Model and Serial No. located on the rear panel of your player. Model No. Serial No. Retain this information for future reference.

SAFETY PRECAUTIONS

CAUTION: RISK OF ELECTRIC SHOCK. DO NOT OPEN.
ATTENTION: RISQUE DE CHOC ELECTRIQUE. NE PAS OUVRIR.

The lightning flash with arrowhead symbol, within an equilateral triangle, is intended to alert the user to the presence of uninsulated dangerous voltage within the product’s enclosure that may be of sufficient magnitude to constitute a risk of electric shock to persons.

The exclamation point within an equilateral triangle is intended to alert the user to the presence of important operating and maintenance (servicing) instructions in the literature accompanying the appliance.

WARNING: TO REDUCE THE RISK OF ELECTRIC SHOCK, DO NOT REMOVE COVER (OR BACK). NO USER-SERVICEABLE PARTS INSIDE. REFER SERVICING TO QUALIFIED SERVICE PERSONNEL.

WARNING: TO REDUCE THE RISK OF FIRE OR ELECTRIC SHOCK, DO NOT EXPOSE THIS APPLIANCE TO RAIN OR MOISTURE. DANGEROUS HIGH VOLTAGES ARE PRESENT INSIDE THE ENCLOSURE. DO NOT OPEN THE CABINET. REFER SERVICING TO QUALIFIED PERSONNEL ONLY.

CAUTION: TO PREVENT ELECTRIC SHOCK, MATCH WIDE BLADE OF PLUG TO WIDE SLOT, FULLY INSERT.

ATTENTION: POUR ÉVITER LES CHOCS ÉLECTRIQUES, INTRODUIRE LA LAME LA PLUS LARGE DE LA FICHE DANS LA BORNE CORRESPONDANTE DE LA PRISE ET POUSSER JUSQU’AU FOND.

CAUTION: This HD DVD player employs a Laser System. To ensure proper use of this product, please read this owner’s manual carefully and retain for future reference.