Models and Algorithms for Control of Sounding Objects

Recommended publications

Nightlife, Djing, and the Rise of Digital DJ Technologies a Dissertatio

UNIVERSITY OF CALIFORNIA, SAN DIEGO. Turning the Tables: Nightlife, DJing, and the Rise of Digital DJ Technologies. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Communication by Kate R. Levitt. Committee in Charge: Professor Chandra Mukerji, Chair; Professor Fernando Dominguez Rubio; Professor Kelly Gates; Professor Christo Sims; Professor Timothy D. Taylor; Professor K. Wayne Yang. 2016. Copyright Kate R. Levitt, 2016. All rights reserved. The Dissertation of Kate R. Levitt is approved, and it is acceptable in quality and form for publication on microfilm and electronically. University of California, San Diego, 2016. DEDICATION: For my family. TABLE OF CONTENTS: Signature Page; Dedication; Table of Contents; List of Images; Acknowledgements; Vita; Abstract of the Dissertation; Introduction; Methodologies; On Music, Technology, Culture; Overview of Dissertation; Chapter One: The Freaks -

CDJ-2000 & CDJ-900 Natural Selection

CDJ-2000 & CDJ-900 Natural Selection. A new species of CDJ has arrived. Introducing the CDJ-2000 & CDJ-900 multi-format players. Designed for the ever evolving needs of DJs, the new CDJs handle all major music formats from multiple sources including USB and SD memory devices. CDJ-2000 Natural Selection. Introducing a major leap forward for our legendary club standard CDJ series: the CDJ-2000 is the new professional player for the digital age. Playback of multiple file formats / Perform off USB storage devices & SD card / rekordbox™ music database management software included / Pro DJ Link allows music data sharing among players from single source / High-quality Wolfson DAC / 6.1 full-colour LCD screen / Rotary selector function for easy track selection / Needle Search bar for rapid track point selection / MIDI & HID control via USB / Hot Cue Banks / Quantize Looping / 4 point Jog wheel illumination with increased tension adjustability / Tag tracks. The CDJ-2000 fizzes with fresh DNA and can play music from multiple sources, including CD, DVD, USB storage devices and SD memory cards. Simply take your USB device to the club, plug into the CDJ-2000 and play. Supported file formats include MP3, AAC, WAV and AIFF (both 16-bit and 24-bit), for the next generation of [...] Wolfson DAC which processes high-quality audio files up to 96kHz and has a signal-to-noise ratio of 117dB (11dB higher than the CDJ-1000MK3), giving incredibly accurate sound reproduction. Each CDJ has a built-in 24-bit/48kHz sound card, along with MIDI and HID control over USB. -

Sheer Genius and Totally Cool! It's Zomo!

SHEER GENIUS AND TOTALLY COOL! IT'S ZOMO! Founded in 2003, the Zomo brand is a division of GSM-Multimedia GmbH. Developed by DJs with a total understanding of the club and nightlife scenes. Zomo products: used by serious DJs and found in all good record stores. CONTENT: Furniture; Headphones; Pro Stands; Laptop Stands; Equipment Cases; Racks & Equipments; Record cases; Bags; CD-Bags & Cases; Accessories. Snap this QR code and get instant access to www.zomo.de. Icon legend: Capacity (number of items held inside); 75/25 (classic cut); Trolley (luxury travelling with trolley); 19" Rack Unit (1 unit = 44.45mm); Notebook Size (maximum notebook size); 50/50 (half cut for perfect storage); Adjustable Panel (adjustable position/location); Rotation (rotatable); Pro Flight (extreme flight proofed quality); Removable Lid (removable top part); Wheels (wheels for easy transporting); Cable Cover (protect & arrange cables). Detailed information on all products on www.zomo.de. FURNITURE: Berlin MK2 Deckstand; Miami MK2 Deckstand; Miami MK2 Toxic Deckstand LTD; Berlin MK2 Toxic Deckstand LTD; Powerkit PK1 Acryl Front Laptop Tray Acryl Headphone Tray Acryl Powerkit PK2; Milano Studio Desk; Vegas Deckstand; Ibiza 120 Deckstand; Ibiza 150 Deckstand; Detroit 120; Detroit 150 -

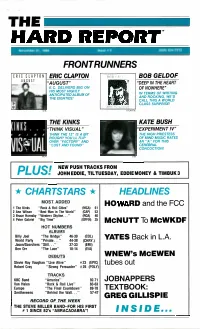

HARD REPORT' November 21, 1986 Issue # 6 (609) 654-7272 FRONTRUNNERS ERIC CLAPTON BOB GELDOF "AUGUST" "DEEP in the HEART E.C

THE HARD REPORT. November 21, 1986. Issue # 6. (609) 654-7272. FRONTRUNNERS: ERIC CLAPTON, "AUGUST": E.C. DELIVERS BIG ON HIS MOST HIGHLY ANTICIPATED ALBUM OF THE EIGHTIES! BOB GELDOF, "DEEP IN THE HEART OF NOWHERE": IN TERMS OF WRITING AND ROCKING, WE'D CALL THIS A WORLD CLASS SURPRISE! (ATLANTIC). THE KINKS, "THINK VISUAL": THINK THE 12" IS A BIT ROUGH? YOU'LL FLIP OVER "FACTORY" AND "LOST AND FOUND" (MCA). KATE BUSH, "EXPERIMENT IV": THE HIGH PRIESTESS OF MIND MUSIC RATES AN "A" FOR THIS CEREBRAL CONCOCTION! (EMI). PLUS! ETTRACKS EDDIE MONEY & TIMBUK3. CHARTSTARS. MOST ADDED: 1 The Kinks "Rock & Roll Cities" (MCA) 61; 2 Ann Wilson "Best Man in The World" (CAP) 53; 3 Bruce Hornsby "Western Skyline..." (RCA) 40; 4 Peter Gabriel "Big Time" (GEFFEN) 35. HOT NUMBERS, ALBUMS: Billy Joel "The Bridge" 46-39 (COL); World Party "Private. 44-38 (CHRY.); Jason/Scorchers "Still..." 37-33 (EMI); Ben Orr "The Lace" 18-14 (E/A). DEBUTS: Stevie Ray Vaughan "Live Alive" #23 (EPIC); Robert Cray "Strong Persuader" #26 (POLY). TRACKS: KBC Band "America" 92-71; Van Halen "Rock & Roll Live" 83-63; Europe "The Final Countdown" 89-78; Smithereens "Behind the Wall..." 57-47. HEADLINES: HOWARD and the FCC; McNUTT To McWKDF; YATES Back in L.A.; WNEW's McEWEN tubes out; JOBNAPPERS; TEXTBOOK: GREG GILLISPIE. RECORD OF THE WEEK: THE STEVE MILLER BAND, FOR HIS FIRST # 1 SINCE 82's "ABRACADABRA"! INSIDE... -

Music & Film Memorabilia

MUSIC & FILM MEMORABILIA. Friday 11th September at 4pm. On View Thursday 10th September 10am-7pm and from 9am on the morning of the sale. Catalogue web site: WWW.LSK.CO.Uk. Results available online approximately one hour following the sale. Buyer's Premium charged on all lots at 20% plus VAT. Live bidding available through our website (3% plus VAT surcharge applies). Your contact at the saleroom is: Glenn Pearl, Music & Film Memorabilia specialist, [email protected], 01284 748 625. Image this page: 673. Chartered Surveyors, Land & Estate Agents, Auctioneers & Valuers, est. 1869 (150 years). www.lsk.co.uk. The first 91 lots of the auction are from the collection of Jonathan Ruffle, a British writer, director and producer, who has made TV and radio programmes for the BBC, ITV, and Channel 4. During his time as a producer of the Radio 1 show from the mid-1980s-90s he collected the majority of the lots on offer here. These include rare vinyl, acetates, and Factory Records promotional items. The majority of the vinyl lots being offered for sale in Mint or Near-Mint condition, with some having never been played. Lot 506: Del Amitri, a presentation gold disc for the album Waking Hours, with photograph of the band and plaque below "Presented to Jonathan Ruffle to recognise sales in the United Kingdom of more than 100,000 copies of the A & M album "Waking Hours" 1990", framed and glazed, 52 x 42cm. £50-80. Lot 507: Aerosmith, a presentation CD for the album Get a Grip with plaque below "Presented to Jonathan -

SL1 Manual for Serato Scratch Live 2.5.0

SETTING THE STANDARD FOR PROFESSIONAL DJS. RANE SL1 FOR SERATO SCRATCH LIVE • OPERATOR'S MANUAL 2.5.0. Important Safety Instructions: For the continued safety of yourself and others we recommend that you read the following safety and installation instructions. Keep this document in a safe location for future reference. Please heed all warnings and follow all instructions. Do not use this equipment in a location where it might become wet. Clean only with a damp cloth. This equipment may be used as a table top device, although stacking of the equipment is dangerous and not recommended. Equipment may be located directly above or below this unit, but note that some equipment (like large power amplifiers) may cause an unacceptable amount of hum or may generate too much heat and degrade the performance of this equipment. FCC Statement: This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to try to correct the interference by one or more of the [...] FCC Declaration of Conformity: Brand: Rane. Model: SL1. This device complies with part 15 of the FCC Rules. Operation is subject to the following two conditions: (1) This device may not cause harmful interference, and (2) this device must accept any interference received, including interference that may cause undesired operation. Responsible Party: Rane Corporation, 10802 47th Avenue West, Mukilteo WA 98275-5000 USA. Phone: 425-355-6000 -

A Glimpse Through T

A Glimpse Through T 2A Thursday, February 20,1988 Daily Nexus. No Golden Ponies Here. Who on earth are The Golden Palominos? The core of the band is drummer and producer Anton Fier and bass guitarist Bill Laswell. Visions of Excess also boasts a lengthy list of guest appearances, including Richard Thompson, John Lydon (a.k.a. Johnny Rotten), Bernie Worrell of Talking Heads fame and R.E.M.'s Michael Stipe. I have never heard of Syd Straw before but she has a unique voice, a lot like Chrissie Hynde, Exene Cervenka and Stevie Nicks all in one. Despite the impressive name-dropping, the album is, as a whole, a bit mediocre. A few songs, however, [...] bites. Apart from these three songs, the rest of Visions of Excess shows little promise. "Boy (Go)" has a full, heavy sound: a wall of percussion. If it contained more melody, it would sound more like R.E.M. Stipe's voice is unmistakable but this stuff is just not of the same caliber. The different vocal levels may prevent a sense of complete repetitiveness but the song is overly incantatory in places. "Clustering Train" is similar, with too much guitar accompanying Stipe's drawn-out whine to be true R.E.M. Join us Thursdays at 5:30 PM for: 2/20 The New Male-Female Relationship, Janice and John Baldwin, Ph.D. -

3Rd Party Controller Quickstart Guide • Pioneer

3RD PARTY CONTROLLER QUICKSTART GUIDE • PIONEER CDJ-400 • NUMARK DMC2 • DENON DN-HD2500 • DENON DN-HC4500 • NUMARK ICDX • SERATO SCRATCH LIVE 3RD PARTY CONTROLLER QUICKSTART 1.8.2. PIONEER CDJ-400: To use a pair of CDJ400s with Scratch LIVE, you will need at least 3 available USB ports. If you don't have 3 ports available you may be able to connect your CDJ400s to a powered USB hub. It is, however, important to always connect your Scratch LIVE hardware directly to your computer. First, make sure you are running Scratch LIVE 1.8.2 or later. (Earlier versions do not support the CDJ400.) Connect your Scratch LIVE hardware (SSL1, TTM57 or the MP4) as per normal into an available USB port on your computer. Do the same with both of your CDJ400s, connecting each player to separate USB ports. Start Scratch LIVE and set each virtual deck to Internal Mode. Turn on both CDJ400s, and switch them to USB mode by pressing the button in the top left [...] Below is the information displayed on the LCD screen of the Pioneer CDJ-400 when used with Scratch LIVE. Artist name / Song name / Album name: use the Text Mode / Utility Mode button to toggle*. Track number*. BPM: changes in value with pitch slider movement*. Tempo %: changes in value with pitch slider movement. +- Tempo range: +-6 / +-10 / +-16; use the Tempo button to toggle. Time elapsed / Time remaining (Digits): use the Time Mode / Auto Cue button to toggle. Time elapsed / Time remaining (Visual readout): use the Time Mode / Auto Cue button to toggle. -

Performance in EDM - a Study and Analysis of Djing and Live Performance Artists

California State University, Monterey Bay. Digital Commons @ CSUMB. Capstone Projects and Master's Theses, 12-2018. Performance in EDM - A Study and Analysis of DJing and Live Performance Artists. Jose Alejandro Magana, California State University, Monterey Bay. Follow this and additional works at: https://digitalcommons.csumb.edu/caps_thes_all. Part of the Music Performance Commons. Recommended Citation: Magana, Jose Alejandro, "Performance in EDM - A Study and Analysis of DJing and Live Performance Artists" (2018). Capstone Projects and Master's Theses. 364. https://digitalcommons.csumb.edu/caps_thes_all/364 This Capstone Project (Open Access) is brought to you for free and open access by the Capstone Projects and Master's Theses at Digital Commons @ CSUMB. It has been accepted for inclusion in Capstone Projects and Master's Theses by an authorized administrator of Digital Commons @ CSUMB. For more information, please contact [email protected]. Jose Alejandro Magaña, Senior Capstone, Professor Sammons. Performance in EDM - A Study and Analysis of DJing and Live Performance Artists. 1. Introduction. Electronic Dance Music (EDM) culture today is often associated with top mainstream DJs and producers such as Deadmau5, Daft Punk, Calvin Harris, and David Guetta. These are artists who have established their careers around DJing and/or producing electronic music albums or remixes and have gone on to headline world-renowned music festivals such as Ultra Music Festival, Electric Daisy Carnival, and Coachella. The problem is that the term "DJ" can be mistakenly used interchangeably for someone who mixes pre-recorded pieces of music at a venue with a set of turntables and a mixer and for an artist who manipulates or creates music or audio live using a combination of computers, hardware, and/or controllers. -

The Underrepresentation of Female Personalities in EDM

The Underrepresentation of Female Personalities in EDM A Closer Look into the “Boys Only”-Genre DANIEL LUND HANSEN SUPERVISOR Daniel Nordgård University of Agder, 2017 Faculty of Fine Art Department of Popular Music There’s no language for us to describe women’s experiences in electronic music because there’s so little experience to base it on. - Frankie Hutchinson, 2016. ABSTRACT EDM, or Electronic Dance Music for short, has become a big and lucrative genre. The once nerdy and uncool phenomenon has become quite the profitable business. Superstars along the lines of Calvin Harris, David Guetta, Avicii, and Tiësto have become the rock stars of today, and for many, the role models for tomorrow. This is though not the case for females. The British magazine DJ Mag has an annual contest, where listeners and fans of EDM can vote for their favorite DJs. In 2016, the top 100-list only featured three women; Australian twin duo NERVO and Ukrainian hardcore DJ Miss K8. Nor is it easy to find female DJs and acts on the big electronic festival-lineups like EDC, Tomorrowland, and the Ultra Music Festival, thus being heavily outnumbered by the go-go dancers on stage. Furthermore, the commercial music released are almost always by the male demographic, creating the myth of EDM being an industry by, and for, men. Also, controversies on the new phenomenon of ghost production are heavily rumored among female EDM producers. It has become quite clear that the EDM industry has a big problem with the gender imbalance. Based on past and current events and in-depth interviews with several DJs, both female and male, this paper discusses the ongoing problems women in EDM face. -

Karen Mantler by Karen Mantler

Karen Mantler by Karen Mantler I was conceived by Carla Bley and Michael Mantler at the Newport Jazz Festival in 1965. Born in 1966, I was immediately swept into the musician's life on the road. After having checked me at the coatroom of the Berlin Jazz Festival, to the horror of the press, my parents realized that I was going to have to learn to play an instrument in order to be useful. But since I was still just a baby and they couldn't leave me alone, they had to bring me on stage with them and keep me under the piano. This is probably why I feel most at home on the stage. In 1971, when I was four, my mother let me have a part in Escalator Over The Hill and the next year I sang on another of her records, Tropic Appetites. By 1977 I had learned to play the glockenspiel, and I joined the Carla Bley Band. I toured Europe and the States with her several times and played on her Musique Mecanique album. After playing at Carnegie Hall in 1980, where I tried to steal the show by pretending to be Carla Bley, my mother fired me, telling me "get your own band". I realized that I was going to have to learn a more complicated instrument. After trying drums, bass, and flute, which I always lost interest in, I settled on the clarinet. I joined my elementary school band and quickly rose to the head of the clarinet section. The band director let me take the first improvised solo in the history of the Phoenicia (a small town near Woodstock, NY) elementary school. -

Connections Pioneer CDJ-900/CDJ-2000

Pioneer CDJ-900/CDJ-2000 Scratch Live Connection Guide. Connections: Connect the Multi Player (or players, if more than one are to be connected*) to the computer with the use of a USB cable. The example here is for connecting to CDJ-900, but the method is the same for CDJ-2000. *Up to 3 Multi Players can be connected to the Scratch Live. [Figure: Connecting with Serato Scratch Live. Labels: computer running Scratch Live, USB cables, Scratch Live SL1/SL3 (left deck, right deck), CDJ-900 players, DJ mixer, audio cable to amp, power cable to an AC outlet.] * Scratch Live is a registered trademark of Serato Audio Research. Using Multi Players as Scratch Live Controllers: Switch on the power to all units once the connections have been made. Then, set up the Multi Players in accordance with the following procedures. [Figure: Multi Player control panel with the [MENU] and [LINK] buttons marked.] Press the [LINK] button on the Multi Player. When [CONTROL MODE (HID STANDARD)] is displayed on the Multi Player's main display area, press and hold the [MENU] button for at least one second to advance to the [UTILITY] mode.