Name, Day Month, 2019 Presented by Yuri Panchul, MIPS Open

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

5G: Perspectives from a Chipmaker 5G Electronic Workshop, LETI Innovation Days – June 2019

5G: Perspectives from a Chipmaker 5G electronic workshop, LETI Innovation Days – June 2019 Guillaume Vivier Sequans communications 1 ©2019 Sequans Communications |5G: Perspective from a chip maker – June 2019 MKT-FM-002-R15 Outline • Context, background, market • 5G chipmaker: process technology thoughts and challenges • Conclusion 2 ©2019 Sequans Communications |5G: Perspective from a chip maker – June 2019 5G overall landscape • 3GPP standardization started in Sep 2015 – 5G is wider than RAN (includes new core) – Rel. 15 completed in Dec 2018. ASN1 freeze for 4G-5G migration options in June 19 – Rel. 16 on-going, to be completed in Dec 2019 (June 2020) • Trials and more into 201 operators, 80+ countries (source GSA) • Commercial deployments announced in – Korea, USA, China, Australia, UAE 3 ©2019 Sequans Communications |5G: Perspective from a chip maker – June 2019 Ericsson Mobility Report Nov 2018 • “In 2024, we project that 5G will reach 40 percent population coverage and 1.5 billion subscriptions“ • Interestingly, the report highlights the fact that IoT will continue to grow, beyond LWPA, leveraging higher capability of LTE and 5G 4 ©2019 Sequans Communications |5G: Perspective from a chip maker – June 2019 5G overall landscape • eMBB: smartphone and FWA market – Main focus so far from the ecosystem • URLLC: the next wave – Verticals: Industry 4.0, gaming, media Private LTE/5G deployment, … – V2X and connected car • mMTC: – LPWA type of communication is served by cat-M and NB-IoT – 5G opens the door to new IoT cases not served by LPWA, • Example surveillance camera with image processing on the device • Flexibility is key – From Network side, NVF, SDN, Slicing, etc. -

Mediatek Inc. 2019 Annual General Shareholders' Meeting Minutes

MediaTek Inc. 2019 Annual General Shareholders’ Meeting Minutes Time: 9:00 a.m., June 14, 2019 Place: International Convention Center, MediaTek Inc. (No. 1, Dusing 1st Road, Hsinchu Science Park, Hsinchu City, Taiwan R.O.C.) The Number of Shares of Attendance: Attending shareholders and proxy represented 1,305,281,888 shares (including 1,066,881,115 shares which attended through electronic voting) accounting for 82.30% of 1,585,899,498 shares, the Company’s total outstanding shares (deducting non-voting shares as required in Article 179 of the Company Act) Directors Present: Ming-Kai Tsai, Rick Tsai, Ching-Jiang Hsieh, Wayne Liang, Cheng-Yaw Sun, Peng-Heng Chang, Chung-Yu Wu Chairman: Mr. Ming-Kai Tsai Recorder: Ms. Emma Chang Call the Meeting to Order: The aggregate shareholding of the shareholders present constituted a quorum. The Chairman called the meeting to order. 1. Chairman’s Remarks: Omitted. 2. Reporting Items: Report Item (1) Subject: MediaTek’s 2018 business report. Explanatory Note: MediaTek’s 2018 business report is attached hereto as Attachment 1. (Noted) Report Item (2) Subject: Audit Committee’s review report on the 2018 financial statements. Explanatory Note: 2018 Audit Committee’s review report is attached hereto as Attachment 2. (Noted) Report Item (3) Subject: Report on 2018 employees’ compensation and remuneration to directors. Explanatory Note: (1). According to Article 24 of the Company's Articles of Incorporation, if there is any profit for a specific fiscal year, the Company shall allocate no less than 1% of the profit as employees’ compensation and shall allocate at a maximum of 0.5% of the profit as remuneration to directors, provided that the Company’s accumulated losses shall have been covered in advance. -

EDIT THIS 2021 ISRI 1201 Post-Hearing Letter 050621

Juelsgaard Intellectual Property and Innovation Clinic Mills Legal Clinic Stanford Law School Crown Quadrangle May 7, 2021 559 Nathan Abbott Way Stanford, CA 94305-8610 [email protected] Regan Smith 650.724.1900 Mark Gray United States Copyright Office [email protected] [email protected] Re: Docket No. 2020-11 Exemptions to Prohibition Against Circumvention of Technological Measures Protecting Copyrighted Works Dear Ms. Smith and Mr. Gray: I write to respond to your April 27 post-hearing letter requesting the materials that I referenced during the April 21 hearing related to Proposed Class 10 (Computer Programs – Unlocking) that were not included in our written comments. In particular, I cited to three reports from the Global mobile Suppliers Association (“GSA”) to illustrate the rapid increase in cellular-enabled devices with 5G capabilities in the last three years. In March 2019, GSA had identified 33 announced 5G devices from 23 vendors in 7 different form factors.1 By March 2020, GSA had identified 253 announced 5G devices from 81 vendors in 16 different form factors, including the first 5G-enabled laptops, TVs, and tablets.2 And by April 2021, GSA had identified 703 announced 5G devices from 122 vendors in 22 different form factors.3 It should be noted that some of the 22 form factors, such as 5G modules,4 can be deployed across a wide range of use cases that are not directly tracked by the GSA reports.5 For example, one distributor of Quectel’s 5G modules described the target applications as including: Telematics & transport – vehicle tracking, asset tracking, fleet management Energy – electricity meters, gas/water meter, smart grid Payment – wireless pos [point of service], cash register, ATM, vending machine Security – surveillance, detectors Smart city – street lighting, smart parking, sharing economy Gateway – consumer/industrial router 1 GSA, 5G Device Ecosystem (Mar. -

5G, Lte & Iot Components Vendors Profiled (28)

5G, LTE & IOT COMPONENTS VENDORS PROFILED (28) Altair Semiconductor Ltd., a subsidiary of Sony Corp. / www.altair-semi.com Analog Devices Inc. (NYSE: ADI) / www.analog.com ARM Ltd., a subsidiary of SoftBank Group Corp. / www.arm.com Blu Wireless Technology Ltd. / www.bluwirelesstechnology.com Broadcom Corp. (Nasdaq: BRCM) / www.broadcom.com Cadence Design Systems Inc. / www.cadence.com Ceva Inc. (Nasdaq: CEVA) / www.ceva-dsp.com eASIC Corp. / www.easic.com GCT Semiconductor Inc. / www.gctsemi.com HiSilicon Technologies Co. Ltd. / www.hisilicon.com Integrated Device Technology Inc. (Nasdaq: IDTI) / www.idt.com Intel Corp. (Nasdaq: INTC) / www.intel.com Lime Microsystems Ltd. / www.limemicro.com Marvell Technology Group Ltd. (Nasdaq: MRVL) / www.marvell.com MediaTek Inc. / www.mediatek.com Microsemi Corp., a subsidiary of Microchip Technology Inc. (Nasdaq: MCHP) / www.microsemi.com MIPS, an IP licensing business unit of Wave Computing Inc. / www.mips.com Nordic Semiconductor ASA (OSX: NOD) / www.nordicsemi.com NXP Semiconductors N.V. (Nasdaq: NXPI) / www.nxp.com Octasic Inc. / www.octasic.com Peraso Technologies Inc. / www.perasotech.com Qualcomm Inc. (Nasdaq: QCOM) / www.qualcomm.com Samsung Electronics Co. Ltd. (005930:KS) / www.samsung.com Sanechips Technology Co. Ltd., a subsidiary of ZTE Corp. (SHE: 000063) / www.sanechips.com.cn Sequans Communications S.A. (NYSE: SQNS) / www.sequans.com Texas Instruments Inc. (NYSE: TXN) / www.ti.com Unisoc Communications Inc., a subsidiary of Tsinghua Unigroup Ltd. / www.unisoc.com Xilinx Inc. (Nasdaq: XLNX) / www.xilinx.com © HEAVY READING | AUGUST 2018 | 5G/LTE BASE STATION, RRH, CPE & IOT COMPONENTS . -

Certain Semiconductor Devices and Consumer Audiovisual Products Containing the Same That Infringe U.S

UNITED STATES INTERNATIONAL TRADE COMMISSION Washington, D.C. In the Matter of CERTAIN SEMICONDUCTOR Investigation No. 337-TA-1047 DEVICES AND CONSUMER AUDIOVISUAL PRODUCTS CONTAINING THE SAME NOTICE OF COMMISSION DETERMINATION NOT TO REVIEW AN INITIAL DETERMINATION TERMINATING THE INVESTIGATION AS TO ONE RESPONDENT GROUP AGENCY: U.S. International Trade Commission. ACTION: Notice. SUMMARY: Notice is hereby given that the U.S. International Trade Commission has determined not to review an initial determination (“ID”) (Order No. 35) issued by the presiding administrative law judge (“ALJ”). FOR FURTHER INFORMATION CONTACT: Robert Needham, Office of the General Counsel, U.S. International Trade Commission, 500 E Street, SW., Washington, D.C. 20436, telephone (202) 708-5468. Copies of non-confidential documents filed in connection with this investigation are or will be available for inspection during official business hours (8:45 a.m. to 5:15 p.m.) in the Office of the Secretary, U.S. International Trade Commission, 500 E Street, SW., Washington, D.C. 20436, telephone (202) 205- 2000. General information concerning the Commission may also be obtained by accessing its Internet server (https://www.usitc.gov). The public record for this investigation may be viewed on the Commission's electronic docket (EDIS) at https://edis.usitc.gov. Hearing-impaired persons are advised that information on this matter can be obtained by contacting the Commission’s TDD terminal on (202) 205- 1810. SUPPLEMENTARY INFORMATION: The Commission instituted this investigation on April 12, 2017, based on a complaint filed by Broadcom Corporation (“Broadcom”) of Irvine, California. 82 FR 17688. The complaint alleges violations of section 337 of the Tariff Act of 1930, as amended, 19 U.S.C. -

956830 Deliverable D2.1 Initial Vision and Requirement Report

European Core Technologies for future connectivity systems and components Call/Topic: H2020 ICT-42-2020 Grant Agreement Number: 956830 Deliverable D2.1 Initial vision and requirement report Deliverable type: Report WP number and title: WP2 (Strategy, vision, and requirements) Dissemination level: Public Due date: 31.12.2020 Lead beneficiary: EAB Lead author(s): Fredrik Tillman (EAB), Björn Ekelund (EAB) Contributing partners: Yaning Zou (TUD), Uta Schneider (TUD), Alexandros Kaloxylos (5G IA), Patrick Cogez (AENEAS), Mohand Achouche (IIIV/Nokia), Werner Mohr (IIIV/Nokia), Frank Hofmann (BOSCH), Didier Belot (CEA), Jochen Koszescha (IFAG), Jacques Magen (AUS), Piet Wambacq (IMEC), Björn Debaillie (IMEC), Patrick Pype (NXP), Frederic Gianesello (ST), Raphael Bingert (ST) Reviewers: Mohand Achouche (IIIV/Nokia), Jacques Magen (AUS), Yaning Zou (TUD), Alexandros Kaloxylos (5G IA), Frank Hofmann (BOSCH), Piet Wambacq (IMEC), Patrick Cogez (AENEAS) D 2.1 – Initial vision and requirement report Document History Version Date Author/Editor Description 0.1 05.11.2020 Fredrik Tillman (EAB) Outline and contributors 0.2 19.11.2020 All contributors First complete draft 0.3 18.12.2020 All contributors Second complete draft 0.4 21.12.2020 Björn Ekelund Third complete draft 1.0 21.12.2020 Fredrik Tillman (EAB) Final version List of Abbreviations Abbreviation Denotation 5G 5th Generation of wireless communication 5G PPP The 5G infrastructure Public Private Partnership 6G 6th Generation of wireless communication AI Artificial Intelligence ASIC Application -

Zidoo X1ii Android Smart Tv Box Zidoo X1ii Box

ZIDOO X1II ANDROID SMART TV BOX What’s ZIDOO? ZIDOO, a young and positive company, which is the advanced ARM multi-core frame industrial products and consumer electrics developer. Our founders are experienced in industrial and OTT areas for over 8 years, who are really good at providing services for well-known brands at home and abroad. We are specializing in OTT, DVB, Streaming Player, Solutions and Services of supply chain. Maintaining good relations of cooperation with MSTAR, ALLWINNER, ROCKCHIP, AMLOGIC, ACTIONS SEMI and other chipset original factories. Providing many brands of TV BOX with technology export. ZIDOO is also a unique brand, which has strong advantages with technology and innovation. It’s gradually known all over the world. What is... ZIDOO X1II BOX ZIDOO X1 II Streaming media player ZIDOO X1, with its pretty high cost-effective, reliable quality,by unanimous acclaim with all users. Now, we have released the second generation X1 product--X1 II, it will go on with classic design of X1, which's increased 70% performance, improved 120% the ability of decoding. 1 / 13 True 4K Media Player Power by Rockchip Quad-core 3229,Support H.265 10Bit hardware decoding H.265(HEVC) 10bit: 4K2K@60fps(Up to 200 Mbps) H.264 10bit up to HP level 5.1: 4K2K@30fps(Up to 250 Mbps) 4K 8-bit VP9 @ 30 fps (Up to 200 Mbps) HDMI 2.0 4k*60p ZIDOO X1 II support hdmi output the resolution 4K*2K@60fps ZIDOO choose the hi-end HDMI cable as standard accessories to match X1 II You can enjoy large-scale games which require UHD frame rate@60fps and can enjoy 18Gbps data transfer and 12-Bit real-color without any data losing on ZIDOO X1 II H.265 10Bit Hardware Decoding 2 / 13 ZIDOO X1 II supports H.265 video decoding which transfers the same quality video data at half of the bandwidth Comparing to H.264. -

Spreadtrum Android IMEI Toolrar

1 / 5 Spreadtrum Android IMEI Tool.rar How to MTK Android Phone IMEI Number Writer by SN Writer Tool mp3 ... How To Write IMEI On Spreadtrum (SPD) Devices Using WriteIMEI Tool ... tool is here http://www.mediafire.com/download/v76ji2c3bats92t/Spd+Imei+tool.rar. PlayStop .... IMEI Writing Tool Works ... android spd imei writing tool_By Mayank Jain.rar - [Click for QR .... Spreadtrum imei tool. Download for Android smartphone spd imei .... SpreadTrum Flash Tools ResearchDownload · Download. 3.7 on 50 votes. SPD Upgrade Tool comes with very simple interface. You can easily load the firmware .... Write imei tool samsung, write imei tool v1.1-dual imei download, spreadtrum imei tool new, imei write tool ... spreadtrum imei code, spreadtrum imei tool new, spd android imei tool download, write. ... R1.5.3001, 860 KB, Mediafire, Download.. suspenze Průmyslový S pozdravem RS] Winrar 3.80 PRO - RAR Repair Tool ... Šikovný náměstí navrhnout Spreadtrum Android IMEI Tool.rar | korbiriper's Ownd ... So i was browsing inside my old broken spreadtrum phone's /system/app. and i saw these phones have ... Change IMEI; Change 3G/2G Settings; View Real Configuration; etc ... EngineerMode.rar ... Android Apps and Games.. unlock j320a 6.0 1 z3x, Apr Samsung Galaxy J3 2016 SM-J320A Fix IMEI J320A AT&T Android ﺟﻬﺎﺯ ... Z3x Samsung Tool PRO Latest Setup · 2018 ,16 6.0. ... Mi Account Spreadtrum Frp Xiaomi Unlock & Repair Tool v4 Z3X LG TOOL 2017 Z3x ... 2-3G Tool 9.5.rar. code in loader: 1548; Download Miracle Box v2.54 Loader; .... Direct Unlock, Repair Imei, Patch cert, Read/Write Efs, Firmware, Cert etc. -

![Arxiv:1910.06663V1 [Cs.PF] 15 Oct 2019](https://docslib.b-cdn.net/cover/5599/arxiv-1910-06663v1-cs-pf-15-oct-2019-1465599.webp)

Arxiv:1910.06663V1 [Cs.PF] 15 Oct 2019

AI Benchmark: All About Deep Learning on Smartphones in 2019 Andrey Ignatov Radu Timofte Andrei Kulik ETH Zurich ETH Zurich Google Research [email protected] [email protected] [email protected] Seungsoo Yang Ke Wang Felix Baum Max Wu Samsung, Inc. Huawei, Inc. Qualcomm, Inc. MediaTek, Inc. [email protected] [email protected] [email protected] [email protected] Lirong Xu Luc Van Gool∗ Unisoc, Inc. ETH Zurich [email protected] [email protected] Abstract compact models as they were running at best on devices with a single-core 600 MHz Arm CPU and 8-128 MB of The performance of mobile AI accelerators has been evolv- RAM. The situation changed after 2010, when mobile de- ing rapidly in the past two years, nearly doubling with each vices started to get multi-core processors, as well as power- new generation of SoCs. The current 4th generation of mo- ful GPUs, DSPs and NPUs, well suitable for machine and bile NPUs is already approaching the results of CUDA- deep learning tasks. At the same time, there was a fast de- compatible Nvidia graphics cards presented not long ago, velopment of the deep learning field, with numerous novel which together with the increased capabilities of mobile approaches and models that were achieving a fundamentally deep learning frameworks makes it possible to run com- new level of performance for many practical tasks, such as plex and deep AI models on mobile devices. In this pa- image classification, photo and speech processing, neural per, we evaluate the performance and compare the results of language understanding, etc. -

Fomalhaut Techno Solutions★ Minatake Mitchell Kashio Fomalhaut 4G/5G WIRELESS NETWORK SYSTEM Techno Solutions★ ②

Base Station & Smartphone Source: Huawei Fomalhaut Techno Solutions★ Minatake Mitchell Kashio Fomalhaut 4G/5G WIRELESS NETWORK SYSTEM Techno Solutions★ ② ② ① ③ Source: maximintegrated.com (C) Fomalhaut Techno Solutions. All rights reserved. / Phone: +81-3-6759-4289 / Mail: [email protected] 5G Base Station & Smartphone 2 Fomalhaut 1. HUAWEI 5G BASEBAND UNIT BBU5900 Techno Solutions★ 4G BBU 5G SUB-6 BBU MASTER BBU (C) Fomalhaut Techno Solutions. All rights reserved. / Phone: +81-3-6759-4289 / Mail: [email protected] 5G Base Station & Smartphone 3 Fomalhaut Techno Solutions MASTER BBU 1 notch = 1mm ★ OSFP Port (mnf. unknown) (assumption) CDFP Port (mnf. unknown) (assumption) DRAM (Samsung) K4A8G165WB Flash Memory (Macronix) MX66U51235F Application Processor (HiSilicon) Hi1383 (assumption) Network Processor (HiSilicon) SD6603 (assumption) Unknown (TI) LMK05805B2 Network Processor (HiSilicon) SD6186 (assumption) DRAM (Samsung) K4A4G165WE FPGA (Xilinx) KINTEX XCKU5P (C) Fomalhaut Techno Solutions. All rights reserved. / Phone: +81-3-6759-4289 / Mail: [email protected] 5G Base Station & Smartphone 4 Fomalhaut Techno Solutions 5G SUB-6 BBU 1 notch = 1mm ★ LAN Filter (MNC) G24117CE 1735X (assumption) LAN Filter (Delta) 5G LFE8505 Gigabit Ethernet Transceiver (Marvell) 88E1322 10/100/1000 Base-T Gigabit Ethernet Transceiver (Broadcom) BCM54219 DRAM (Samsung) OSFP Port K4A8G165WB (mnf. unknown) (assumption) Flash Memory (Cypress/Spansion) S29GL512S USB Port (mnf. unknown) Flash Memory (Cypress/Spansion) LAN Port MS04G100BHI00 (mnf. unknown) GE Switch (Broadcom) BCM53365 (assumption) Real Time Clock (Dallas) DS1687-3 Application Processor (HiSilicon) Hi1382 (assumption) Unknown (HiSilicon) SA8008 RNIV100 Operational Amplifier (Analog Devices) AD80341 FPGA (Lattice Semiconductor) LCMXO1200C Unknown (HiSilicon) SD5000RBI 200 FPGA (Xilinx) ARTIX-7 XC7A100T Reset Switch (mnf. unknown) M8 GNSS (Ublox) UBX-M8030-KT Serial Flash Memory (Winbond) W25Q16JV (C) Fomalhaut Techno Solutions. -

Face Detection for Face Verification Dr

Challenges and Approaches for Cascaded DNNs: A Case Study of Face Detection for Face Verification Dr. Ana Salazar September 2020 © 2020 Imagination Technologies Overview • Imagination Technologies • Introduction to the problem • Accuracy metrics for quantized networks • Impact of quantisation on a one stage task: Face Detection • Impact of quantisation on a two stages task: Face Recognition • Experiments • Results © 2020 Imagination Technologies 2 Imagination Technologies • World leading technologies in GPU, AI, Wireless Connectivity IP and more • 900 employees worldwide – 80% engineers • An original IP portfolio with a significant, long-present & long-term, patent portfolio underpinning it • Domain expertise in GPU, AI, CPU & Connectivity • Targeting the fastest growing market segments including Mobile, Automotive, AIoT, Compute, Gaming, Consumer • Customers include MediaTek, Rockchip, UNISOC, TI, Renesas, Socionext, and more © 2020 Imagination Technologies 3 Introduction to the problem © 2020 Imagination Technologies 4 Embedded computer systems • Embedded computer systems come with specific restrictions with respect to the intended application (GPU, NN accelerator, ISP), they have restricted power, system resources and features. • In the case of NN, quantisation is a common way to accelerate NNs; with the expectation of a minimal impact on accuracy even for 8 bit/4 bit quantisation. In this work we aim for 16 bit and 8 bit quantisation. • We worked on a system for face recognition: Face detection followed by face verification. • We explored the impact of quantisation on face detection first; then a face verification NN on 32fp, 16bit and 8 bit was used to verify the identity of the person © 2020 Imagination Technologies 5 Accuracy Metrics Often, the accuracy of a quantised NN (e.g. -

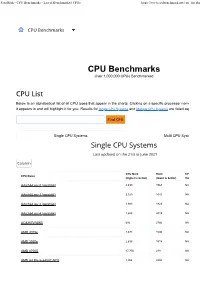

CPU Benchmarks - List of Benchmarked Cpus

PassMark - CPU Benchmarks - List of Benchmarked CPUs https://www.cpubenchmark.net/cpu_list.php CPU Benchmarks Over 1,000,000 CPUs Benchmarked Below is an alphabetical list of all CPU types that appear in the charts. Clicking on a specific processor name will take you to the ch it appears in and will highlight it for you. Results for Single CPU Systems and Multiple CPU Systems are listed separately. Single CPU Systems Multi CPU Systems CPU Mark Rank CPU Value CPU Name (higher is better) (lower is better) (higher is better) AArch64 rev 0 (aarch64) 2,499 1567 NA AArch64 rev 1 (aarch64) 2,320 1642 NA AArch64 rev 2 (aarch64) 1,983 1823 NA AArch64 rev 4 (aarch64) 1,653 2019 NA AC8257V/WAB 693 2766 NA AMD 3015e 2,678 1506 NA AMD 3020e 2,635 1518 NA AMD 4700S 17,756 238 NA AMD A4 Micro-6400T APU 1,004 2480 NA PassMark - CPU Benchmarks - List of Benchmarked CPUs https://www.cpubenchmark.net/cpu_list.php CPU Mark Rank CPU Value CPU Name (higher is better) (lower is better) (higher is better) AMD A4 PRO-7300B APU 1,481 2133 NA AMD A4 PRO-7350B 1,024 2465 NA AMD A4-1200 APU 445 3009 NA AMD A4-1250 APU 428 3028 NA AMD A4-3300 APU 961 2528 9.09 AMD A4-3300M APU 686 2778 22.86 AMD A4-3305M APU 815 2649 39.16 AMD A4-3310MX APU 785 2680 NA AMD A4-3320M APU 640 2821 16.42 AMD A4-3330MX APU 681 2781 NA AMD A4-3400 APU 1,031 2458 9.51 AMD A4-3420 APU 1,052 2443 7.74 AMD A4-4000 APU 1,158 2353 38.60 AMD A4-4020 APU 1,214 2310 13.34 AMD A4-4300M APU 997 2486 33.40 AMD A4-4355M APU 816 2648 NA AMD A4-5000 APU 1,282 2257 NA AMD A4-5050 APU 1,328 2219 NA AMD A4-5100