Music Technology As a Tool and Guideline for Composition

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Core Instrumentation of the “Typical” American Community Concert Band: an Approach to Scoring Guidelines for Composers and Arrangers

Core Instrumentation of the “Typical” American Community Concert Band: An Approach to Scoring Guidelines for Composers and Arrangers Findings based on a 2012 Online survey by Composer/Conductor David Avshalomov, D.M.A 1 Approach In early 2012, after extensive observation of online email threads around the practical challenges of presenting concerts with community bands, the author decided to create and run an open online survey with the goal of gathering a sampling of reasonably reliable statistical information about the “typical” core instrumentation of a community concert band in the US. The definition of community concert band used here begins by distinguishing it from a full- instrumentation concert band or symphonic wind ensemble having all the “outlier/outsize” instruments and generally carrying only one (or perhaps at most two) players per part except for Bb clarinets. In the US these full bands are almost exclusively conservatory, university, college, community college, or advanced/large high school ensembles. Few professional concert bands exist in the US. The definition of community concert band here also excludes marching bands—school, municipal, or private—as these too have a separate and distinct instrumentation profile. Although there are some community concert bands that have fairly full instrumentation, initial observations from the survey results confirm that most, if not all, have what could be characterized as significant gaps by comparison with the “full” symphonic wind ensemble. They also often have much heavier doublings in certain common sections such as flutes and clarinets. GOAL: The intention of the survey was to draw a rough line around a “safe” core scoring, and additionally to define tentative guidelines for the inclusion of instruments outside that line, for composers and arrangers who wish to serve the community concert band population with music targeted to such groups’ strengths, not their weaknesses. -

Elton John and Billy Joel

TECHNICAL REQUIREMENTS: Back To Back - Elton John and Billy Joel GENERAL: In our experience we have for the most part found the house techs/sound companies as provided by host orchestras to be highly competent professionals who know and understand the needs of their orchestra and their performance venue. As such we are comfortable in trusting their recommendation and expertise. Conceptually all Jeans ‘n Classics productions are designed to feature the sound of the orchestra and as such the band and the orchestra should be equally sharing the musical picture as opposed to the orchestra being mixed into the background. The following are simply guidelines. MICS - BAND & SINGERS: Lead Vocalist (s) - 1 lead vocal mic required (cordless) - 1 lead vocal mic required (cordless)- Back Up Vocalist (s) - 2 backup vocal mics required (cordless) Band - 1 vocal mic required at piano MICS - ORCHESTRA: Strings - Contact mics on all strings is ideal - If impossible separate mics per player or minimally 1 mic per desk Winds - 1 mic per wind Brass - 1 mic per brass instrument Percussion - Individual mics on the congas/bongos area - Ambient mics on the "toys" for wind chime, tambourine etc, where the player can approach the mic - Individual mics on the vibes/marimbas area - Timpani and bass drums may or may not need ambient mics depending on the venue Special - up front solo violin on "Piano Man" BAND INSTRUMENTS: Electric Bass - Bass player will need DI line, or in some cases may bring his own ears / (Mitch Tyler) mixer and therefore will need a line to the mixer - Bass guitar to be provided: - 4- String Fender Precision (American made preferred) with strap Guitars - 1 DI line will be required for an acoustic guitar played by lead vocalist from time to time - 2 guitar stands to be provided Piano - Grand piano will be required please. -

MOXF6/MOXF8 Data List 2 Voice List

Data List Table of Contents Voice List..................................................2 Drum Voice List ......................................12 Drum Voice Name List............................. 12 Drum Kit Assign List ................................ 13 Waveform List ........................................32 Performance List ....................................45 Master Assign List ..................................47 Arpeggio Type List .................................48 Effect Type List.......................................97 Effect Parameter List..............................98 Effect Preset List ..................................106 Effect Data Assign Table......................108 Mixing Template List ............................116 Remote Control Assignments...............117 Control List ...........................................118 MIDI Data Format.................................119 MIDI Data Table ...................................123 MIDI Implementation Chart...................146 EN Voice List PRE1 (MSB=63, LSB=0) Category Category Number Voice Name Element Number Voice Name Element Main Sub Main Sub 1 A01 Full Concert Grand Piano APno 2 65 E01 Dyno Wurli Keys EP 2 2 A02 Rock Grand Piano Piano Modrn 2 66 E02 Analog Piano Keys Synth 2 3 A03 Mellow Grand Piano Piano APno 2 67 E03 AhrAmI Keys Synth 2 4 A04 Glasgow Piano APno 4 68 E04 Electro Piano Keys EP 2 5 A05 Romantic Piano Piano APno 2 69 E05 Transistor Piano Keys Synth 2 6 A06 Aggressive Grand Piano Modrn 3 70 E06 EP Pad Keys EP 3 7 A07 Tacky Piano Modrn 2 71 E07 -

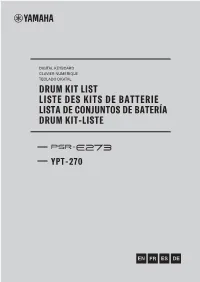

Drum Kit List

DRUM KIT LIST LISTE DES KITS DE BATTERIE LISTA DE CONJUNTOS DE BATERÍA DRUM KIT-LISTE Drum Kit List / Liste des kits de batterie/ Lista de conjuntos de batería / Drum Kit-Liste • Same as Standard Kit 1 • Comme pour Standard Kit 1 • No Sound • Absence de son • Each percussion voice uses one note. • Chaque sonorité de percussion utilise une note unique. Voice No. 117 118 119 120 121 122 Keyboard Standard Kit 1 Standard Kit 1 Indian Kit Arabic Kit SE Kit 1 SE Kit 2 Note# Note + Chinese Percussion C1 36 C 1 Seq Click H Baya ge Khaligi Clap 1 Cutting Noise 1 Phone Call C#1 37 C# 1Brush Tap Baya ke Arabic Zalgouta Open Cutting Noise 2 Door Squeak D1 38 D 1 Brush Swirl Baya ghe Khaligi Clap 2 Door Slam D#1 39 D# 1Brush Slap Baya ka Arabic Zalgouta Close String Slap Scratch Cut E1 40 E 1 Brush Tap Swirl Tabla na Arabic Hand Clap Scratch F1 41 F 1 Snare Roll Tabla tin Tabel Tak 1 Wind Chime F#1 42 F# 1Castanet Tablabaya dha Sagat 1 Telephone Ring G1 43 G 1 Snare Soft Dhol 1 Open Tabel Dom G#1 44 G# 1Sticks Dhol 1 Slap Sagat 2 A1 45 A 1 Bass Drum Soft Dhol 1 Mute Tabel Tak 2 A#1 46 A# 1 Open Rim Shot Dhol 1 Open Slap Sagat 3 B1 47 B 1 Bass Drum Hard Dhol 1 Roll Riq Tik 3 C2 48 C 2 Bass Drum Dandia Short Riq Tik 2 C#2 49 C# 2 Side Stick Dandia Long Riq Tik Hard 1 D2 50 D 2 Snare Chutki Riq Tik 1 D#2 51 D# 2 Hand Clap Chipri Riq Tik Hard 2 E2 52 E 2 Snare Tight Khanjira Open Riq Tik Hard 3 Flute Key Click Car Engine Ignition F2 53 F 2 Floor Tom L Khanjira Slap Riq Tish Car Tires Squeal F#2 54 F# 2 Hi-Hat Closed Khanjira Mute Riq Snouj 2 Car Passing -
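The Keyboard and Note# columns in the excerpt above pair note names with MIDI note numbers (C1 = 36, F#2 = 54), which matches the octave convention where C3 is note 60. A minimal Python sketch of that mapping follows; the function name `note_to_number` and the regular expression are illustrative assumptions, not part of the drum kit list.

```python
import re

# Semitone offsets within one octave, using the sharp spellings from the list.
NOTE_OFFSETS = {
    "C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
    "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11,
}

def note_to_number(name: str) -> int:
    """Convert a note name such as 'F#2' to a MIDI note number.

    Assumes the convention visible in the excerpt (C1 = 36), i.e. C-2 = 0.
    """
    match = re.fullmatch(r"([A-G]#?)(-?\d+)", name.strip())
    if match is None:
        raise ValueError(f"unrecognised note name: {name!r}")
    pitch, octave = match.group(1), int(match.group(2))
    number = 12 * (octave + 2) + NOTE_OFFSETS[pitch]
    if not 0 <= number <= 127:
        raise ValueError(f"{name!r} is outside the MIDI range 0-127")
    return number

if __name__ == "__main__":
    for name in ("C1", "A#1", "C2", "F#2"):
        print(name, note_to_number(name))
```

Other manufacturers label middle C differently, so the `+ 2` octave offset would need adjusting for a list that uses C4 = 60.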

FA-06 and FA-08 Sound List

Contents Studio Sets . 3 Preset/User Tones . 4 SuperNATURAL Acoustic Tone . 4 SuperNATURAL Synth Tone . 5 SuperNATURAL Drum Kit . .15 PCM Synth Tone . .15 PCM Drum Kit . .23 GM2 Tone (PCM Synth Tone) . .24 GM2 Drum Kit (PCM Drum Kit) . .26 Drum Kit Key Assign List . .27 Waveforms . .40 Super NATURAL Synth PCM Waveform . .40 PCM Synth Waveform . .42 Copyright © 2014 ROLAND CORPORATION All rights reserved . No part of this publication may be reproduced in any form without the written permission of ROLAND CORPORATION . © 2014 ローランド株式会社 本書の一部、もしくは全部を無断で複写・転載することを禁じます。 2 Studio Sets (Preset) No Studio Set Name MSB LSB PC 56 Dear My Friends 85 64 56 No Studio Set Name MSB LSB PC 57 Nice Brass Sect 85 64 57 1 FA Preview 85 64 1 58 SynStr /SoloLead 85 64 58 2 Jazz Duo 85 64 2 59 DistBs /TranceChd 85 64 59 3 C .Bass/73Tine 85 64 3 60 SN FingBs/Ac .Gtr 85 64 60 4 F .Bass/P .Reed 85 64 4 61 The Begin of A 85 64 61 5 Piano + Strings 85 64 5 62 Emotionally Pad 85 64 62 6 Dynamic Str 85 64 6 63 Seq:Templete 85 64 63 7 Phase Time 85 64 7 64 GM2 Templete 85 64 64 8 Slow Spinner 85 64 8 9 Golden Layer+Pno 85 64 9 10 Try Oct Piano 85 64 10 (User) 11 BIG Stack Lead 85 64 11 12 In Trance 85 64 12 No Studio Set Name MSB LSB PC 13 TB Clone 85 64 13 1–128 INIT STUDIO 85 0 1–128 129– 14 Club Stack 85 64 14 INIT STUDIO 85 1 1–128 256 15 Master Control 85 64 15 257– INIT STUDIO 85 2 1–128 16 XYZ Files 85 64 16 384 17 Fairies 85 64 17 385– INIT STUDIO 85 3 1–128 18 Pacer 85 64 18 512 19 Voyager 85 64 19 * When shipped from the factory, all USER locations were set to INIT STUDIO . -
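The MSB, LSB, and PC columns above correspond to the standard MIDI Bank Select (controller 0 and controller 32) and Program Change messages. The Python sketch below builds the raw bytes for one table row. It assumes the PC column is 1-based, as the 1–128 ranges suggest, and that the receiving channel is chosen by the user; the function name `select_studio_set` and the default channel are hypothetical and do not come from the sound list.

```python
def select_studio_set(msb: int, lsb: int, pc: int, channel: int = 0) -> bytes:
    """Build Bank Select MSB/LSB plus Program Change for one table row.

    msb, lsb: bank values as printed in the list (0-127).
    pc: program number as printed in the list (assumed 1-128).
    channel: zero-based MIDI channel (0-15); an assumption for illustration.
    """
    if not (0 <= msb <= 127 and 0 <= lsb <= 127):
        raise ValueError("bank values must be in 0-127")
    if not 1 <= pc <= 128:
        raise ValueError("program number must be in 1-128")
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0-15")

    control_change = 0xB0 | channel   # status byte: Control Change
    program_change = 0xC0 | channel   # status byte: Program Change
    return bytes([
        control_change, 0x00, msb,    # CC 0: Bank Select MSB
        control_change, 0x20, lsb,    # CC 32: Bank Select LSB
        program_change, pc - 1,       # program numbers are 0-based on the wire
    ])

if __name__ == "__main__":
    # Row "2 Jazz Duo 85 64 2" from the preset Studio Sets table.
    print(select_studio_set(85, 64, 2).hex())
```

The same three-message pattern applies to the `MSB=63, LSB=0` bank header in the MOXF voice list excerpt, although which channel each instrument listens on is not stated in either excerpt.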

An Evening of Motown

TECHNICAL REQUIREMENTS: An Evening Of Motown GENERAL: In our experience we have for the most part found the house techs/sound companies as provided by host orchestras to be highly competent professionals who know and understand the needs of their orchestra and their performance venue. As such we are comfortable in trusting their recommendation and expertise. Conceptually all Jeans ‘n Classics productions are designed to feature the sound of the orchestra and as such the band and the orchestra should be equally sharing the musical picture as opposed to the orchestra being mixed into the background. The following are simply guidelines. MICS - BAND & SINGERS: Lead Vocalist (s) - 2 lead vocal mics required (cordless) - 1 lead vocal mic required (cordless)- Backup Vocalists (s) - 3 backup vocal mics required (cordless) Band - vocal mic required at keyboard MICS - ORCHESTRA: Strings - Contact mics on all strings is ideal - If impossible separate mics per player or minimally 1 mic per desk Winds - 1 mic per wind Brass - 1 mic per brass instrument Percussion - Individual mics on the congas/bongos area - Ambient mics on the "toys" for wind chime, tambourine etc, where the player can approach the mic - Individual mics on the vibes/marimbas area - Timpani and bass drums may or may not need ambient mics depending on the venue BAND INSTRUMENTS: Electric Bass - Bass player will need DI line, or in some cases may bring his own ears / mixer and therefore will need a line to the mixer - Bass guitar to be provided: - 4- String Fender Precision (American made preferred) with strap Guitars - guitarist will bring processing equipment for electric guitar - 1 DI line will be required - 2 guitar stands to be provided Keyboard / Piano - A grand piano, or if not available a Yamaha Motif 7 p.1 Drums Standard Yamaha 5 piece: (Choose from any of the following, in order of preference): • Recording Custom • Maple Custom • Oak Custom • Absolute Series (Birch, Maple, or Oak) Or an equivalent DW 5 piece (i.e.; Collector’s Series Maple) Specifications: A. -

Ben Reifel Band & Orchestra Instruments 2021

BEN REIFEL BAND & ORCHESTRA SUPPLIES BID TAB January 11, 2021, PD #3302 ORCHESTRA INSTRUMENTS TAYLOR Description QTY SPECIFICATIONS Total Cost cello racks 4 Wenger 6 Unit bass racks 3 Wenger 4 Unit Orchestra Expressions book 2 120 Alfred $892.80 String Basics book 3 60 Kjos $412.80 Stereo Receiver 1 Sony STR-DN1080 Stereo Speakers 2 Sony SSCS3 3/4 Violins 5 Eastman VL 100 $1,440.00 4/4 Violins 7 Eastman VL 100 $2,016.00 14" Violas 5 Eastman VA 100 $1,495.00 15" Violas 7 Eastman VA 100 $2,093.00 3/4 Cellos 5 Eastman VC 95 $3,245.00 4/4 Cellos 5 Eastman VC 95 $3,245.00 1/4 Basses 1 Eastman VB 95 $1,414.00 1/2 Basses 2 Eastman VB 95 $2,828.00 3/4 Basses 1 Eastman VB 95 $1,414.00 Total $4,000.80 BAND INSTRUMENTS TAYLOR Description QTY SPECIFICATIONS Total Cost Oboe 3 Selmer 123FB $5,088.00 Bassoon 1 Fox Renard 51 $4,459.00 Clarinet 6 Jupiter 635N $1,632.00 Bass Clarinet 3 Yamaha YCL221 $5,181.00 Tenor Saxophone 3 Yamaha YTS-26 $3,333.00 Bari Saxophone 2 Selmer BS500 $6,554.00 French Horn 6 Holton H379 $14,784.00 Trombone 4 Yamaha YSL354 $1,960.00 Baritone 4 Yamaha YEP201 $5,492.00 Tuba 3 Yamaha YBB105 $8,331.00 Deluxe Percussion Percussion Cabinet Cherry Finish 1 Wenger Workstation - Cherry - 147G001 Snare Drum w/ stand 2 Pearl CRP1455 Piano Black $672.00 Snare Drum Stand 3 Pearl S1030 $320.97 Bass Drum 1 Pearl PEA-PBE3616 $781.00 Bass Drum Stand 1 Pearl STBD36F $527.00 Bass Drum Beater 2 Ludwig Payson GP - L-310 $49.98 Concert Toms w/ stand (set of 4) 1 Ludwig LECT04CCG $919.00 Timpani (set of 4) 1 Ludwig LUD-LTP504PG $11,111.00 Zildjian -

The Transbay Creative Music Calendar OCTOBER 2011

the transbay Creative Music Calendar, 3111 Deakin St., Berkeley, CA 94705. OCTOBER 2011. CONCERT REVIEW BY AMAR CHAUDHARY / CATSYNTH | AUGUST 5. Outsound Music Summit: Sonic Foundry Too!

The final concert of this year’s Outsound Music Summit brought together various inventors of new musical instruments under the banner “Sonic Foundry Too!” Rather than each inventor simply presenting his or her work, they performed as pairs. The pairings were selected for musical congruity and brought together people who may have never performed together before. As such, this was truly “experimental music”, with the outcomes uncertain until they unfolded on stage.

As one would expect, the stage setup was quite impressive, with musical contraptions [...]

[...] with his own percussion. What ensued was a tight rhythmic drum duet, which reminded me a bit of Japanese drumming. Gradually, Hopkin’s drum sounds grew more electronic, but the strong rhythm persisted. Michalak then tossed a couple of the LEDs into the audience and transitioned to playing a gamelan-like instrument made of metal plates and which produced a bell-like sound. The strong rhythm faded into an ethereal mix of bell and chime sounds. There were several other interesting instruments and musical moments in the remainder of set.

[...] the tube. The effect was like pouring water, and it seems that the timbre from the bottles on the suit were matching it at times. Marsh increased his motion in the suit, set against a variety of environmental sounds from Hutchinson such as water, fire, air and animal sounds. Eventually he got up and started to dance, moving the arms of the suit in fan patterns, with noisier sounds from both performers.

The following set featured Tom Nunn and Stephen Baker, with David Michalak return- [...]

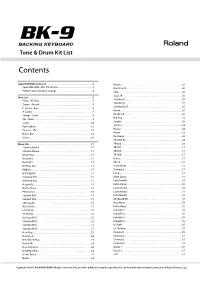

Tone & Drum Kit List

r Tone & Drum Kit List Contents SuperNATURAL Tone List . 3 Brush 2 . 24 SuperNATURAL INST Parameters . 3 Brush 2 L/R . 26 Performance Variation Sounds . 4 Jazz . .. 26 Jazz L/R . 26 Tone List . 5 Standard 1 . 26 Piano - E .Piano . 5 Standard 2 . 26 Organ - Accord . .. 5 Standard L/R . 26 E . Guitar - Bass . 6 Room . 26 A . Guitar . .. 7 Room L/R . 26 Strings - Vocal . 8 Hip Pop . 28 Sax - Brass . 9 Jungle . 28 Synth . 10 Techno . .28 Pad - Ethnic . 12 House . 28 Percuss - Sfx . 15 Power . 28 Harm . Bar . 16 Electronic . 28 Drums . 16 909 808 Kit . 28 Drum Kits . 17 TR-606 . 28 StandardNew1 . 17 TR-707 . 31 StandardNew2 . 17 TR-808 . 31 RoomNew . 17 TR-909 . 31 Rock Kit 1 . 17 Dance . 31 Rock Kit 2 . 17 CR-78 . 31 HipHop Kit 1 . 17 V-VoxDrum . 31 R&B Kit . 17 Orchestra . 31 HiFi R&B Kit . 17 Ethnic. 31 Machine Kit1 . 19 Multi Drum . 33 Machine Kit2 . 19 LatinDrmKit . .. 33 House Kit . 19 Latin Menu1 . 33 Nu Technica . .. 19 Latin Menu2 . 33 Percussion . 19 Latin Menu3 . 33 StudioX Kit1 . .19 IndiaDrmKit . .. 33 StudioX Kit2 . .19 MidEastDrKit . 33 SRX Studio . 19 Asia Menu . 33 WD Std Kit . 21 India Menu . .35 LD Std Kit . 21 IndoMix 1 . .. 35 TY Std Kit . 21 IndoMix 2 . .. 35 Kit-Euro:POP . 21 IndoMix 3 . .. 35 StandardKit1 . 21 IndoMix 4 . .. 35 StandardKit2 . 21 Or . R&B . 35 StandardKit3 . 21 Or . Techno . 35 New Pop . 21 Oriental 1 . 35 New Rock . 24 Oriental 2 . 37 New Brush Pop . 24 Oriental 3 . -

New Music for Orchestra

Michel van der Aa (*1970) Johannes Boris Borowski (*1979) Reversal (2016) 11’ Stretta (2016–17) 23’ Releases 2016–18 for orchestra for piano and orchestra WP: 13 Jan 2017 Staatsoper, Hamburg WP: 15 Nov 2017 Philharmonie, Berlin Andrey Kaydanowskiy, choreographer / Daniel Barenboim, piano / Staatskapelle Berlin / Bundesjugendballett / Bundesjugendorchester / Zubin Mehta Alexander Shelley for piano and orchestra 1.1.2(II=bcl).2(II=dbn)—4.2.2.btrbn.0—perc(2):vib/glsp/ 2.2.2.2.dbn—4.2.2.1—timp.perc(3):marimba/vib/glsp/t.bells/ marimba/BD/SD/maracas/bongo/tgl/bamboo chimes/glass SD/BD/4tom-t/2bongo/2Conga/tamb/2lion’s roar/2splash cym/ chimes/BD/log dr/cyms/whip/church bell or t.bells—harp— 2crash cym/2ride cym/3Chin.cym/tam-t/5opera gong/rainmaker/ strings(16.14.12.10.8) whip(lg)/2ratchet/wind machine/mark tree/wood chimes—harp— strings(10.8.6.6.3; alternatively 6db) John Adams (*1947) Walter Braunfels (1882–1954) Lola Montez Tag- und Nachtstücke (1933–34) 30’ Does the Spider Dance (2016) 4’ for piano and orchestra from ‘Girls of the Golden West’ WP: 13 Jul 2017 Ruhrfestspielhaus, Recklinghausen for orchestra Michael Korstick, piano / Neue Philharmonie WP: 06 Aug 2016 Civic Auditorium, Santa Cruz, CA Westfalen / Peter Ruzicka Cabrillo Festival of Contemporary Music / Marin Alsop 2(I,II=picc).2.2.2(II=dbn)—4.2.2.1—timp.perc(1):tamb/tgl/xyl/SD/ 3(III=picc).2.corA.2.bcl.2.dbn—4.3.3.0—perc(1):BD—pft—strings glsp/cyms—harp—strings (*1939) Louis Andriessen Enrico Chapela (*1974) Agamemnon (2017) 20’ Antikythera (2016) 10’ RCHESTRA for orchestra -

Medium of Performance Thesaurus for Music

A clarinet (soprano) albogue tubes in a frame. USE clarinet BT double reed instrument UF kechruk a-jaeng alghōzā BT xylophone USE ajaeng USE algōjā anklung (rattle) accordeon alg̲hozah USE angklung (rattle) USE accordion USE algōjā antara accordion algōjā USE panpipes UF accordeon A pair of end-blown flutes played simultaneously, anzad garmon widespread in the Indian subcontinent. USE imzad piano accordion UF alghōzā anzhad BT free reed instrument alg̲hozah USE imzad NT button-key accordion algōzā Appalachian dulcimer lõõtspill bīnõn UF American dulcimer accordion band do nally Appalachian mountain dulcimer An ensemble consisting of two or more accordions, jorhi dulcimer, American with or without percussion and other instruments. jorī dulcimer, Appalachian UF accordion orchestra ngoze dulcimer, Kentucky BT instrumental ensemble pāvā dulcimer, lap accordion orchestra pāwā dulcimer, mountain USE accordion band satāra dulcimer, plucked acoustic bass guitar BT duct flute Kentucky dulcimer UF bass guitar, acoustic algōzā mountain dulcimer folk bass guitar USE algōjā lap dulcimer BT guitar Almglocke plucked dulcimer acoustic guitar USE cowbell BT plucked string instrument USE guitar alpenhorn zither acoustic guitar, electric USE alphorn Appalachian mountain dulcimer USE electric guitar alphorn USE Appalachian dulcimer actor UF alpenhorn arame, viola da An actor in a non-singing role who is explicitly alpine horn USE viola d'arame required for the performance of a musical BT natural horn composition that is not in a traditionally dramatic arará form. alpine horn A drum constructed by the Arará people of Cuba. BT performer USE alphorn BT drum adufo alto (singer) arched-top guitar USE tambourine USE alto voice USE guitar aenas alto clarinet archicembalo An alto member of the clarinet family that is USE arcicembalo USE launeddas associated with Western art music and is normally aeolian harp pitched in E♭. -

Perusal Score

CONDUCTOR GRADE 5+ Weather The Storm Brian Ralston For Wind Ensemble Instrumentation: Piccolo Flute 1 & 2 Oboe 1 Oboe 2 / English Horn Bb Clarinet 1 & 2 Bb Bass Clarinet Bb Contra Bass Clarinet Eb Alto Saxophone 1 & 2 Bb Tenor Saxophone Eb Baritone Saxophone Bassoon 1 & 2 Contra Bassoon Bb Trumpet 1 (2 players) Bb Trumpet 2 & 3 French Horn 1, 2, 3, 4 Tenor Trombone 1 & 2 Bass Trombone Euphonium 1 & 2 Tuba Piano / Celeste Harp 6 Percussion (Timpani, Snare Drum, Large Bass Drum, Sus Cymbal, Large Piatti, Large Taiko Drum (or similar), Large Thunder Drum, Wind Machine, Patio Wind Chime, Triangle, Rainsticks, Large Shekere, Tambourine, Glockenspiel, Tubular Bells, Xylophone, Marimba) Published by Studio 74 Music (BMI) © Copyright 2016 - Studio 74 Music, LLC All Rights Reserved www.brianralston.com

Program notes for the conductor: Weather The Storm is a musical depiction inspired by the summer monsoon storms that rain upon the Arizona Sonoran desert daily throughout the summer months. The work is essentially told in 4 musical sections, but I did not write them in separate movements on purpose as the storm is meant to smoothly flow in its progression.

The opening aleatoric section is meant to replicate the distant but approaching sound of light rainfall and wind. Solo trumpet and French horn lead into the grandiose fanfare setting the stage for the grand Sonoran desert where these monsoon storms take place on a daily basis like clockwork. As the raindrops begin to fall harder and harder (letter D), the rain builds a kinetic energy.