Seattle Wide-Area Information for Travelers Field Operational Test

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Superior Performance Co-Ni Alloy Product (SPRON)® Creating Time - Optimizing Time - Enriching Time

Seiko Instruments Inc. (SII), founded in 1937 as a member of the Seiko Group specializing in the manufacture of watches, has leveraged its core competency in high precision watches to create a wide range of new products and technologies. Over the years SII has developed high-precision processed parts and machine tools that pride themselves on their sub-micron processing capability, quartz crystals that came about as a result of our quartz watch R&D, and electronic components such as micro batteries. Optimizing our extensive experience and expertise, we have since diversified into such new fields as compact, lightweight, exceedingly quiet thermal printers, and inkjet printheads, a key component in wide format inkjet printers for corporate use. SII, in the years to come, will maintain an uncompromised dedication to its time-honored technologies and innovations of craftsmanship, miniaturization, and efficiency that meet the needs of our changing society and enrich the lives of those around us. [Figure: SEIKO HOLDINGS GROUP corporate history chart] -

Nano・Picometer Measurement and Control Laboratory

Mechanical Engineering. Nano・Picometer Measurement and Control Laboratory. Professor: Masato AKETAGAWA. Assistant Professor: Wei DONG. Technical Staff: Masahiro YOSHIDA. http://mcweb.nagaokaut.ac.jp/~picotech/ We cannot make things that cannot be measured. We conduct ultra-precision measurement using light (lasers). The laboratory respects the autonomy of students and is a very free environment. Supervisors: Professor Aketagawa has worked as a researcher at a company and therefore has wide and deep knowledge of optical measurement technology. He encourages students to study abroad, respects their independence, and guides their research with precise advice. Assistant Professor Wei always talks with us about various topics, not only research but also campus life. Research Content: In our laboratory we research ultra-precision measurement systems using lasers. We aim to contribute to the development of nanotechnology by studying methods of precision measurement, precision control, and precision processing on the order of nanometers (one billionth of a meter) to picometers (one trillionth of a meter). [1] Frequency stabilization and displacement measurement of a 780 nm DBR laser diode. [2] Ultra-precision measurement of the refractive index of air. [3] Measurement of bearing motion error: precise, simultaneous measurement of bearing error in all 5 degrees of freedom. [4] Length measurement with an optical comb. (Figure: Appearance of the improved linear scale calibrator.) A Day in the Lab: Our laboratory has no core time, and students bear great responsibility for managing their own research. Some people come early in the morning to study; others stay late at night. Seminars are held twice a week: a progress seminar and a paper seminar. -

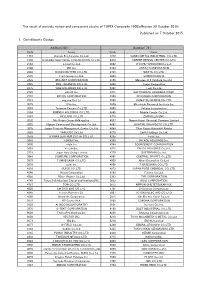

The Result of Periodic Review and Component Stocks of TOPIX Composite 1500

The result of periodic review and component stocks of TOPIX Composite 1500 (effective 30 October 2015). Published on 7 October 2015. 1. Constituents Change: Addition (80), Deletion (72).

| Addition: Code | Addition: Issue | Deletion: Code | Deletion: Issue |
| --- | --- | --- | --- |
| 1712 | Daiseki Eco.Solution Co.,Ltd. | 1972 | SANKO METAL INDUSTRIAL CO.,LTD. |
| 1930 | HOKURIKU ELECTRICAL CONSTRUCTION CO.,LTD. | 2410 | CAREER DESIGN CENTER CO.,LTD. |
| 2183 | Linical Co.,Ltd. | 2692 | ITOCHU-SHOKUHIN Co.,Ltd. |
| 2198 | IKK Inc. | 2733 | ARATA CORPORATION |
| 2266 | ROKKO BUTTER CO.,LTD. | 2735 | WATTS CO.,LTD. |
| 2372 | I'rom Group Co.,Ltd. | 3004 | SHINYEI KAISHA |
| 2428 | WELLNET CORPORATION | 3159 | Maruzen CHI Holdings Co.,Ltd. |
| 2445 | SRG TAKAMIYA CO.,LTD. | 3204 | Toabo Corporation |
| 2475 | WDB HOLDINGS CO.,LTD. | 3361 | Toell Co.,Ltd. |
| 2729 | JALUX Inc. | 3371 | SOFTCREATE HOLDINGS CORP. |
| 2767 | FIELDS CORPORATION | 3396 | FELISSIMO CORPORATION |
| 2931 | euglena Co.,Ltd. | 3580 | KOMATSU SEIREN CO.,LTD. |
| 3079 | DVx Inc. | 3636 | Mitsubishi Research Institute,Inc. |
| 3093 | Treasure Factory Co.,LTD. | 3639 | Voltage Incorporation |
| 3194 | KIRINDO HOLDINGS CO.,LTD. | 3669 | Mobile Create Co.,Ltd. |
| 3197 | SKYLARK CO.,LTD | 3770 | ZAPPALLAS,INC. |
| 3232 | Mie Kotsu Group Holdings,Inc. | 4007 | Nippon Kasei Chemical Company Limited |
| 3252 | Nippon Commercial Development Co.,Ltd. | 4097 | KOATSU GAS KOGYO CO.,LTD. |
| 3276 | Japan Property Management Center Co.,Ltd. | 4098 | Titan Kogyo Kabushiki Kaisha |
| 3385 | YAKUODO.Co.,Ltd. | 4275 | Carlit Holdings Co.,Ltd. |
| 3553 | KYOWA LEATHER CLOTH CO.,LTD. | 4295 | Faith, Inc. |
| 3649 | FINDEX Inc. | 4326 | INTAGE HOLDINGS Inc. |
| 3660 | istyle Inc. | 4344 | SOURCENEXT CORPORATION |
| 3681 | V-cube,Inc. | 4671 | FALCO HOLDINGS Co.,Ltd. |
| 3751 | Japan Asia Group Limited | 4779 | SOFTBRAIN Co.,Ltd. |
| 3844 | COMTURE CORPORATION | 4801 | CENTRAL SPORTS Co.,LTD. |

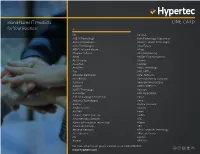

Hypertec Line Card 07 2019

Brand-Name IT Products LINE CARD for Your Business A 3M Alestron AAEON Technology Alien Technology Corporation Aastra Technologies Alliance Storage Technologies AAXA Technologies Allied Telesis ABBYY Software House Allsop Absolute Software Altair Engineering Accell AltiGen Communications ACCO Brands Altronix Accu-Tech Aluratek AccuView Ambir Technology Acer AMC OPTICS Actiontec Electronics Amer Networks ActivIdentity American Battery Company Activision American Microsystems Adaptec AMPED WIRELESS ADATA Technology Amstron Addmaster AMX Corporation Add-On Computer Peripherals Amzer Addonics Technologies Andis Adesso Andrea Electronics Adobe Systems Andrew ADTRAN Antec Advanced Merit Concept Anthro Advanced Micro Devices AOC Advanced Simulation Technology AOpen Advantek Networks APC Aerohive Networks APEX Computer Technology AFC Industries APG Cash Drawer AIC Applica Aleratec APPOSITE For more information please contact us at 1.800.876.0920 www.hypertec.com Apricorn Belkin Components CIARA Technologies (Hypertec Group) Arclyte Technologies BenQ CipherLab Arctic Cooling Best Data Products Cirago International Areca Technology Corporation BIXOLON Cisco Systems Arista Enterprises Black Box Citizen Systems Aroma Housewares Company Blue Microphones Citrix Systems Array Networks Bluelounge Design ClearOne Communications Aruba Networks Bosch Group Clover Technologies Group ASUSTeK COMPUTER Brady CMS Products AT&T Brainboxes Cobra Electronics Atdec Brenthaven CODi ATEN Technology Bretford Cognitive TPG Athenatech Brocade Communications Systems Compaq -

PDF Download

Information Restroom Restaurant, Cafe Emergency Exit Elevator Resting place Smoking Area HALL8 Communication and Information network Association of Japan (CIAJ) HALL7 HALL6 HALL5 HALL4 HALL3 HALL2 The Telecommunication Technology Committee (TTC) Aecom ISGate Piezo Sonic A002 <PBX> AnchorZ JNS PRAGE NEC Platforms AXIA MAS Electronics Technology RC Solution Company Oki Electric Industry <Multimedia> Casley Consulting MicroWorld SalesOne A012 Hitachi Information & Telecommunication Engineering CIAJ Multimedia Communications Committee Chimitox MILOQS TACK&Co. A007 A006 FUJITSU <FAX> H2L and PARONYM MORITA TECH XPAND Lounge CIAJ User Network System Committee CIAJ Image Data Communications & Facsimile Committee Kushiro city Lab IoTBASE MovaCloud A008 A001 Kitami city Lab Sendai city Lab A011 A010 A009 A005 A004 A003 Kanagawa pref Lab Yokosuka city Lab Niigata pref Lab Yamanashi pref Lab Ina city Lab Ishikawa pref Lab Kaga city Lab B050 Gujo city Lab Mie pref Lab Osaka pref Lab B001 Osaka city Lab Microsoft Japan HATS Forum NTT Group TOKYO BUSINESS FRONTIER NEC Mitsubishi Electric KDDI OKI Sony Hitachi FUJITSU AMADA HOLDINGS Nara pref Lab Hiroshima pref Lab Minami town Lab Organization for Small & Medium Enterprises and Fukuoka pref Lab Regional Innovation, JAPAN Kitakyushu city Lab The Ibaraki Industrial Development Council for Total Solutions Total Solutions Total Solutions Metropolitan Inter-City Expressway Region Connection Technology JEITA Smart Drive Technology (ITS Committee) Systems(SiMPNiC) Fuji Technical Research Yamaguchi Lab., Social -

American Century Investments® Quarterly Portfolio Holdings Avantis

American Century Investments® Quarterly Portfolio Holdings Avantis® International Equity ETF (AVDE) November 30, 2020 Avantis International Equity ETF - Schedule of Investments NOVEMBER 30, 2020 (UNAUDITED) Shares/ Principal Amount ($) Value ($) COMMON STOCKS — 99.7% Australia — 6.5% Accent Group Ltd. 14,526 23,059 Adairs Ltd. 9,223 21,547 Adbri Ltd. 12,481 28,274 Afterpay Ltd.(1) 48 3,354 AGL Energy Ltd. 8,984 89,075 Alkane Resources Ltd.(1)(2) 41,938 31,367 Alliance Aviation Services Ltd.(1) 5,187 13,148 ALS Ltd. 2,039 14,302 Altium Ltd. 1,563 40,787 Alumina Ltd. 16,346 20,996 AMA Group Ltd.(1) 16,885 9,258 AMP Ltd. 223,348 280,458 Ampol Ltd. 2,595 58,421 Ansell Ltd. 1,333 36,644 APA Group 16,232 123,383 Appen Ltd. 1,821 42,222 ARB Corp. Ltd. 3,172 64,616 Ardent Leisure Group Ltd.(1) 22,550 13,624 Aristocrat Leisure Ltd. 12,775 300,907 ASX Ltd. 1,422 80,525 Atlas Arteria Ltd. 5,725 27,252 Atlassian Corp. plc, Class A(1) 2,086 469,454 Aurelia Metals Ltd. 60,190 18,351 Aurizon Holdings Ltd. 113,756 355,100 AusNet Services 71,401 97,009 Austal Ltd. 23,896 51,252 Australia & New Zealand Banking Group Ltd. 67,427 1,121,525 Australian Agricultural Co. Ltd.(1) 32,331 25,519 Australian Ethical Investment Ltd. 3,654 13,530 Australian Finance Group Ltd. 21,308 37,254 Australian Pharmaceutical Industries Ltd. 29,606 26,147 Bank of Queensland Ltd. -

Fabless: the Transformation of the Semiconductor Industry

DANIEL NENNI, PAUL MCLELLAN. FABLESS: THE TRANSFORMATION OF THE SEMICONDUCTOR INDUSTRY. Foreword by Dr. Cliff Hou, VP of R&D, TSMC. A SEMIWIKI.COM PROJECT. Copyright 2013 by SemiWiki.com LLC. All rights reserved. Printed in the United States of America. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a data base or retrieval system without the prior written consent of the publisher. Authors: Daniel Nenni and Paul McLellan. Editors: Beth Martin and Shushana Nenni. ISBN: 978-1-4675-9307-6. BISAC: Business & Economics / General. Table of Contents: Foreword; Preface; Chapter 1: The Semiconductor Century; Chapter 2: The ASIC Business; In Their Own Words: VLSI Technology; In Their Own Words: eSilicon Corporation; Chapter 3: The FPGA -

A Brief Report on IEEE Milestones in Japan

A Brief Report on IEEE Milestones Activities in Japan and IEEJ Technical Meeting Featuring IEEE Milestones Akihiko Yamada, June 22, 2012/6/21, updated on 6/29 There are seventeen dedicated IEEE Milestones in Japan as of May 2012 as shown in Table 1 (next page). We had four IEEE Milestone related events in this spring. (1) Dedication Ceremony of IEEE Milestone for the International Standardization of G3 Facsimile and Lecture of IEEE Tokyo Section: April 5, 2012 Dedication Ceremony of IEEE Milestone for the International Standardization of G3 Facsimile was held on April 5, 2012, at Imperial Hotel, Tokyo, Japan. The two-dimensional coding MR (Modified READ) method for G3 facsimile was developed through the collaboration of NTT and KDDI. Gordon Day, IEEE President, presented commemorative plaques to Satoshi Miura, President and CEO, NTT, and Takashi Tanaka, President, KDDI. Dedication ceremony pictures: http://www.ieee-jp.org/section/tokyo/bulletin/2012/no72/0405milestone_pic.htm After the dedication ceremony, IEEE Tokyo Section lecture entitled “Commemoration of IEEE Milestone Award on International Standardization of G3 Facsimile in 1980” was held with 124 participants. Following lectures were given: 1) "Introduction to the IEEE Milestones", Eiichi Ohno, IEEE Japan Council History Committee Chair 2) "Redundancy Reduction Coding Techniques of G3FAX for International Standardization" by Toyomichi Yamada, NTT 3) "Role and Effectiveness of International Standardization in G3FAX" by Yasuhiro Yamazaki, KDDI (2) IEEE Technical Tour to Japan : May 20-28, 2012 This is the third IEEE Tour following the first one to Panama Canal in March 2010 and the second one to United Kingdom in May 2011. -

Japanese Semiconductor Industry Service

Japanese Semiconductor Industry Service, Volume II: Technology & Government. Dataquest, a company of The Dun & Bradstreet Corporation. 1290 Ridder Park Drive, San Jose, California 95131-2398. (408) 437-8000. Telex: 171973. Fax: (408) 437-0292. Sales/Service offices: UNITED KINGDOM: Dataquest UK Limited, 13th Floor, Centrepoint, 103 New Oxford Street, London WC1A 1DD, England; 01-379-6257; Telex: 266195; Fax: 01-240-3653. GERMANY: Dataquest GmbH, Rosenkavalierplatz 17, D-8000 Munich 81, West Germany; (089)91 10 64; Telex: 5218070; Fax: (089)91 21 89. FRANCE: Dataquest SARL, 100, avenue Charles de Gaulle, 92200 Neuilly-sur-Seine, France; (01)4738.13.12; Telex: 611982; Fax: (01)4738.11.23. JAPAN: Dataquest Japan, Ltd., Taiyo Ginza Building/2nd Floor, 7-14-16 Ginza, Chuo-ku, Tokyo 104, Japan; (03)546-3191; Telex: 32768; Fax: (03)546-3198. The content of this report represents our interpretation and analysis of information generally available to the public or released by responsible individuals in the subject companies, but is not guaranteed as to accuracy or completeness. It does not contain material provided to us in confidence by our clients. This information is not furnished in connection with a sale or offer to sell securities, or in connection with the solicitation of an offer to buy securities. This firm and its parent and/or their officers, stockholders, or members of their families may, from time to time, have a long or short position in the securities mentioned and may sell or buy such securities. Printed in the United States of America. All rights reserved. -

Japanese Company Profiles Asahi Kasei Microsystems

Japanese Company Profiles: Asahi Kasei Microsystems. ASAHI KASEI MICROSYSTEMS (AKM). Asahi Kasei Microsystems Co., Ltd., TS Building, 24-10, Yoyogi 1-chome, Shibuya-ku, Tokyo 151, Japan. Telephone: (81) (3) 3320-2060. Fax: (81) (3) 3320-2074. U.S. Representative: AKM Semiconductor, Inc., 2001 Gateway Place, Suite 650 West, San Jose, California 95110. Telephone: (408) 436-8580. Fax: (408) 436-7591. IC Manufacturer. Financial History ($M): Sales: 1993: 145; 1994: 215; 1995: 300. Employees: 800. Company Overview and Strategy: Asahi Kasei Microsystems Co., Ltd. (AKM) was founded in 1983 as a joint venture between Asahi Chemical Industry Co., Ltd. and American Microsystems Inc. The venture became a wholly owned subsidiary of Asahi Chemical in 1986, though AKM and AMI still maintain a business relationship. AKM designs and manufactures CMOS mixed-signal integrated circuits combining analog and digital functions on a single chip or chipset. Its devices are targeted at telecommunications, data acquisition, mass storage, audio, and multimedia applications. Approximately half of its IC sales are custom designed products. Management: Asahi Kasei Microsystems Co., Ltd.: Hirotsugu Miyauchi, President; Kyoji Kurata, Director and GM, Technical Marketing and Application Engineering. AKM Semiconductor, Inc. (U.S.): Yoshihisa Iwasaki, President; Edward Boule, Vice President, Sales; Koho Goto, Vice President, Marketing. INTEGRATED CIRCUIT ENGINEERING CORPORATION. Products and Processes: AKM specializes in custom and semicustom mixed-signal ASICs -

ANNUAL REPORT 2007 Tree-Free Paper and Soy Ink

Seiko Epson Corporation Annual Report 2007, April 2006 – March 2007. 3-5 Owa 3-chome, Suwa, Nagano 392-8502, Japan. Tel: +81-266-52-3131 (main). http://www.epson.co.jp/e/ This report was produced from recycled and tree-free paper and soy ink. Management Philosophy: Epson is a progressive company, trusted throughout the world because of our commitment to customer satisfaction, environmental conservation, individuality, and teamwork. We are confident of our collective skills and meet challenges with innovative and creative solutions. (The Epson Management Philosophy has been translated into 14 languages, and is shared by all members of the Epson Group worldwide.) Corporate Data (As of March 31, 2007): ■ Company Name: Seiko Epson Corporation ■ Founded: May 1942 ■ Head Office: 3-5 Owa 3-chome, Suwa, Nagano 392-8502, Japan; Tel: +81-266-52-3131 (main) ■ Tokyo Office: Shinjuku NS Building, 4-1 Nishishinjuku 2-chome, Shinjuku-ku, Tokyo 163-0811, Japan; Tel: +81-3-3348-8531 (main) ■ Paid-in Capital: ¥53,204 million ■ Number of Employees: Epson Group (consolidated): 87,626; Parent Company: 13,039 ■ Group Companies: 117 (domestic: 33, overseas: 84) *Including Seiko Epson Corporation ■ Corporate History: May 1942: Daiwa Kogyo Ltd. (the predecessor of Seiko Epson Corporation) is established. May 1959: Daiwa Kogyo and the Suwa Plant of Daini … Oct. 1992: Complete elimination of CFCs from manufacturing processes is achieved at all factories and affiliates in Japan. Epson receives the 1992 Stratospheric Ozone Protection Award from the U.S. -

Display Technologies in Japan

NASA-CR-198566. Japanese Technology Evaluation Center (JTEC). JTEC Panel Report on Display Technologies in Japan. Lawrence E. Tannas, Jr., Co-chair; William E. Glenn, Co-chair; Thomas Credelle; J. William Doane; Arthur H. Firester; Malcolm Thompson. June 1992. (NASA-CR-198564) JTEC PANEL ON DISPLAY TECHNOLOGIES IN JAPAN Final Report (Loyola Coll.) 295 p. N95-27863. Unclas. G3/35 0049776. Coordinated by Loyola College in Maryland, 4501 North Charles Street, Baltimore, Maryland 21210-2699. LOYOLA COLLEGE IN MARYLAND, JAPANESE TECHNOLOGY EVALUATION CENTER. SPONSOR: The Japanese Technology Evaluation Center (JTEC) is operated for the Federal Government to provide assessments of Japanese research and development (R&D) in selected technologies. The National Science Foundation (NSF) is the lead support agency. Paul Herer, Senior Advisor for Planning and Technology Evaluation, is NSF Program Director for the project. Other sponsors of JTEC include the National Aeronautics and Space Administration (NASA), the Department of Commerce (DOC), the Department of Energy (DOE), the Office of Naval Research (ONR), the Defense Advanced Research Projects Agency (DARPA), and the U.S. Air Force. JTEC assessments contribute to more balanced technology transfer between Japan and the United States. The Japanese excel at acquisition and perfection of foreign technologies, whereas the U.S. has relatively little experience with this process. As the Japanese become leaders in research in targeted technologies, it is essential that the United States have access to the results. JTEC provides the important first step in this process by alerting U.S. researchers to Japanese accomplishments. JTEC findings can also be helpful in formulating governmental research and trade policies.