On the Evolution of Wikipedia

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Position Description Addenda

POSITION DESCRIPTION January 2014 Wikimedia Foundation Executive Director - Addenda The Wikimedia Foundation is a radically transparent organization, and much information can be found at www.wikimediafoundation.org. That said, certain information might be particularly useful to nominators and prospective candidates, including: Announcements pertaining to the Wikimedia Foundation Executive Director Search • Kicking off the search for our next Executive Director, by Former Wikimedia Foundation Board Chair Kat Walsh • An announcement from Wikimedia Foundation ED Sue Gardner, by Wikimedia Executive Director Sue Gardner Video Interviews on the Wikimedia Community and Foundation and Its History Some of the values and experiences of the Wikimedia Community are best described directly by those who have been intimately involved in the organization’s dramatic expansion. The following interviews are available for viewing through mOppenheim.TV. • 2013 Interview with Former Wikimedia Board Chair Kat Walsh • 2013 Interview with Wikimedia Executive Director Sue Gardner • 2009 Interview with Wikimedia Executive Director Sue Gardner Guiding Principles of the Wikimedia Foundation and the Wikimedia Community The following article by Sue Gardner, the current Executive Director of the Wikimedia Foundation, has received broad distribution and summarizes some of the core cultural values shared by Wikimedia’s staff, board and community. 
Topics covered include: • Freedom and open source • Serving every human being • Transparency • Accountability • Stewardship • Shared power • Internationalism • Free speech • Independence More information can be found at: https://meta.wikimedia.org/wiki/User:Sue_Gardner/Wikimedia_Foundation_Guiding_Principles Wikimedia Policies The Wikimedia Foundation has an extensive list of policies and procedures available online at: http://wikimediafoundation.org/wiki/Policies Wikimedia Projects All major projects of the Wikimedia Foundation are collaboratively developed by users around the world using the MediaWiki software. -

Danish Resources

Danish resources
Finn Årup Nielsen
November 19, 2017

Abstract
A range of different Danish resources, datasets and tools, are presented. The focus is on resources for use in automated computational systems and free resources that can be redistributed and used in commercial applications.

Contents
1 Corpora
1.1 Wikipedia
1.2 Wikisource
1.3 Wikiquote
1.4 ADL
1.5 Gutenberg
1.6 Runeberg
1.7 Europarl
1.8 Leipzig Corpora Collection
1.9 Danish Dependency Treebank
1.10 Retsinformation
1.11 Other resources
2 Lexical resources
2.1 DanNet
2.2 Wiktionary
2.3 Wikidata
2.4 OmegaWiki
2.5 Other lexical resources
2.6 Wikidata examples with medical terminology extraction
3 Natural language processing tools
3.1 NLTK
3.2 Polyglot
3.3 spaCy
3.4 Apache OpenNLP
3.5 Centre for Language Technology
3.6 Other libraries -

Jimmy Wales and Larry Sanger, It Is the Largest, Fastest-Growing and Most Popular General Reference Work Currently Available on the Internet

Tomasz „Polimerek” Ganicz, Wikimedia Polska. Wikipedia and other Wikimedia projects. What is Wikipedia? „Imagine a world in which every single human being can freely share in the sum of all knowledge. That's our commitment.” Jimmy „Jimbo” Wales – founder of Wikipedia. As defined by itself: Wikipedia is a free multilingual, open content encyclopedia project operated by the non-profit Wikimedia Foundation. Its name is a blend of the words wiki (a technology for creating collaborative websites) and encyclopedia. Launched in January 2001 by Jimmy Wales and Larry Sanger, it is the largest, fastest-growing and most popular general reference work currently available on the Internet. Open and free content. Richard Stallman definition of free software: „The word "free" in our name does not refer to price; it refers to freedom. First, the freedom to copy a program and redistribute it to your neighbors, so that they can use it as well as you. Second, the freedom to change a program, so that you can control it instead of it controlling you; for this, the source -

Instructor Basics: How to Use Wikipedia as a Teaching Tool

Instructor Basics: How to use Wikipedia as a teaching tool Wiki Education Foundation Wikipedia is the free online encyclopedia that anyone can edit. One of the most visited websites worldwide, Wikipedia is a resource used by most university students. Increasingly, many instructors around the world have used Wikipedia as a teaching tool in their university classrooms as well. In this brochure, we bring together their experiences to help you determine how to use Wikipedia in your classroom. We’ve organized the brochure into three parts: Assignment planning Learn key Wikipedia policies and get more information on designing assignments, with a focus on asking students to write Wikipedia articles for class. During the term Learn about the structure of a good Wikipedia article, the kinds of articles students should choose to improve, suggestions for what to cover in a Wikipedia lab session, and how to interact with the community of Wikipedia editors. After the term See a sample assessment structure that’s worked for other instructors. 2 Instructor Basics Assignment planning Understanding key policies Since Wikipedia started in 2001, the community of volunteer editors – “Wikipedians” – has developed several key policies designed to ensure Wikipedia is as reliable and useful as possible. Any assignment you integrate into your classroom must follow these policies. Understanding these cornerstone policies ensures that you develop an assignment that meets your learning objectives and improves Wikipedia at the same time. Free content Neutral point of view “The work students contribute to “Everything on Wikipedia must be Wikipedia is free content and becomes written from a neutral point of view. -

Combining Wikidata with Other Linked Databases

Combining Wikidata with other linked databases Andra Waagmeester, Dragan Espenschied Known variants in the CIViC database for genes reported in a WikiPathways pathway on Bladder Cancer Primary Sources: ● Wikipathways (Q7999828) ● NCBI Gene (Q20641742) ● CIViCdb (Q27612411) ● Disease Ontology (Q5282129) Example 1: Wikidata contains public data “All structured data from the main and property namespace is available under the Creative Commons CC0 License; text in the other namespaces is available under the Creative Commons Attribution-ShareAlike License; additional terms may apply. By using this site, you agree to the Terms of Use and Privacy Policy.” Wikidata requirement for Notability An item is acceptable if and only if it fulfills at least one of these two goals, that is if it meets at least one of the criteria below: ● It contains at least one valid sitelink to a page on Wikipedia, Wikivoyage, Wikisource, Wikiquote, Wikinews, Wikibooks, Wikidata, Wikispecies, Wikiversity, or Wikimedia Commons. ● It refers to an instance of a clearly identifiable conceptual or material entity. It can be described using serious and publicly available references. ● It fulfills some structural need, https://www.wikidata.org/wiki/Wikidata:Notability Wikidata property proposals “Before a new property is created, it has to be discussed here. When after some time there are some supporters, but no or very few opponents, the property is created by a property creator or an administrator. You can propose a property here or on one of the subject-specific pages listed -

Free Knowledge for a Free World

Collaborative Peer Production In a Health Context Jimmy Wales President, Wikimedia Foundation Wikipedia Founder What I will talk about •What is Wikipedia? •How the community works •Core principles of the Wikimedia Foundation •What will be free? “The ideal encyclopedia should be radical. It should stop being safe.” --1962, Charles van Doren, later a senior editor at Britannica Wikipedia’s Radical Idea: Imagine a world in which every single person is given free access to the sum of all human knowledge. That’s what we’re doing. What is the Wikimedia Foundation? •Non-profit foundation •Aims to distribute a free encyclopedia to every single person on the planet in their own language •Wikipedia and its sister projects •Funded by public donations •Partnering with select institutions wikimediafoundation.org What is Wikipedia? •Wikipedia is: • a freely licensed encyclopedia written by thousands of volunteers in many languages wikipedia.org What do I mean by free? •Free as in speech, not free as in beer •4 Freedoms – Freedom to copy – Freedom to modify – Freedom to redistribute – Freedom to redistribute modified versions How big is Wikipedia? •English Wikipedia is largest and has over 500 million words •English Wikipedia larger than Britannica and Microsoft Encarta combined •German Wikipedia equal in size to Brockhaus How big is Wikipedia Globally? • 740,000 - English • 292,000 - German • >100,000 - French, Japanese, Italian, Polish, Swedish • >50,000 - Dutch, Portuguese, Spanish • 2.2 million across 200 languages •30 with >10,000. 75 with >1000 Some Wikimedia Projects •Wikipedia •Wiktionary •Wikibooks •Wikiquote •Wikimedia Commons •Wikinews How popular is Wikipedia? • Top 40 website • According to Alexa.com, broader reach than.. -

What Is a Wiki? Tutorial 1 for New Wikieducators

What is a Wiki? Tutorial 1 for new WikiEducators. wikieducator.org book - Generated using the open source mwlib toolkit - see http://code.pediapress.com for more information. Introducing a Wiki. Objectives: In this tutorial we will: • provide an overview of what wikis are, • and show some examples of their different uses. • discuss the advantages and disadvantages of using wikis to develop content • describe the main features of WikiEducator. What is a Wiki? The name "Wiki" was chosen by Ward Cunningham, the creator of the first Wiki. It is a shortened form of "wiki-wiki", the Hawaiian word for quick. A wiki is a web site that is generally editable by anyone with a computer, a web browser, and an internet connection. Wikis use a quick and easy syntax to allow users to apply formatting to text and create links between pages. This simple formatting syntax means that authors no longer need to learn the complexities of HTML to create content on the web. The main strength of a wiki is that it gives people the ability to work collaboratively on the same document. The only software you need is an Internet browser. Consequently, wikis are used for a variety of purposes. If you make a mistake, it's easy to revert back to an earlier version of the document. [Image: Wiki wiki sign outside Honolulu International Airport. (Image courtesy of A. Barataz)] All content sourced from WikiEducator.org and is licensed under CC-BY-SA or CC-BY where specified. Examples of Wikis: The largest and most talked about Wiki on the Internet is Wikipedia[1]. Wikipedia is, for the most part, editable by anyone in the world with a computer and an internet connection and, at the time of this writing, contained over 1,500,000 pages. -

Wikimedia Commons

Wikimedia

Imagine a world in which every single person is given free access to the sum of all human knowledge. That’s what we’re doing. -- Jimmy Wales

Wikimedia is an international non-profit organisation dedicated to the development and distribution of free multilingual content. It hosts various free-content projects, most notably Wikipedia, the award-winning online encyclopædia. Other online projects include Wiktionary, a multilingual dictionary; Wikibooks, a collection of free-content textbooks; Wikiquote, a repository of famous quotes; and Wikisource, a repository for primary-source materials. The Wikimedia Foundation was set up in June 2003 to serve and develop the necessary infrastructure for the constantly growing projects. It owns the Wikimedia servers and covers bandwidth and hosting costs. In Germany, France and Italy, local Wikimedia chapters have been founded to support and promote the projects on a national level. Wikimedia is run mostly by volunteer staff and relies entirely on public donations and grants to meet its goal of providing free knowledge to every person in the world.

Wikis

Invented by Ward Cunningham in 1995, wikis are dynamic websites in which any user can edit a page, quickly and easily, using a web browser. The name is derived from the Hawaiian word “Wikiwiki”, meaning fast. Wikis use a simple formatting language anyone can learn in just a few minutes. For example, to italicise text, surround it with two apostrophes; use three for bolded text, and enclose a word in two brackets ([[]]) to create a link to another page in the wiki. -
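The three markup rules mentioned in this excerpt (two apostrophes for italics, three for bold, double square brackets for a link) can be illustrated with a small Python sketch. This is not how MediaWiki parses text; it is a toy converter, and the function name `wiki_to_html` and the `/wiki/` link prefix are assumptions made for the illustration.

```python
import re


def wiki_to_html(text: str) -> str:
    """Toy conversion of three wiki markup rules to HTML (illustrative only)."""
    # Handle bold (three apostrophes) first, so the italic rule
    # does not consume part of a bold marker.
    text = re.sub(r"'''(.+?)'''", r"<b>\1</b>", text)
    # Italics: text surrounded by two apostrophes.
    text = re.sub(r"''(.+?)''", r"<i>\1</i>", text)
    # Internal link: a word enclosed in double square brackets.
    # The "/wiki/" URL prefix is an assumption for this sketch.
    text = re.sub(r"\[\[(.+?)\]\]", r'<a href="/wiki/\1">\1</a>', text)
    return text


print(wiki_to_html("''fast'' and '''free''', see [[Wikipedia]]"))
```

Real wikitext has many more rules (nested markup, piped links, templates), so a regular-expression approach like this only covers the simplest cases.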

Social Media Is Bullshit

SOCIAL MEDIA IS BULLSHIT

social media is bullshit. Copyright © 2012 by Earth’s Temporary Solution, LLC. For information, address St. Martin’s Press, 175 Fifth Avenue, New York, N.Y. 10011. www.stmartins.com Design by Steven Seighman ISBN 978-1-250-00295-2 (hardcover) ISBN 978-1-250-01750-5 (e-book) First Edition: September 2012 10 9 8 7 6 5 4 3 2 1

To Amanda: I think you said it best, “If only we had known sooner, we would have done nothing different.”

CONTENTS
AN INTRODUCTION: BULLSHIT 101
One: Our Terrible, Horrible, No Good, Very Bad Web site
Two: Astonishing Tales of Mediocrity
Three: “I Wrote This Book for Pepsi”
Four: Social Media Is Bullshit
PART I: SOCIAL MEDIA IS BULLSHIT
Five: There Is Nothing New Under the Sun ... or on the Web
Six: Shovels and Sharecroppers
Seven: Yeah, That’s the Ticket!
Eight: And Now You Know ... the Rest of the Story
Nine: The Asshole-Based Economy
Ten: There’s No Such Thing as an Influencer
Eleven: Analyze This
PART II: MEET THE PEOPLE BEHIND THE BULLSHIT
Twelve: Maybe “Social Media” Doesn’t Work So Well for Corporations, Either?
Thirteen: Kia and Facebook Sitting in a Tree . -

Wikipedia in Court: When and How Citing Wikipedia and Other Consensus Websites Is Appropriate

St. John's Law Review Volume 84 Number 2 Volume 84, Spring 2010, Number 2 Article 2 Wikipedia in Court: When and How Citing Wikipedia and Other Consensus Websites Is Appropriate Jason C. Miller Hannah B. Murray Follow this and additional works at: https://scholarship.law.stjohns.edu/lawreview This Article is brought to you for free and open access by the Journals at St. John's Law Scholarship Repository. It has been accepted for inclusion in St. John's Law Review by an authorized editor of St. John's Law Scholarship Repository. For more information, please contact [email protected]. 84 St. John’s L. Rev. 633 (2010) WIKIPEDIA IN COURT: WHEN AND HOW CITING WIKIPEDIA AND OTHER CONSENSUS WEBSITES IS APPROPRIATE JASON C. MILLER† & HANNAH B. MURRAY†† I. INTRODUCTION In Bourgeois v. Peters, resolution of the controversy required a classic balancing of liberty and security.1 In rejecting a claim that the Department of Homeland Security’s elevated threat level justified more intrusive police procedures, the Eleventh Circuit became one of the first courts to rely on the expertise of Wikipedia.2 Wikipedia reported that the country had been at “yellow alert” for almost three years, an amount of time, the court reasoned, that weakened the government’s claim that the War on Terror necessitated extreme measures.3 Since then, courts throughout the country have looked to Wikipedia for geographic information,4 to establish which days are “business days,”5 to explain the meaning of common phrases,6 to define † Associate at Sherman & Howard L.L.C.; Law Clerk to the Honorable Deborah L. -

Outline of Communication

Outline of communication

The following outline is provided as an overview of and topical guide to communication:

Communication – purposeful activity of exchanging information and meaning across space and time using various technical or natural means, whichever is available or preferred. Communication requires a sender, a message, a medium and a recipient, although the receiver does not have to be present or aware of the sender’s intent to communicate at the time of communication; thus communication can occur across vast distances in time and space.

1 Essence of communication
• Communication theory • Development communication • Information • Information theory • Semiotics

2 Branches of communication

2.1 Types of communication

2.1.1 Types of communication by scope
• Computer-mediated communication • Health communication • Intercultural communication • International communication • Interpersonal communication • Intrapersonal communication • Mass communication • Nonverbal communication

2.1.2 Types of communication by mode
• Conversation • Mail • Mass media • Book • Film • Journalism • News media • Newspaper • Technical writing • Video • Telecommunication (outline) • Morse Code • Radio • Telephone • Television • Internet • Verbal communication • Writing

2.2 Fields of communication
• Communication studies • Cognitive linguistics • Conversation analysis • Crisis communication • Discourse analysis • Environmental communication • Interpersonal communication • Linguistics • Mass communication • Mediated cross-border communication • Organizational -

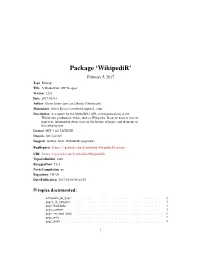

Package 'WikipediR'

Package ‘WikipediR’
February 5, 2017

Type: Package
Title: A MediaWiki API Wrapper
Version: 1.5.0
Date: 2017-02-04
Author: Oliver Keyes [aut, cre], Brock Tilbert [ctb]
Maintainer: Oliver Keyes <[email protected]>
Description: A wrapper for the MediaWiki API, aimed particularly at the Wikimedia 'production' wikis, such as Wikipedia. It can be used to retrieve page text, information about users or the history of pages, and elements of the category tree.
License: MIT + file LICENSE
Imports: httr, jsonlite
Suggests: testthat, knitr, WikidataR, pageviews
BugReports: https://github.com/Ironholds/WikipediR/issues
URL: https://github.com/Ironholds/WikipediR/
VignetteBuilder: knitr
RoxygenNote: 5.0.1
NeedsCompilation: no
Repository: CRAN
Date/Publication: 2017-02-05 08:44:55

R topics documented: categories_in_page, pages_in_category, page_backlinks, page_content, page_external_links, page_info, page_links, query, random_page, recent_changes, revision_content, revision_diff, user_contributions, user_information, WikipediR, Index

categories_in_page: Retrieves categories associated with a page.

Description: Retrieves categories associated with a page (or list of pages) on a MediaWiki instance.

Usage: categories_in_page(language = NULL, project = NULL, domain = NULL, pages, properties = c("sortkey", "timestamp", "hidden"), limit = 50, show_hidden = FALSE, clean_response = FALSE, ...)

Arguments:
language: The language code of the project you wish to query, if appropriate.
project: The project you wish to query ("wikiquote"), if appropriate. Should be provided in conjunction with language.
domain: As an alternative to a language and project combination, you can also provide a domain ("rationalwiki.org") to the URL constructor, allowing for the querying of non-Wikimedia MediaWiki instances.
pages: A vector of page titles, with or without spaces, that you want to retrieve categories for.
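To make the relationship between these arguments and the underlying MediaWiki API more concrete, the following Python sketch builds the kind of request URL such a call might translate into. It is not WikipediR's implementation: the function name `categories_query_url`, the `/w/api.php` path for custom domains, and the mapping of `show_hidden = FALSE` to `clshow=!hidden` are assumptions. The query parameters themselves (`action=query`, `prop=categories`, `clprop`, `cllimit`, `clshow`) are standard MediaWiki Action API parameters.

```python
from urllib.parse import urlencode


def categories_query_url(pages, language=None, project=None, domain=None,
                         properties=("sortkey", "timestamp", "hidden"),
                         limit=50, show_hidden=False):
    """Build a MediaWiki API URL that lists the categories of the given pages.

    Hypothetical helper for illustration; it only constructs the URL and
    performs no network request.
    """
    if domain is None:
        if not (language and project):
            raise ValueError("provide either domain, or language and project")
        # Wikimedia wikis follow the <language>.<project>.org pattern.
        domain = f"{language}.{project}.org"

    params = {
        "action": "query",
        "prop": "categories",
        "titles": "|".join(pages),        # several titles are pipe-separated
        "clprop": "|".join(properties),   # extra properties per category
        "cllimit": limit,
        "format": "json",
    }
    if not show_hidden:
        # Assumed mapping: exclude hidden categories unless requested.
        params["clshow"] = "!hidden"

    # The /w/api.php path is an assumption; other wikis may use another path.
    return f"https://{domain}/w/api.php?{urlencode(params)}"


print(categories_query_url(["Ward Cunningham"], language="en", project="wikipedia"))
```

Passing `domain="rationalwiki.org"` instead of a language and project pair would target a non-Wikimedia MediaWiki instance, mirroring the `domain` argument described above.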