Dr. Ernesto Gomez : CSE 401 Most of the Material Here Is in Chapter 2 of the Text, Specifically Sections 2.1-2.8

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Class-Action Lawsuit

Case 3:20-cv-00863-SI Document 1 Filed 05/29/20 Page 1 of 279 Steve D. Larson, OSB No. 863540 Email: [email protected] Jennifer S. Wagner, OSB No. 024470 Email: [email protected] STOLL STOLL BERNE LOKTING & SHLACHTER P.C. 209 SW Oak Street, Suite 500 Portland, Oregon 97204 Telephone: (503) 227-1600 Attorneys for Plaintiffs [Additional Counsel Listed on Signature Page.] UNITED STATES DISTRICT COURT DISTRICT OF OREGON PORTLAND DIVISION BLUE PEAK HOSTING, LLC, PAMELA Case No. GREEN, TITI RICAFORT, MARGARITE SIMPSON, and MICHAEL NELSON, on behalf of CLASS ACTION ALLEGATION themselves and all others similarly situated, COMPLAINT Plaintiffs, DEMAND FOR JURY TRIAL v. INTEL CORPORATION, a Delaware corporation, Defendant. CLASS ACTION ALLEGATION COMPLAINT Case 3:20-cv-00863-SI Document 1 Filed 05/29/20 Page 2 of 279 Plaintiffs Blue Peak Hosting, LLC, Pamela Green, Titi Ricafort, Margarite Sampson, and Michael Nelson, individually and on behalf of the members of the Class defined below, allege the following against Defendant Intel Corporation (“Intel” or “the Company”), based upon personal knowledge with respect to themselves and on information and belief derived from, among other things, the investigation of counsel and review of public documents as to all other matters. INTRODUCTION 1. Despite Intel’s intentional concealment of specific design choices that it long knew rendered its central processing units (“CPUs” or “processors”) unsecure, it was only in January 2018 that it was first revealed to the public that Intel’s CPUs have significant security vulnerabilities that gave unauthorized program instructions access to protected data. 2. A CPU is the “brain” in every computer and mobile device and processes all of the essential applications, including the handling of confidential information such as passwords and encryption keys. -

Professor Won Woo Ro, School of Electrical and Electronic Engineering Yonsei University the Intel® 4004 Microprocessor, Introdu

Professor Won Woo Ro, School of Electrical and Electronic Engineering Yonsei University The 1st Microprocessor The Intel® 4004 microprocessor, introduced in November 1971 An electronics revolution that changed our world. There were no customer‐ programmable microprocessors on the market before the 4004. It propelled software into the limelight as a key player in the world of digital electronics design. 4004 Microprocessor Display at New Intel Museum A Japanese calculator maker (Busicom) asked to design: A set of 12 custom logic chips for a line of programmable calculators. Marcian E. "Ted" Hoff Recognized the integrated circuit technology (of the day) had advanced enough to build a single chip, general purpose computer. Federico Faggin to turn Hoff's vision into a silicon reality. (In less than one year, Faggin and his team delivered the 4004, which was introduced in November, 1971.) The world's first microprocessor application was this Busicom calculator. (sold about 100,000 calculators.) Measuring 1/8 inch wide by 1/6 inch long, consisting of 2,300 transistors, Intel’s 4004 microprocessor had as much computing power as the first electronic computer, ENIAC. 2 inch 4004 and 12 inch Core™2 Duo wafer ENIAC, built in 1946, filled 3000‐cubic‐ feet of space and contained 18,000 vacuum tubes. The 4004 microprocessor could execute 60,000 operations per second Running frequency: 108 KHz Founders wanted to name their new company Moore Noyce. However the name sounds very much similar to “more noise”. "Only the paranoid survive". Moore received a B.S. degree in Chemistry from the University of California, Berkeley in 1950 and a Ph.D. -

Iapx 286 PROGRAMMER's REFERENCE MANUAL

iAPX 286 PROGRAMMER'S REFERENCE MANUAL 1983 -. Additional copies of this manual or other Intel literature may be obtained from: Literature Department Intel Corporation 3065 Bowers Avenue Santa Clara, CA 95051 The information in this document is subject to change without notice. Intel Corporation makes no warranty of any kind with regard to this material, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Intel Corporation assumes no responsibility for any errors that may appear in this document. Intel Corporation makes no commitment to update nor to keep current the information contained in this document. Intel Corporation assumes no responsibility for the use of any circuitry other than circuitry embodied in an Intel product. No other circuit patent licenses are implied. Intel software products are copyrighted by and shall remain the property of Intel Corporation. Use, dupli cation or disclosure is subject to restrictions stated in Intel's software license, or as defined in ASPR 7-104.9(a)(9). No part of this document may be copied or reproduced in any form or by any means without the prior written consent of Intel Corporation. The following are trademarks of Intel Corporation and its affiliates and may be used to identify Intel products: AEDIT iDiS Intellink MICROMAINFRAME BITBUS iLBX iOSP MULTIBUS BXP im iPDS MULTICHANNEL COMMputer iMMX iRMX MULTIMODULE CREDIT Insite iSBC Plug-A-Bubble i intel iSBX PROMPT 12ICE intelBOS iSDM Ripplemode iATC Intelevision iSXM RMX/80 ICE inteligent Identifier Library Manager RUPI iCS inteligent Programming MCS System 2000 iDBP Intellec Megachassis UPI Table of Contents CHAPTER 1 Page INTRODUCTION TO iAPX 286 General Attributes ......... -

Advanced Architecture Intel Microprocessor History

Advanced Architecture Intel microprocessor history Computer Organization and Assembly Languages Yung-Yu Chuang with slides by S. Dandamudi, Peng-Sheng Chen, Kip Irvine, Robert Sedgwick and Kevin Wayne Early Intel microprocessors The IBM-AT • Intel 8080 (1972) • Intel 80286 (1982) – 64K addressable RAM – 16 MB addressable RAM – 8-bit registers – Protected memory – CP/M operating system – several times faster than 8086 – 5,6,8,10 MHz – introduced IDE bus architecture – 29K transistors – 80287 floating point unit • Intel 8086/8088 (1978) my first computer (1986) – Up to 20MHz – IBM-PC used 8088 – 134K transistors – 1 MB addressable RAM –16-bit registers – 16-bit data bus (8-bit for 8088) – separate floating-point unit (8087) – used in low-cost microcontrollers now 3 4 Intel IA-32 Family Intel P6 Family • Intel386 (1985) • Pentium Pro (1995) – 4 GB addressable RAM – advanced optimization techniques in microcode –32-bit registers – More pipeline stages – On-board L2 cache – paging (virtual memory) • Pentium II (1997) – Up to 33MHz – MMX (multimedia) instruction set • Intel486 (1989) – Up to 450MHz – instruction pipelining • Pentium III (1999) – Integrated FPU – SIMD (streaming extensions) instructions (SSE) – 8K cache – Up to 1+GHz • Pentium (1993) • Pentium 4 (2000) – Superscalar (two parallel pipelines) – NetBurst micro-architecture, tuned for multimedia – 3.8+GHz • Pentium D (2005, Dual core) 5 6 IA32 Processors ARM history • Totally Dominate Computer Market • 1983 developed by Acorn computers • Evolutionary Design – To replace 6502 in -

Protected Mode - Wikipedia

2/12/2019 Protected mode - Wikipedia Protected mode In computing, protected mode, also called protected virtual address mode,[1] is an operational mode of x86- compatible central processing units (CPUs). It allows system software to use features such as virtual memory, paging and safe multi-tasking designed to increase an operating system's control over application software.[2][3] When a processor that supports x86 protected mode is powered on, it begins executing instructions in real mode, in order to maintain backward compatibility with earlier x86 processors.[4] Protected mode may only be entered after the system software sets up one descriptor table and enables the Protection Enable (PE) bit in the control register 0 (CR0).[5] Protected mode was first added to the x86 architecture in 1982,[6] with the release of Intel's 80286 (286) processor, and later extended with the release of the 80386 (386) in 1985.[7] Due to the enhancements added by protected mode, it has become widely adopted and has become the foundation for all subsequent enhancements to the x86 architecture,[8] although many of those enhancements, such as added instructions and new registers, also brought benefits to the real mode. Contents History The 286 The 386 386 additions to protected mode Entering and exiting protected mode Features Privilege levels Real mode application compatibility Virtual 8086 mode Segment addressing Protected mode 286 386 Structure of segment descriptor entry Paging Multitasking Operating systems See also References External links History https://en.wikipedia.org/wiki/Protected_mode -

Computer Architectures an Overview

Computer Architectures An Overview PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC Contents Articles Microarchitecture 1 x86 7 PowerPC 23 IBM POWER 33 MIPS architecture 39 SPARC 57 ARM architecture 65 DEC Alpha 80 AlphaStation 92 AlphaServer 95 Very long instruction word 103 Instruction-level parallelism 107 Explicitly parallel instruction computing 108 References Article Sources and Contributors 111 Image Sources, Licenses and Contributors 113 Article Licenses License 114 Microarchitecture 1 Microarchitecture In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design. Relation to instruction set architecture The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, address and data formats among other things. The Intel Core microarchitecture microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic units (ALU)s and even larger elements. -

Evolution of the Pentium

Chapter 7B – The Evolution of the Intel Pentium This chapter attempts to trace the evolution of the modern Intel Pentium from the earliest CPU chip, the Intel 4004. The real evolution begins with the Intel 8080, which is an 8–bit design having features that permeate the entire line. Our discussion focuses on three organizations. IA–16 The 16–bit architecture found in the Intel 8086 and Intel 80286. IA–32 The 32–bit architecture found in the Intel 80386, Intel 80486, and most variants of the Pentium design. IA–64 The 64–bit architecture found in some high–end later model Pentiums. The IA–32 has evolved from an early 4–bit design (the Intel 4004) that was first announced in November 1971. At that time, memory came in chips no larger than 64 kilobits (8 KB) and cost about $1,600 per megabyte. Before moving on with the timeline, it is worth recalling the early history of Intel. Here, we quote extensively from Tanenbaum [R002]. “In 1968, Robert Noyce, inventor of the silicon integrated circuit, Gordon Moore, of Moore’s law fame, and Arthur Rock, a San Francisco venture capitalist, formed the Intel Corporation to make memory chips. In the first year of operation, Intel sold only $3,000 worth of chips, but business has picked up since then.” “In September 1969, a Japanese company, Busicom, approached Intel with a request for it to manufacture twelve custom chips for a proposed electronic calculator. The Intel engineer assigned to this project, Ted Hoff, looked at the plan and realized that he could put a 4–bit general–purpose CPU on a single chip that would do the same thing and be simpler and cheaper as well. -

IA-32 Architecture

Outline IA-32 Architecture Intel Microprocessors IA-32 Registers Computer Organization Instruction Execution Cycle & IA-32 Memory Management Assembly Language Programming Dr Adnan Gutub aagutub ‘at’ uqu.edu.sa [Adapted from slides of Dr. Kip Irvine: Assembly Language for Intel-Based Computers] Most Slides contents have been arranged by Dr Muhamed Mudawar & Dr Aiman El-Maleh from Computer Engineering Dept. at KFUPM 45/٢ IA-32 Architecture Computer Organization and Assembly Language slide Intel Microprocessors Intel 80286 and 80386 Processors Intel introduced the 8086 microprocessor in 1979 80286 was introduced in 1982 8086, 8087, 8088, and 80186 processors 24-bit address bus ⇒ 224 bytes = 16 MB address space 16-bit processors with 16-bit registers Introduced protected mode 16-bit data bus and 20-bit address bus Segmentation in protected mode is different from the real mode Physical address space = 220 bytes = 1 MB 80386 was introduced in 1985 8087 Floating-Point co-processor First 32-bit processor with 32-bit general-purpose registers Uses segmentation and real-address mode to address memory First processor to define the IA-32 architecture Each segment can address 216 bytes = 64 KB 32-bit data bus and 32-bit address bus 8088 is a less expensive version of 8086 232 bytes ⇒ 4 GB address space Uses an 8-bit data bus Introduced paging , virtual memory , and the flat memory model 80186 is a faster version of 8086 Segmentation can be turned off 45/٤ 45 IA-32 Architecture Computer Organization and Assembly Language slide/٣ -

Hyperace-286

AUSTRALIAN DESIGNED, BUILT AND SUPPORTED. HYPERACE·286, 286 PLUS and 286 SUPERPLUS Accelerator boards for the IBM PC/XT and compatibles. 286: 286 PLUS: • Boosts processing speeds up • Boosts processing speeds up to 3-4 times faster than a to 5 times faster than a standard PC, equalling "AT" standard PC - significantly performance. faster than ''AT'' performance. 286 SUPERPLUS: • Boosts processing speeds up to 6-7 times faster than a standard Pc' for exceeding ''AT'' performance. All three boards use the Intel 80286 processor chip and support the 80287 moths co-processor chip. AUSTRALIAN DESIGNED, BUILT AND SUPPORTED. How to improve your PC performance HVPERACE-286, HVPERACE ~ 286 HVPERACE-286, 286 PLUS and PLUS and HVPERACE-286 286 SUPERPLUS have been SUPERPLUS accelerator boards designed with heavy emphasis are versatile and powerful on quality and reliability - additions to your IBM Personal features of all HVPERTEC products. Computer or compatible. Designed and built in Australia, these boards are for the user who FEATURES: requires maximum performance from his machine. 286, 286 PLUS, 286 SUPERPlUS: HVPERACE-286 will increase the • Australian designed and performance of your PC by 3-4 built by HVPERTEC times; HVPERACE-286 PLUS, up to • Full local support from the 5 times faster; and HVPERACE-286 designers. SUPERPLUS, a staggering 6-7 times • Short accelerator boards for the faster. IBM PC/XT and compatibles. Using an Intel 80286 • Each board runs the Intel processor chip, the HVPERACE-286 80286 processor chip. runs at 6MHz, the 286 PLUS at • Compatible with any Intel 10MHz, and the 286 SUPERPLUS at Expanded Memory 12.5MHz, offering a choice of Specification Board. -

An Introduction to Software Development

Table of Contents NOTES: This Spring 1988 reprint identifies price changes with an "*" where applicable. Changes in product descriptions are noted by (UPDATED) here and on the page corner. The notation "(SEE ADDENDUM)" refers to additional products offered within the particular architecture. This reference occurs both on this page and the following Product/Environment Reference page. Introduction Product/Environment Reference 3 An Introduction To Software Development 4 System Requirements 8 80960 Microprocessor Tools (SEE ADDENDUM) 8086, 80286, 80386 Microprocessor Tools Assemblers and Relocation/Linkage Packages 9 PLIM Compilers 13 C Compilers (UPDATED) 15 FORTRAN Compiler 17 Pascal Compilers 18 Software Debugger 19 Performance Analysis Tools 20 Emulators (SEE ADDENDUM) 22 Above Board Kits (SEE ADDENDUM) 24 80376 Microprocessor Tools (SEE ADDENDUM) 8051 Microcontroller Tools Assembler and Relocation/Linkage Package 25 PLIM Compiler and Relocation/Linkage Package 26 Micro/C-51 Compiler (Micro Computer Control Corporation) 27 Emulators 28 8096 Microcontroller Tools (SEE ADDENDUM) Assembler and Relocation/Linkage Package 30 PLIM Compiler and Relocation/Linkage Package 31 C Compiler and Relocation/Linkage Package 32 Productivity Tools Text Editor 33 Program Management Tools 34 Literature 36 Support Services Software (UPDATED) 37 Hardware 38 Training Workshops (UPDATED) 39 How to Order 40 Response Subscription Card This catalog provides a convenient way to order many of Intel's development tools. It lists programming tools for the PC, VAX, and MicroVAX hosts, as well as debugging and performance analysis tools. For information on other Intel products ask us for the telephone number of your nearest Intel sales office or distributor. 800-87-INTEL FOR INFORMATION 800-874-6835 1 ©INTEL,1988 Intel assumes no responsibility forthe use of any circuitry other than circuitry embodied in an Intel product. -

Embedded Intel486™ Processor Family Developer's Manual

Embedded Intel486™ Processor Family Developer’s Manual Release Date: October 1997 Order Number: 273021-001 The Intel486™ processors may contain design defects known as errata which may cause the products to deviate from published specifications. Currently characterized errata are avail- able on request. Information in this document is provided in connection with Intel products. No license, express or implied, by estoppel or oth- erwise, to any intellectual property rights is granted by this document. Except as provided in Intel’s Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel retains the right to make changes to specifications and product descriptions at any time, without notice. Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order. *Third-party brands and names are the property of their respective owners. Copies of documents which have an ordering number and are referenced in this document, or other Intel literature, may be obtained from: Intel Corporation P.O. Box 5937 Denver, CO 80217-9808 or call 1-800-548-4725 or visit Intel’s website at http:\\www.intel.com Copyright © INTEL CORPORATION, October 1997 CONTENTS CHAPTER 1 GUIDE TO THIS MANUAL 1.1 MANUAL CONTENTS ................................................................................................. -

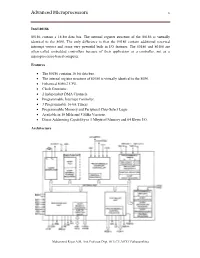

Advanced Microprocessors 1

Advanced Microprocessors 1 Intel 80186 80186 contain a 16-bit data bus. The internal register structure of the 80186 is virtually identical to the 8086. The only difference is that the 80186 contain additional reserved interrupt vectors and some very powerful built in I/O features. The 80186 and 80188 are often called embedded controllers because of their application as a controller, not as a microprocessor-based computer. Features The 80186 contains 16 bit data bus. The internal register structure of 80186 is virtually identical to the 8086. Enhanced 8086-2 CPU. Clock Generator. 2 Independent DMA Channels. Programmable Interrupt Controller. 3 Programmable 16-bit Timers. Programmable Memory and Peripheral Chip-Select Logic. Available in 10 MHz and 8 MHz Versions. Direct Addressing Capability to 1 Mbyte of Memory and 64 Kbyte I/O. Architecture Muhammed Riyas A.M, Asst.Professor,Dept. Of E.C.E,MCET Pathanamthitta Advanced Microprocessors 2 In addition to the BIU and EU, the 80186 family contains a clock generator, a programmable interrupt controller, programmable timers, a programmable DMA controller and a programmable chip selection unit. These enhancements greatly increase the utility of the 80186 and reduce the number of peripheral components required to implement a system. Clock Generator. The internal clock generator replaces the external 8284A clock generator used with the 8086/8088 microprocessors. This reduces the component count in a system. The internal clock generator has three pin connections: X1, X2, and CLKOUT. The X1 (CLKIN) and X2 (OSCOUT) pins are connected to a crystal that resonates at twice the operating frequency of the microprocessor.