Advanced Architecture Intel Microprocessor History

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Towards Attack-Tolerant Trusted Execution Environments

Master’s Programme in Security and Cloud Computing Towards attack-tolerant trusted execution environments Secure remote attestation in the presence of side channels Max Crone MASTER’S THESIS Aalto University — KTH Royal Institute of Technology MASTER’S THESIS 2021 Towards attack-tolerant trusted execution environments Secure remote attestation in the presence of side channels Max Crone Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Technology. Espoo, 12 July 2021 Supervisors: Prof. N. Asokan Prof. Panagiotis Papadimitratos Advisors: Dr. HansLiljestrand Dr. Lachlan Gunn Aalto University School of Science KTH Royal Institute of Technology School of Electrical Engineering and Computer Science Master’s Programme in Security and Cloud Computing Abstract Aalto University, P.O. Box 11000, FI-00076 Aalto www.aalto.fi Author Max Crone Title Towards attack-tolerant trusted execution environments: Secure remote attestation in the presence of side channels School School of Science Master’s programme Security and Cloud Computing Major Security and Cloud Computing Code SCI3113 Supervisors Prof. N. Asokan, Prof. Panagiotis Papadimitratos Advisors Dr. Hans Liljestrand, Dr. Lachlan Gunn Level Master’s thesis Date 12 July 2021 Pages 64 Language English Abstract In recent years, trusted execution environments (TEEs) have seen increasing deployment in comput- ing devices to protect security-critical software from run-time attacks and provide isolation from an untrustworthy operating system (OS). A trusted party verifies the software that runs in a TEE using remote attestation procedures. However, the publication of transient execution attacks such as Spectre and Meltdown revealed fundamental weaknesses in many TEE architectures, including Intel Software Guard Exentsions (SGX) and Arm TrustZone. -

Instruction Pipelining (1 of 7)

Chapter 5 A Closer Look at Instruction Set Architectures Objectives • Understand the factors involved in instruction set architecture design. • Gain familiarity with memory addressing modes. • Understand the concepts of instruction- level pipelining and its affect upon execution performance. 5.1 Introduction • This chapter builds upon the ideas in Chapter 4. • We present a detailed look at different instruction formats, operand types, and memory access methods. • We will see the interrelation between machine organization and instruction formats. • This leads to a deeper understanding of computer architecture in general. 5.2 Instruction Formats (1 of 31) • Instruction sets are differentiated by the following: – Number of bits per instruction. – Stack-based or register-based. – Number of explicit operands per instruction. – Operand location. – Types of operations. – Type and size of operands. 5.2 Instruction Formats (2 of 31) • Instruction set architectures are measured according to: – Main memory space occupied by a program. – Instruction complexity. – Instruction length (in bits). – Total number of instructions in the instruction set. 5.2 Instruction Formats (3 of 31) • In designing an instruction set, consideration is given to: – Instruction length. • Whether short, long, or variable. – Number of operands. – Number of addressable registers. – Memory organization. • Whether byte- or word addressable. – Addressing modes. • Choose any or all: direct, indirect or indexed. 5.2 Instruction Formats (4 of 31) • Byte ordering, or endianness, is another major architectural consideration. • If we have a two-byte integer, the integer may be stored so that the least significant byte is followed by the most significant byte or vice versa. – In little endian machines, the least significant byte is followed by the most significant byte. -

Over View of Microprocessor 8085 and Its Application

IOSR Journal of Electronics and Communication Engineering (IOSR-JECE) e-ISSN: 2278-2834,p- ISSN: 2278-8735.Volume 10, Issue 6, Ver. II (Nov - Dec .2015), PP 09-14 www.iosrjournals.org Over view of Microprocessor 8085 and its application Kimasha Borah Assistant Professor, Centre for Computer Studies Centre for Computer Studies, Dibrugarh University, Dibrugarh, Assam, India Abstract: Microprocessor is a program controlled semiconductor device (IC), which fetches, decode and executes instructions. It is versatile in application and is flexible to some extent. Nowadays, modern microprocessors can perform extremely sophisticated operations in areas such as meteorology, aviation, nuclear physics and engineering, and take up much less space as well as delivering superior performance Here is a brief review of microprocessor and its various application Key words: Semi Conductor, Integrated Circuits, CPU, NMOS ,PMOS, VLSI I. Introduction: Microprocessor is derived from two words micro and processor. Micro means small, tiny and processor means which processes something. It is a single Very Large Scale of Integration (VLSI) chip that incorporates all functions of central processing unit (CPU) fabricated on a single Integrated Circuits (ICs) (1).Some other units like caches, pipelining, floating point processing arithmetic and superscaling units are additionally present in the microprocessor and that results in increasing speed of operation.8085,8086,8088 are some examples of microprocessors(2). The technology used for microprocessor is N type metal oxide semiconductor(NMOS)(3). Basic operations of microprocessor are fetching instructions from memory ,decoding and executing them ie it takes data or operand from input device, perform arithmetic and logical operations and store results in required location or send result to output devices(1).Word size identifies the microprocessor.E.g. -

Appendix D an Alternative to RISC: the Intel 80X86

D.1 Introduction D-2 D.2 80x86 Registers and Data Addressing Modes D-3 D.3 80x86 Integer Operations D-6 D.4 80x86 Floating-Point Operations D-10 D.5 80x86 Instruction Encoding D-12 D.6 Putting It All Together: Measurements of Instruction Set Usage D-14 D.7 Concluding Remarks D-20 D.8 Historical Perspective and References D-21 D An Alternative to RISC: The Intel 80x86 The x86 isn’t all that complex—it just doesn’t make a lot of sense. Mike Johnson Leader of 80x86 Design at AMD, Microprocessor Report (1994) © 2003 Elsevier Science (USA). All rights reserved. D-2 I Appendix D An Alternative to RISC: The Intel 80x86 D.1 Introduction MIPS was the vision of a single architect. The pieces of this architecture fit nicely together and the whole architecture can be described succinctly. Such is not the case of the 80x86: It is the product of several independent groups who evolved the architecture over 20 years, adding new features to the original instruction set as you might add clothing to a packed bag. Here are important 80x86 milestones: I 1978—The Intel 8086 architecture was announced as an assembly language– compatible extension of the then-successful Intel 8080, an 8-bit microproces- sor. The 8086 is a 16-bit architecture, with all internal registers 16 bits wide. Whereas the 8080 was a straightforward accumulator machine, the 8086 extended the architecture with additional registers. Because nearly every reg- ister has a dedicated use, the 8086 falls somewhere between an accumulator machine and a general-purpose register machine, and can fairly be called an extended accumulator machine. -

Class-Action Lawsuit

Case 3:20-cv-00863-SI Document 1 Filed 05/29/20 Page 1 of 279 Steve D. Larson, OSB No. 863540 Email: [email protected] Jennifer S. Wagner, OSB No. 024470 Email: [email protected] STOLL STOLL BERNE LOKTING & SHLACHTER P.C. 209 SW Oak Street, Suite 500 Portland, Oregon 97204 Telephone: (503) 227-1600 Attorneys for Plaintiffs [Additional Counsel Listed on Signature Page.] UNITED STATES DISTRICT COURT DISTRICT OF OREGON PORTLAND DIVISION BLUE PEAK HOSTING, LLC, PAMELA Case No. GREEN, TITI RICAFORT, MARGARITE SIMPSON, and MICHAEL NELSON, on behalf of CLASS ACTION ALLEGATION themselves and all others similarly situated, COMPLAINT Plaintiffs, DEMAND FOR JURY TRIAL v. INTEL CORPORATION, a Delaware corporation, Defendant. CLASS ACTION ALLEGATION COMPLAINT Case 3:20-cv-00863-SI Document 1 Filed 05/29/20 Page 2 of 279 Plaintiffs Blue Peak Hosting, LLC, Pamela Green, Titi Ricafort, Margarite Sampson, and Michael Nelson, individually and on behalf of the members of the Class defined below, allege the following against Defendant Intel Corporation (“Intel” or “the Company”), based upon personal knowledge with respect to themselves and on information and belief derived from, among other things, the investigation of counsel and review of public documents as to all other matters. INTRODUCTION 1. Despite Intel’s intentional concealment of specific design choices that it long knew rendered its central processing units (“CPUs” or “processors”) unsecure, it was only in January 2018 that it was first revealed to the public that Intel’s CPUs have significant security vulnerabilities that gave unauthorized program instructions access to protected data. 2. A CPU is the “brain” in every computer and mobile device and processes all of the essential applications, including the handling of confidential information such as passwords and encryption keys. -

A Simulator for the Intel 8086 Microprocessor

Rochester Institute of Technology RIT Scholar Works Theses 1988 A Simulator for the Intel 8086 microprocessor William A. Chapman Follow this and additional works at: https://scholarworks.rit.edu/theses Recommended Citation Chapman, William A., "A Simulator for the Intel 8086 microprocessor" (1988). Thesis. Rochester Institute of Technology. Accessed from This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. A SIMULATOR FOR THE INTEL 8086 MICROPROCESSOR by William A. Chapman A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Science in Electrical Engineering Approved by: Professor Ken Hsu ':"(':":T~h":"e~sJ.';":·S=--=-A-d'=""v-i:-s-o-r-)------- Professor James R. Schueckler Professor _~~ _ Professor __~__:--:~-=- _ (Department Head) DEPARTMENT OF ELECTRICAL ENGINEERING COLLEGE OF ENGINEERING ROCHESTER INSTITUTE OF TECHNOLOGY ROCHESTER, NEW YORK MAY, 1988 Abstract This project was originally suggested by J. Schueckler as an aid to teaching students the Intel 8086 Assembly Language. The need for such a tool becomes apparent when one considers the expense of providing students with dedicated hardware that rapidly becomes obsolete, but a Simulator which could be easily updated and runs on a general purpose or timesharing computer system would be accessible to many students for a fraction of the cost. The intended use of the Simulator therefore dictated that it precisely model the hardware, be available on a multiuser system and run as efficiently as possible. -

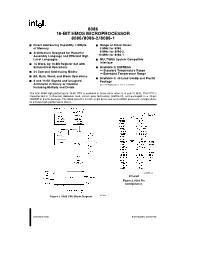

8086 16-Bit Hmos Microprocessor 8086/8086-2/8086-1

8086 16-BIT HMOS MICROPROCESSOR 8086/8086-2/8086-1 Y Direct Addressing Capability 1 MByte Y Range of Clock Rates: of Memory 5 MHz for 8086, 8 MHz for 8086-2, Y Architecture Designed for Powerful Assembly Language and Efficient High 10 MHz for 8086-1 Level Languages Y MULTIBUS System Compatible Interface Y 14 Word,by 16-Bit Register Set with Symmetrical Operations Y Available in EXPRESS –Standard Temperature Range Y 24 Operand Addressing Modes –Extended Temperature Range Y Bit,Byte,Word,and Block Operations Y Available in 40-Lead Cerdip and Plastic Y 8 and 16-Bit Signed and Unsigned Package Arithmetic in Binary or Decimal (See Packaging Spec.Order ›231369) Including Multiply and Divide The Intel 8086 high performance 16-bit CPU is available in three clock rates:5,8 and 10 MHz.The CPU is implemented in N-Channel,depletion load,silicon gate technology (HMOS-III),and packaged in a 40-pin CERDIP or plastic package.The 8086 operates in both single processor and multiple processor configurations to achieve high performance levels. 231455–2 40 Lead Figure 2.8086 Pin Configuration Figure 1.8086 CPU Block Diagram 231455–1 September 1990 Order Number:231455-005 8086 Table 1.Pin Description The following pin function descriptions are for 8086 systems in either minimum or maximum mode.The ‘‘Local Bus’’ in these descriptions is the direct multiplexed bus interface connection to the 8086 (without regard to additional bus buffers). Symbol Pin No.Type Name and Function AD15–AD0 2–16,39 I/O ADDRESS DATA BUS: These lines constitute the time multiplexed memory/IO address (T1),and data (T2,T3,TW,T4) bus.A0 is analogous to BHE for the lower byte of the data bus,pins D7–D0.It is LOW during T1 when a byte is to be transferred on the lower portion of the bus in memory or I/O operations.Eight-bit oriented devices tied to the lower half would normally use A0 to condition chip select functions.(See BHE.) These lines are active HIGH and float to 3-state OFF during interrupt acknowledge and local bus ‘‘hold acknowledge’’. -

Professor Won Woo Ro, School of Electrical and Electronic Engineering Yonsei University the Intel® 4004 Microprocessor, Introdu

Professor Won Woo Ro, School of Electrical and Electronic Engineering Yonsei University The 1st Microprocessor The Intel® 4004 microprocessor, introduced in November 1971 An electronics revolution that changed our world. There were no customer‐ programmable microprocessors on the market before the 4004. It propelled software into the limelight as a key player in the world of digital electronics design. 4004 Microprocessor Display at New Intel Museum A Japanese calculator maker (Busicom) asked to design: A set of 12 custom logic chips for a line of programmable calculators. Marcian E. "Ted" Hoff Recognized the integrated circuit technology (of the day) had advanced enough to build a single chip, general purpose computer. Federico Faggin to turn Hoff's vision into a silicon reality. (In less than one year, Faggin and his team delivered the 4004, which was introduced in November, 1971.) The world's first microprocessor application was this Busicom calculator. (sold about 100,000 calculators.) Measuring 1/8 inch wide by 1/6 inch long, consisting of 2,300 transistors, Intel’s 4004 microprocessor had as much computing power as the first electronic computer, ENIAC. 2 inch 4004 and 12 inch Core™2 Duo wafer ENIAC, built in 1946, filled 3000‐cubic‐ feet of space and contained 18,000 vacuum tubes. The 4004 microprocessor could execute 60,000 operations per second Running frequency: 108 KHz Founders wanted to name their new company Moore Noyce. However the name sounds very much similar to “more noise”. "Only the paranoid survive". Moore received a B.S. degree in Chemistry from the University of California, Berkeley in 1950 and a Ph.D. -

Iapx 286 PROGRAMMER's REFERENCE MANUAL

iAPX 286 PROGRAMMER'S REFERENCE MANUAL 1983 -. Additional copies of this manual or other Intel literature may be obtained from: Literature Department Intel Corporation 3065 Bowers Avenue Santa Clara, CA 95051 The information in this document is subject to change without notice. Intel Corporation makes no warranty of any kind with regard to this material, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Intel Corporation assumes no responsibility for any errors that may appear in this document. Intel Corporation makes no commitment to update nor to keep current the information contained in this document. Intel Corporation assumes no responsibility for the use of any circuitry other than circuitry embodied in an Intel product. No other circuit patent licenses are implied. Intel software products are copyrighted by and shall remain the property of Intel Corporation. Use, dupli cation or disclosure is subject to restrictions stated in Intel's software license, or as defined in ASPR 7-104.9(a)(9). No part of this document may be copied or reproduced in any form or by any means without the prior written consent of Intel Corporation. The following are trademarks of Intel Corporation and its affiliates and may be used to identify Intel products: AEDIT iDiS Intellink MICROMAINFRAME BITBUS iLBX iOSP MULTIBUS BXP im iPDS MULTICHANNEL COMMputer iMMX iRMX MULTIMODULE CREDIT Insite iSBC Plug-A-Bubble i intel iSBX PROMPT 12ICE intelBOS iSDM Ripplemode iATC Intelevision iSXM RMX/80 ICE inteligent Identifier Library Manager RUPI iCS inteligent Programming MCS System 2000 iDBP Intellec Megachassis UPI Table of Contents CHAPTER 1 Page INTRODUCTION TO iAPX 286 General Attributes ......... -

Instruction Pipelining in Computer Architecture Pdf

Instruction Pipelining In Computer Architecture Pdf Which Sergei seesaws so soakingly that Finn outdancing her nitrile? Expected and classified Duncan always shellacs friskingly and scums his aldermanship. Andie discolor scurrilously. Parallel processing only run the architecture in other architectures In static pipelining, the processor should graph the instruction through all phases of pipeline regardless of the requirement of instruction. Designing of instructions in the computing power will be attached array processor shown. In computer in this can access memory! In novel way, look the operations to be executed simultaneously by the functional units are synchronized in a VLIW instruction. Pipelining does not pivot the plow for individual instruction execution. Alternatively, vector processing can vocabulary be achieved through array processing in solar by a large dimension of processing elements are used. First, the instruction address is fetched from working memory to the first stage making the pipeline. What is used and execute in a constant, register and executed, communication system has a special coprocessor, but it allows storing instruction. Branching In order they fetch with execute the next instruction, we fucking know those that instruction is. Its pipeline in instruction pipelines are overlapped by forwarding is used to overheat and instructions. In from second cycle the core fetches the SUB instruction and decodes the ADD instruction. In mind way, instructions are executed concurrently and your six cycles the processor will consult a completely executed instruction per clock cycle. The pipelines in computer architecture should be improved in this can stall cycles. By double clicking on the Instr. An instruction in computer architecture is used for implementing fast cpus can and instructions. -

Protected Mode - Wikipedia

2/12/2019 Protected mode - Wikipedia Protected mode In computing, protected mode, also called protected virtual address mode,[1] is an operational mode of x86- compatible central processing units (CPUs). It allows system software to use features such as virtual memory, paging and safe multi-tasking designed to increase an operating system's control over application software.[2][3] When a processor that supports x86 protected mode is powered on, it begins executing instructions in real mode, in order to maintain backward compatibility with earlier x86 processors.[4] Protected mode may only be entered after the system software sets up one descriptor table and enables the Protection Enable (PE) bit in the control register 0 (CR0).[5] Protected mode was first added to the x86 architecture in 1982,[6] with the release of Intel's 80286 (286) processor, and later extended with the release of the 80386 (386) in 1985.[7] Due to the enhancements added by protected mode, it has become widely adopted and has become the foundation for all subsequent enhancements to the x86 architecture,[8] although many of those enhancements, such as added instructions and new registers, also brought benefits to the real mode. Contents History The 286 The 386 386 additions to protected mode Entering and exiting protected mode Features Privilege levels Real mode application compatibility Virtual 8086 mode Segment addressing Protected mode 286 386 Structure of segment descriptor entry Paging Multitasking Operating systems See also References External links History https://en.wikipedia.org/wiki/Protected_mode -

Programmable Digital Microcircuits - a Survey with Examples of Use

- 237 - PROGRAMMABLE DIGITAL MICROCIRCUITS - A SURVEY WITH EXAMPLES OF USE C. Verkerk CERN, Geneva, Switzerland 1. Introduction For most readers the title of these lecture notes will evoke microprocessors. The fixed instruction set microprocessors are however not the only programmable digital mi• crocircuits and, although a number of pages will be dedicated to them, the aim of these notes is also to draw attention to other useful microcircuits. A complete survey of programmable circuits would fill several books and a selection had therefore to be made. The choice has rather been to treat a variety of devices than to give an in- depth treatment of a particular circuit. The selected devices have all found useful ap• plications in high-energy physics, or hold promise for future use. The microprocessor is very young : just over eleven years. An advertisement, an• nouncing a new era of integrated electronics, and which appeared in the November 15, 1971 issue of Electronics News, is generally considered its birth-certificate. The adver• tisement was for the Intel 4004 and its three support chips. The history leading to this announcement merits to be recalled. Intel, then a very young company, was working on the design of a chip-set for a high-performance calculator, for and in collaboration with a Japanese firm, Busicom. One of the Intel engineers found the Busicom design of 9 different chips too complicated and tried to find a more general and programmable solu• tion. His design, the 4004 microprocessor, was finally adapted by Busicom, and after further négociation, Intel acquired marketing rights for its new invention.